This article is reproduced from Lei Feng.com. If you need to reprint, please go to the official website of Lei Feng.com to apply for authorization.

Cerebras, a company famous for creating the world's largest accelerator chip CS-2 Wafer Scale Engine, announced yesterday that they have taken an important step in using "giant cores" for artificial intelligence training. The company has trained the world's largest NLP (natural language processing) AI model on a single chip.

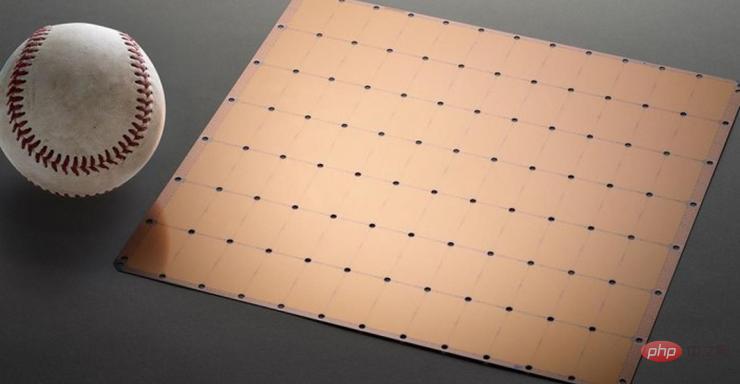

The model has 2 billion parameters and is trained on the CS-2 chip. The world's largest accelerator chip uses a 7nm process and is etched from a square wafer. It is hundreds of times larger than mainstream chips and has a power of 15KW. It integrates 2.6 trillion 7nm transistors, packages 850,000 cores and 40GB of memory.

Figure 1 CS-2 Wafer Scale Engine chip

The development of NLP models is an important area in artificial intelligence. Using NLP models, artificial intelligence can "understand" the meaning of text and take corresponding actions. OpenAI's DALL.E model is a typical NLP model. This model can convert text information input by users into image output.

For example, when the user enters "avocado-shaped armchair", AI will automatically generate several images corresponding to this sentence.

Picture: The "avocado-shaped armchair" picture generated by AI after receiving the information

More than just In addition, this model can also enable AI to understand complex knowledge such as species, geometry, and historical eras.

But it is not easy to achieve all this. The traditional development of NLP models has extremely high computing power costs and technical thresholds.

In fact, if we only discuss numbers, the 2 billion parameters of the model developed by Cerebras seem a bit mediocre compared with its peers.

The DALL.E model mentioned earlier has 12 billion parameters, and the largest model currently is Gopher, launched by DeepMind at the end of last year, with 280 billion parameters.

But apart from the staggering numbers, the NLP developed by Cerebras has a huge breakthrough: it reduces the difficulty of developing NLP models.

According to the traditional process, developing NLP models requires developers to divide huge NLP models into several functional parts and spread their workload across hundreds or thousands of graphics processing units.

Thousands of graphics processing units mean huge costs for manufacturers.

Technical difficulties also make manufacturers miserable.

The slicing model is a custom problem. Each neural network, the specifications of each GPU, and the network that connects (or interconnects) them together are unique and are not portable across systems.

Manufacturers must consider all these factors clearly before the first training.

This work is extremely complex and sometimes takes several months to complete.

Cerebras said this is "one of the most painful aspects" of NLP model training. Only a handful of companies have the necessary resources and expertise to develop NLP. For other companies in the AI industry, NLP training is too expensive, time-consuming, and unavailable.

But if a single chip can support a model with 2 billion parameters, it means that there is no need to use massive GPUs to spread the workload of training the model. This can save manufacturers thousands of GPU training costs and related hardware and scaling requirements. It also saves vendors from having to go through the pain of slicing up models and distributing their workloads across thousands of GPUs.

Cerebras is not just obsessed with numbers. To evaluate the quality of a model, the number of parameters is not the only criterion.

Rather than hope that the model born on the "giant core" will be "hard-working", Cerebras hopes that the model will be "smart".

The reason why Cerebras can achieve explosive growth in the number of parameters is because it uses weighted flow technology. This technology decouples the computational and memory footprints and allows memory to be expanded to be large enough to store any number of parameters that increase in AI workloads.

Thanks to this breakthrough, the time to set up a model has been reduced from months to minutes. And developers can switch between models such as GPT-J and GPT-Neo with "just a few keystrokes." This makes NLP development easier.

This has brought about new changes in the field of NLP.

As Dan Olds, Chief Research Officer of Intersect360 Research, commented on Cerebras’ achievements: “Cerebras’ ability to bring large language models to the masses in a cost-effective, accessible way opens up an exciting new space for artificial intelligence. ”

The above is the detailed content of The world's largest AI chip breaks the record for single-device training of large models, Cerebras wants to 'kill” GPUs. For more information, please follow other related articles on the PHP Chinese website!