In real-world projects, caching has become a key component of high-concurrency, high-performance architectures. So why can Redis be used as a cache? First, a cache has two main characteristics:

It sits in a fast layer of a layered system (memory or CPU cache) and offers good access performance.

Its capacity is limited, so once the cached data saturates it, the cache needs a good data eviction mechanism.

Redis naturally has both characteristics: it operates on data in memory and has a complete data eviction mechanism, which makes it very suitable as a cache component.

Because it operates in memory, Redis can offer a capacity of 32-96 GB with an average operation time of about 100 ns, so operations are highly efficient. It also offers many data eviction policies: since Redis 4.0 there are 8, which lets Redis serve as a cache in many scenarios.

So why does a Redis cache need a data eviction mechanism, and what are the 8 eviction policies?

The Redis cache is implemented based on memory, so its cache capacity is limited. When the cache is full, how should Redis handle it?

When the cache is full, Redis needs a data eviction mechanism that selects and deletes some data according to certain rules, so that the cache can keep serving requests. So which eviction policies does Redis use to delete data?

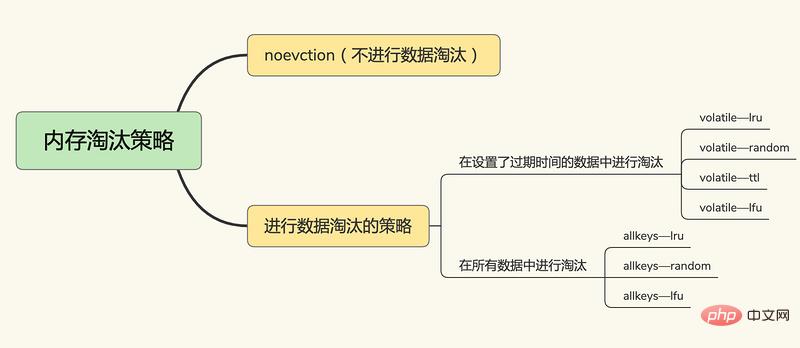

Since Redis 4.0 there are 8 cache eviction policies (the 6 earlier ones plus 2 LFU-based ones), which fall into three major categories:

No data eviction

noeviction: no data is evicted. When the cache is full, Redis stops accepting writes that need more memory and returns an error directly.

Eviction among key-value pairs that have an expiration time set

volatile-random: randomly deletes key-value pairs that have an expiration time set.

volatile-ttl: deletes key-value pairs that have an expiration time set according to that time; the earlier a key expires, the earlier it is deleted.

volatile-lru: uses the LRU (Least Recently Used) algorithm to select among key-value pairs that have an expiration time set, evicting the least recently used data.

volatile-lfu: uses the LFU (Least Frequently Used) algorithm to select among key-value pairs that have an expiration time set, evicting the ones that are used least frequently.

Eviction among all key-value pairs

allkeys-random: randomly selects and deletes data from all key-value pairs.

allkeys-lru: uses the LRU algorithm to select among all data.

allkeys-lfu: uses the LFU algorithm to select among all data.
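As a rough illustration of how the eight policies differ, the Python sketch below models each key with a last-access time, an access count, and an optional expiry, and picks one eviction victim per policy. The names `Key` and `pick_victim` are made up for this sketch, and it scans every key for simplicity, whereas real Redis works on a sample of keys.

```python
import random
from dataclasses import dataclass
from typing import Dict, Optional

POLICIES = {
    "noeviction",
    "volatile-random", "volatile-ttl", "volatile-lru", "volatile-lfu",
    "allkeys-random", "allkeys-lru", "allkeys-lfu",
}


@dataclass
class Key:
    last_access: float          # time of last access (larger = more recent)
    hits: int                   # access count, stands in for LFU frequency
    expire_at: Optional[float]  # None means no expiration time is set


def pick_victim(policy: str, keys: Dict[str, Key], rng: random.Random) -> Optional[str]:
    """Return the name of the key this policy would evict, or None."""
    if policy not in POLICIES:
        raise ValueError(f"unknown policy: {policy}")
    if policy == "noeviction":
        return None  # nothing is evicted; writes are rejected instead
    scope, rule = policy.split("-")  # e.g. ("volatile", "lru")
    pool = {name: k for name, k in keys.items()
            if scope == "allkeys" or k.expire_at is not None}
    if not pool:
        return None
    if rule == "random":
        return rng.choice(sorted(pool))
    if rule == "ttl":
        return min(pool, key=lambda name: pool[name].expire_at)
    if rule == "lru":
        return min(pool, key=lambda name: pool[name].last_access)
    return min(pool, key=lambda name: pool[name].hits)  # rule == "lfu"


keys = {
    "session": Key(last_access=50, hits=9, expire_at=300),
    "token": Key(last_access=90, hits=2, expire_at=120),
    "config": Key(last_access=10, hits=1, expire_at=None),
}
rng = random.Random(0)
```

With this data, the `volatile-*` policies never touch `config` because it has no expiration time, while `allkeys-lru` and `allkeys-lfu` both select it.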

Note: the classic LRU (Least Recently Used) algorithm maintains a doubly linked list whose head and tail are the MRU end and the LRU end, holding the most recently used and the least recently used data respectively. Implementing LRU this way requires a linked list that manages all cached data, which adds space overhead. In addition, every time data is accessed it must be moved to the MRU end of the list. When a lot of data is accessed this causes many list moves, which is time-consuming and would reduce Redis cache performance. For this reason Redis uses an approximation of LRU instead.
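The textbook LRU described in the note can be sketched in a few lines of Python. This is an illustration of the classic algorithm, not of what Redis does. `LRUCache` is a name chosen for this sketch, and `collections.OrderedDict` plays the role of the linked list.

```python
from collections import OrderedDict


class LRUCache:
    """Textbook LRU: an ordered map plays the role of the linked list."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.items = OrderedDict()  # first item = LRU end, last item = MRU end

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # every hit moves the entry to the MRU end
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict from the LRU end


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" becomes the most recently used entry
cache.put("c", 3)   # the cache is full, so "b" is evicted
```

The `move_to_end` call on every hit is exactly the per-access bookkeeping the note identifies as the cost of exact LRU.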

In Redis, LRU and LFU are implemented on top of the lru field of redisObject, the Redis object structure (the refcount field next to it is the object's reference count):

```c
typedef struct redisObject {
    unsigned type:4;
    unsigned encoding:4;
    /* Time the object was last accessed */
    unsigned lru:LRU_BITS; /* LRU time (relative to global lru_clock) or
                            * LFU data (least significant 8 bits frequency
                            * and most significant 16 bits access time). */
    /* Reference count */
    int refcount;
    void *ptr;
} robj;
```

Redis's LRU uses the lru field of redisObject to record the last access time. When it needs to evict, it randomly selects as many keys as the maxmemory-samples parameter specifies as a candidate set, and evicts the candidate with the smallest lru value.
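The sampling step can be sketched as follows. This is a simplified model rather than Redis's actual code: `evict_sampled_lru` is a hypothetical helper, the dictionary stands in for the per-object lru field, and `samples` plays the role of maxmemory-samples.

```python
import random
from typing import Dict


def evict_sampled_lru(lru_clock: Dict[str, int], samples: int, rng: random.Random) -> str:
    """Evict and return the sampled key with the smallest lru value."""
    candidates = rng.sample(sorted(lru_clock), min(samples, len(lru_clock)))
    victim = min(candidates, key=lambda k: lru_clock[k])
    del lru_clock[victim]
    return victim


# lru value per key: a smaller number means the key was accessed longer ago
lru_clock = {"k1": 100, "k2": 5, "k3": 42, "k4": 77, "k5": 63}
rng = random.Random(1)

# With samples equal to the number of keys, every key is a candidate,
# so the globally oldest key is the one evicted.
victim = evict_sampled_lru(lru_clock, samples=5, rng=rng)
```

With a smaller sample the victim is only the oldest key among those sampled, which trades some accuracy for not having to track every key in a list.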

In real projects, how should you choose a data eviction policy?

Redis cache modes

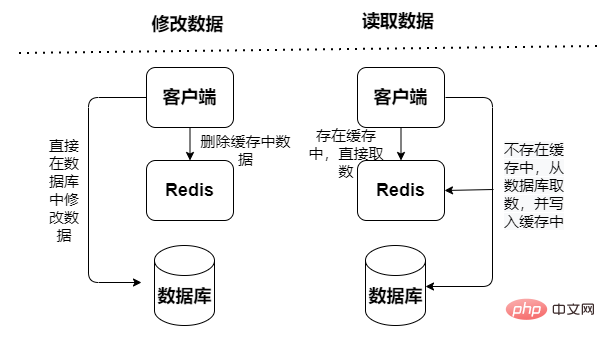

Read-only cache: the cache handles only read operations. All update operations are performed in the database, so there is no risk of data loss.

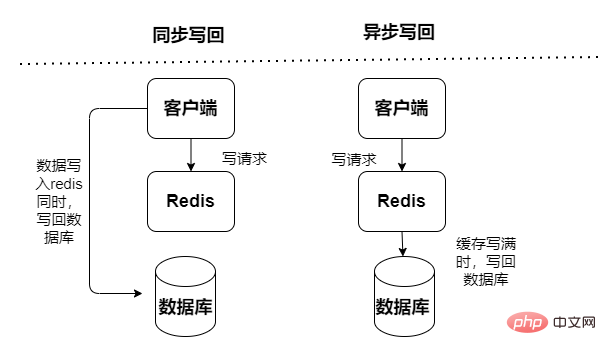

Read-write cache: both read and write operations are performed in the cache, so a crash or outage can cause data loss. Writing cached data back to the database can be done in two ways, synchronously or asynchronously.

Cache Aside mode: a query first reads from the cache. If the data is not in the cache, it is read from the database and then written into the cache. An update writes the database first and then invalidates the cached data.

Cache Aside mode carries a concurrency risk: a read misses the cache and queries the database. Before the result is put into the cache, a concurrent update writes the database and invalidates the cache. The read then loads its now outdated result into the cache, leaving dirty data in the cache.
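A minimal sketch of Cache Aside, assuming plain dictionaries in place of Redis and the database. The `CacheAside` class is hypothetical. The second half replays the race described above step by step in a single thread, to show how the stale value ends up cached.

```python
class CacheAside:
    """Cache Aside with plain dicts standing in for Redis and the database."""

    def __init__(self, db: dict):
        self.db = db
        self.cache = {}

    def read(self, key):
        if key in self.cache:          # cache hit
            return self.cache[key]
        value = self.db.get(key)       # cache miss: fall back to the database
        if value is not None:
            self.cache[key] = value    # populate the cache for later reads
        return value

    def update(self, key, value):
        self.db[key] = value           # 1. update the database first
        self.cache.pop(key, None)      # 2. then invalidate the cached copy


store = CacheAside(db={"price": 10})
first = store.read("price")            # miss, loaded from the database
store.update("price", 12)              # cache entry is invalidated
second = store.read("price")           # miss again, reloads the new value

# Replay of the race, one step at a time:
racy = CacheAside(db={"price": 10})
stale = racy.db.get("price")           # reader misses the cache, reads 10
racy.update("price", 12)               # writer updates the DB, invalidates cache
racy.cache["price"] = stale            # reader now caches the old value
```

After the replay, the database holds 12 while reads keep returning the cached 10 until the entry is invalidated again or expires.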

Read/Write-Through mode: both queries and updates go directly to the cache service, and the cache service synchronously writes updates to the database. The probability of dirty data is low, but the application depends heavily on the cache and places high demands on the stability of the cache service. Synchronous updates also cost performance.
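The synchronous variant might look like the sketch below, again with dictionaries as stand-ins. `WriteThroughCache` is a hypothetical name. The point is that the caller talks only to the cache layer, which performs the database write before returning.

```python
class WriteThroughCache:
    """Synchronous sketch: the cache layer writes to the database itself."""

    def __init__(self, db: dict):
        self.db = db
        self.cache = {}

    def read(self, key):
        if key not in self.cache:
            value = self.db.get(key)
            if value is None:
                return None
            self.cache[key] = value
        return self.cache[key]

    def write(self, key, value):
        self.db[key] = value       # synchronous database write comes first, so
        self.cache[key] = value    # a failure there leaves the cache untouched


db = {}
wt = WriteThroughCache(db)
wt.write("stock", 7)
```

Every `write` pays the latency of the database call, which is the performance cost mentioned above.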

Write-Behind mode: both queries and updates go directly to the cache service, but the cache service writes updates to the database asynchronously (through asynchronous tasks). This is fast and very efficient, but data consistency is relatively poor, data may be lost, and the implementation logic is relatively complex.
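The asynchronous variant can be sketched with a queue of pending writes. `WriteBehindCache` and its `flush` method are hypothetical. In a real system `flush` would run in a background task, which is left out here to keep the example deterministic.

```python
from collections import deque


class WriteBehindCache:
    """Asynchronous sketch: writes are queued and persisted later."""

    def __init__(self, db: dict):
        self.db = db
        self.cache = {}
        self.pending = deque()     # writes not yet persisted

    def write(self, key, value):
        self.cache[key] = value
        self.pending.append((key, value))   # returns without touching the DB

    def read(self, key):
        return self.cache.get(key, self.db.get(key))

    def flush(self) -> int:
        """Persist queued writes in order; return how many were written."""
        count = 0
        while self.pending:
            key, value = self.pending.popleft()
            self.db[key] = value
            count += 1
        return count


db = {}
wb = WriteBehindCache(db)
wb.write("views", 1)
wb.write("views", 2)
before_flush = dict(db)     # the database has not seen either write yet
flushed = wb.flush()
```

Anything still in `pending` when the process dies is lost, which is the data-loss risk this mode accepts in exchange for fast writes.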

In real project development, choose the cache mode according to the requirements of the business scenario. With that understood, why do we need a Redis cache in our applications?

Using a Redis cache in an application improves system performance and concurrency, mainly in two ways:

High performance: in-memory lookups, a key-value structure, and simple operation logic.

High concurrency: MySQL can handle only about 2,000 requests per second, while Redis can easily exceed 10,000 per second. Letting more than 80% of queries be served by the cache and fewer than 20% reach the database greatly improves system throughput.
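The throughput claim can be checked with a small calculation. It uses the rough figures from the text and assumes the cache itself is never the bottleneck. `max_total_qps` is a name invented for this sketch.

```python
def max_total_qps(db_qps: float, cache_hit_ratio: float) -> float:
    """Largest total query rate at which the database stays within db_qps.

    Only the miss fraction (1 - cache_hit_ratio) reaches the database.
    Assumes the cache itself is not the bottleneck.
    """
    if not 0 <= cache_hit_ratio < 1:
        raise ValueError("cache_hit_ratio must be in [0, 1)")
    return db_qps / (1 - cache_hit_ratio)


total = max_total_qps(db_qps=2000, cache_hit_ratio=0.8)
```

With a database limit of 2,000 queries per second and an 80% hit ratio, the system as a whole can take roughly 10,000 queries per second, of which about 8,000 are answered by the cache.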

Although a Redis cache can greatly improve system performance, using a cache also introduces some problems, such as inconsistency between the cache and the database on double writes, cache avalanche, and so on. How can these problems be solved?

The main problems that arise when using a cache are:

Double-write inconsistency between the cache and the database;

Cache avalanche: the Redis cache cannot serve a large number of application requests, which are passed on to the database layer and cause a surge in pressure there;

Cache penetration: the requested data exists in neither the Redis cache nor the database, so a large number of requests pass through the cache straight to the database, causing a surge in pressure on the database layer;

Cache breakdown: the cache cannot serve high-frequency hot data, so the high-frequency requests hit the database directly, causing a surge in pressure on the database layer.
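Cache penetration is the easiest of these to reproduce in a few lines. In the sketch below, `CountingDB`, `read`, and the `MISSING` sentinel are hypothetical names. The `cache_missing` option shows one commonly used mitigation, caching the fact that a key is absent. That mitigation is not taken from this article, and in practice such entries would normally carry a short expiry.

```python
class CountingDB:
    """Fake database that counts how many lookups reach it."""

    def __init__(self, rows: dict):
        self.rows = rows
        self.lookups = 0

    def get(self, key):
        self.lookups += 1
        return self.rows.get(key)


MISSING = object()  # sentinel cached in place of "no such row"


def read(key, cache: dict, db: CountingDB, cache_missing: bool):
    if key in cache:
        value = cache[key]
        return None if value is MISSING else value
    value = db.get(key)
    if value is not None:
        cache[key] = value
    elif cache_missing:
        cache[key] = MISSING   # mitigation: remember that the key is absent
    return value


plain_db = CountingDB({"user:1": "alice"})
plain_cache = {}
for _ in range(100):
    read("user:999", plain_cache, plain_db, cache_missing=False)

guarded_db = CountingDB({"user:1": "alice"})
guarded_cache = {}
for _ in range(100):
    read("user:999", guarded_cache, guarded_db, cache_missing=True)
```

Without the mitigation all 100 requests for the nonexistent key reach the database. With it, only the first one does.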

Read-only cache (Cache Aside mode)

For a read-only cache (Cache Aside mode), reads are served from the cache, and data inconsistency can arise only on delete and modify operations (inserts do not cause it, because new data is written only to the database). When such an operation occurs, the application marks the cached data as invalid and updates the database. Whichever order these two steps run in, if either of them fails, the cache and the database become inconsistent.
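One of the failure cases can be reproduced with a cache whose delete is made to fail. This sketch covers only the "update the database first, then delete the cache" order. `FlakyCache` and `update` are hypothetical names, and the failure is simulated with a flag.

```python
class FlakyCache(dict):
    """Dict-backed cache whose delete can be made to fail on demand."""

    fail_deletes = False

    def delete(self, key):
        if self.fail_deletes:
            raise ConnectionError("cache delete failed")
        self.pop(key, None)


def update(key, value, db: dict, cache: FlakyCache) -> bool:
    """Update the database, then invalidate the cache. True if both succeed."""
    db[key] = value
    try:
        cache.delete(key)
    except ConnectionError:
        return False    # database is new, cache still holds the old value
    return True


db = {"name": "old"}
cache = FlakyCache(name="old")
cache.fail_deletes = True
ok = update("name", "new", db, cache)
```

After the failed call the database holds `"new"` while the cache still serves `"old"`, which is the inconsistency described above.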
