Stephen Wolfram, the father of the Wolfram Language, has come to endorse ChatGPT again.

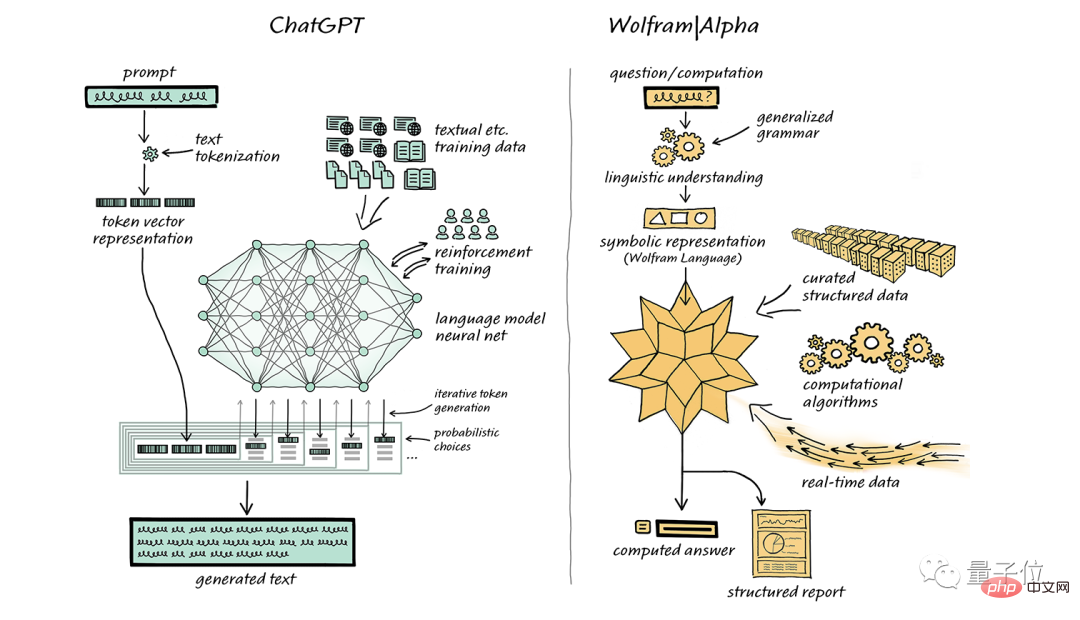

Last month he also wrote an article strongly recommending his own computational knowledge engine, WolframAlpha, in the hope of combining it with ChatGPT.

The general meaning is, "If your computing power is not up to standard, you can inject my 'super power' into it."

More than a month later, Stephen Wolfram has published another piece, built around two questions: "What is ChatGPT?" and "Why is it so effective?" The 10,000-word article explains both in simple, easy-to-understand terms.

(To ensure the reading experience, the following content will be narrated by Stephen Wolfram in the first person; there are easter eggs at the end of the article!)

ChatGPT's ability to automatically generate text that resembles human-written text is both remarkable and unexpected. So, how is it achieved? Why does it work so well at generating meaningful text?

In this article, I’ll give you an overview of the inner workings of ChatGPT and explore why it succeeds in generating satisfying text.

It should be noted that I will focus on the overall mechanism of ChatGPT. Although I will mention some technical details, I will not discuss them in depth. It should also be emphasized that what I say applies to other current "large language models" (LLMs) as well, not just ChatGPT.

The first thing that needs to be explained is that the core task of ChatGPT is always to generate a "reasonable continuation", that is, based on the existing text, generate the next reasonable content that conforms to human writing habits. The so-called "reasonable" refers to inferring the content that may appear next based on the statistical patterns of billions of web pages, digital books and other human-written content.

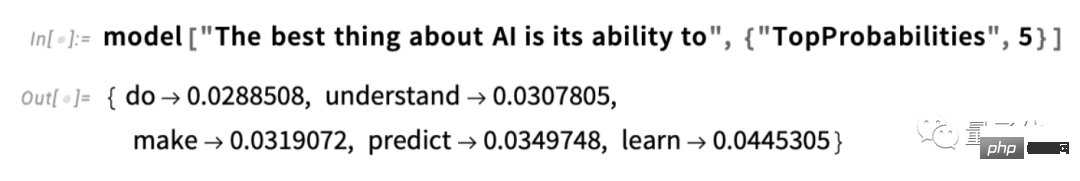

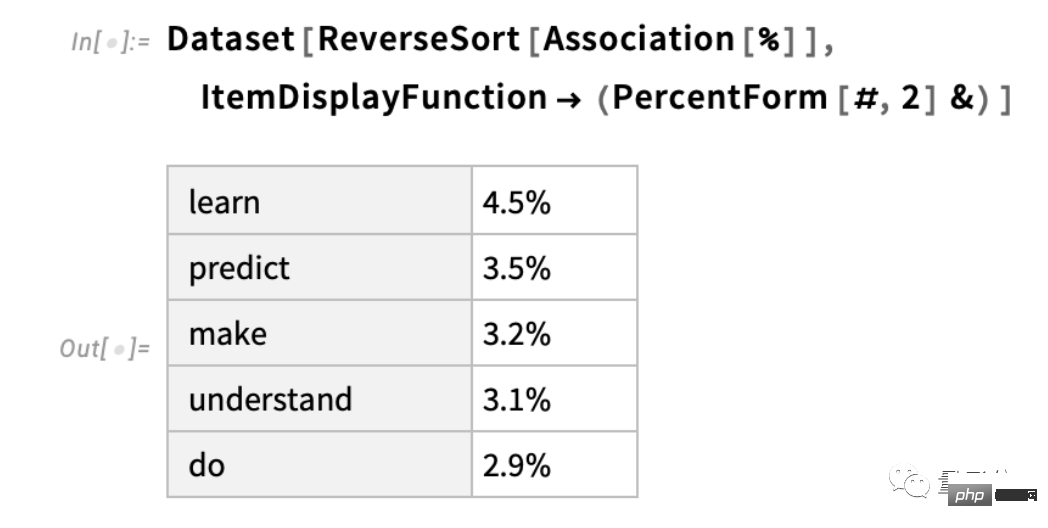

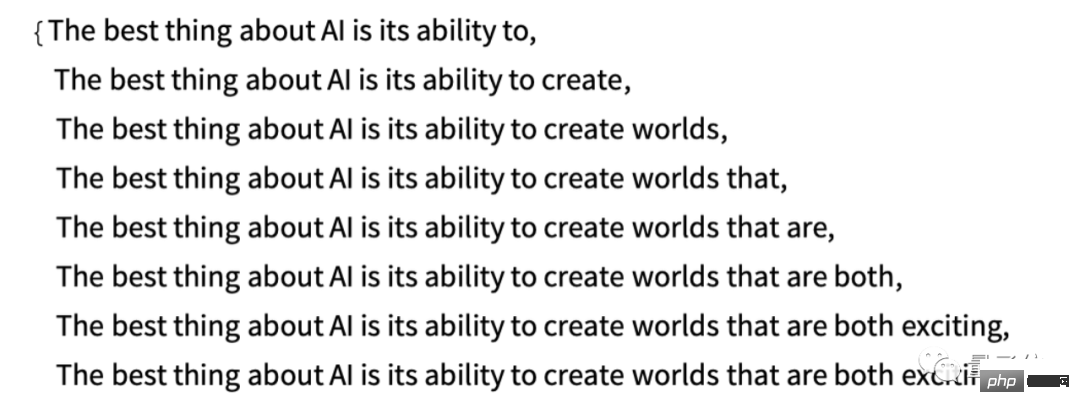

For example, if we enter the text "The best thing about AI is its power", ChatGPT in effect looks for similar text across billions of pages of human writing and works out how often each possible next word follows. It should be noted that ChatGPT does not compare the literal text; it looks for things that, in a certain sense, "match in meaning". In the end, ChatGPT produces a ranked list of possible next words, each with a probability:

It is worth noting that when ChatGPT does something like write an article, it is really just asking over and over again: "Given the text so far, what should the next word be?", and adding one word each time. (More precisely, as I will explain, it adds a "token", which may be just part of a word; this is why it can sometimes "make up new words".)

At each step, it gets a list of words with probabilities. But which word should it choose to add to the article (or anything else) it's writing?

One might think that the "highest ranked" word (i.e. the word assigned the highest "probability") should always be chosen. But this is where something mysterious starts to creep in. For some reason (and maybe one day we will have a scientific understanding of it), if we always choose the highest-ranked word, we usually end up with a very "bland" article that never shows any creativity (and sometimes even repeats itself word for word). If we sometimes (randomly) choose a lower-ranked word, we get a "more interesting" article.

The presence of randomness here means that if we use the same prompt multiple times, we are likely to get a different article each time. In keeping with the voodoo feel of all this, there is a specific so-called "temperature" parameter that determines how often lower-ranked words are used. For article generation, a "temperature" of 0.8 seems to work best. It is worth emphasizing that no "theory" is being used here; it is simply what has been found to work in practice. For example, the concept of "temperature" is there because exponential distributions (familiar from statistical physics) happen to be used, but there is no "physical" connection between the two, at least as far as we know.
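To make the role of "temperature" concrete, here is a minimal Python sketch. It is not the code from the original article (which uses Wolfram Language); the function names and the five example probabilities are illustrative assumptions. It assumes the common convention that each probability is raised to the power 1/T and the result is renormalized, so that T = 0 always picks the top word and larger T gives lower-ranked words more of a chance.

```python
import math
import random

def apply_temperature(probs, temperature):
    """Rescale a next-word distribution; temperature 0 means 'always pick the top word'."""
    if temperature == 0:
        top = max(probs, key=probs.get)
        return {w: (1.0 if w == top else 0.0) for w in probs}
    # p ** (1/T), renormalized, is the same as softmax(log(p) / T).
    scaled = {w: math.exp(math.log(p) / temperature) for w, p in probs.items()}
    total = sum(scaled.values())
    return {w: s / total for w, s in scaled.items()}

def sample_word(probs, temperature, rng):
    """Draw one word at random according to the rescaled probabilities."""
    rescaled = apply_temperature(probs, temperature)
    words = list(rescaled)
    return rng.choices(words, weights=[rescaled[w] for w in words], k=1)[0]

# Hypothetical probabilities for five candidate next words (illustrative values only).
raw = {"learn": 0.045, "predict": 0.035, "make": 0.032, "understand": 0.031, "do": 0.029}
norm = sum(raw.values())
next_word_probs = {w: p / norm for w, p in raw.items()}

rng = random.Random(0)
print(sample_word(next_word_probs, 0, rng))                          # always the top word
print([sample_word(next_word_probs, 0.8, rng) for _ in range(5)])    # varies with the seed
```

Running the last line with different seeds gives different word choices, which is the source of the variation between generated articles.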

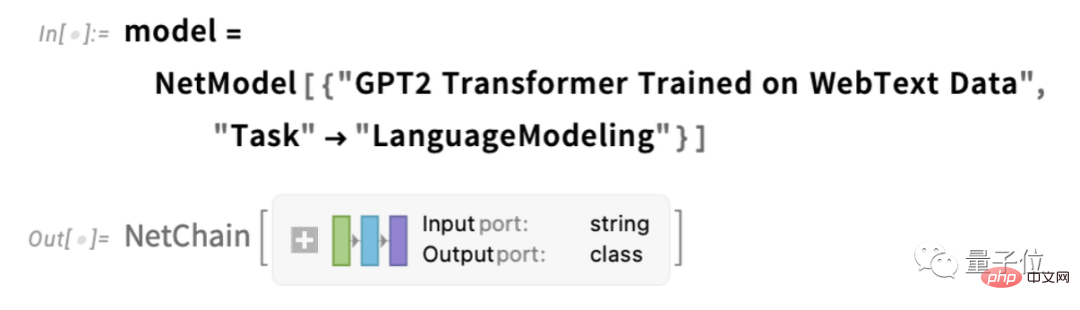

Before I continue, I should explain that, for the sake of presentation, I mostly don't use the full system in ChatGPT; instead, I usually work with the simpler GPT-2 system, which has the nice feature that it is small enough to run on a standard desktop computer. As a result, almost everything I show comes with explicit Wolfram Language code that you can run on your own computer right away.

For example, the picture below shows how to obtain the above probability table. First, we must retrieve the underlying "Language Model" neural network:

Later, we will dive into this neural network and discuss how it works. But for now, we can apply this "network model" as a black box to our text and ask for the top 5 words, by probability, that the model thinks should follow:

After the results are obtained, they are converted into an explicitly formatted "dataset":

Below is a case of repeatedly "applying the model" - adding at each step the word with the highest probability (specified in this code as the "decision" in the model):

What will happen if we continue? In this ("zero temperature") case, the text quickly becomes rather confused and repetitive.

But what if, instead of always picking the "top" word, we sometimes randomly pick "non-top" words (with the "randomness" corresponding to a "temperature" of 0.8)? We can again continue writing the text:

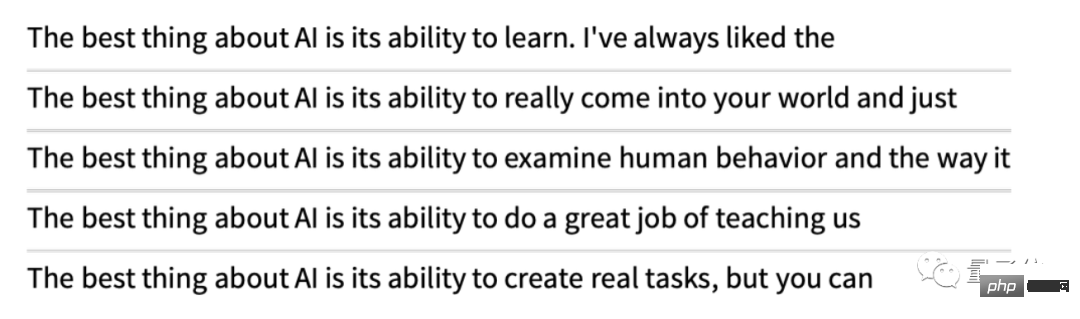

And every time we do this, there will be a different random selection and the corresponding text will be different. For example, the following 5 examples:

It is worth pointing out that even at the first step there are many possible "next words" to choose from (at a temperature of 0.8), although their probabilities fall off quickly (and yes, the straight line on this log-log plot corresponds to an n⁻¹ "power-law" decay, which is a very general statistical characteristic of language):

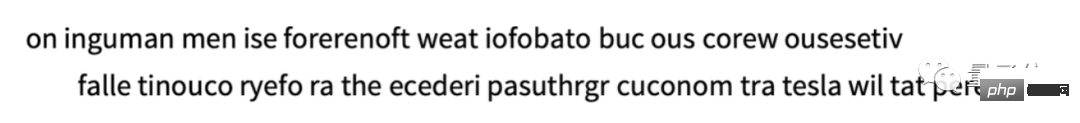

So what will happen if we continue to write? Here's a random example. It's a little better than using the highest ranked word (zero degree), but still a bit weird:

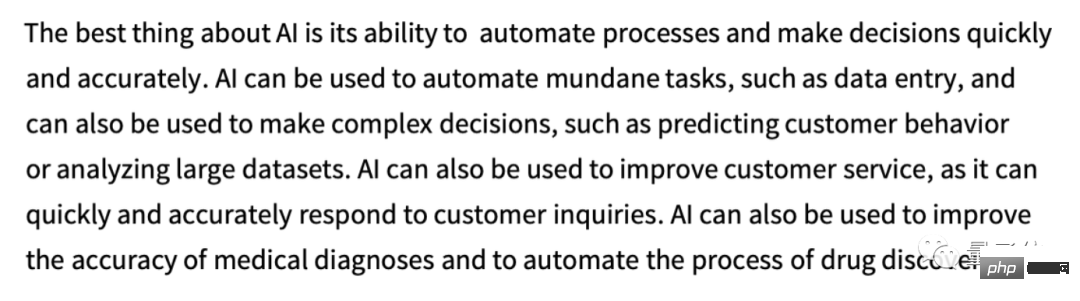

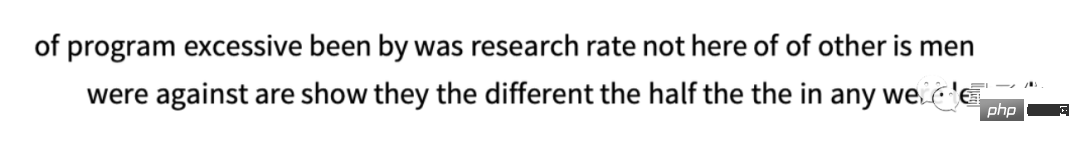

This was done with the simplest GPT-2 model (from 2019). The results are better with the newer and larger GPT-3 models. Here is the text generated by always using the highest-ranked word (zero temperature), with the same "prompt" but the largest GPT-3 model:

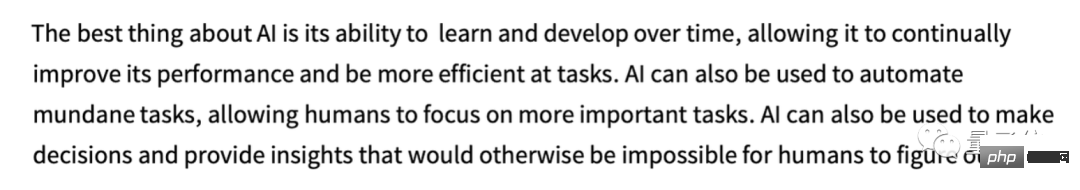

Next is a random example of "temperature is 0.8":

ChatGPT always chooses the next word based on probability. But where do these probabilities come from?

Let’s start with a simpler question. When we consider generating English text letter by letter (rather than word by word), how do we determine the probability of each letter?

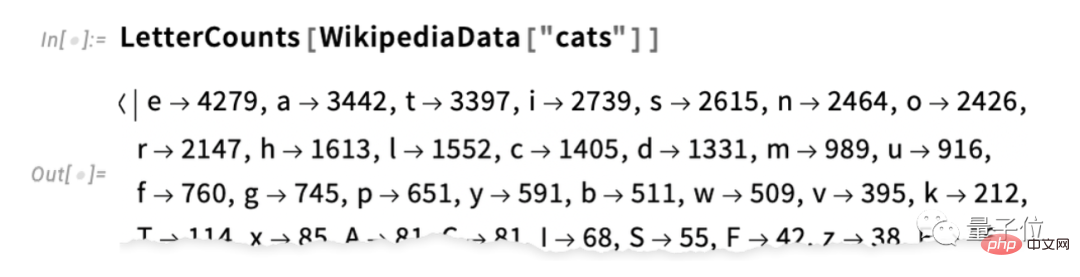

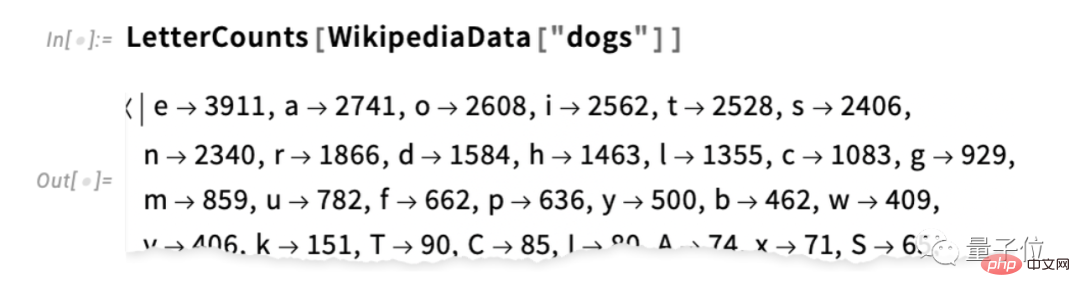

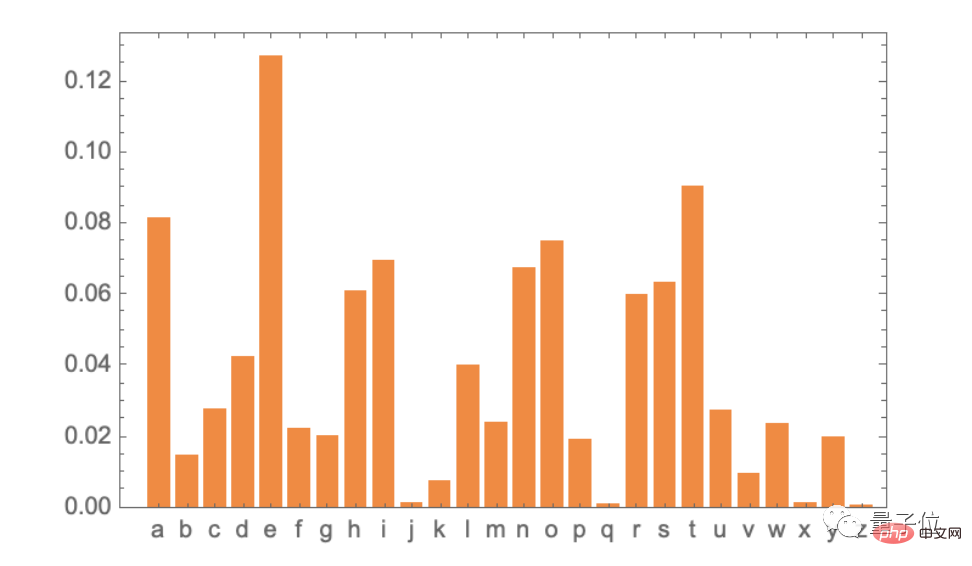

The simplest way is to take a sample of English text and count how often each letter occurs in it. For example, here are the letter counts for the Wikipedia article on "cats" (the counts themselves are omitted here):

This is the same thing for "dogs":

The results are similar, but not exactly the same (after all, "o" is more common in the "dogs" article because it appears in the word "dog" itself). However, if we take a large enough sample of English text, we can expect to eventually get at least fairly consistent results:

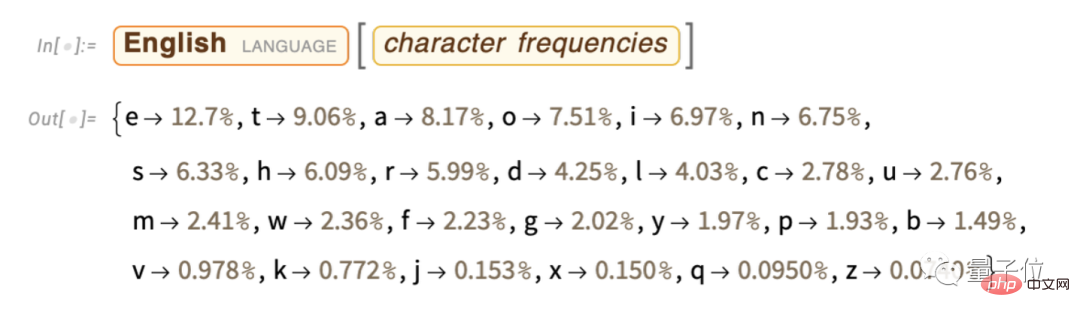

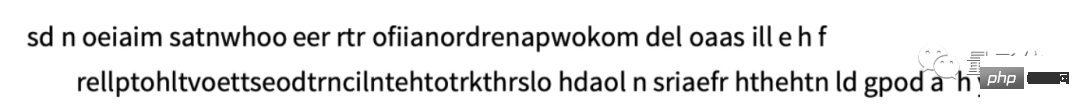

Here is a sample of what we get if we generate a sequence of letters using just these probabilities:

We can break this into "words" by, say, treating the space as if it were a letter with a certain probability:

But now let’s assume that, as with ChatGPT, we’re dealing with whole words, not letters. There are approximately 40,000 common words in English. By looking at a large amount of English text (e.g. millions of books with tens of billions of words), we can estimate the frequency of each word. Using this estimate, we can start generating "sentences" where each word is independently chosen at random with the same probability that it appears in the corpus. Here's a sample of what we got:
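The letter-counting experiment is easy to reproduce in miniature. The sketch below is a Python stand-in, not the article's Wolfram Language code; the function names and the one-sentence sample text are assumptions chosen for illustration. It counts letters (treating the space as one more symbol) and then draws each character independently from the resulting frequencies.

```python
import random
from collections import Counter

def letter_probabilities(text):
    """Relative frequency of each letter (and the space) in a text sample."""
    chars = [c for c in text.lower() if c.isalpha() or c == " "]
    counts = Counter(chars)
    total = sum(counts.values())
    return {c: n / total for c, n in counts.items()}

def sample_letters(probs, length, rng):
    """Generate text by drawing each character independently from the frequencies."""
    symbols = sorted(probs)
    weights = [probs[s] for s in symbols]
    return "".join(rng.choices(symbols, weights=weights, k=length))

# Stand-in sample; a real experiment would use a much larger body of text.
sample_text = "the cat sat on the mat and the dog sat on the log"
probs = letter_probabilities(sample_text)
print(sample_letters(probs, 40, random.Random(0)))
```

Because every character is chosen independently, the output has roughly the right letter frequencies and "word" lengths but no real words, which matches the point the article makes.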

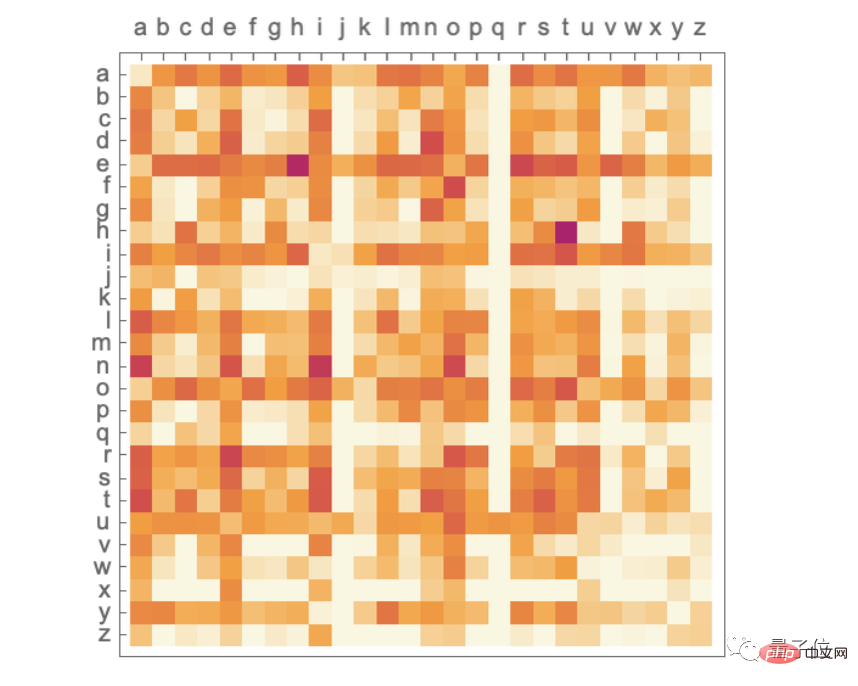

Unsurprisingly, this is nonsense. So what can we do to generate better sentences? Just like with letters, we can start thinking about the probability of not just words, but also word pairs or longer n-grams. For word pairs, here are 5 examples, all starting with the word "cat":

This looks slightly "more meaningful". If we could use long enough n-grams, we might imagine essentially "getting a ChatGPT", that is, something that generates long sequences of words with "the correct overall article probabilities". But here's the problem: not nearly enough English text has ever been written to be able to deduce these probabilities.
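The word-pair idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions (a made-up 17-word corpus and hypothetical function names), not the article's code: it counts which words follow which, then generates text by repeatedly sampling a follower of the most recent word.

```python
import random
from collections import Counter, defaultdict

def build_bigram_counts(words):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, rng):
    """Extend `start` one word at a time, sampling from observed followers."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:          # the last word was never seen with a follower
            break
        choices = list(followers)
        weights = [followers[w] for w in choices]
        out.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(out)

# Tiny made-up corpus; real estimates need vastly more text.
corpus = "the cat sat on the mat the cat ate the fish the dog sat on the log".split()
bigrams = build_bigram_counts(corpus)
print(generate(bigrams, "cat", 6, random.Random(0)))
```

Every adjacent pair in the output was seen in the corpus, so the text looks locally plausible even though the sentence as a whole need not make sense.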

There may be tens of billions of words in a crawl of the web, and tens of billions more in digitized books. But even with 40,000 common words, the number of possible 2-grams is already 1.6 billion, and the number of possible 3-grams is a whopping 60 trillion. So there is no way to estimate the probabilities of all of these from the text that exists. And by the time we get to 20-word "essay fragments", the number of possibilities exceeds the number of particles in the universe, so in a sense they could never all be written down.
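The counting argument can be checked directly. In the short Python sketch below, the 40,000-word vocabulary comes from the text; the figure of 10^80 particles in the universe is a commonly quoted order-of-magnitude estimate and is an assumption, not something stated in the article. The exact 3-gram count is 64 trillion, which the text rounds to 60 trillion.

```python
vocabulary = 40_000                      # common English words, as stated in the text

two_grams = vocabulary ** 2              # possible word pairs
three_grams = vocabulary ** 3            # possible word triples
twenty_grams = vocabulary ** 20          # possible 20-word fragments

# Assumed order-of-magnitude estimate (not from the article).
particles_in_universe = 10 ** 80

print(f"{two_grams:,}")                  # 1,600,000,000
print(f"{three_grams:,}")                # 64,000,000,000,000
print(twenty_grams > particles_in_universe)   # True
```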

So, what should we do? The key idea is to build a model that allows us to estimate the probability that sequences should occur even though we have never explicitly seen these sequences in the text corpus we are looking at. At the core of ChatGPT is the so-called "large language model" (LLM), which is built to estimate these probabilities very well.

(For reasons of space, the chapters "What is a model", "Neural Network", "Machine Learning and Neural Network Training", "Practice and Knowledge of Neural Network Training", "Embedding Concept" and others are omitted from this translation; interested readers can find them in the original text.)

There is no doubt that ChatGPT is ultimately a huge neural network; the current version is a GPT-3 network with 175 billion weights. In many ways, this neural network is very similar to the other neural networks we have discussed, but it is one specifically designed to process language. Its most notable feature is a neural network architecture called the "Transformer".

In the first type of neural network we discussed above, each neuron in any given layer is essentially connected (with at least some weight) to every neuron in the previous layer. However, such a fully connected network is (presumably) overkill if you want to process data with a specific known structure. Therefore, in the early stages of processing images, it is common to use so-called convolutional neural networks ("convnets"), in which the neurons are effectively laid out on a grid analogous to the pixels of the image, and are connected only to neurons nearby on the grid.

The idea of the Transformer is to do something at least somewhat similar for the sequence of tokens that makes up a piece of text. However, rather than just defining a fixed region of the sequence within which connections can be made, the Transformer introduces the concept of "attention": paying more attention to some parts of the sequence than to others. Maybe one day it will make sense to just start with a generic neural network and do all the customization through training. But in practice, at least for now, it seems crucial to "modularize" things, as Transformers do, and as our brains probably do too.

So what does ChatGPT (or, more accurately, the GPT-3 network it is based on) actually do? Remember, its overall goal is to "reasonably" continue writing text based on what it sees from training (which includes looking at text from billions of pages across the web and beyond). So, at any given moment, it has a certain amount of text, and its goal is to pick an appropriate choice for the next token.

ChatGPT operates in three basic stages. First, it takes the sequence of tokens corresponding to the text so far and finds an embedding (i.e., an array of numbers) that represents them. It then operates on this embedding in the "standard neural network way", with values "rippling" through successive layers of the network, to produce a new embedding (i.e., a new array of numbers). Finally, it takes the last part of that array and generates from it an array of about 50,000 values that turn into probabilities for the different possible next tokens. (Yes, there happen to be about as many tokens as there are common English words, although only about 3,000 of the tokens are whole words; the rest are fragments.)

The key point is that each part of this pipeline is implemented by a neural network, and its weights are determined by the end-to-end training of the network. In other words, nothing is actually "explicitly designed" except the overall architecture; everything is "learned" from the training data.
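The three stages can be laid out as a toy pipeline. The Python sketch below is only an illustration of the data flow, under heavy assumptions: the five-word vocabulary, the 4-number embeddings, the three plain layers and the random (untrained) weights are all made up, and the layers here process each position separately, whereas the real network mixes information between positions through attention.

```python
import math
import random

VOCAB = ["the", "cat", "sat", "on", "mat"]   # toy vocabulary (the real one has ~50,000 tokens)
DIM = 4                                       # toy embedding length (GPT-2 uses 768)
rng = random.Random(0)

def random_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def vec_times_matrix(vector, matrix):
    """Multiply a row vector by a (len(vector) x cols) matrix."""
    cols = len(matrix[0])
    return [sum(v * row[j] for v, row in zip(vector, matrix)) for j in range(cols)]

# Untrained random weights stand in for what training would normally determine.
embedding_table = random_matrix(len(VOCAB), DIM)        # stage 1
layers = [random_matrix(DIM, DIM) for _ in range(3)]    # stage 2
unembedding = random_matrix(DIM, len(VOCAB))            # stage 3

def next_token_probabilities(token_ids):
    # Stage 1: look up an embedding vector for every token so far.
    vectors = [embedding_table[t] for t in token_ids]
    # Stage 2: let the values ripple through successive layers.
    for weights in layers:
        vectors = [[math.tanh(x) for x in vec_times_matrix(v, weights)] for v in vectors]
    # Stage 3: decode the last vector into one probability per vocabulary entry.
    logits = vec_times_matrix(vectors[-1], unembedding)
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

probabilities = next_token_probabilities([0, 1, 2])     # "the cat sat"
print(dict(zip(VOCAB, probabilities)))
```

With random weights the probabilities are meaningless; the point is only that text goes in as token numbers and a probability for every possible next token comes out.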

However, there are many details in the way the architecture is built - reflecting a variety of experience and knowledge of neural networks. While this is definitely a matter of detail, I thought it would be useful to discuss some of these details to at least understand what is required to build ChatGPT.

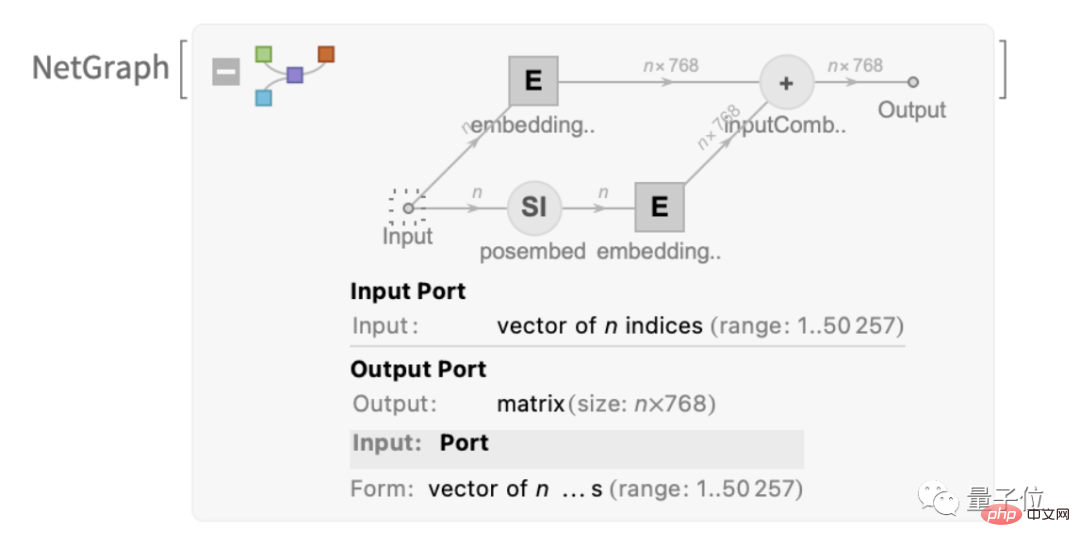

The first is the embedding module. This is a schematic diagram of GPT-2, expressed in Wolfram Language:

The embedding module has three main steps. In the first step, the text is converted into a sequence of tokens, and each token is converted by a single-layer neural network into an embedding vector of length 768 (for GPT-2) or 12,288 (for ChatGPT's GPT-3). At the same time, a "secondary pathway" in the module converts the integer position of each token into an embedding vector as well. Finally, the embedding vector from the token value and the embedding vector from the token position are added together to produce the final sequence of embedding vectors.

Why do we simply add the embedding vectors for the token value and the token position? There doesn't seem to be any particularly scientific explanation. Various things have been tried, and this is one that seems to work. It is also part of the lore of neural networks that, as long as the initial setup is "roughly right", enough training can usually sort out the details automatically, without anyone needing to really "understand at an engineering level" how the network has ended up configuring itself.
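The "add the two embeddings" step looks like this in a minimal Python sketch. The sizes, the two-word vocabulary and the random tables are assumptions for illustration; a table lookup is used here in place of the single-layer network, which is equivalent when the network's input is a one-hot encoding of the token.

```python
import random

rng = random.Random(0)
DIM = 8                 # toy length; the text gives 768 for GPT-2
MAX_POSITIONS = 32      # toy limit on sequence length
vocab = {"hello": 0, "bye": 1}

def random_vector():
    return [rng.gauss(0, 1) for _ in range(DIM)]

# In a trained model both tables are learned; random values stand in for them here.
token_table = [random_vector() for _ in vocab]
position_table = [random_vector() for _ in range(MAX_POSITIONS)]

def embed(text):
    """Return one vector per token: token embedding + position embedding."""
    token_ids = [vocab[word] for word in text.split()]
    return [
        [t + p for t, p in zip(token_table[tok], position_table[pos])]
        for pos, tok in enumerate(token_ids)
    ]

vectors = embed("hello hello bye bye")
print(len(vectors), len(vectors[0]))    # 4 vectors, each of length DIM
```

Note that the two "hello" tokens end up with different vectors, because their positions differ even though their token values are the same.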

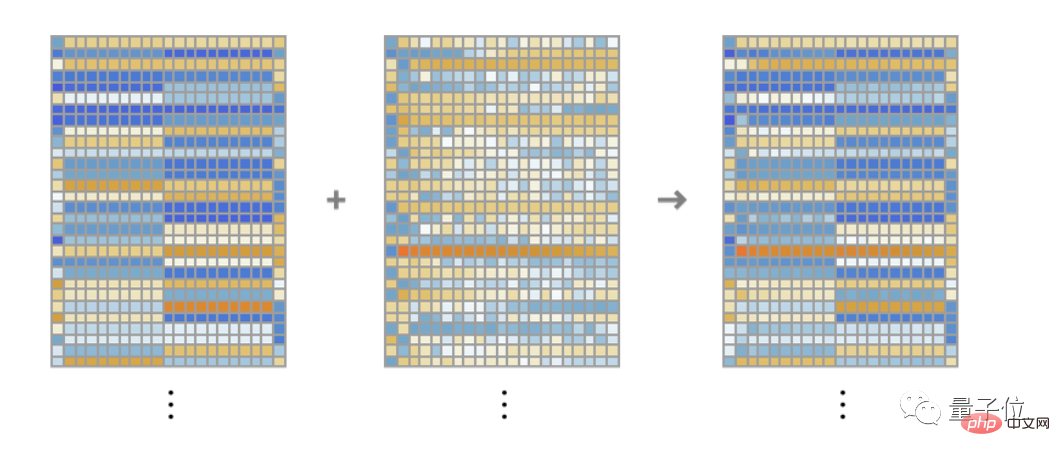

The job of this embedding module is to convert text into a sequence of embedding vectors. Taking the string "hello hello hello hello hello hello hello hello hello hello bye bye bye bye bye bye bye bye bye bye" as an example, it is converted into a series of embedding vectors of length 768, each combining information from the value and the position of a token.

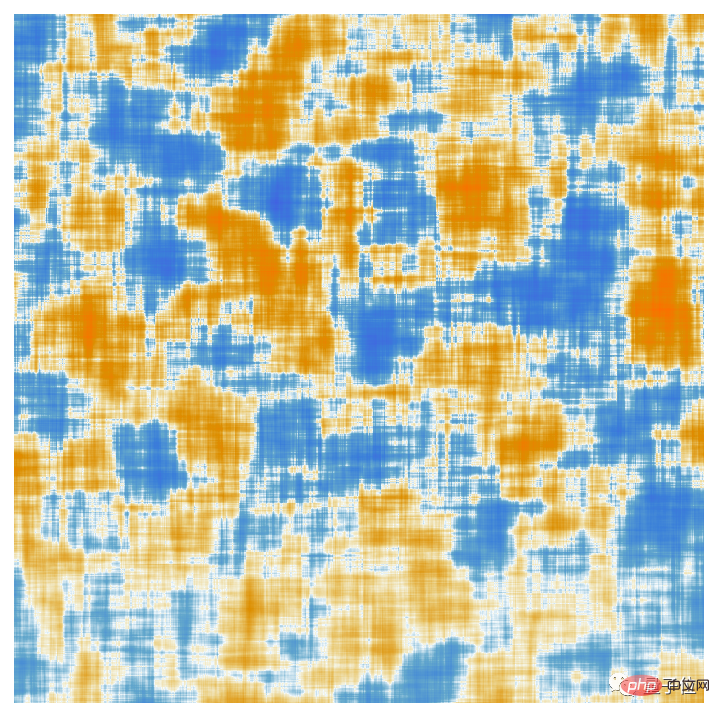

The elements of each token's embedding vector are shown here. Going across, we see a run of "hello" embeddings followed by a run of "bye" embeddings. The second array above is the positional embedding, whose seemingly random structure is just what "happened to be learned" (in this case in GPT-2).

Okay, after the embedding module comes the "main part" of the Transformer: a series of so-called "attention blocks" (12 for GPT-2, 96 for ChatGPT's GPT-3). It is all quite complicated, and reminiscent of large, hard-to-understand engineering systems, or, for that matter, biological systems. In any case, here is a diagram of a single "attention block" of GPT-2:

In each attention block there is a set of "attention heads" (GPT-2 has 12, ChatGPT's GPT-3 has 96), each of which operates independently on a different chunk of values in the embedding vector. (And yes, we don't know any particular reason why splitting up the embedding vector is a good idea, nor what its different parts "mean"; it is just one of the things that has been found to work.)

So what do the attention heads do? Basically, they are a way of "looking back" at the sequence of tokens (i.e. the text generated so far) and "packaging up" that history in a form that is useful for finding the next token. Above, we mentioned using 2-gram probabilities to pick a word based on the token immediately before it. The "attention" mechanism in the Transformer allows "attention" to be paid to much earlier words as well, potentially capturing, for example, the way verbs can refer to nouns that appear many words before them in a sentence.

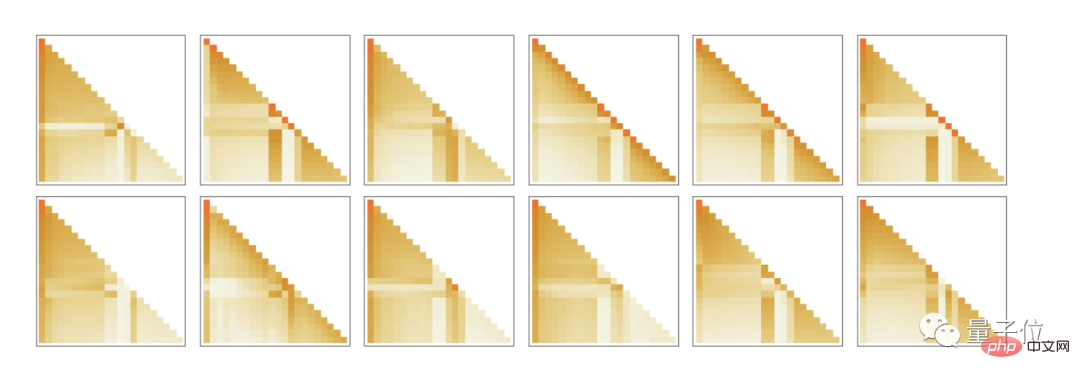

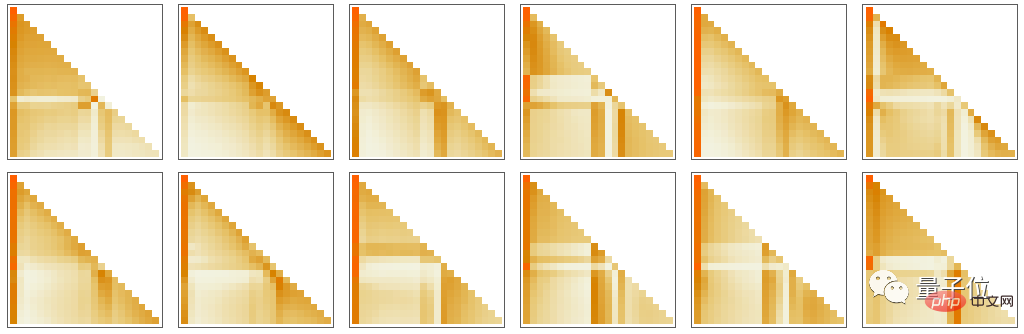

Specifically, what an attention head does is recombine the chunks of the embedding vectors associated with different tokens, with certain weights. So, for example, the 12 attention heads in the first attention block of GPT-2 have the following "recombination weight" patterns ("looking back" along the token sequence all the way to the beginning) for the "hello, bye" string above:
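The "recombine with weights, looking back only" behaviour can be sketched as a single attention head in plain Python. This uses the standard scaled dot-product formulation of attention with a causal restriction, which is an assumption on my part rather than something spelled out in the text; in a real head the queries, keys and values come from learned projections of the embedding chunks, whereas this toy passes the same small vectors for all three.

```python
import math

def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention_head(queries, keys, values):
    """Causal scaled dot-product attention over a sequence of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, weight_rows = [], []
    for i, q in enumerate(queries):
        # Position i may only look back at positions 0..i.
        scores = [dot(q, keys[j]) / scale for j in range(i + 1)]
        weights = softmax(scores)
        # The output is a weighted recombination of the earlier value vectors.
        mixed = [sum(w * values[j][d] for j, w in enumerate(weights))
                 for d in range(len(values[0]))]
        outputs.append(mixed)
        weight_rows.append(weights)
    return outputs, weight_rows

# Toy inputs (illustrative only): three positions, two numbers each.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention_head(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row is one token's set of recombination weights over itself and the tokens before it; stacking the rows gives a triangular pattern, since no token can attend to tokens that come after it.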

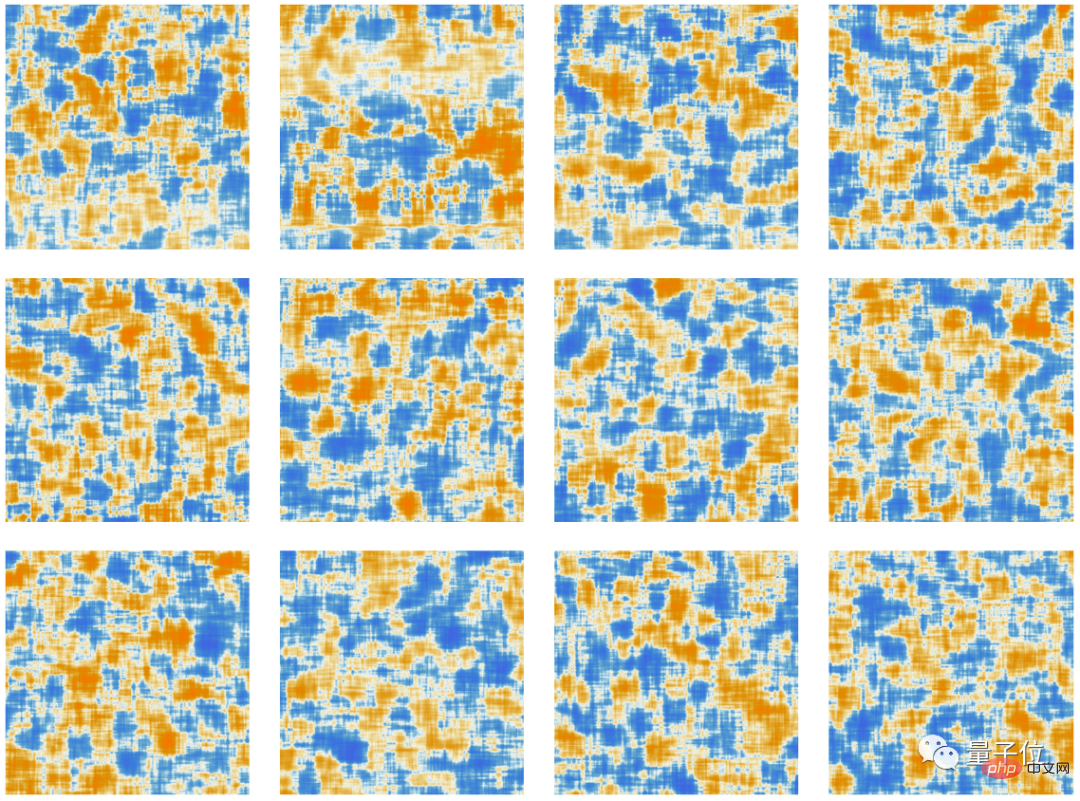

After processing by the attention mechanism, a "reweighted embedding vector" is obtained (of length 768 for GPT-2, and 12,288 for ChatGPT's GPT-3), which is then passed through a standard "fully connected" neural network layer. It is hard to understand what this layer is doing. But here is a plot of the 768×768 weight matrix it uses (here for GPT-2):

After taking a 64×64 moving average, some (random-walk-like) structure begins to appear:

What determines this structure? It may be some "neural network encoding" of the characteristics of human language. But as of now, what those characteristics might be is unknown. In effect, we are "opening up the brain" of ChatGPT (or at least GPT-2) and discovering that, yes, it is complicated in there and we don't understand it, even though in the end it produces recognizable human language.

Okay, after passing through one attention block we have a new embedding vector, which is then passed through further attention blocks in succession (12 in total for GPT-2, 96 for GPT-3). Each attention block has its own particular pattern of "attention" and "fully connected" weights. Here is the sequence of attention weights for the first attention head, for the "hello, bye" input (for GPT-2):

The following is the "matrix" of the fully connected layer (after moving average):

Interestingly, even though these "weight matrices" look quite similar across different attention blocks, the distributions of the sizes of the weights can be somewhat different (and are not always Gaussian):

So, what is the net effect of the Transformer after all these attention blocks? Essentially, it transforms the original collection of embeddings for the sequence of tokens into a final collection. The specific way ChatGPT works is then to pick the last embedding in this collection and "decode" it to produce a list of probabilities for the next token.

So, that’s an overview of the internals of ChatGPT. It may seem complicated (not least because of its many unavoidable, somewhat arbitrary "engineering choices"), but the elements ultimately involved are quite simple. In the end we are dealing with just a neural network made of "artificial neurons", each of which performs the simple operation of combining a set of numerical inputs with certain weights.

The original input to ChatGPT is an array of numbers (the embedding vectors for the tokens so far), and when ChatGPT "runs" to generate a new token, these numbers simply "ripple" through the layers of the neural network, with each neuron "doing its thing" and passing the result on to neurons in the next layer. There are no loops and no "going back". Everything just "feeds forward" through the network.

This is completely different from typical computational systems such as Turing machines, which repeatedly "reprocess" the results through the same computational elements. Here - at least in terms of generating a given output token - each computational element (i.e. neuron) is used only once.

But there is, in a sense, still an "outer loop" in ChatGPT that reuses computational elements. When ChatGPT is about to generate a new token, it always "reads" (i.e. takes as input) the entire sequence of tokens that came before, including the tokens that ChatGPT itself has "written" previously. We can think of this setup as meaning that ChatGPT does, at least at its outermost level, involve a "feedback loop", albeit one in which every iteration is explicitly visible as a token appearing in the text it generates.
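The outer loop can be written down in a few lines. In the Python sketch below, `toy_model` is a hypothetical stand-in for the feed-forward network: it receives the whole token list, as the real network does, but for simplicity only looks at the last token, and its lookup table is invented for illustration.

```python
import random

def toy_model(tokens):
    """Stand-in for the network: maps the token list so far to next-token weights."""
    table = {
        "the": {"cat": 0.6, "mat": 0.4},
        "cat": {"sat": 1.0},
        "sat": {"on": 1.0},
        "on": {"the": 1.0},
        "mat": {"<end>": 1.0},
    }
    return table[tokens[-1]]

def generate(prompt, max_new_tokens, rng):
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = toy_model(tokens)            # one feed-forward pass over everything so far
        choices = list(probs)
        weights = [probs[c] for c in choices]
        token = rng.choices(choices, weights=weights, k=1)[0]
        if token == "<end>":
            break
        tokens.append(token)                 # the output becomes part of the next input
    return tokens

print(" ".join(generate(["the"], 8, random.Random(0))))
```

The only loop is the one in `generate`: each pass through the model is a single forward computation, and the feedback happens entirely through the growing list of tokens.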

Let’s get back to the core of ChatGPT: the neural network used to generate each token. On one level, it's very simple: a collection of identical artificial neurons. Some parts of the network consist only of ("fully connected") layers of neurons, where every neuron on that layer is connected (with some weight) to every neuron on the previous layer. But especially in its Transformer architecture, ChatGPT has more structured parts, in which only specific neurons on specific layers are connected. (Of course, one can still say that "all neurons are connected", but that some of the connections have zero weight.)

Additionally, some aspects of the neural network in ChatGPT are not most naturally thought of as "homogeneous" layers. For example, within an attention block there are places where "multiple copies" of the incoming data are made, each of which then goes through a different "processing path", possibly involving a different number of layers, before being recombined later. While this may be a convenient way to describe what is going on, at least in principle it is always possible to think of "densely filling in" the layers and just having some weights be zero.

If you look at ChatGPT’s longest path, it’s about 400 layers (core layer) – not a huge number in some ways. But there are millions of neurons, a total of 175 billion connections, and therefore 175 billion weights. One thing to realize is that every time ChatGPT generates a new token, it has to do a calculation involving each weight. In terms of implementation, these calculations can be organized into highly parallel array operations, which can be easily completed on the GPU. But there are still 175 billion calculations required for each token produced (and a little more in the end) - so yes, it's no surprise that generating a long piece of text with ChatGPT takes a while.
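As a rough sanity check on the "175 billion weights" figure, the Python sketch below uses a common rule of thumb for Transformer sizes: about 12 × (number of blocks) × (embedding length)² weights in the attention blocks, plus the token and position embedding tables. The rule of thumb, the vocabulary size of 50,257 and the context lengths of 1,024 and 2,048 are assumptions taken from general knowledge of GPT-2 and GPT-3, not from the article; the block counts and embedding lengths are the ones given in the text. Biases and normalization weights are ignored.

```python
def approximate_weights(blocks, width, vocab_size, context_length):
    """Rule-of-thumb weight count for a GPT-style Transformer (an approximation)."""
    # Per block: roughly 4*width^2 for attention and 8*width^2 for the fully connected part.
    block_weights = 12 * blocks * width ** 2
    # Token embedding table plus position embedding table.
    embedding_weights = (vocab_size + context_length) * width
    return block_weights + embedding_weights

gpt2 = approximate_weights(blocks=12, width=768, vocab_size=50257, context_length=1024)
gpt3 = approximate_weights(blocks=96, width=12288, vocab_size=50257, context_length=2048)

print(f"GPT-2: about {gpt2 / 1e6:.0f} million weights")
print(f"GPT-3: about {gpt3 / 1e9:.0f} billion weights")
```

Under these assumptions the estimate for GPT-3 lands close to the 175 billion quoted in the text, and since every weight takes part in producing each token, it also gives a feel for the amount of arithmetic per token.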

But ultimately we also need to note that all of these operations somehow work together to accomplish such a "human-like" job of generating text. It must be emphasized again that (at least as far as we know) there is no "ultimate theoretical reason" why anything like this should work. In fact, as we'll discuss, I think we have to view this as a (potentially surprising) scientific discovery: in a neural network like ChatGPT, it is possible to capture the essence of what the human brain manages to do in generating language.

(The original article is very long; interested readers can follow the link at the end of this article to read the full text.)

Perhaps some readers noticed a few subtle changes when they opened this article:

Yes, the editor of the core content of this article is none other than ChatGPT!

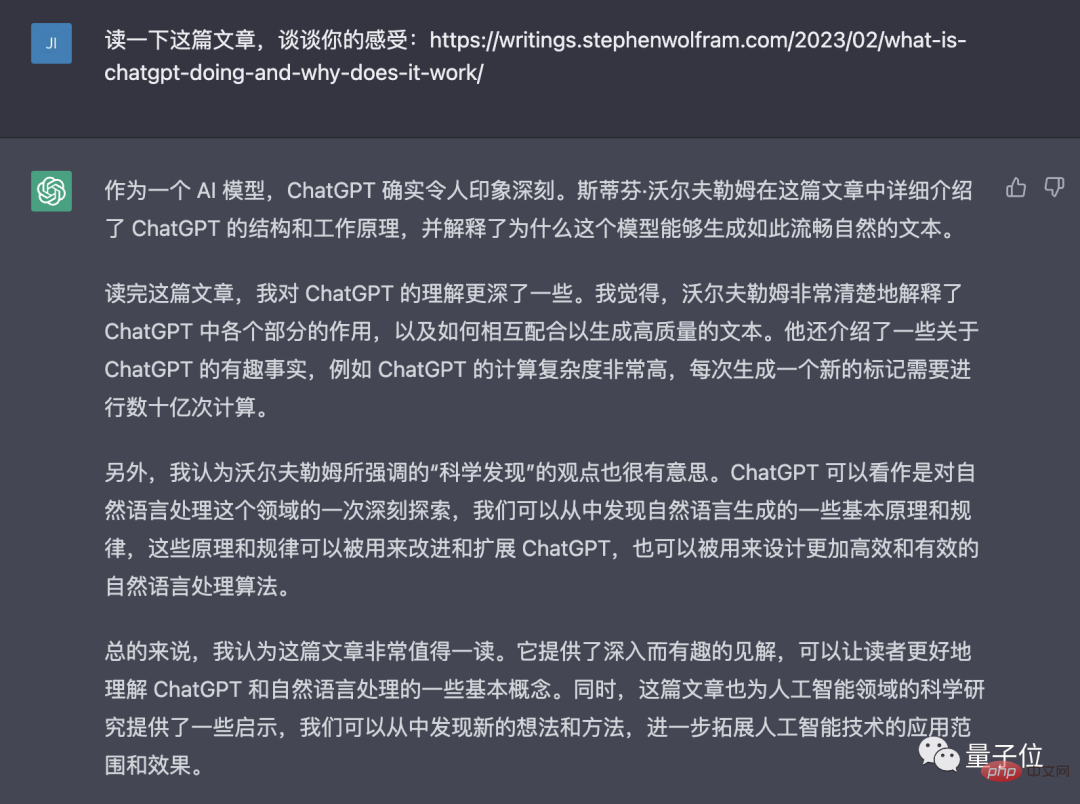

Also, it talks about its views on Stephen Wolfram’s article:

Reference link:

[1]//m.sbmmt.com/link/e3670ce0c315396e4836d7024abcf3dd

[2]//m.sbmmt.com/link/b02f0c434ba1da7396aca257d0eb1e2f

[3]//m.sbmmt.com/link/76e9a17937b75b73a8a430acf210feaf
