In the science fiction novel "The Three-Body Problem", the Trisolarans who attempt to occupy Earth are given an unusual trait: they share information through brain waves, so their thoughts are transparent and they are incapable of deception. For them, thinking and speaking are the same word. Humans, on the other hand, exploited the opacity of their own thinking to devise the "Wallfacer Project", which eventually deceived the Trisolarans and won a temporary victory.

So is human thinking really completely opaque? With the emergence of new technologies, the answer seems less certain. Many researchers are trying to unlock the mysteries of human thought by decoding brain signals into text, images and other information.

Recently, two research teams made important progress in decoding images from brain activity at almost the same time, and both papers were accepted by CVPR 2023.

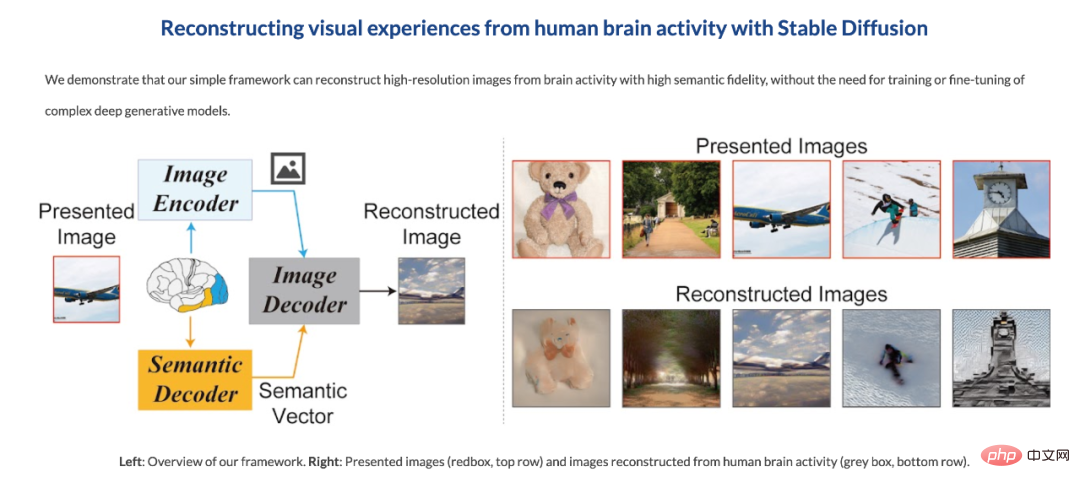

The first team is from Osaka University. They use the popular Stable Diffusion model to reconstruct high-resolution, high-fidelity images from human brain activity recorded by functional magnetic resonance imaging (fMRI) (see "Stable Diffusion reads your brain signals to reproduce images, and the research was accepted by CVPR").

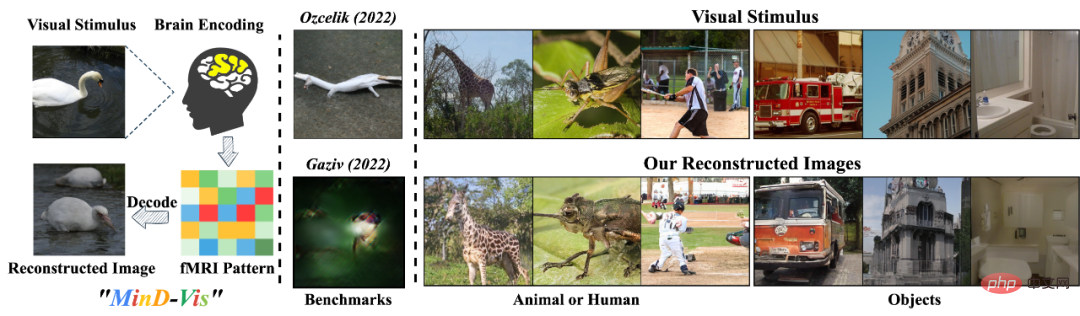

Coincidentally, at almost the same time, a Chinese research team from the National University of Singapore, the Chinese University of Hong Kong and Stanford University produced similar results. They developed a human visual decoder called "MinD-Vis", which decodes human visual stimuli directly from fMRI data using masked-modeling pre-training and a latent diffusion model. The generated images are not only reasonably detailed but also accurately reflect the semantics and features (such as texture and shape) of the original stimuli. The code for this research is open source.

Paper title: Seeing Beyond the Brain: Conditional Diffusion Model with Sparse Masked Modeling for Vision Decoding

Research Overview

"What you see is what you think." Human perception and prior knowledge are closely linked in the brain: our perception of the world is shaped not only by objective stimuli but also by our experience, and together these produce complex brain activity. Understanding and decoding this activity is one of the central goals of cognitive neuroscience, and decoding visual information is a particularly challenging part of it.

Functional magnetic resonance imaging (fMRI) is a widely used, effective non-invasive technique, and it has been used to recover visual information such as image categories.

The purpose of MinD-Vis is to explore the possibility of using deep learning models to decode visual stimuli directly from fMRI data.

Previous methods that decode complex neural activity directly from fMRI data suffer from a lack of {fMRI - image} pairs and of effective biological guidance, so their reconstructed images are usually blurry and semantically meaningless. Learning effective fMRI representations, which help establish the connection between brain activity and visual stimuli, is therefore an important challenge.

Individual variability further complicates the problem: representations must be learned from large datasets, and the constraints on conditional synthesis from fMRI need to be relaxed.

The authors therefore argue that self-supervised learning with a pretext task, combined with a large-scale generative model, lets the model be fine-tuned on a relatively small dataset while retaining contextual knowledge and strong generative ability. Guided by this analysis, MinD-Vis proposes masked signal modeling and a double-conditioned latent diffusion model for human visual decoding. The specific contributions are as follows:

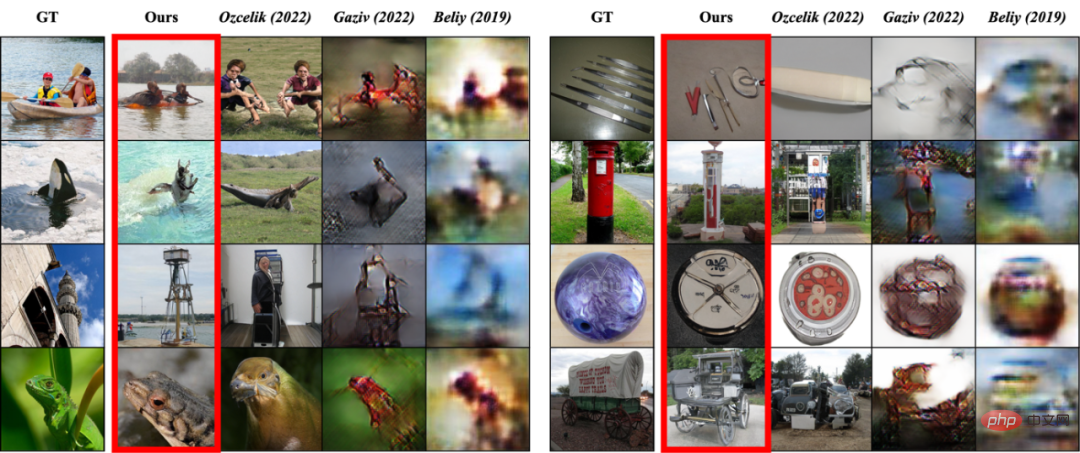

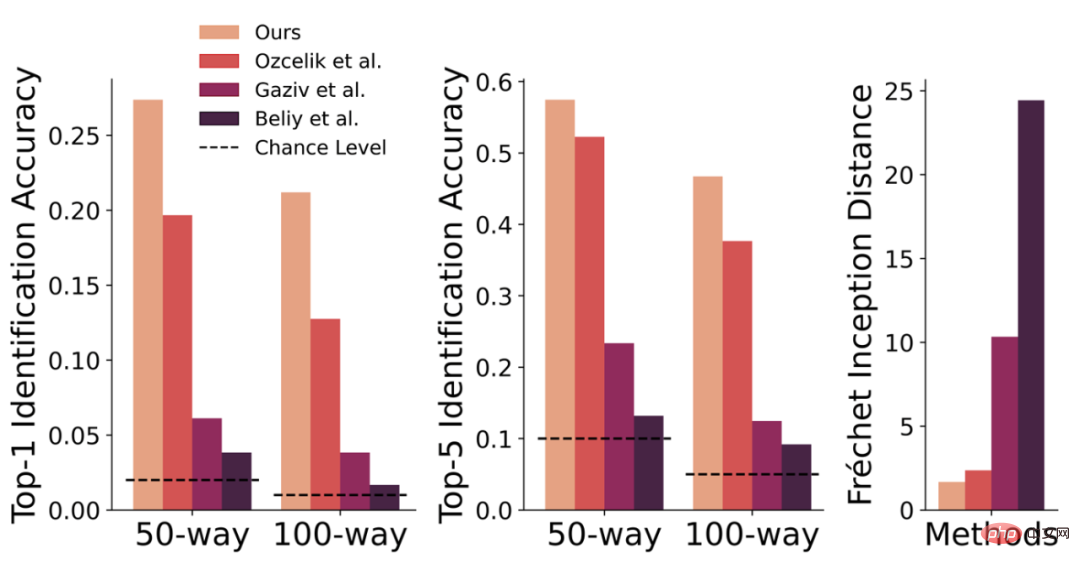

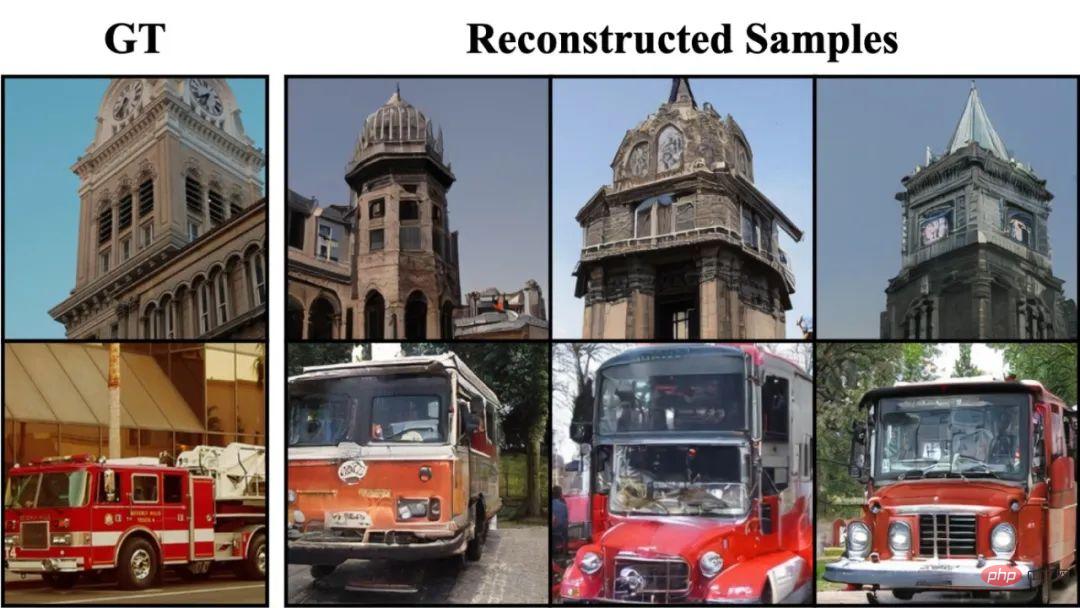

Comparison with previous methods – Generation quality

Comparison with previous methods – Quantitative comparison of evaluation metrics

Because collecting {fMRI - image} pairs is expensive and time-consuming, this task has always suffered from a shortage of labeled data. In addition, there is some domain shift between datasets and between individuals.

In this task, the researchers aimed to establish a connection between brain activity and visual stimuli and thereby generate corresponding image information.

To do this, they used self-supervised learning and large-scale generative models. They believe this approach allows models to be fine-tuned on relatively small datasets and gain contextual knowledge and stunning generative capabilities.

The following sections describe the MinD-Vis framework in detail, along with the reasoning behind its design.

fMRI data has these characteristics and problems:

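To address these issues, MinD-Vis consists of two stages: (A) Sparse-Coded Masked Brain Modeling (SC-MBM), which pre-trains an fMRI encoder on large-scale fMRI data, and (B) a Double-Conditioned Latent Diffusion Model (DC-LDM), which integrates the pre-trained encoder with a pre-trained LDM to generate images.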

Both stages are described in detail below.

MinD-Vis Overview

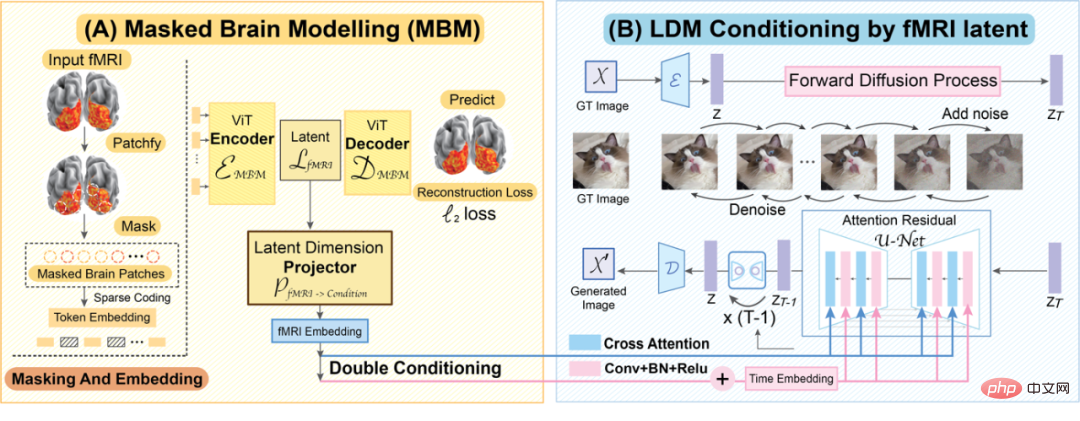

(A) Sparse-Coded Masked Brain Modeling (SC-MBM) (MinD-Vis Overview left)

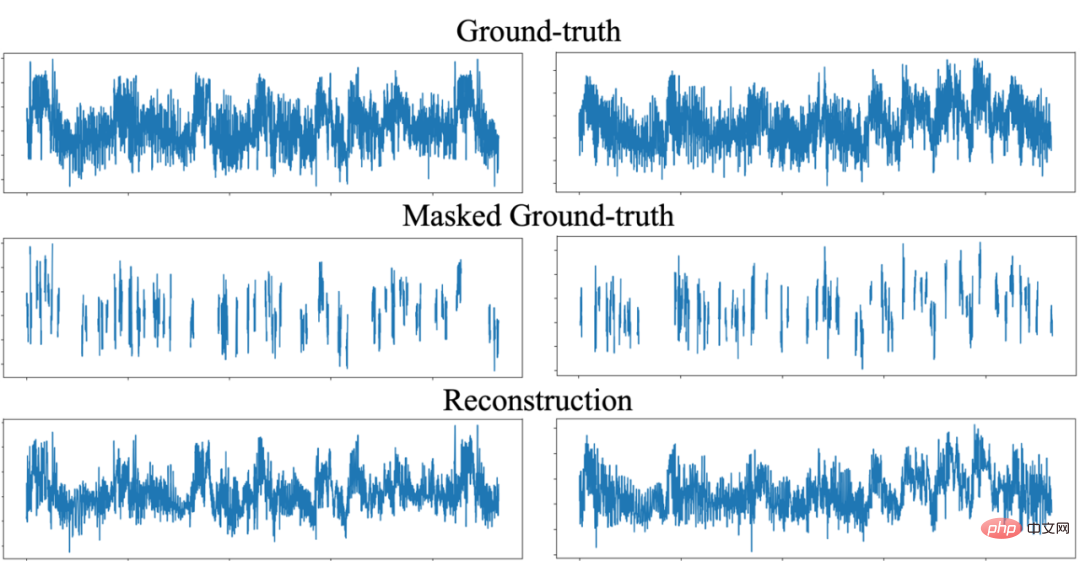

Because fMRI data are spatially redundant, they can still be recovered even when most of the signal is masked. The first stage of MinD-Vis therefore masks most of the fMRI data, which also saves computation time. Here, the authors use an approach similar to the Masked Autoencoder (MAE):

SC-MBM can effectively restore the masked fMRI information

How does this design differ from the Masked Autoencoder?

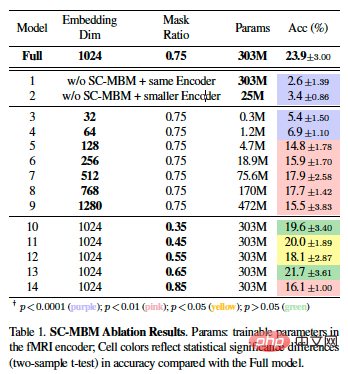

Ablation experiment of SC-MBM
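The masking idea behind SC-MBM (split the fMRI signal into patches, hide most of them, and feed only the visible ones to the encoder) can be sketched in a few lines. This is a minimal illustration in the MAE style, not the authors' code: the 64-voxel toy input, `patch_size=4` and `mask_ratio=0.75` are hypothetical values chosen for the example, and `patchify` / `random_mask` are illustrative helper names.

```python
import random

def patchify(voxels, patch_size):
    """Split a 1-D fMRI voxel vector into consecutive, non-overlapping patches.
    Trailing voxels that do not fill a whole patch are dropped."""
    n_patches = len(voxels) // patch_size
    return [voxels[i * patch_size:(i + 1) * patch_size] for i in range(n_patches)]

def random_mask(n_patches, mask_ratio, seed=None):
    """MAE-style random masking: shuffle the patch indices and keep only the
    first (1 - mask_ratio) fraction as visible. Returns (visible, masked)."""
    rng = random.Random(seed)
    idx = list(range(n_patches))
    rng.shuffle(idx)
    n_keep = int(round(n_patches * (1 - mask_ratio)))
    return sorted(idx[:n_keep]), sorted(idx[n_keep:])

# Toy stand-in for one fMRI frame (hypothetical values).
voxels = [float(v) for v in range(64)]
patches = patchify(voxels, patch_size=4)          # 16 patches of 4 voxels each
visible, masked = random_mask(len(patches), mask_ratio=0.75, seed=0)
encoder_input = [patches[i] for i in visible]     # only visible patches reach the encoder
print(len(patches), len(visible), len(masked))    # 16 4 12
```

Because the encoder only processes the visible quarter of the patches, a high mask ratio directly cuts the encoder's work, which is the computational saving mentioned above.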

(B) Double-Conditioned LDM (DC-LDM) (MinD-Vis Overview right)

After large-scale context learning in Stage A, the fMRI encoder can convert fMRI data into a sparse representation with locality constraints. The authors then formulate decoding as a conditional generation problem and solve it with a pre-trained LDM.
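For reference, the conditional generation problem can be written with the standard training objective of a conditional latent diffusion model, taken from the original LDM formulation with our own notation. Here $z_0$ is the latent code of the stimulus image, $y$ is the fMRI recording, $\tau_\theta$ is the fMRI encoder, $\epsilon_\theta$ is the denoising network, and $\bar\alpha_t$ is the cumulative noise schedule. Whether MinD-Vis trains with exactly this form or adds further terms is an assumption not confirmed by this article.

$$
z_t = \sqrt{\bar\alpha_t}\, z_0 + \sqrt{1-\bar\alpha_t}\,\epsilon, \qquad
\mathcal{L}_{\text{LDM}} = \mathbb{E}_{z_0,\, y,\, \epsilon \sim \mathcal{N}(0, I),\, t}\Big[\big\lVert \epsilon - \epsilon_\theta\big(z_t,\, t,\, \tau_\theta(y)\big) \big\rVert_2^2\Big]
$$

In words: noise is added to the image latent, and the network learns to predict that noise given the noisy latent, the timestep and the encoded fMRI signal. At inference time, iterating the learned denoiser from pure noise produces an image latent consistent with the fMRI condition.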

The authors demonstrate the stability of the method by decoding each image multiple times with different random seeds.

Fine-tuning

After the fMRI encoder is pre-trained with SC-MBM, it is integrated with the pre-trained LDM through double conditioning. Here, the authors:

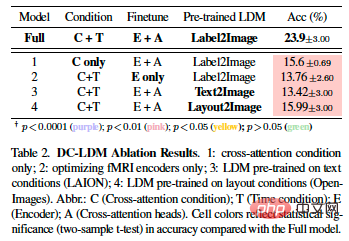

Ablation experiment of DC-LDM
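One reading of "double conditioning" is that the fMRI embedding enters the denoiser through two paths: cross-attention (latent queries attend over fMRI tokens) and an additive term on the timestep embedding. The sketch below illustrates that reading with plain Python lists. It is an assumption, not the authors' implementation: the single query vector, mean pooling of fMRI tokens, the projection `W_time` and all helper names are hypothetical, and `len(W_time)` must be even for the sinusoidal embedding.

```python
import math

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def softmax(scores):
    m = max(scores)                          # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(query, cond_tokens, Wq, Wk, Wv):
    """Single-head cross-attention: one image-latent query attends over the
    fMRI condition tokens, which supply the keys and values."""
    q = matvec(Wq, query)
    keys = [matvec(Wk, c) for c in cond_tokens]
    values = [matvec(Wv, c) for c in cond_tokens]
    scale = math.sqrt(len(q))
    weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]

def timestep_embedding(t, dim):
    """Sinusoidal timestep embedding of even size dim, as in common diffusion U-Nets."""
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]

def double_condition(query, cond_tokens, t, Wq, Wk, Wv, W_time):
    """Hypothetical double conditioning: path 1 is cross-attention; path 2 adds a
    projection of the mean-pooled fMRI tokens to the timestep embedding."""
    attended = cross_attention(query, cond_tokens, Wq, Wk, Wv)
    pooled = [sum(col) / len(cond_tokens) for col in zip(*cond_tokens)]
    t_emb = timestep_embedding(t, len(W_time))
    t_emb = [a + b for a, b in zip(t_emb, matvec(W_time, pooled))]
    return attended, t_emb

# Tiny usage example with identity projections (hypothetical values).
I2 = [[1.0, 0.0], [0.0, 1.0]]
attended, t_emb = double_condition([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], 0, I2, I2, I2, I2)
```

Because the condition reaches the network twice, a weak signal in one path can still be picked up through the other. In a real LDM both paths would be learned layers inside the U-Net rather than fixed matrices.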

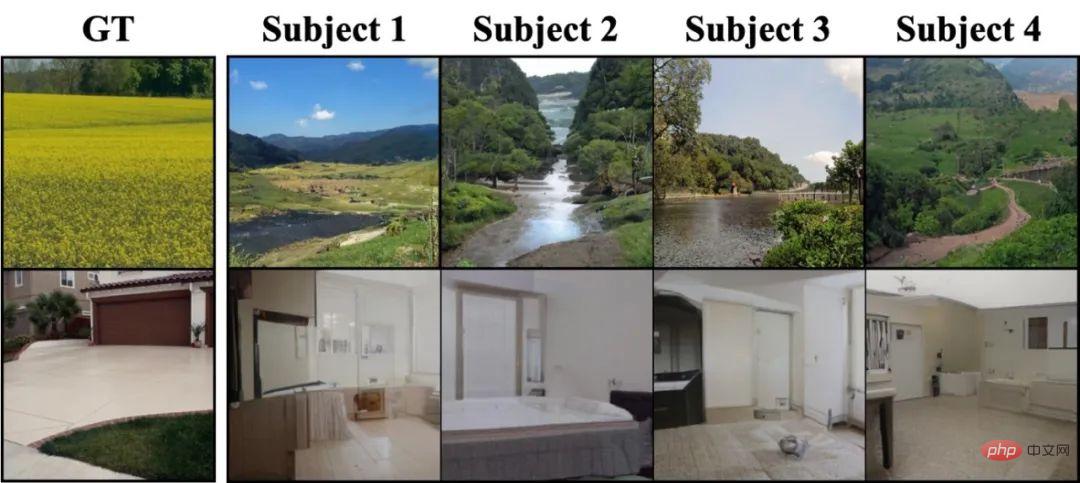

Surprisingly, MinD-Vis can decode details that do not appear in the ground-truth image but are highly relevant to its content. For example, when the image is a natural landscape, MinD-Vis decodes a river and a blue sky; when it is a house, MinD-Vis decodes similar interior decoration. This cuts both ways: on the positive side, it shows that we may be able to decode what we imagine; on the negative side, it may complicate the evaluation of decoding results.

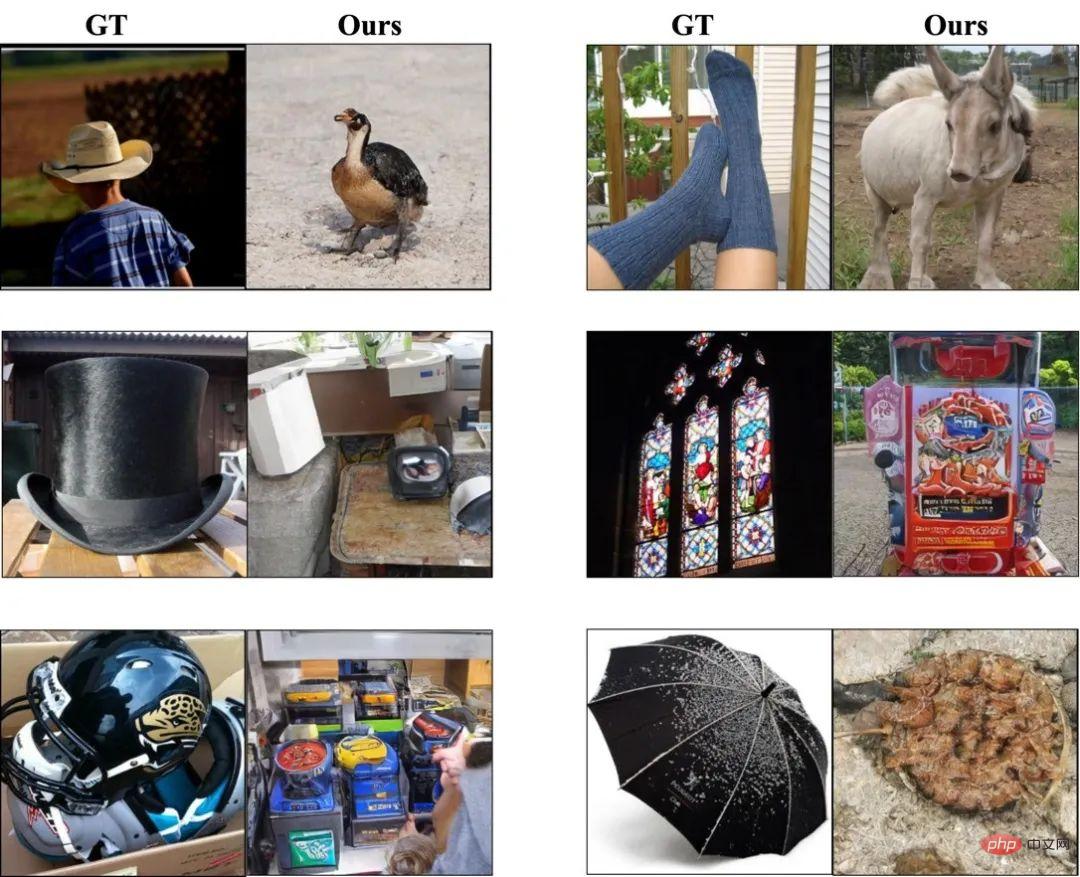

Failure cases

The authors believe that when training samples are scarce, some stimuli are harder to decode than others. For example, the GOD dataset contains more training samples of animals than of clothing, so a stimulus with a semantic feature like "furry" is more likely to be decoded as an animal than as a garment, as in the image above, where a sock is decoded as a sheep.

Experimental setting

Dataset

The authors used three public datasets.

Model structure

The model architecture (a ViT and a diffusion model) mainly follows prior work; see the original paper for parameter details. Following prior work, the authors also adopt an asymmetric architecture: the encoder learns meaningful fMRI representations, while the decoder predicts the masked patches. As in earlier designs, the decoder is kept smaller than the encoder and is discarded after pre-training.
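The decoder's task of predicting the masked patches is usually trained with a reconstruction loss restricted to those patches, as in MAE. A minimal sketch, assuming a plain mean-squared error on raw voxel values; whether SC-MBM normalizes targets or weights patches differently is not stated here, and `masked_mse` is a hypothetical helper name.

```python
def masked_mse(pred_patches, target_patches, masked_idx):
    """Mean squared error over the masked patches only (MAE convention).
    Visible patches are ignored: the encoder already saw them, so
    reconstructing them would not force it to learn useful structure."""
    if not masked_idx:
        raise ValueError("need at least one masked patch")
    total, count = 0.0, 0
    for i in masked_idx:
        for p, y in zip(pred_patches[i], target_patches[i]):
            total += (p - y) ** 2
            count += 1
    return total / count

# Toy example: patch 1 is badly reconstructed, patch 2 is off by one voxel.
target = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
pred = [[1.0, 2.0], [0.0, 0.0], [5.0, 7.0]]
print(masked_mse(pred, target, [2]))     # 0.5
print(masked_mse(pred, target, [1, 2]))  # 6.5
```

Since only the small decoder produces these predictions, it can be thrown away after pre-training while the encoder keeps what it learned.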

Evaluation metrics

Following previous work, the authors use n-way top-1 and top-5 classification accuracy to evaluate the semantic correctness of the results. In each trial, the correct category and n-1 randomly selected categories are scored, and the trial counts as correct if the correct category ranks first (top-1) or within the top five (top-5); accuracy is averaged over many trials. Unlike previous methods, which rely on handcrafted features, the authors use a more direct and reproducible approach: a pre-trained ImageNet-1K classifier judges the semantic correctness of the generated images. They also report the Fréchet inception distance (FID) as a reference for image quality, although, because the dataset contains a limited number of images, FID may not fully capture the image distribution.
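The n-way top-k procedure described above can be sketched as follows. This assumes `probs` holds the class probabilities that a classifier assigned to one generated image (hypothetical toy values here, not ImageNet-1K outputs). The trial count and seed are illustrative choices, and `n_way_top_k` is a hypothetical helper name.

```python
import random

def n_way_top_k(probs, true_class, n, k, trials=1000, seed=0):
    """Fraction of trials in which the true class ranks within the top k among
    itself plus n-1 randomly drawn distractor classes, scored by probs."""
    rng = random.Random(seed)
    others = [c for c in range(len(probs)) if c != true_class]
    hits = 0
    for _ in range(trials):
        candidates = [true_class] + rng.sample(others, n - 1)
        # Stable sort: on exact ties the true class (listed first) wins.
        ranked = sorted(candidates, key=lambda c: probs[c], reverse=True)
        if true_class in ranked[:k]:
            hits += 1
    return hits / trials

# Toy 3-class classifier output for one generated image.
print(n_way_top_k([0.1, 0.6, 0.3], true_class=1, n=2, k=1))  # 1.0
print(n_way_top_k([0.5, 0.3, 0.2], true_class=2, n=3, k=1))  # 0.0
```

Restricting the comparison to n candidates makes the score easier to interpret than raw 1000-class accuracy: chance level for top-1 is simply 1/n.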

Results

The experiments are conducted at the individual level: each model is trained and tested on the same subject. For comparison with previous work, results are reported for the third subject of the GOD dataset; results for the other subjects are listed in the appendix.

Through this project, the authors demonstrated the feasibility of recovering visual information from the human brain through fMRI. However, many issues in this field remain open, such as how to better handle differences between individuals, how to reduce the impact of noise and interference on decoding, and how to combine fMRI decoding with other neuroscience techniques to gain a more comprehensive understanding of the mechanisms and functions of the human brain. At the same time, we also need to better understand and respect the ethical and legal issues surrounding the human brain and personal privacy.

We also need to explore wider applications, such as medicine and human-computer interaction, to turn this technology into practical use. In medicine, fMRI decoding may in the future help groups such as visually impaired people, hearing-impaired people and even fully paralyzed patients to communicate their thoughts. Because of their physical disabilities, these people cannot express their thoughts and wishes through traditional means of communication. By decoding their brain activity with fMRI, scientists could access those thoughts and wishes, allowing others to communicate with them more naturally and efficiently. In human-computer interaction, fMRI decoding could be used to build more intelligent and adaptive interfaces and control systems, for example by decoding the user's brain activity to enable more natural and efficient interaction.

We believe that, supported by large-scale datasets and the computing power of large models, fMRI decoding will have a broad and far-reaching impact, advancing both cognitive neuroscience and artificial intelligence.

Note: *Biological basis for learning visual stimulus representations with sparse coding: Sparse coding has been proposed as a strategy for representing sensory information. Research shows that visual stimuli are sparsely encoded in the visual cortex, which increases the efficiency of information transmission and reduces redundancy in the brain. Using fMRI, the visual content of natural scenes can be reconstructed from small amounts of data collected in the visual cortex. Sparse coding can also be an efficient coding scheme in computer vision. The SC-MBM method described in the article divides fMRI data into small patches to introduce locality constraints, and then sparsely encodes each patch into a high-dimensional vector space, yielding a biologically valid and efficient brain feature learner for visual encoding and decoding.
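The patch-to-high-dimensional mapping in the note can be illustrated with a shared linear projection applied to each patch independently. This is a minimal sketch, not SC-MBM itself: a fixed random matrix stands in for the learned embedding, `embed_dim=32` for 4-voxel patches is a hypothetical expansion ratio, and no sparsity penalty is applied. It shows only the two structural properties the note describes: each embedding depends only on its own patch (locality), and a few voxels are expanded into many dimensions.

```python
import random

def embed_patches(patches, embed_dim, seed=0):
    """Project every fMRI patch (length p) into an embed_dim vector using one
    shared linear map W. Each output depends only on its own patch, and
    embed_dim > p expands the representation into a higher-dimensional space."""
    p = len(patches[0])
    rng = random.Random(seed)
    # Random stand-in for learned weights, scaled by 1/sqrt(p).
    W = [[rng.gauss(0.0, 1.0 / p ** 0.5) for _ in range(p)] for _ in range(embed_dim)]
    return [[sum(w * x for w, x in zip(row, patch)) for row in W] for patch in patches]

patches = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0], [0.5, 0.5, 0.5, 0.5]]
emb = embed_patches(patches, embed_dim=32)
print(len(emb), len(emb[0]))  # 3 32
```

Changing one patch leaves the embeddings of all other patches untouched, which is the locality constraint that patch-wise encoding introduces.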
