Is a lightweight ChatGPT built on Meta's model already here?

Just three days after Meta announced LLaMA, an open source training method for turning it into a ChatGPT-style model appeared, claiming training speeds up to 15 times faster than ChatGPT.

LLaMA is a fast, compact GPT-3-class language model released by Meta. It has only about 10% as many parameters as GPT-3 and needs just a single GPU to run.
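To make the size comparison concrete, here is a rough back-of-the-envelope sketch. It assumes the LLaMA variant meant is the 13B one and that weights are stored in 16-bit precision; both are assumptions, and the helper name `weight_memory_gb` is made up for illustration. It counts weights only, so training (gradients, optimizer states) would need considerably more memory.

```python
# Rough estimate of memory needed just to hold model weights.
# Assumptions (not from the article): LLaMA-13B is the variant meant,
# and each parameter is stored in 2 bytes (fp16/bf16).

GPT3_PARAMS = 175e9       # GPT-3 parameter count
LLAMA_13B_PARAMS = 13e9   # LLaMA-13B parameter count


def weight_memory_gb(num_params, bytes_per_param=2):
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Fraction of GPT-3's parameter count
ratio = LLAMA_13B_PARAMS / GPT3_PARAMS

print(f"LLaMA-13B / GPT-3 parameter ratio: {ratio:.1%}")
print(f"LLaMA-13B weights: {weight_memory_gb(LLAMA_13B_PARAMS):.0f} GB")
print(f"GPT-3 weights:     {weight_memory_gb(GPT3_PARAMS):.0f} GB")
```

Under these assumptions the ratio comes out somewhat below one tenth, which is consistent with the "about 10%" figure quoted above.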

The method for turning it into a ChatGPT-style model is called ChatLLaMA. It trains with RLHF (reinforcement learning from human feedback) and quickly became popular online.

So is Meta's open source version of ChatGPT really on its way?

Wait a minute, things are not that simple.

Open the ChatLLaMA project homepage and you will find that it actually combines four parts: DeepSpeed, the RLHF method, LLaMA, and datasets generated with LangChain agents.

Among them, DeepSpeed is an open source deep learning training optimization library. It includes an existing optimization technique called ZeRO, which improves the ability to train large models, specifically by speeding up training, lowering cost, and making large models more usable.
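As a rough illustration of how ZeRO is switched on, DeepSpeed reads its settings from a JSON configuration. The sketch below builds such a configuration as a Python dictionary. The keys are standard DeepSpeed configuration keys, but every value is an illustrative assumption and none of it is taken from the ChatLLaMA repository.

```python
import json

# Illustrative DeepSpeed configuration; all values are assumptions chosen
# for the example, not the settings ChatLLaMA actually uses.
ds_config = {
    "train_micro_batch_size_per_gpu": 1,   # samples per GPU per step
    "gradient_accumulation_steps": 8,      # steps accumulated before an update
    "fp16": {"enabled": True},             # mixed-precision training
    "zero_optimization": {
        "stage": 2,                        # partition optimizer states and gradients
        "offload_optimizer": {"device": "cpu"},  # keep optimizer states in CPU memory
    },
}

# Serialize to the JSON text that would be saved as a DeepSpeed config file.
config_json = json.dumps(ds_config, indent=2)
print(config_json)
```

ZeRO stage 1 partitions only optimizer states across GPUs, stage 2 also partitions gradients, and stage 3 additionally partitions the model parameters, trading communication for lower memory use per GPU.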

RLHF uses a reward model to fine-tune the pre-trained model. Several models first generate answers to a set of questions, and human annotators rank those answers so that the reward model can learn to score them. The trained reward model then scores the answers generated by the model being fine-tuned, and reinforcement learning uses those scores to improve that model.
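The reward-model step can be sketched in a few lines. The example below assumes the commonly used pairwise ranking loss, in which a human ranking is split into (better, worse) pairs and the reward model is penalized whenever it scores the worse answer higher. The article does not say which loss ChatLLaMA uses, and the answers and scores here are made-up placeholders.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_answers):
    """Turn a human ranking (best first) into (better, worse) pairs."""
    return list(combinations(ranked_answers, 2))


def pairwise_ranking_loss(score_better, score_worse):
    """Compute -log(sigmoid(score_better - score_worse)) in a stable way."""
    diff = score_better - score_worse
    # -log(sigmoid(d)) equals softplus(-d) = max(-d, 0) + log(1 + exp(-|d|))
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))


# Hypothetical answers to one question, ranked best to worst by an annotator.
ranked = ["answer_a", "answer_b", "answer_c"]

# Hypothetical scores from an untrained reward model (placeholder numbers).
scores = {"answer_a": 0.2, "answer_b": 1.1, "answer_c": -0.5}

pairs = ranking_to_pairs(ranked)
losses = [pairwise_ranking_loss(scores[b], scores[w]) for b, w in pairs]
mean_loss = sum(losses) / len(losses)

print(pairs)
print(f"mean ranking loss: {mean_loss:.4f}")
```

Minimizing this loss pushes the reward model to score human-preferred answers higher. In the reinforcement learning stage, those scores then serve as the reward signal for the model being fine-tuned.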

LangChain is a library for developing large language model applications. It aims to integrate various large language models and combine them with other knowledge sources or computing capabilities to build practical applications. A LangChain agent exposes GPT-3's whole reasoning process, much like a chain of thought, and records the operations it performs.

At this point you will notice that the most critical piece is still the LLaMA model weights. Where do they come from?

You have to apply to Meta for them yourself; ChatLLaMA does not provide them. (Although Meta describes LLaMA as open source, access still requires an application.)

So in essence, ChatLLaMA is not an open source ChatGPT project, just a training method built on LLaMA. The projects its library integrates were all already open source.

In fact, ChatLLaMA was not built by Meta but by an AI start-up called Nebuly AI.

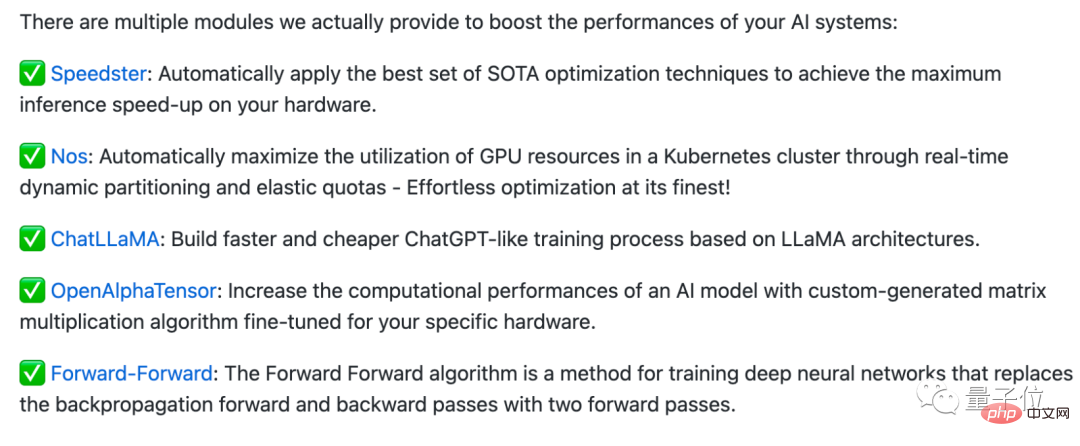

Nebuly AI has made an open source library called Nebullvm, which integrates a series of plug-and-play optimization modules to improve AI system performance.

For example, the modules currently in Nebullvm include OpenAlphaTensor, based on DeepMind's open source AlphaTensor algorithm, and optimization modules that automatically detect the hardware and accelerate for it...

ChatLLaMA is one of these modules too, but note that its open source license does not permit commercial use.

So if you were hoping to pick it up directly as a "domestic self-developed ChatGPT", it may not be that simple (doge).

After reading about the project, some netizens said it would be great if someone actually got hold of the LLaMA model weights (code)...

But some netizens also pointed out that the claim of being "15 times faster than the ChatGPT training method" is purely misleading:

The so-called 15 times faster is just because the LLaMA model itself is very small and can even run on a single GPU. It shouldn't be because of anything this project did, right?

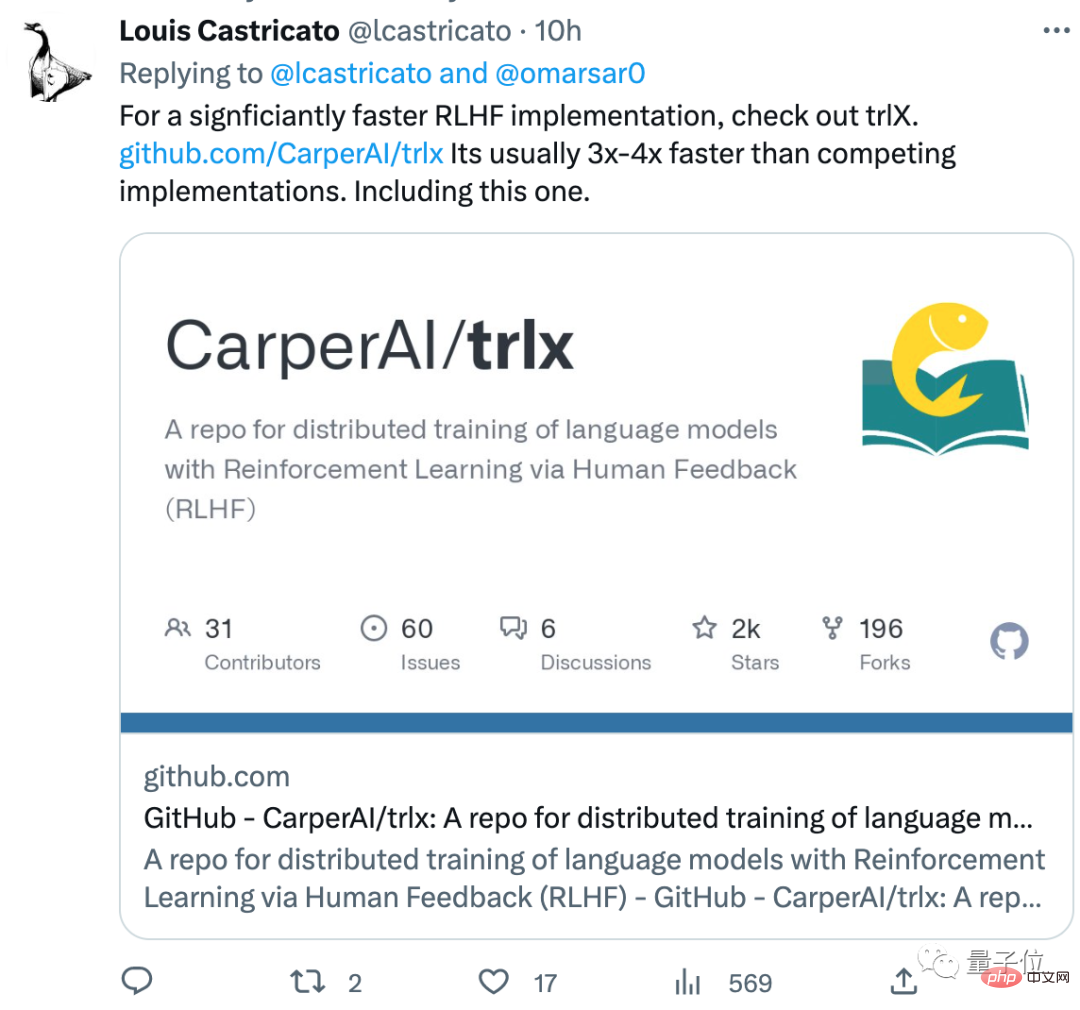

This netizen also recommended an RLHF training library called trlx, said to be better than the one in this project, with training 3 to 4 times faster than the usual RLHF method.

Have you got the code for LLaMA yet? What do you think of this training method?

ChatLLaMA address://m.sbmmt.com/link/fed537780f3f29cc5d5f313bbda423c4

Reference link://m.sbmmt.com/link/fe27f92b1e3f4997567807f38d567a35
