Has AI advanced again?

And this time it generates a continuous 30-second video from a single picture.

Hmm... isn't the quality a bit blurry?

Keep in mind, though, that the whole video is generated from a single image (the first frame), without any explicit geometric information.

This is Transframer, a general framework for image modeling and vision tasks based on probabilistic frame prediction, recently proposed by DeepMind.

Put simply, Transframer predicts a probability distribution over any frame.

These predictions can be conditioned on one or more annotated context frames, such as previous video frames, time-stamped frames, or camera-labeled views of a scene.
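To make "annotated context frames" concrete, here is a minimal sketch of how such a conditioning set might be represented. The class and function names (`Annotation`, `AnnotatedFrame`, `FramePredictionQuery`, `make_video_query`, `make_view_query`) are hypothetical and chosen only for illustration; the paper does not prescribe this data layout.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

# Hypothetical types for illustration only.
Image = List[List[float]]  # a tiny grayscale image as rows of pixels


@dataclass
class Annotation:
    """Per-frame annotation: a timestamp, a camera pose, or both (assumed fields)."""
    timestamp: Optional[float] = None
    camera_pose: Optional[Tuple[float, ...]] = None


@dataclass
class AnnotatedFrame:
    image: Image
    annotation: Annotation


@dataclass
class FramePredictionQuery:
    """A context set {(I_n, a_n)}_n plus the annotation of the frame to predict."""
    context: List[AnnotatedFrame]
    target_annotation: Annotation


def make_video_query(frames: Sequence[Image], fps: float) -> FramePredictionQuery:
    """Video prediction: context frames carry timestamps; the target is the next time step."""
    context = [AnnotatedFrame(img, Annotation(timestamp=i / fps)) for i, img in enumerate(frames)]
    return FramePredictionQuery(context, Annotation(timestamp=len(frames) / fps))


def make_view_query(views: Sequence[Tuple[Image, Tuple[float, ...]]],
                    target_pose: Tuple[float, ...]) -> FramePredictionQuery:
    """View synthesis: context views carry camera poses; the target is a new pose."""
    context = [AnnotatedFrame(img, Annotation(camera_pose=pose)) for img, pose in views]
    return FramePredictionQuery(context, Annotation(camera_pose=target_pose))
```

The same query type covers both video prediction and view synthesis; only the kind of annotation changes, which mirrors the idea of a single model conditioned on differently annotated frames.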

Let’s first take a look at how this magical Transframer architecture works.

The paper is linked below for anyone interested: https://arxiv.org/abs/2203.09494

To estimate a predictive distribution over a target image, we need an expressive generative model that can produce diverse, high-quality output.

DC Transformer meets this need in the single-image domain, but it does not support conditioning on the set of annotated context images $\{(I_n, a_n)\}_n$ that we require, where $I_n$ is an image and $a_n$ its annotation.

Therefore, we extended DC Transformer to support prediction conditioned on both images and annotations.

Specifically, we replace DC Transformer's Vision-Transformer-style encoder, which operates on a single DCT image, with a multi-frame U-Net architecture that processes a set of annotated frames together with a partially masked target DCT image.
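The DCT images mentioned above are turned into sparse token sequences before the decoder sees them. The sketch below shows the general idea in a simplified form, assuming coefficients have already been quantized into a `coeffs[channel][position]` grid: only non-zero entries are kept, as `(channel, position, value)` triples. The function names are hypothetical, and the raster ordering of tokens is a simplification rather than the exact ordering DC Transformer uses.

```python
from typing import List, Tuple

Token = Tuple[int, int, int]  # (channel, position, value)


def to_sparse_tokens(coeffs: List[List[int]]) -> List[Token]:
    """coeffs[c][p] is the quantized DCT coefficient for channel c at block position p.

    Only non-zero coefficients are emitted, so sparse images give short sequences."""
    return [(c, p, v)
            for c, channel in enumerate(coeffs)
            for p, v in enumerate(channel)
            if v != 0]


def from_sparse_tokens(tokens: List[Token], num_channels: int, num_positions: int) -> List[List[int]]:
    """Rebuild the dense coefficient grid; every position without a token is zero."""
    dense = [[0] * num_positions for _ in range(num_channels)]
    for c, p, v in tokens:
        dense[c][p] = v
    return dense


coeffs = [[5, 0, 0], [0, -2, 0]]
tokens = to_sparse_tokens(coeffs)
```

The sequence length grows with the number of non-zero coefficients rather than with the number of pixels, which is why sparsity matters so much for this family of models.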

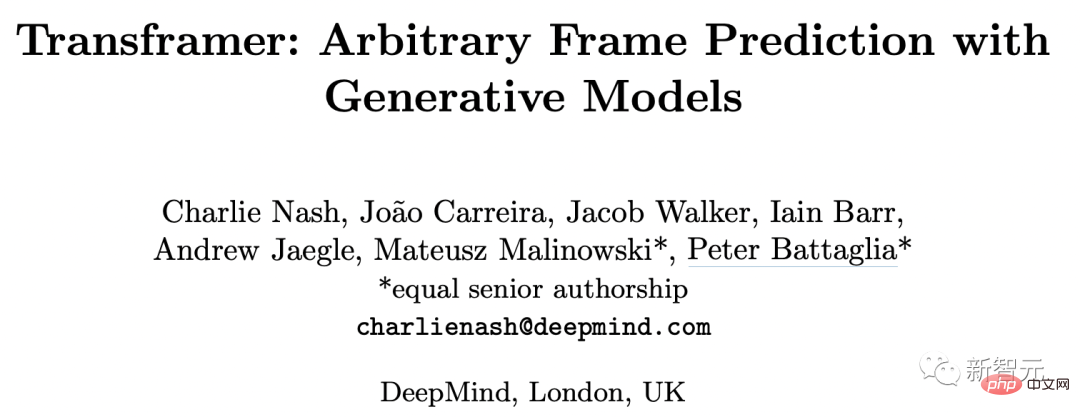

Here is how the Transframer architecture works:

(a) Transframer takes as input the DCT images (a1 and a2) together with the partially masked target DCT image (aT) and additional annotations, all of which are processed by the multi-frame U-Net encoder. The U-Net outputs are then passed to the DC-Transformer decoder through cross-attention, and the decoder autoregressively generates a sequence of DCT tokens (green letters) corresponding to the masked part of the target image. (b) A multi-frame U-Net block consists of NF-Net convolution blocks, multi-frame self-attention blocks that exchange information across input frames, and a Transformer-style residual MLP.
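The autoregressive decoding step in (a) can be sketched as a simple sampling loop. This is a minimal illustration, not the paper's decoder: `next_token_probs` is a hypothetical stand-in for the DC-Transformer decoder, `memory` stands for the U-Net output it would read through cross-attention, and tokens are plain integers with an assumed end-of-sequence id.

```python
import random
from typing import Callable, Dict, List, Sequence

# Hypothetical decoder interface: (encoder memory, tokens so far) -> {token: probability}.
NextTokenFn = Callable[[Sequence[float], List[int]], Dict[int, float]]


def sample_masked_tokens(next_token_probs: NextTokenFn, memory: Sequence[float],
                         num_tokens: int, eos: int, rng: random.Random) -> List[int]:
    """Sample tokens for the masked part of the target image, one at a time."""
    generated: List[int] = []
    for _ in range(num_tokens):
        probs = next_token_probs(memory, generated)
        tokens = list(probs)
        token = rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]
        if token == eos:  # the decoder signals the sequence is complete
            break
        generated.append(token)  # each sampled token conditions the next step
    return generated


def toy_decoder(memory: Sequence[float], prefix: List[int]) -> Dict[int, float]:
    """Toy rule for illustration: emit 1, 2, 3, ... up to sum(memory), then end token 0."""
    if len(prefix) < int(sum(memory)):
        return {len(prefix) + 1: 1.0}
    return {0: 1.0}


result = sample_masked_tokens(toy_decoder, memory=[1.0, 2.0], num_tokens=10, eos=0,
                              rng=random.Random(0))
```

Because each step samples from a distribution, running the loop repeatedly with a real model yields different plausible completions of the same masked target, which is what makes the model probabilistic.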

Next, let's look at the multi-frame U-Net that processes the image inputs.

The input to the U-Net is a sequence consisting of N DCT frames and the partially masked target DCT frame. Annotation information associated with each input frame is provided in vector form.

The core component of the U-Net is a computational block that first applies a shared NF-ResNet convolution block to each input frame, then applies a Transformer-style self-attention block to aggregate information across frames (Figure 2b).
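The "shared per-frame op, then mix across frames" pattern can be sketched as follows. This is an assumption-laden simplification: attention here runs independently at each spatial position across the N frames, with a single head and no learned query/key/value projections, and the per-frame op is any function you pass in. The real block's attention layout and parameters may differ.

```python
import math
from typing import Callable, List

Vector = List[float]
Frame = List[Vector]  # one feature vector per spatial position


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_frame_attention(frames: List[Frame]) -> List[Frame]:
    """At each spatial position, every frame attends to all frames at that position.

    Simplification: single head, no learned query/key/value projections."""
    num_frames, num_positions, dim = len(frames), len(frames[0]), len(frames[0][0])
    out: List[Frame] = [[[] for _ in range(num_positions)] for _ in range(num_frames)]
    for p in range(num_positions):
        feats = [frames[f][p] for f in range(num_frames)]
        for f in range(num_frames):
            scores = [sum(q * k for q, k in zip(feats[f], feats[g])) / math.sqrt(dim)
                      for g in range(num_frames)]
            weights = softmax(scores)
            mixed = [sum(w * feats[g][d] for g, w in enumerate(weights)) for d in range(dim)]
            out[f][p] = [x + y for x, y in zip(feats[f], mixed)]  # residual connection
    return out


def multi_frame_block(frames: List[Frame], per_frame: Callable[[Frame], Frame]) -> List[Frame]:
    """Apply one shared per-frame op (same weights for every frame), then mix across frames."""
    return cross_frame_attention([per_frame(frame) for frame in frames])


def double(frame: Frame) -> Frame:
    """Stand-in for the shared convolution block."""
    return [[2.0 * x for x in vec] for vec in frame]


out = multi_frame_block([[[1.0]], [[1.0]]], double)
```

Sharing the per-frame op keeps the parameter count independent of N, while the attention step is the only place where frames exchange information.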

The NF-ResNet blocks consist of grouped convolutions and squeeze-and-excitation layers, a design chosen to improve performance on TPUs.
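For readers unfamiliar with squeeze-and-excitation, here is a minimal sketch of the standard technique on a `channels x height x width` feature map. It is a simplification: biases are omitted, the weights are supplied by hand instead of being learned, and the grouped convolutions that surround it in NF-ResNet are not shown.

```python
import math
from typing import List

Matrix = List[List[float]]
FeatureMap = List[Matrix]  # channels x height x width


def matvec(w: Matrix, x: List[float]) -> List[float]:
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def squeeze_excite(fmap: FeatureMap, w_reduce: Matrix, w_expand: Matrix) -> FeatureMap:
    """Squeeze-and-excitation without biases: w_reduce is (C/r x C), w_expand is (C x C/r)."""
    # Squeeze: global average pooling turns each channel into a single number.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excite: a small bottleneck MLP produces one gate in (0, 1) per channel.
    hidden = [max(0.0, h) for h in matvec(w_reduce, squeezed)]
    gates = [1.0 / (1.0 + math.exp(-g)) for g in matvec(w_expand, hidden)]
    # Scale: reweight every channel by its gate.
    return [[[v * gate for v in row] for row in ch] for ch, gate in zip(fmap, gates)]


fmap = [[[2.0, 4.0]], [[6.0, 8.0]]]  # 2 channels, each 1 x 2
halved = squeeze_excite(fmap, w_reduce=[[0.0, 0.0]], w_expand=[[0.0], [0.0]])
```

With all-zero weights every gate is sigmoid(0) = 0.5, so each channel is simply halved; with learned weights the gates let the network emphasize informative channels at very little compute cost.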

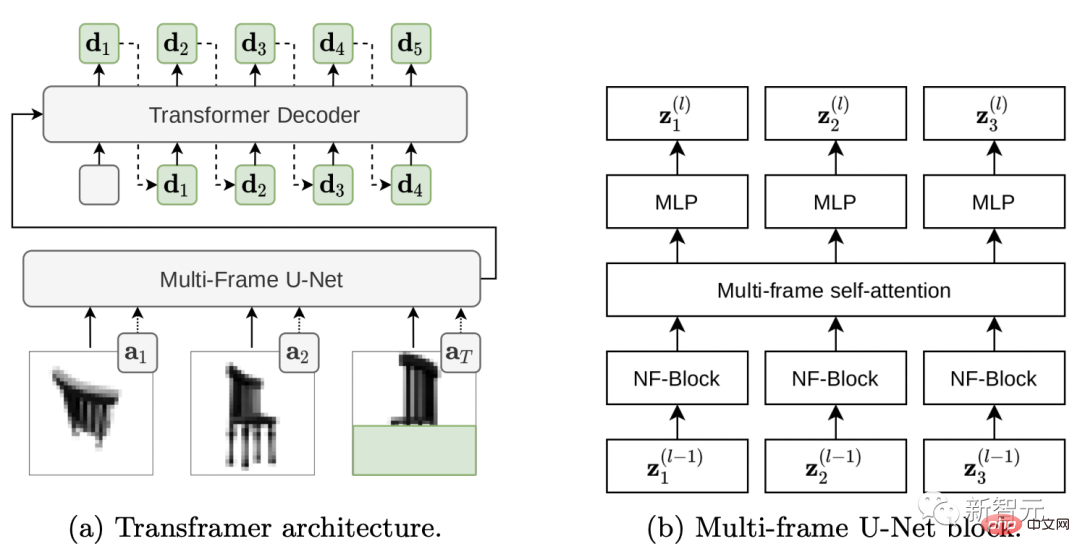

Figure (a) compares the sparsity of absolute and residual DCT representations for RoboNet (128×128) and KITTI videos.

Because RoboNet consists of static videos with only a few moving elements, the residual frame representation is significantly sparser.

KITTI videos, in contrast, often have a moving camera, so consecutive frames differ almost everywhere.

In this case, the sparsity benefit of the residual representation is correspondingly smaller.
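The effect of camera motion on residual sparsity can be reproduced with a toy experiment. This sketch assumes a single 8×8 block, an orthonormal 2-D DCT-II (the transform JPEG uses), uniform quantization with a hypothetical step of 16, and a made-up high-frequency `texture`; it is not RoboNet or KITTI data. It counts the quantized coefficients that survive for an absolute frame and for frame differences.

```python
import math
from typing import List

Block = List[List[float]]


def dct2(block: Block) -> Block:
    """Orthonormal 2-D DCT-II of an N x N block."""
    n = len(block)

    def alpha(k: int) -> float:
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = alpha(u) * alpha(v) * s
    return out


def count_nonzero(coeffs: Block, step: float = 16.0) -> int:
    """Number of coefficients that survive uniform quantization with the given step."""
    return sum(1 for row in coeffs for c in row if round(c / step) != 0)


def texture(shift: int, n: int = 8) -> Block:
    """Hypothetical high-frequency pattern; `shift` pans it horizontally by whole pixels."""
    return [[float(((x * 3 + (y + shift) * 5) % 16) * 16) for y in range(n)] for x in range(n)]


def residual(curr: Block, prev: Block) -> Block:
    return [[c - p for c, p in zip(rc, rp)] for rc, rp in zip(curr, prev)]


prev = texture(0)
static_next = texture(0)  # static camera, nothing moves (RoboNet-like extreme)
panned_next = texture(1)  # camera pans by one pixel (KITTI-like)
absolute = count_nonzero(dct2(static_next))
static_residual = count_nonzero(dct2(residual(static_next, prev)))
panned_residual = count_nonzero(dct2(residual(panned_next, prev)))
```

With a static camera the residual is exactly zero and costs no tokens at all, while a one-pixel pan makes the residual non-zero everywhere, so the residual no longer buys much sparsity over the absolute frame.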

Experiments across a series of datasets and tasks show that Transframer can be applied to a wide range of tasks.

These include video modeling, novel view synthesis, semantic segmentation, object recognition, depth estimation, and optical flow prediction.

Video Modeling

Given a sequence of input video frames, Transframer predicts the next frame.

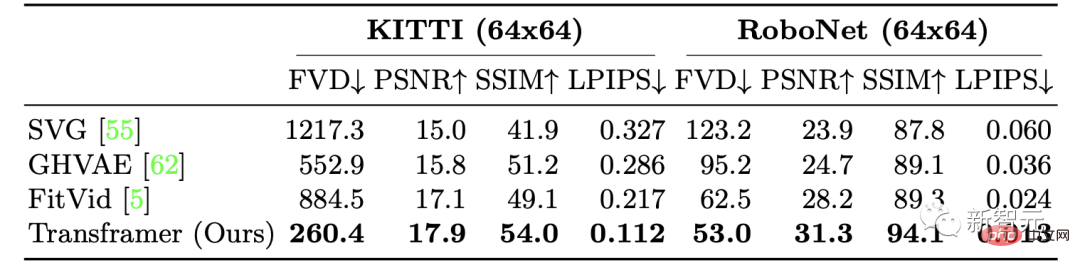

The researchers trained and evaluated Transframer for video generation on the KITTI and RoboNet datasets.

On KITTI, given 5 context frames and sampling 25 frames, Transframer performs better on all metrics, with the most pronounced improvements in LPIPS and FVD.

On RoboNet, the model was given 2 context frames and sampled 10 frames, with training carried out at 64×64 and 128×128 resolution respectively, again achieving very good results.
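Generating many frames from a few context frames works by feeding each prediction back in as input. The sketch below shows that rollout loop with a toy "model" where frames are integers moving linearly. `predict_next` is a hypothetical stand-in for sampling one frame from Transframer, and conditioning only on the most recent `max_context` frames is an assumption made for the illustration.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def rollout(predict_next: Callable[[Sequence[Frame]], Frame],
            context: Sequence[Frame], num_samples: int, max_context: int) -> List[Frame]:
    """Generate num_samples frames one at a time, feeding each prediction back in."""
    history: List[Frame] = list(context)
    generated: List[Frame] = []
    for _ in range(num_samples):
        frame = predict_next(history[-max_context:])  # condition on the most recent frames
        generated.append(frame)
        history.append(frame)  # the sampled frame becomes context for the next step
    return generated


def extrapolate(frames: Sequence[int]) -> int:
    """Toy 'model': frames are integers and motion is linear."""
    return frames[-1] + (frames[-1] - frames[-2])


# KITTI-like setting: 5 context frames, 25 sampled frames.
kitti_like = rollout(extrapolate, context=[0, 1, 2, 3, 4], num_samples=25, max_context=5)
# RoboNet-like setting: 2 context frames, 10 sampled frames.
robonet_like = rollout(extrapolate, context=[10, 12], num_samples=10, max_context=2)
```

Because later frames are conditioned on earlier samples rather than ground truth, errors can accumulate over long rollouts, which is one reason metrics such as FVD are computed over whole sampled sequences.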

View Synthesis

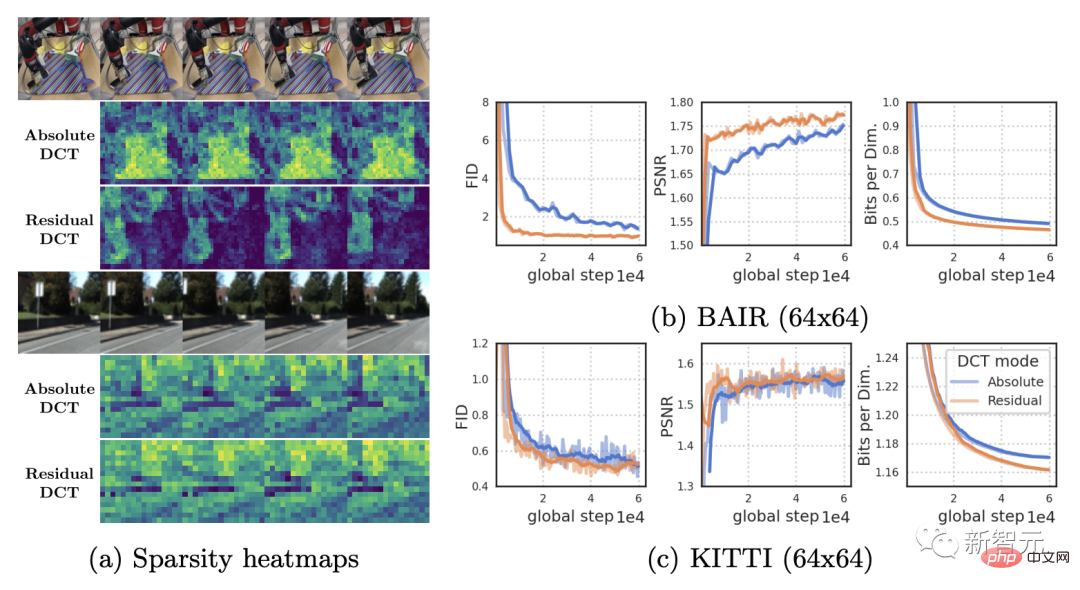

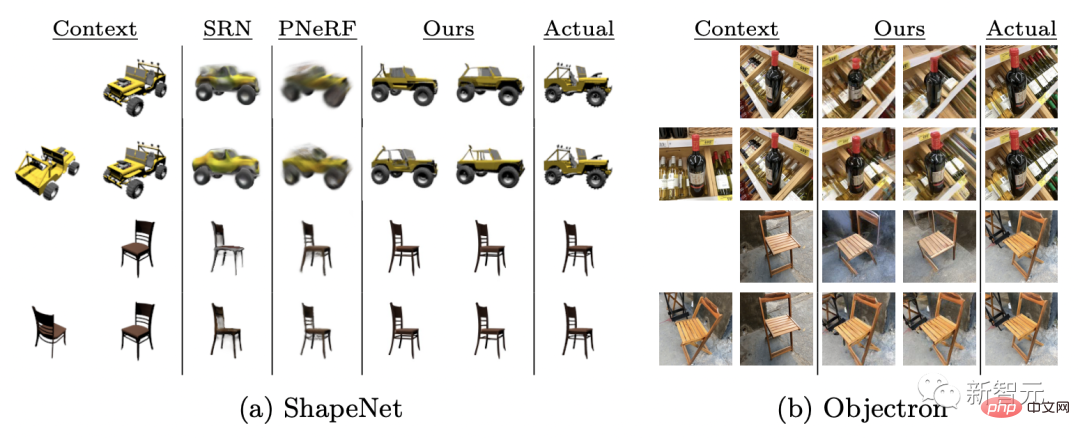

For view synthesis, we provide camera views as context and target annotations as described in Table 1 (row 3), and uniformly sample a number of context views up to a specified maximum.
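One reading of "uniformly sample a number of context views up to a specified maximum" is sketched below: pick a target view, draw the number of context views uniformly from 1 to the maximum, then draw that many distinct other views. The function name and this exact sampling scheme are assumptions for illustration; the paper's training pipeline may differ in the details.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

View = TypeVar("View")


def sample_training_example(views: Sequence[View], max_context: int,
                            rng: random.Random) -> Tuple[List[View], View]:
    """Pick one target view and between 1 and max_context other views as context."""
    if len(views) < 2:
        raise ValueError("need at least one context view and one target view")
    order = list(range(len(views)))
    rng.shuffle(order)
    target = views[order[0]]
    # Number of context views, drawn uniformly (and capped by how many views exist).
    k = rng.randint(1, min(max_context, len(views) - 1))
    context = [views[i] for i in order[1:1 + k]]  # distinct views, never the target
    return context, target


views = [f"view_{i}" for i in range(6)]
context, target = sample_training_example(views, max_context=3, rng=random.Random(0))
```

Training on a varying number of context views lets one model handle both the 1-view and the 2-view evaluation settings.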

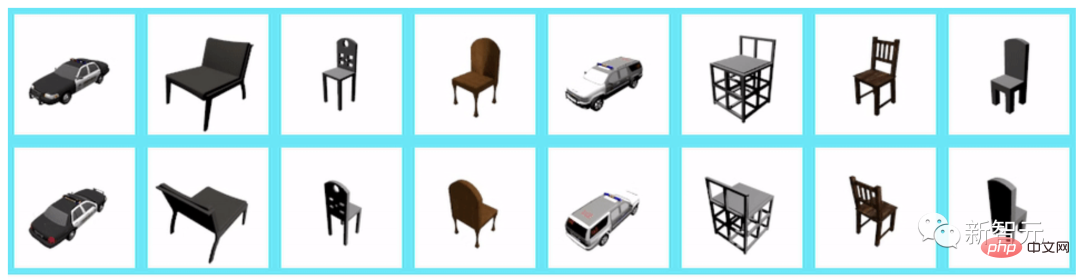

Evaluated on the ShapeNet benchmark with 1 or 2 context views, Transframer significantly outperforms PixelNeRF and SRN.

Evaluation on the Objectron dataset further shows that, given a single input view, the model produces coherent output but misses some features, such as crossed chair legs.

Views synthesized at 128×128 resolution given 1 context view:

Views synthesized at 128×128 resolution given 2 context views:

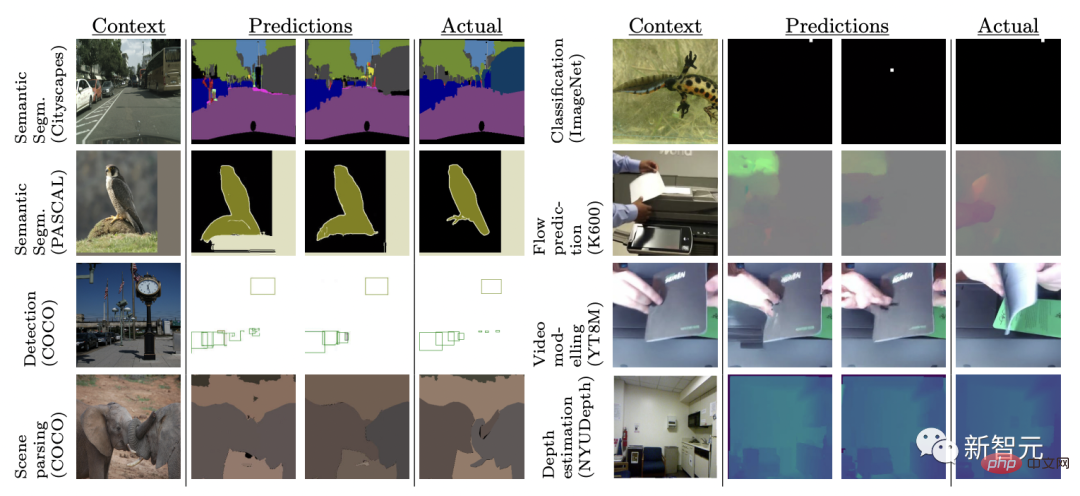

Multiple Vision Tasks

Different computer vision tasks are usually handled with complex, task-specific architectures and loss functions.

Here, the researchers jointly trained a single Transframer model on 8 different tasks and datasets, using the same loss function throughout.

The 8 tasks are: optical flow prediction from a single image, object classification, detection, and segmentation, semantic segmentation (on 2 datasets), future frame prediction, and depth estimation.
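The point of using one loss for every task is that each task is cast as "predict a target image given context frames", so the training objective is always the negative log-likelihood of the target's tokens. Below is a minimal sketch under that assumption: targets are integer token lists, `uniform_model` is a hypothetical placeholder for the network, the task names are illustrative, and averaging per example (rather than per token) is an arbitrary choice for the example.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Example = Tuple[Sequence[object], List[int]]  # (context frames, target tokens)
NextTokenModel = Callable[[Sequence[object], List[int]], Dict[int, float]]


def sequence_nll(model: NextTokenModel, context: Sequence[object], target: List[int]) -> float:
    """Negative log-likelihood of the target tokens, predicted left to right."""
    nll = 0.0
    for i, token in enumerate(target):
        probs = model(context, target[:i])
        nll -= math.log(probs[token])
    return nll


def multitask_loss(model: NextTokenModel, batches: Dict[str, List[Example]]) -> float:
    """Same NLL for every task: no task-specific heads or loss terms."""
    losses = [sequence_nll(model, ctx, tgt)
              for examples in batches.values() for ctx, tgt in examples]
    return sum(losses) / len(losses)


def uniform_model(context: Sequence[object], prefix: List[int]) -> Dict[int, float]:
    """Placeholder model: uniform over a 4-token vocabulary."""
    return {t: 0.25 for t in range(4)}


batches = {
    "semantic_segmentation": [(["rgb_image"], [1, 2])],
    "depth_estimation": [(["rgb_image"], [0, 3])],
    "future_frame_prediction": [(["frame_0", "frame_1"], [2, 2])],
}
loss = multitask_loss(uniform_model, batches)
```

Only the data changes between tasks (a segmentation map, a depth map, or a future frame as the target image), which is what allows one architecture and one loss to cover all eight.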

The results show that Transframer learns to generate diverse samples across completely different tasks. On some tasks, such as Cityscapes, the model produces high-quality output.

However, output quality varies on tasks such as future frame prediction and bounding box detection, suggesting that modeling is more challenging in these settings.
