Technology peripherals

Technology peripherals

AI

AI

Full-body tracking, not afraid of occlusion, two Chinese people from CMU made a DensePose based on WiFi signals

Full-body tracking, not afraid of occlusion, two Chinese people from CMU made a DensePose based on WiFi signals

Full-body tracking, not afraid of occlusion, two Chinese people from CMU made a DensePose based on WiFi signals

Over the past few years, great progress has been made in human pose estimation using 2D and 3D sensors such as RGB sensors, LiDARs or radar, driven by applications such as autonomous driving and VR. However, these sensors have some limitations, both technically and in practical use. First of all, the cost is high, and ordinary families or small businesses often cannot afford LiDAR and radar sensors. Second, these sensors are too power-hungry for daily and household use.

As for RGB cameras, narrow fields of view and poor lighting conditions can severely impact camera-based methods. Occlusions become another obstacle that prevents camera-based models from generating reasonable pose predictions in images. Indoor scenes are particularly difficult, as furniture often blocks people. What's more, privacy concerns hinder the use of these technologies in non-public places, and many people are reluctant to install cameras in their homes to record their actions. But in the medical field, for safety, health and other reasons, many elderly people sometimes have to perform real-time monitoring with the help of cameras and other sensors.

Recently, three researchers from CMU proposed in the paper "DensePose From WiFi" that In some cases, WiFi signals can be used as a substitute for RGB images To conduct human body perception. Lighting and occlusion have little impact on WiFi solutions for indoor surveillance. WiFi signals help protect personal privacy, and the equipment needed is affordable. The key takeaway is that many homes have WiFi installed, so the technology could potentially expand to monitor the health of older adults or identify suspicious behavior in the home.

Paper address: https://arxiv.org/pdf/2301.00250.pdf

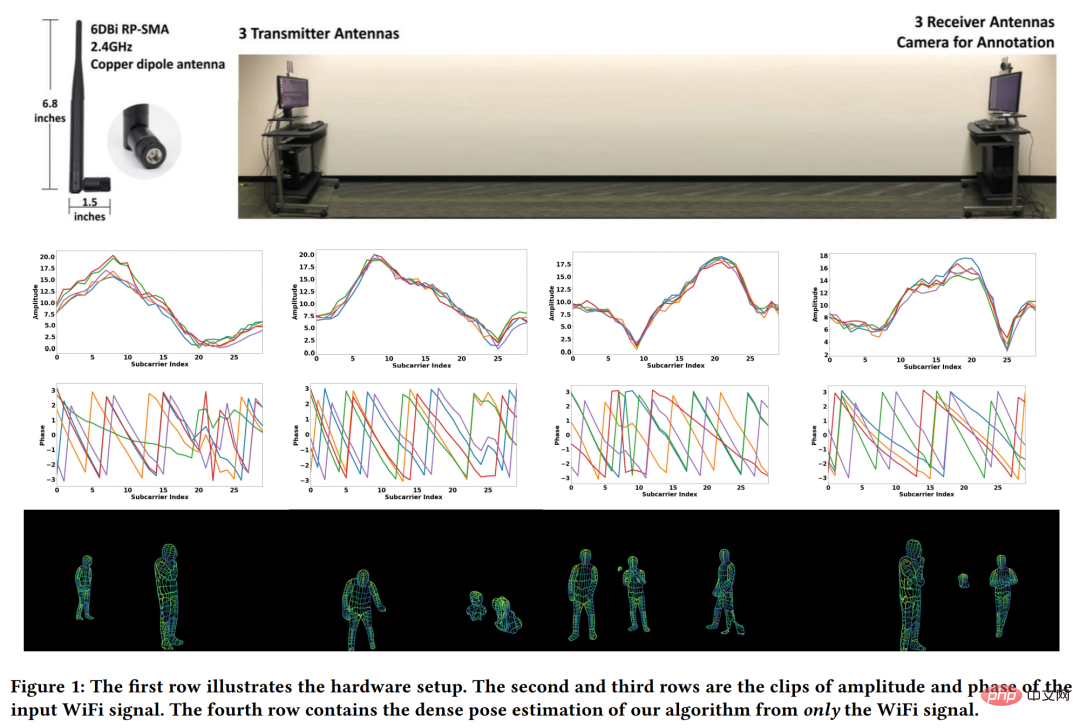

The researcher wants to The problem to be solved is shown in the first row of Figure 1 below. Given 3 WiFi transmitters and 3 corresponding receivers, can dense human posture correspondences be detected and restored in a cluttered environment with multiple people (the fourth row of Figure 1)? It should be noted that many WiFi routers (such as TP-Link AC1750) have 3 antennas, so only 2 such routers are needed in this method. Each router costs about $30, meaning the entire setup is still much cheaper than LiDAR and radar systems.

In order to achieve the effect shown in the fourth row of Figure 1, the researcher got inspiration from the deep learning architecture of computer vision and proposed a that can be executed based on WiFi Neural network architecture for dense pose estimation, and realizes dense pose estimation using only WiFi signals in scenes with occlusion and multiple people.

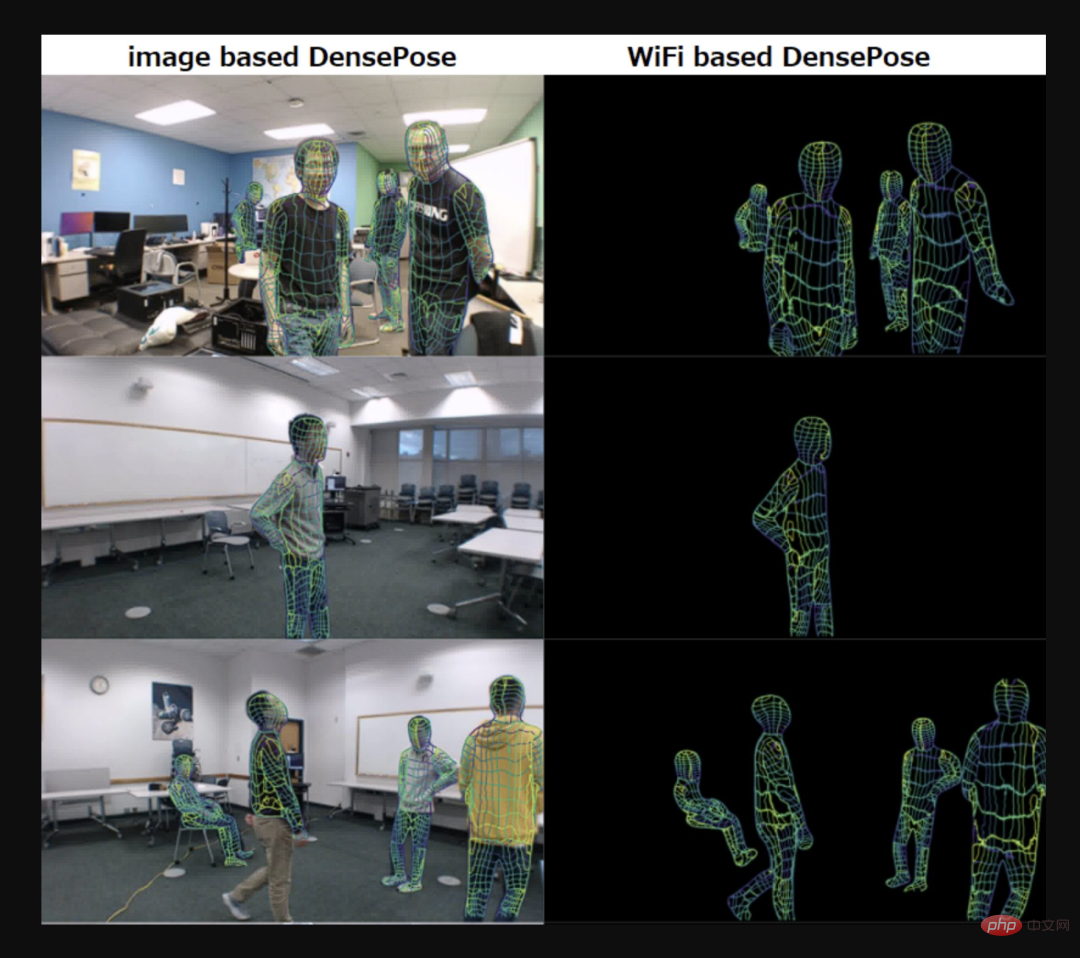

The left picture below shows image-based DensePose, and the right picture shows WiFi-based DensePose.

## Source: Twitter @AiBreakfast

In addition, it is worth mentioning that the first and second authors of the paper are both Chinese. Jiaqi Geng, the first author of the paper, obtained a master's degree in robotics from CMU in August last year, and Dong Huang, the second author, is now a senior project scientist at CMU.

Method Introduction

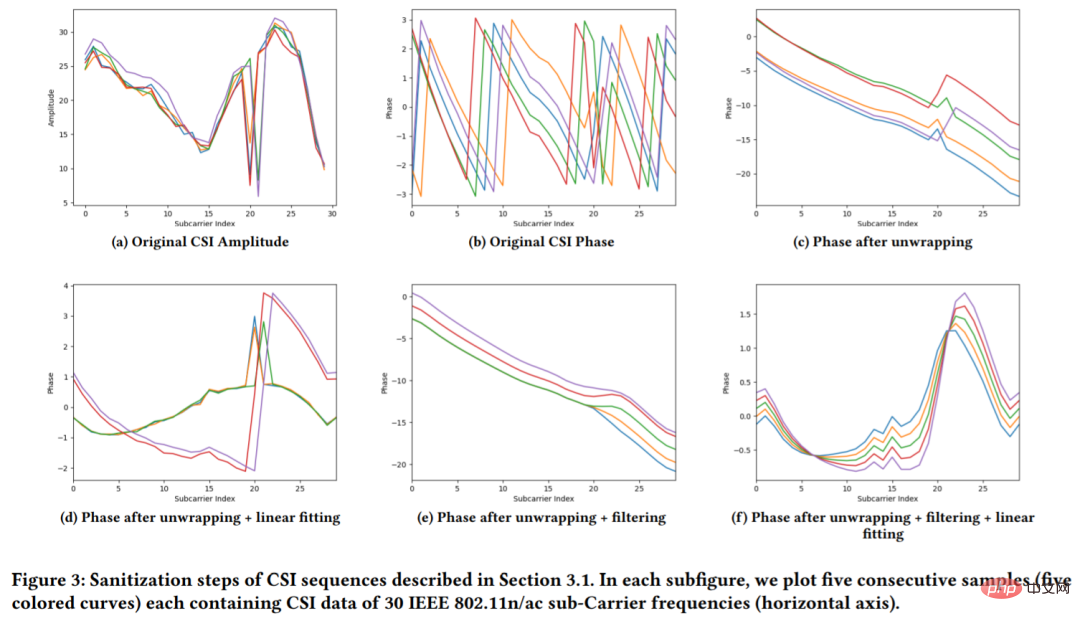

To use WiFi to generate UV coordinates of the human body surface requires three components: First, pass The amplitude and phase steps clean up the original CSI (Channel-state-information, indicating the ratio between the transmitted signal wave and the received signal wave) signal; then, the processed CSI samples are converted through a dual-branch encoder-decoder network is a 2D feature map; then the 2D feature map is fed into an architecture called DensePose-RCNN (mainly converting 2D images into 3D human models) to estimate the UV map.

The original CSI samples are noisy (see Figure 3 (b)), not only that, most WiFi-based solutions ignore the CSI signal phase and focus on the amplitude of the signal (see Figure 3 (a) )). However discarding phase information can have a negative impact on model performance. Therefore, this study performs sanitization processing to obtain stable phase values to better utilize CSI information.

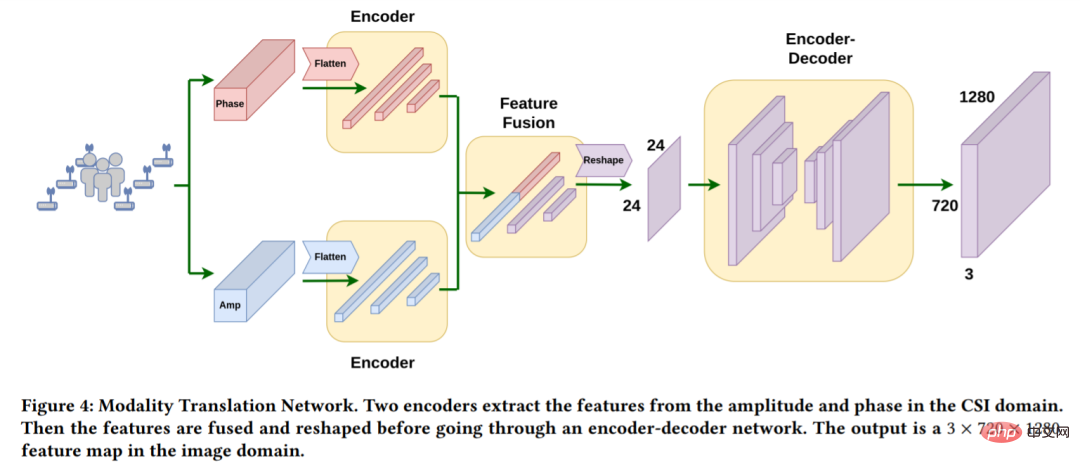

#In order to estimate the UV mapping in the spatial domain from the one-dimensional CSI signal, we first need to convert the network input from the CSI domain to spatial domain. This article is completed using Modality Translation Network (as shown in Figure 4). After some operations, a 3×720×1280 scene representation in the image domain generated by the WiFi signal can be obtained.

After obtaining a 3×720×1280 scene representation in the image domain, this study uses a method similar to DensePose-RCNN Network architecture WiFi-DensePose RCNN to predict human body UV map. Specifically, in WiFi-DensePose RCNN (Figure 5), this study uses ResNet-FPN as the backbone and extracts spatial features from the obtained 3 × 720 × 1280 image feature map. The output is then fed to the region proposal network. In order to better utilize complementary information from different sources, WiFi-DensePose RCNN also contains two branches, DensePose head and Keypoint head, after which the processing results are merged and input to the refinement unit.

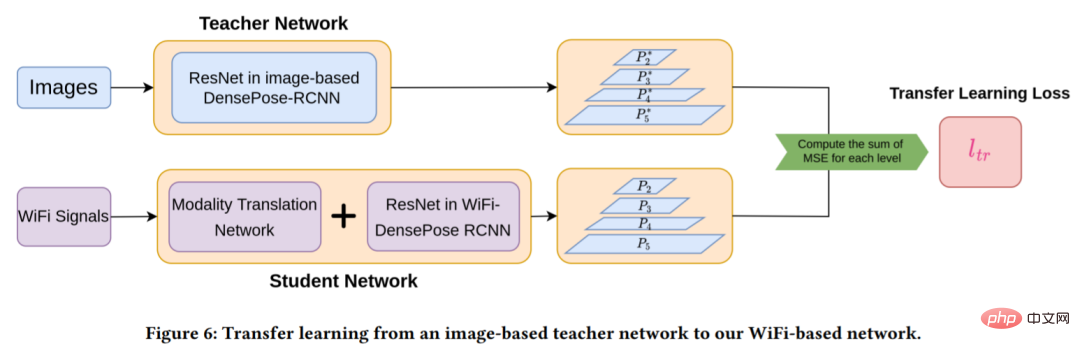

However training Modality Translation Network and WiFi-DensePose RCNN networks from random initialization requires a lot of time (approximately 80 hours). In order to improve training efficiency, this study migrated an image-based DensPose network to a WiFi-based network (see Figure 6 for details).

Directly initializing a WiFi-based network with image-based network weights did not work, so this study first trained a The image-based DensePose-RCNN model serves as the teacher network, and the student network consists of modality translation network and WiFi-DensePose RCNN. The purpose of this is to minimize the difference between the multi-layer feature maps generated by the student model and the teacher model.

Experiment

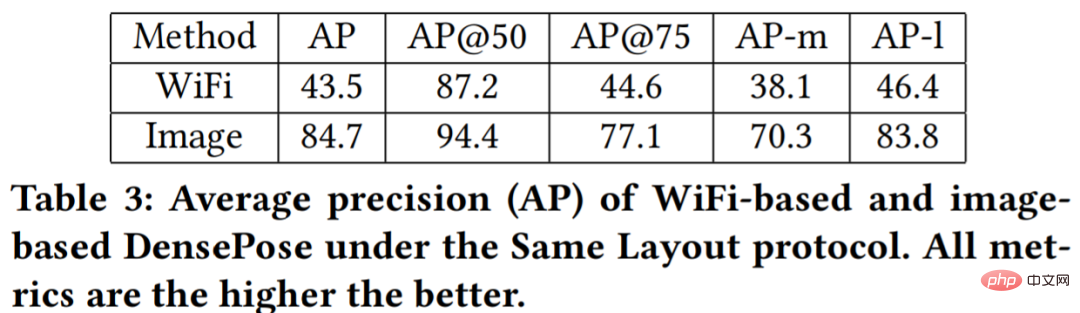

Table 1 results show that the WiFi-based method obtained a very high AP@50 value of 87.2, which shows that the model can effectively detect The approximate location of human body bounding boxes. AP@75 is relatively low with a value of 35.6, which indicates that human body details are not perfectly estimated.

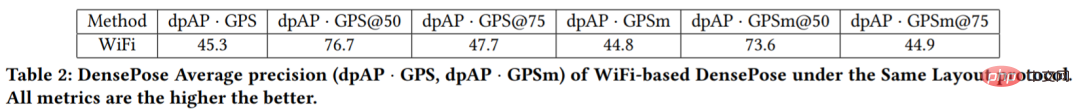

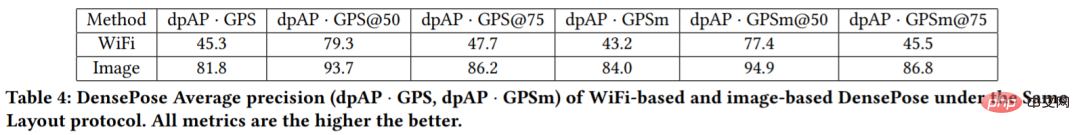

Table 2 results show that dpAP・GPS@50 and dpAP・GPSm@50 have higher values, but dpAP・GPS@75 and dpAP・GPSm @75 is a lower value. This shows that our model performs well in estimating the pose of the human torso, but still has difficulties in detecting details such as limbs.

The quantitative results in Tables 3 and 4 show that the image-based method yields very high AP than the WiFi-based method. The difference between AP-m values and AP-l values of WiFi-based models is relatively small. The study suggests this is because people further away from the camera take up less space in the image, which results in less information about those objects. Instead, the WiFi signal contains all information about the entire scene, regardless of the subject's location.

The above is the detailed content of Full-body tracking, not afraid of occlusion, two Chinese people from CMU made a DensePose based on WiFi signals. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

What to do if the HP printer cannot connect to wifi - What to do if the HP printer cannot connect to wifi

Mar 06, 2024 pm 01:00 PM

What to do if the HP printer cannot connect to wifi - What to do if the HP printer cannot connect to wifi

Mar 06, 2024 pm 01:00 PM

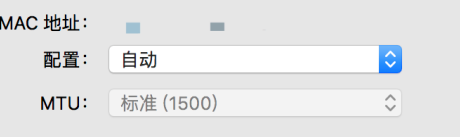

When many users use HP printers, they are not familiar with what to do if the HP printer cannot connect to wifi. Below, the editor will bring you solutions to the problem of HP printers not connecting to wifi. Let us take a look below. Set the mac address of the HP printer to automatically select and automatically join the network. Check to change the network configuration. Use dhcp to enter the password to connect to the HP printer. It shows that it is connected to wifi.

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

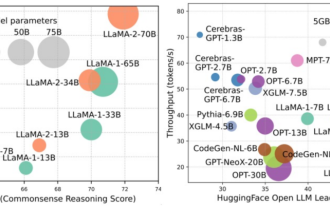

Large-scale language models (LLMs) have demonstrated compelling capabilities in many important tasks, including natural language understanding, language generation, and complex reasoning, and have had a profound impact on society. However, these outstanding capabilities require significant training resources (shown in the left image) and long inference times (shown in the right image). Therefore, researchers need to develop effective technical means to solve their efficiency problems. In addition, as can be seen from the right side of the figure, some efficient LLMs (LanguageModels) such as Mistral-7B have been successfully used in the design and deployment of LLMs. These efficient LLMs can significantly reduce inference memory while maintaining similar accuracy to LLaMA1-33B

Why can't I connect to Wi-Fi in Windows 10?

Jan 16, 2024 pm 04:18 PM

Why can't I connect to Wi-Fi in Windows 10?

Jan 16, 2024 pm 04:18 PM

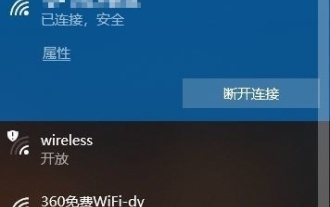

When we use the win10 operating system to connect to a wifi wireless network, we will find a prompt that the wifi network cannot be connected and is restricted. For this kind of problem, I think you can try to find your own network in the Network and Sharing Center, and then make a series of adjustments. Let’s take a look at the specific steps to see how the editor did it~Why can’t Win10 connect to wifi? Method 1: 1. Right-click the wireless WIFI icon in the notification area at the bottom of the computer screen, select “Open Network and Internet Settings”, and then Click the "Change Adapter Options" button. 2. In the pop-up network connection interface, look for the wireless connection named "WLAN", right-click again, and select "Close" (or "Disable"). 3. Wait

How to solve the problem of not being able to enter the wifi password in win10

Dec 30, 2023 pm 05:43 PM

How to solve the problem of not being able to enter the wifi password in win10

Dec 30, 2023 pm 05:43 PM

Not being able to enter the password for win10wifi is a very depressing problem. Usually it is the card owner. Just reopen or restart the computer. For users who still can't solve the problem, hurry up and take a look at the detailed solution tutorial. Win10 Wifi Unable to Enter Password Tutorial Method 1: 1. Unable to enter password may be a problem with our keyboard connection, carefully check whether the keyboard can be used. 2. If we need to use the keypad to enter numbers, we also need to check whether the keypad is locked. Method 2: Note: Some users reported that the computer could not be turned on after performing this operation. In fact, it is not the cause of this setting, but the problem of the computer system itself. After performing this operation, it will not affect the normal startup of the computer, and the computer system will not

Solution to Win11 unable to display WiFi

Jan 29, 2024 pm 04:03 PM

Solution to Win11 unable to display WiFi

Jan 29, 2024 pm 04:03 PM

WiFi is an important medium for us to surf the Internet, but many users have recently reported that Win11 does not display WiFi, so what should we do? Users can directly click on the service under the search option, and then select the startup type to change to automatic or click on the network and internet on the left to operate. Let this site carefully introduce to users the analysis of the problem of Win11 computer not displaying the wifi list. Method 1 to resolve the problem that the Wi-Fi list cannot be displayed on the win11 computer: 1. Click the search option. 3. Then we change the startup type to automatic. Method 2: 1. Press and hold win+i to enter settings. 2. Click Network and Internet on the left. 4. Subsequently

What is the reason why the wifi function cannot be turned on? Attachment: How to fix the wifi function that cannot be turned on

Mar 14, 2024 pm 03:34 PM

What is the reason why the wifi function cannot be turned on? Attachment: How to fix the wifi function that cannot be turned on

Mar 14, 2024 pm 03:34 PM

Nowadays, in addition to data and wifi, mobile phones have two ways to access the Internet, and OPPO mobile phones are no exception. But what should we do if we can’t turn on the wifi function when using it? Don't worry yet, you might as well read this tutorial, it will help you! What should I do if my phone’s wifi function cannot be turned on? It may be because there is a slight delay when the WLAN switch is turned on. Please wait 2 seconds to see if it is turned on. Do not click continuously. 1. You can try to enter "Settings>WLAN" and try to turn on the WLAN switch again. 2. Please turn on/off airplane mode and try to turn on the WLAN switch again. 3. Restart the phone and try to see if WLAN can be turned on normally. 4. It is recommended to try restoring factory settings after backing up data. If none of the above methods solve your problem, please bring the purchased

How to solve the problem of not being able to connect to Wifi after updating Win11_How to solve the problem of not being able to connect to Wifi after updating Win11

Mar 20, 2024 pm 05:50 PM

How to solve the problem of not being able to connect to Wifi after updating Win11_How to solve the problem of not being able to connect to Wifi after updating Win11

Mar 20, 2024 pm 05:50 PM

Windows 11 system is currently the system that attracts the most attention. Many users have upgraded to Win 11. Many computer operations can only be completed after being connected to the Internet. But what should you do if you cannot connect to wifi after updating to Win 11? The editor will tell you about the update below. Solution to the problem of not being able to connect to wifi after win11. 1. First, open the Start menu, enter "Settings" and click "Troubleshooting". 2. Then select "Other Troubleshooting" and click Run on the right side of the Internet connection. 3. Finally, the system will automatically help you solve the problem of unable to connect to wifi.

Solution to the disappearance of wifi icon on win11 computer

Jan 07, 2024 pm 12:33 PM

Solution to the disappearance of wifi icon on win11 computer

Jan 07, 2024 pm 12:33 PM

In the newly updated win11 system, many users find that they cannot find the wifi icon. For this reason, we have brought you a solution to the disappearance of the wifi icon on win11 computers. Turn on the setting switch to enable wifi settings. . What to do if the wifi icon disappears on a win11 computer: 1. First, right-click the lower taskbar, and then click "Taskbar Settings". 2. Then click the "Taskbar" option in the left taskbar. 3. After pulling down, you can see the notification area and click "Select which icons are displayed on the taskbar". 4. Finally, you can see the network settings below, and turn on the switch at the back.