Paper link: https://arxiv.org/abs/2203.12602

The code and pre-trained weights have been open-sourced on GitHub: https://github.com/MCG-NJU/VideoMAE

Contents

1. Background introduction

2. Research motivation

3. Method introduction

4. VideoMAE implementation details

5. Ablation experiments

6. Important features of VideoMAE

7. Main results

8. Community impact

9. Summary

Video self-supervised learning: a pretext (proxy) task is designed so that the model learns spatiotemporal representations from video data without using any label information. Existing video self-supervised pre-training algorithms fall into two main categories: (1) methods based on contrastive learning, such as CoCLR and CVRL; (2) methods based on temporally related pretext tasks, such as DPC, SpeedNet and Pace.

Action recognition: classify a given trimmed video and identify the actions performed by the people in it. Current mainstream methods are 2D-based (TSN, TSM, TDN, etc.), 3D-based (I3D, SlowFast, etc.) and Transformer-based (TimeSformer, ViViT, MViT, VideoSwin, etc.). Action recognition is a fundamental task in video understanding. Its models are often used as the backbone for downstream video tasks, such as temporal action detection and spatiotemporal action detection, where they extract spatiotemporal features at the video level or clip level.

Action detection: this task requires more than classifying the actions of the people in a video. It also marks each person's spatial position with a bounding box. Action detection has a wide range of applications, such as movie analysis and sports video analysis.

Since the Vision Transformer (ViT) was proposed at the end of 2020, Transformers have been widely adopted in computer vision and have improved performance on a range of vision tasks.

However, Vision Transformers need large-scale labeled datasets for training. The original ViT (vanilla Vision Transformer) reached good performance through supervised pre-training on hundreds of millions of labeled images.

Current video Transformers, such as TimeSformer and ViViT, are usually built on Vision Transformer models trained on image data. They rely on models pre-trained on large-scale image datasets such as ImageNet-1K, ImageNet-21K and JFT-300M. Both TimeSformer and ViViT tried to train video Transformers from scratch on video datasets, but neither achieved satisfactory results. It therefore remains an open problem how to train video Transformers effectively, and the vanilla ViT in particular, directly on video datasets without any other pre-trained models or extra image data.

Note that existing video datasets are small compared with image datasets. For example, the widely used Kinetics-400 dataset has only a little over 200,000 training samples. That is roughly 1/50 the size of ImageNet-21K and 1/1500 the size of JFT-300M, a gap of up to three orders of magnitude. Training a video model is also far more computationally expensive than training an image model, which makes training video Transformers on video datasets even harder.

Recently, the self-supervised "masking-and-reconstruction" paradigm has succeeded in natural language processing (BERT) and image understanding (BEiT, MAE). We therefore use this paradigm to train video Transformers on video datasets. We propose VideoMAE (Video Masked Autoencoder), a video self-supervised pre-training algorithm built on a masking-and-reconstruction proxy task. ViT models pre-trained with VideoMAE achieve significantly better results than other methods on larger video datasets such as Kinetics-400 and Something-Something V2. They do so on relatively small datasets such as UCF101 and HMDB51 as well.

MAE adopts an asymmetric encoder-decoder architecture for the masking-and-reconstruction self-supervised task. The pipeline works as follows:

1. A 224 × 224 input image is divided into non-overlapping 16 × 16 patches (tokens).
2. A patch embedding (token embedding) layer maps each patch to a high-dimensional feature.
3. MAE randomly masks a high proportion (75%) of the tokens. Only the remaining visible tokens are fed to the encoder for feature extraction.
4. The encoded features are concatenated with learnable mask tokens, restoring a sequence as long as the original input.
5. A lightweight decoder reconstructs the original image from these features. In practice, the reconstruction target is the normalized pixel values of each patch.
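
The sketch below makes the token arithmetic concrete. It counts patches, applies uniform random masking at 75%, and refills the masked positions with a shared mask token before decoding. It is a minimal pure-Python illustration, not MAE's actual code, which works on tensors and also adds positional embeddings. The function names, and the strings standing in for feature vectors, are illustrative assumptions.

```python
import random

def num_patches(image_size: int = 224, patch_size: int = 16) -> int:
    """Count the non-overlapping patch_size x patch_size tokens in a square image."""
    if image_size % patch_size:
        raise ValueError("image size must be divisible by the patch size")
    per_side = image_size // patch_size
    return per_side * per_side

def random_mask(num_tokens: int, mask_ratio: float, seed: int = 0):
    """Uniform random masking (hypothetical helper): return sorted (visible_ids, masked_ids)."""
    rng = random.Random(seed)
    ids = list(range(num_tokens))
    rng.shuffle(ids)
    num_masked = int(mask_ratio * num_tokens)
    return sorted(ids[num_masked:]), sorted(ids[:num_masked])

def fill_mask_tokens(encoded_visible, visible_ids, num_tokens, mask_token):
    """Put encoder outputs back at their original positions and fill every masked
    position with the same mask token, restoring a full-length decoder input."""
    full = [mask_token] * num_tokens
    for pos, feature in zip(visible_ids, encoded_visible):
        full[pos] = feature
    return full

n = num_patches()                          # 224 / 16 = 14 per side -> 196 tokens
visible, masked = random_mask(n, 0.75)     # 147 masked, 49 visible
# Illustrative only: strings stand in for the encoder's feature vectors.
decoder_input = fill_mask_tokens([f"feat{i}" for i in visible], visible, n, "[MASK]")
print(n, len(visible), len(masked), decoder_input.count("[MASK]"))
```

The encoder only ever sees the 49 visible entries. The decoder receives all 196 positions.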

Compared with image data, video data contains more frames and richer motion information. This section first analyzes the characteristics of video data.

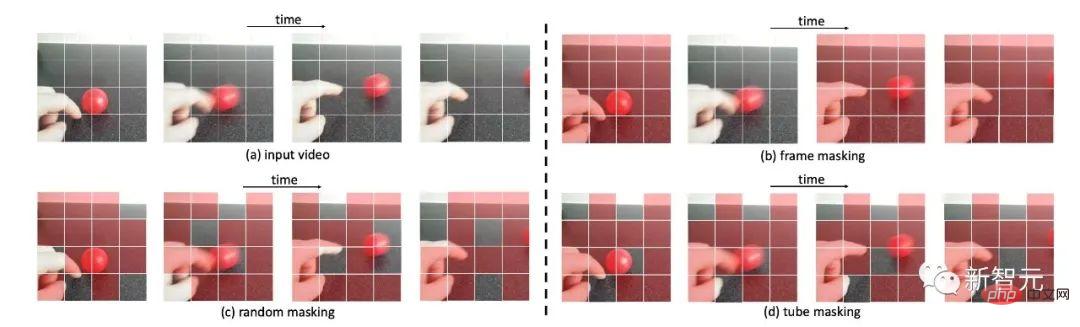

Examples of different masking strategies for video data

Video data consists of dense image frames whose semantic content changes very slowly over time. As the figure shows, the dense, consecutive RGB frames in a video are therefore highly redundant. This redundancy can cause two problems when applying MAE to video.

First, pre-training at the original dense frame rate (such as 30 FPS) is inefficient. It also draws the network's attention to static appearance or slow, local motion in the data.

Second, temporal redundancy greatly dilutes the motion information in the video. This makes reconstructing the masked patches relatively easy at ordinary masking ratios (e.g., 50% to 75%).

Both problems hinder the backbone encoder from learning to extract motion features during pre-training.

A video can be viewed as a static picture evolving over time, so there are also semantic correspondences between frames. Unless the masking strategy accounts for this, temporal correlation can increase the risk of "information leakage" during reconstruction.

As the figure shows, with global random masking or random frame masking, the network can exploit temporal correlation. It can reconstruct a masked patch by "copying and pasting" the visible patch at the corresponding position in an adjacent frame. The network can partly solve the proxy task this way. However, VideoMAE may then learn only low-level temporal correspondences rather than high-level semantic information, such as spatiotemporal reasoning about the video content.

To alleviate this, a new masking strategy is needed that makes the reconstruction task more challenging, so that the network learns better spatiotemporal representations.

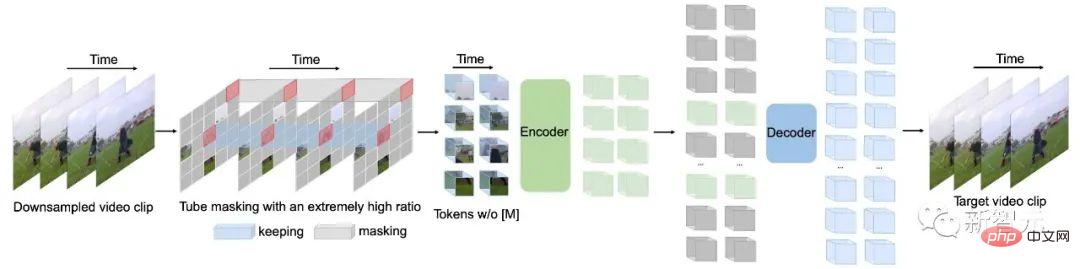

The overall framework of VideoMAE

VideoMAE introduces several new designs to address the problems discussed above, which can arise when the masking-and-reconstruction task is applied to video pre-training.

Given the temporal redundancy of dense consecutive frames analyzed above, VideoMAE uses strided temporal sampling for more efficient video self-supervised pre-training. First, a clip of $t$ consecutive frames is randomly sampled from the original video. Strided temporal sampling then compresses the clip to $T$ frames, each containing $H \times W \times 3$ pixels. In the experiments, the sampling stride $\tau$ is set to 4 on Kinetics-400 and 2 on Something-Something V2.
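
Here is a minimal sketch of strided temporal sampling. It assumes $t = T \cdot \tau$ (the window holds exactly $T$ frames at stride $\tau$) and $T = 16$. `strided_clip_indices` is a hypothetical helper, and the sketch does not cover how the official loader handles videos shorter than the window.

```python
import random

def strided_clip_indices(video_len: int, num_frames: int = 16, stride: int = 4, seed: int = 0):
    """Randomly pick t = num_frames * stride consecutive frames, then keep every
    `stride`-th one, returning num_frames frame indices (hypothetical helper)."""
    window = num_frames * stride  # assumption: t = T * tau
    if video_len < window:
        # A real loader would need a fallback (e.g. padding); this sketch just refuses.
        raise ValueError("video too short for this sampling window")
    start = random.Random(seed).randint(0, video_len - window)
    return list(range(start, start + window, stride))

k400_idx = strided_clip_indices(300, num_frames=16, stride=4)  # Kinetics-400 stride
ssv2_idx = strided_clip_indices(48, num_frames=16, stride=2)   # Something-Something V2 stride
print(k400_idx[:4], ssv2_idx[:4])
```

With stride 4, the 16 sampled frames span a 64-frame window. With stride 2, they span 32 frames.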

Before being fed to the encoder, the sampled clip is embedded with a joint space-time cube embedding. Each cube of $2 \times 16 \times 16$ pixels in a clip of size $T \times H \times W$ is treated as one token. The cube embedding layer therefore produces $\frac{T}{2} \times \frac{H}{16} \times \frac{W}{16}$ tokens from the sampled clip and maps the channel dimension of each token to $D$. This design reduces the spatiotemporal size of the input. It also helps alleviate the spatiotemporal redundancy of video data to some extent.
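
With the default 16-frame 224 × 224 input and VideoMAE's 2 × 16 × 16 cube size, the token grid works out to 8 × 14 × 14 = 1,568 tokens. The pure-Python sketch below computes that grid and maps a pixel to the cube that contains it. The helper names are hypothetical. PyTorch implementations typically realize this embedding as a `torch.nn.Conv3d` whose kernel size and stride both equal (2, 16, 16). Treat that as an implementation note, not something this article states.

```python
def cube_grid(num_frames=16, height=224, width=224, tubelet=2, patch=16):
    """Shape (T/2, H/16, W/16) of the token grid from a 2 x 16 x 16 cube embedding."""
    for size, step in ((num_frames, tubelet), (height, patch), (width, patch)):
        if size % step:
            raise ValueError(f"{size} is not divisible by {step}")
    return num_frames // tubelet, height // patch, width // patch

def cube_index(t, y, x, grid, tubelet=2, patch=16):
    """Flat (time-major) index of the cube containing pixel (frame t, row y, column x)."""
    _, gh, gw = grid
    return (t // tubelet) * gh * gw + (y // patch) * gw + (x // patch)

grid = cube_grid()                          # (8, 14, 14)
num_tokens = grid[0] * grid[1] * grid[2]    # 1568 tokens
values_per_cube = 2 * 16 * 16 * 3           # raw RGB values flattened into one token before projection to D
print(grid, num_tokens, values_per_cube, cube_index(15, 223, 223, grid))
```

Each token thus starts as 1,536 raw values, and the cube embedding layer projects them to dimension $D$.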

VideoMAE adopts a tube masking strategy during self-supervised pre-training. It addresses both temporal redundancy and the "information leakage" caused by temporal correlation. Tube masking extends the masking of a single RGB frame naturally to the whole video sequence: tokens at the same spatial location are masked in every frame.

Formally, tube masking can be expressed as $\mathbb{I}[p_{x,y,\cdot} \in \Omega]$, where $p_{x,y,\cdot}$ denotes the tokens at spatial position $(x, y)$ across time and $\Omega$ is the set of masked tokens. All time steps $t$ share the same value.

With this strategy, a token at a given spatial location is masked in all frames. For some patches, such as the one containing the finger in row 4 of the masking-strategy figure, the network therefore cannot find the corresponding content in other frames. This reduces the risk of "information leakage" during reconstruction. It also pushes VideoMAE to reconstruct masked tokens by extracting high-level semantic information from the clip.
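
The tube (pipeline) masking strategy takes only a few lines to sketch. Draw one random spatial mask over the 14 × 14 grid and repeat it for each of the 8 temporal token slices. This stdlib-only illustration assumes the default grid and a 90% ratio; it is not the repository's own masking generator. Because the cubes are 2 frames deep, each temporal slice covers two input frames.

```python
import random

def tube_mask(grid=(8, 14, 14), mask_ratio=0.9, seed=0):
    """Tube masking sketch: draw one random spatial mask and reuse it for every
    temporal slice, so a masked (x, y) position is hidden at all times.
    Returns flat 0/1 flags in (t, y, x) order; 1 means masked."""
    t_len, h, w = grid
    per_slice = h * w
    num_masked = int(mask_ratio * per_slice)
    spatial = [0] * (per_slice - num_masked) + [1] * num_masked
    random.Random(seed).shuffle(spatial)
    return spatial * t_len  # list repetition tiles the same spatial mask over time

mask = tube_mask()
per_slice = 14 * 14
slices = [mask[i * per_slice:(i + 1) * per_slice] for i in range(8)]
print(sum(mask), len(mask) - sum(mask))   # masked vs. visible tokens
print(all(s == slices[0] for s in slices))
```

Because every slice is identical, a masked position has no visible counterpart in any other frame to "copy and paste" from.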

Video data is more redundant than image data, so its information density is much lower. This lets VideoMAE pre-train with extremely high masking ratios (e.g., 90% to 95%), compared with MAE's default of 75%. Experiments show two benefits of an extremely high masking ratio. It speeds up pre-training, since only 5% to 10% of tokens are fed to the encoder. It also improves the model's representations and its downstream performance.

As mentioned earlier, VideoMAE uses an extremely high masking ratio and feeds only a small number of tokens to the encoder. To extract spatiotemporal features from these visible tokens, VideoMAE uses the vanilla ViT as its backbone with joint space-time self-attention; the ViT architecture itself is unchanged. All visible tokens can therefore interact with each other in every self-attention layer.

The $O(N^2)$ complexity of joint space-time self-attention, where $N$ is the number of tokens, is the network's computational bottleneck. The extremely high masking ratio means only the unmasked tokens (e.g., 10%) enter the encoder, which considerably alleviates this $O(N^2)$ cost.
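
The following back-of-the-envelope sketch shows why the high masking ratio helps. It counts the tokens that reach the encoder under tube masking (16 frames, 224 × 224, 2 × 16 × 16 cubes). It then compares the size of the N × N attention map with the unmasked case. Only the attention-map term is quadratic; MLP and projection costs scale linearly in N, so the real end-to-end saving is smaller than these ratios. The grid and helper names are assumptions for illustration.

```python
def visible_tokens(grid=(8, 14, 14), mask_ratio=0.9):
    """Tokens reaching the encoder under tube masking (same count in every temporal slice)."""
    t_len, h, w = grid
    per_slice = h * w
    return t_len * (per_slice - int(mask_ratio * per_slice))

def relative_attention_cost(grid=(8, 14, 14), mask_ratio=0.9):
    """Size of the N x N attention map on visible tokens, relative to using all tokens."""
    total = grid[0] * grid[1] * grid[2]
    return (visible_tokens(grid, mask_ratio) / total) ** 2

for ratio in (0.0, 0.75, 0.9, 0.95):
    n = visible_tokens(mask_ratio=ratio)
    print(f"mask {ratio:.0%}: {n} tokens, attention-map cost x{relative_attention_cost(mask_ratio=ratio):.4f}")
```

At a 90% ratio, 160 of the 1,568 tokens reach the encoder. The attention map then shrinks to roughly 1% of its full size.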

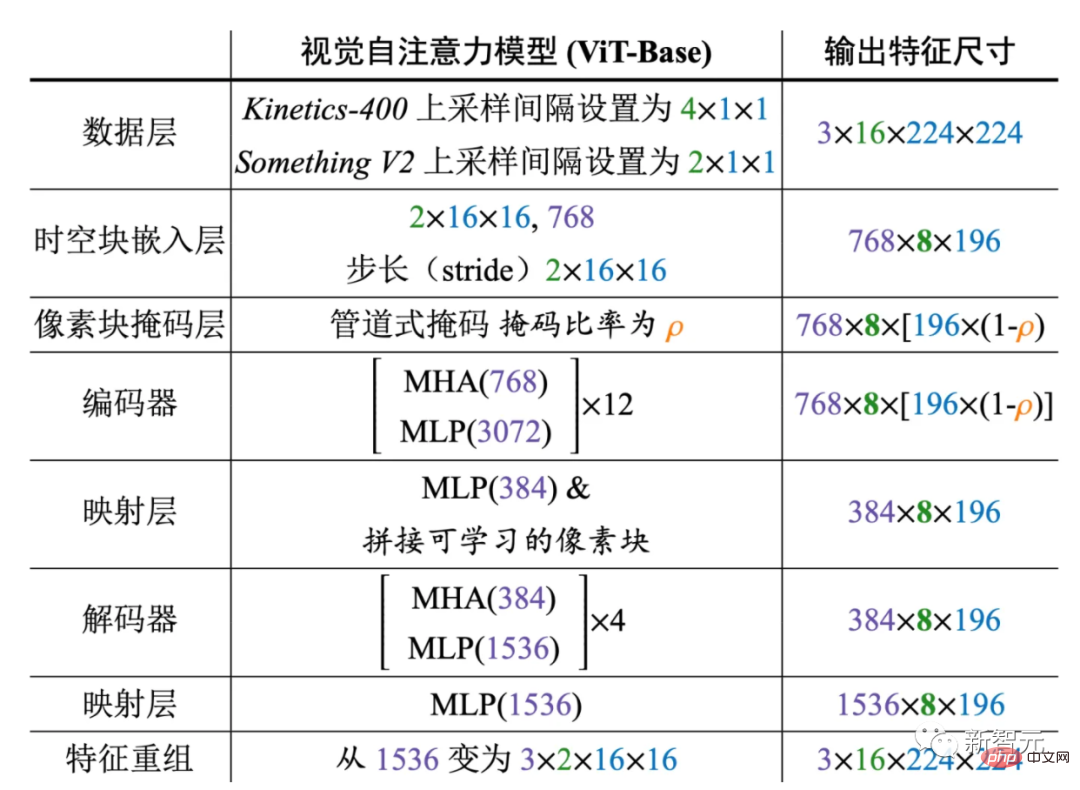

## Specific design details of the VideoMAE framework

The figure above shows the encoder and decoder architectures used by VideoMAE, taking ViT-B as an example. We evaluate VideoMAE on four downstream action recognition datasets and one action detection dataset. These datasets emphasize different aspects of motion information:

• Kinetics-400 is a large-scale YouTube dataset of about 300,000 trimmed clips covering 400 action categories. It mainly contains everyday activities, and some categories are strongly correlated with the objects involved or the scene.

• Something-Something V2 mainly contains the same actions performed with different objects. Recognition on this dataset therefore depends more on motion than on object or scene information. Its training set has about 170,000 clips and its validation set about 25,000.

• UCF101 and HMDB51 are two relatively small action recognition datasets. They have about 9,500 and 3,500 training videos, respectively.

In our experiments, we first pre-train the network with VideoMAE on the training set. We then fine-tune the encoder (ViT) with supervision on the same training set and evaluate the model on the validation set. For the action detection dataset AVA, we load the model pre-trained on Kinetics-400 and fine-tune the encoder (ViT) with supervision.

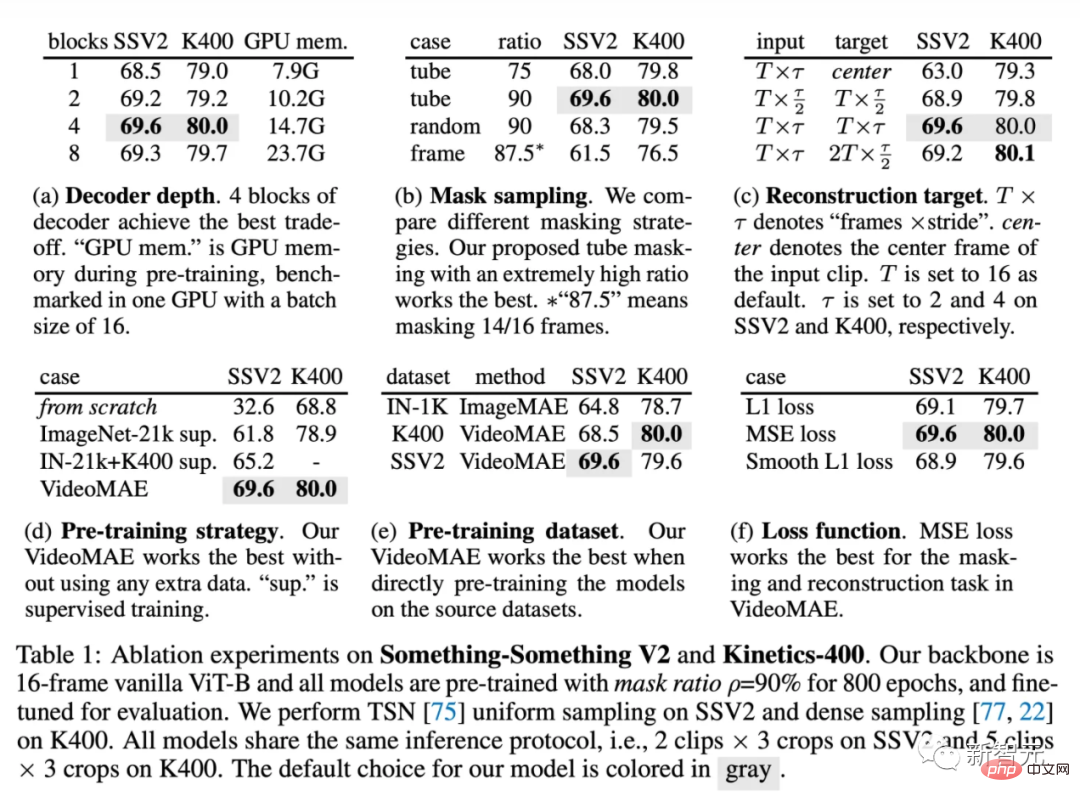

Ablation experiments

This section presents ablation studies of VideoMAE on Something-Something V2 and Kinetics-400. By default, the ablations use a vanilla ViT with 16 input frames. For evaluation after fine-tuning, we test with 2 clips × 3 crops on Something-Something V2 and 5 clips × 3 crops on Kinetics-400.
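
The text does not say how predictions from the multiple test views are combined. Averaging class scores over all clip × crop views is a common convention, and the sketch below assumes it. The helper names and scores are made up for illustration.

```python
def num_views(clips: int, crops: int) -> int:
    """Total test views: every temporal clip is evaluated at every spatial crop."""
    return clips * crops

def aggregate_views(view_scores):
    """Average class scores over all views (assumed aggregation rule)."""
    if not view_scores:
        raise ValueError("need at least one view")
    n = len(view_scores)
    return [sum(col) / n for col in zip(*view_scores)]

def top1(scores):
    """Index of the highest-scoring class."""
    return max(range(len(scores)), key=scores.__getitem__)

# Hypothetical scores for 3 classes from the 2 x 3 = 6 Something-Something V2 views.
views = [[0.2, 0.5, 0.3], [0.1, 0.6, 0.3], [0.3, 0.4, 0.3],
         [0.2, 0.3, 0.5], [0.1, 0.7, 0.2], [0.2, 0.5, 0.3]]
avg = aggregate_views(views)
print(num_views(2, 3), num_views(5, 3), top1(avg))
```

Under this convention, the Kinetics-400 setting averages 15 views per video instead of 6.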

The lightweight decoder is a key component of VideoMAE. Table (a) shows results with decoders of different depths. Unlike in MAE, a deeper decoder performs better in VideoMAE, while a shallower decoder reduces GPU memory usage. The number of decoder layers is set to 4 by default. Following MAE's empirical design, the decoder width in VideoMAE is half the encoder width (e.g., 384 when ViT-B is the encoder).
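
The decoder-sizing rule (half the encoder width, 4 blocks by default) can be written as a tiny config helper. The parameter estimate uses about 12·d² weights per block: attention projections plus a ratio-4 MLP, ignoring biases, norms and embeddings. This is a standard approximation added for illustration, not a figure from the paper. Under it, the whole decoder paired with ViT-B is about the size of a single encoder block. The class and function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BlockStack:
    width: int   # channel width of each Transformer block
    depth: int   # number of blocks

    def approx_params(self) -> int:
        """Rough weight count: 4*d^2 for attention projections + 8*d^2 for an MLP
        with ratio 4, ignoring biases, norms and embeddings (approximation)."""
        return self.depth * 12 * self.width ** 2

def make_decoder(encoder: BlockStack, depth: int = 4) -> BlockStack:
    """Decoder at half the encoder width, 4 blocks deep by default."""
    return BlockStack(width=encoder.width // 2, depth=depth)

vit_b = BlockStack(width=768, depth=12)   # ViT-B encoder
decoder = make_decoder(vit_b)
print(decoder, vit_b.approx_params(), decoder.approx_params())
```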

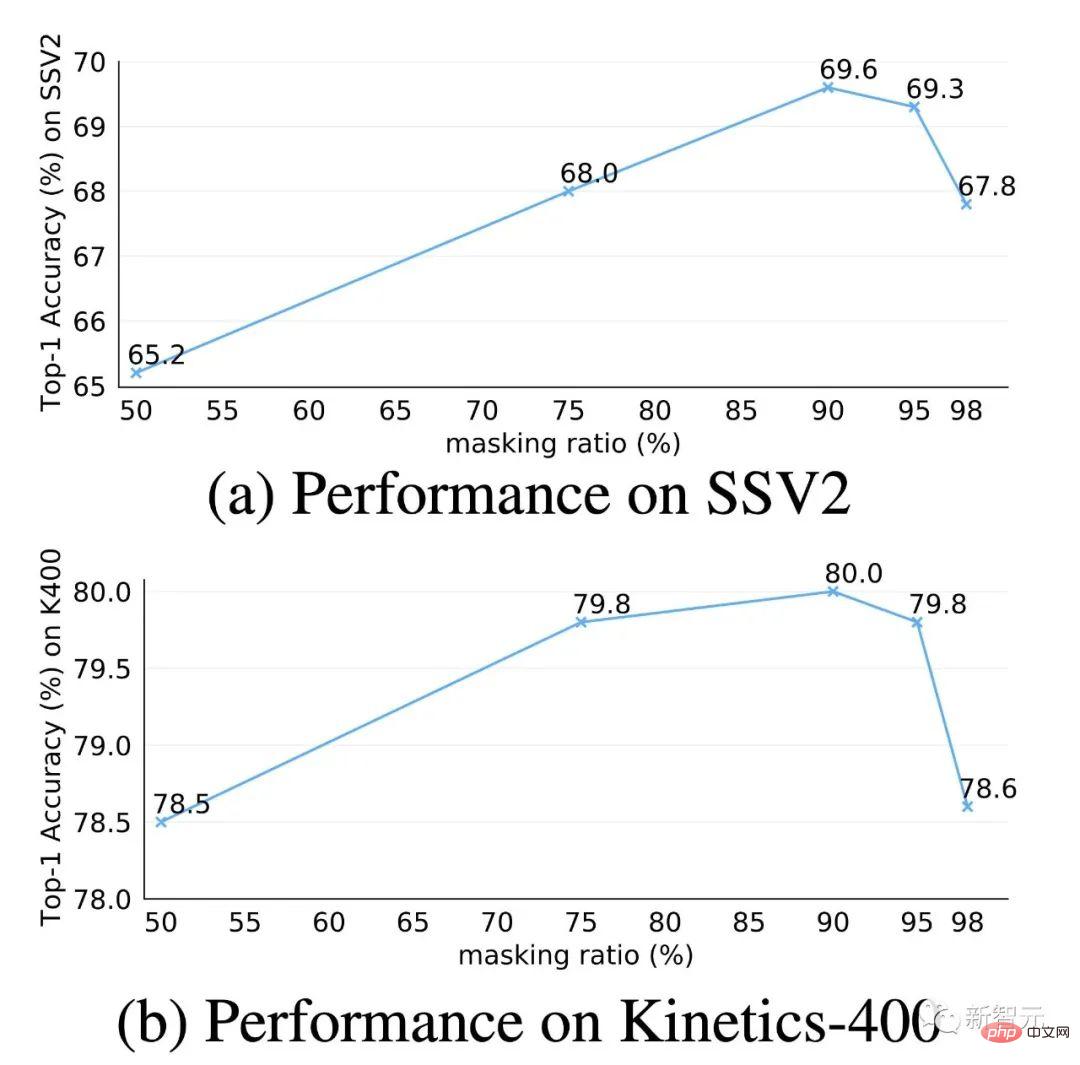

Table (b) compares different masking strategies with tube masking at a masking ratio of 75%. Global random masking and random frame masking both perform worse than tube masking. This may be because tube masking partly alleviates the temporal redundancy and temporal correlation in video data. Raising the masking ratio to 90% further improves VideoMAE's accuracy on Something-Something V2 from 68.0% to 69.6%. Together, the masking strategy and masking ratio make masking-and-reconstruction a more challenging proxy task, forcing the model to learn higher-level spatiotemporal features.

Table (c) compares reconstruction targets. First, if only the center frame of the clip is used as the target, VideoMAE's downstream performance drops significantly. VideoMAE is also sensitive to the sampling stride: reconstructing a more densely sampled clip gives clearly lower results than the default temporally downsampled clip. Finally, we tried reconstructing denser frames from the temporally downsampled clip. This requires decoding more frames, which slows training, and it does not help much.
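
As an illustration, the sketch below computes a per-patch normalized reconstruction loss on masked tokens only, in the spirit of the normalized-pixel target that MAE uses. It is a stdlib sketch under stated assumptions. The exact normalization in the official code (which axes, which variance estimator, where epsilon goes) may differ. The function names are hypothetical.

```python
import statistics

def normalize_patch(values, eps=1e-6):
    """Assumed per-patch normalization: subtract the patch mean, divide by its std."""
    mean = statistics.fmean(values)
    std = statistics.stdev(values)  # sample std (assumption); needs at least 2 values
    return [(v - mean) / (std + eps) for v in values]

def masked_reconstruction_loss(preds, targets, mask):
    """Mean squared error over masked tokens only (mask flag 1 = masked).
    preds/targets: one flat list of values per token."""
    per_token = []
    for pred, target, is_masked in zip(preds, targets, mask):
        if is_masked:
            norm = normalize_patch(target)
            per_token.append(statistics.fmean([(p - t) ** 2 for p, t in zip(pred, norm)]))
    if not per_token:
        raise ValueError("no masked tokens")
    return statistics.fmean(per_token)

targets = [[1.0, 2.0, 3.0], [5.0, 5.0, 6.0]]
mask = [1, 0]  # the second token is visible, so it never contributes to the loss
preds = [normalize_patch(targets[0]), [0.0, 0.0, 0.0]]
print(masked_reconstruction_loss(preds, targets, mask))
```

The visible token's prediction is deliberately wrong here, yet the loss is zero, because only masked tokens are scored.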

Table (d) compares pre-training strategies. Consistent with previous findings (TimeSformer, ViViT), training ViT from scratch on Something-Something V2, a motion-sensitive dataset, does not give satisfactory results. Initializing from a ViT pre-trained on the large-scale image dataset ImageNet-21K raises accuracy from 32.6% to 61.8%. A model pre-trained on both ImageNet-21K and Kinetics-400 raises it further to 65.2%. A ViT pre-trained with VideoMAE on the video dataset itself reaches the best result, 69.6%, without any extra data. Similar conclusions hold on Kinetics-400.

Table (e) compares pre-training datasets. First, following MAE's setup, ViT is self-supervised pre-trained on ImageNet-1K for 1600 epochs. Its 2D patch embedding layer is then inflated into a 3D spatiotemporal cube embedding layer using the I3D strategy, and the model is fine-tuned on the video dataset. This paradigm outperforms a model trained from scratch with supervision.

Next, we compared the MAE-pre-trained model with a ViT pre-trained by VideoMAE on Kinetics-400. VideoMAE performs better. However, neither model outperforms VideoMAE self-supervised pre-trained only on Something-Something V2. This suggests that the domain gap between the pre-training and target datasets may be an important factor.
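
The I3D inflation strategy repeats a 2D kernel along a new time axis and divides by the temporal size. As a result, a static video (the same frame repeated) produces the same response as the original 2D filter applied to one frame. The single-channel sketch below checks that property. A real patch-embedding kernel also has 3 input channels, D output channels and, in VideoMAE, a temporal size of 2. The helper names are hypothetical.

```python
def inflate_kernel(kernel_2d, time_size):
    """I3D-style inflation: repeat a 2D kernel time_size times along a new
    time axis and divide every weight by time_size."""
    return [[[w / time_size for w in row] for row in kernel_2d] for _ in range(time_size)]

def apply_2d(kernel, frame):
    """Single-position, single-channel response: sum of elementwise products."""
    return sum(k * p for k_row, p_row in zip(kernel, frame) for k, p in zip(k_row, p_row))

def apply_3d(kernel_3d, clip):
    """Sum the 2D responses of each temporal slice of the kernel on each frame."""
    return sum(apply_2d(k2d, frame) for k2d, frame in zip(kernel_3d, clip))

kernel = [[1.0, 2.0], [3.0, 4.0]]
frame = [[1.0, 1.0], [2.0, 0.0]]
static_clip = [frame, frame]             # a "boring" video: the same frame twice
inflated = inflate_kernel(kernel, 2)
print(apply_2d(kernel, frame), apply_3d(inflated, static_clip))  # 9.0 9.0
```

The division by the temporal size is what keeps the two responses equal. Without it, the inflated filter's output would double.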

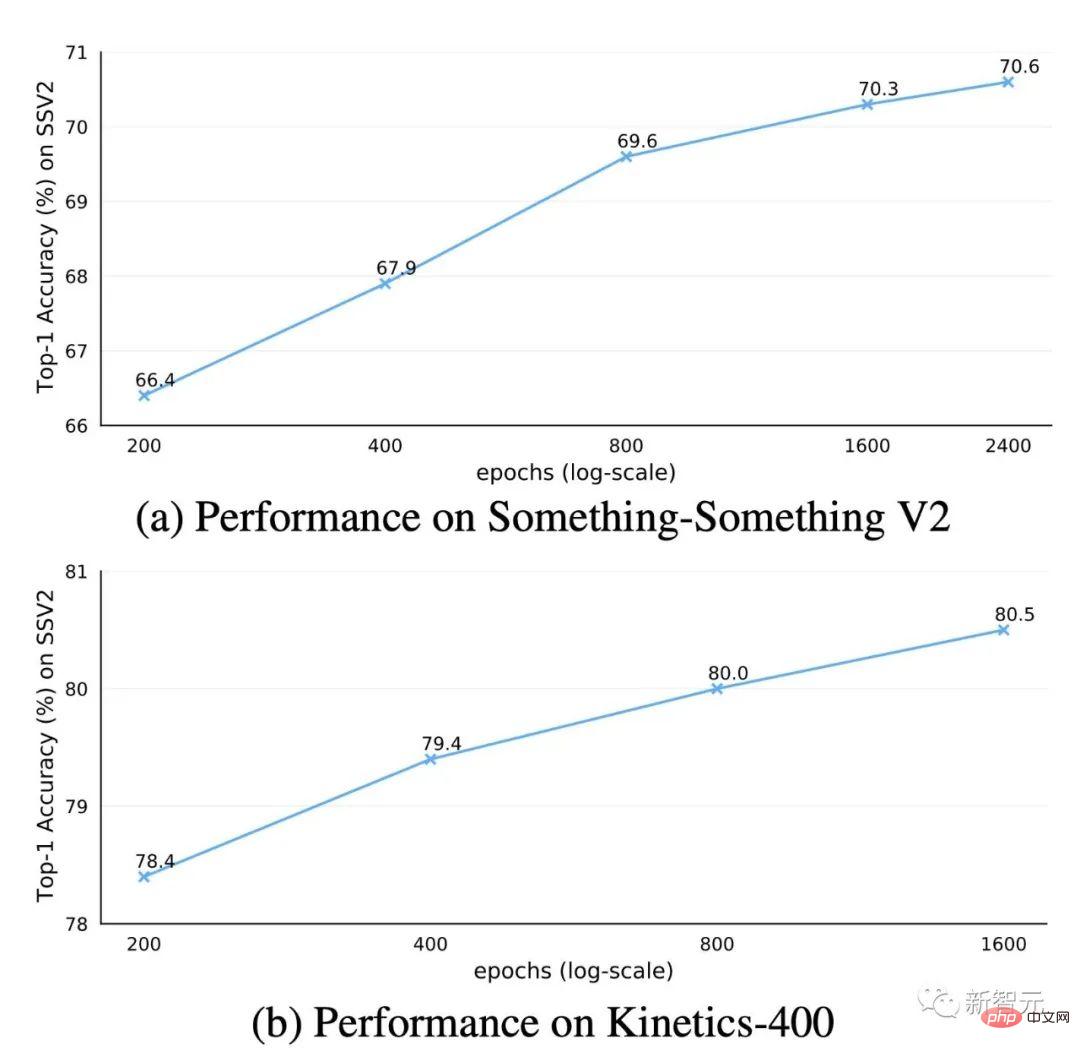

Impact of the total number of pre-training epochs in VideoMAE

In the ablation experiments, VideoMAE is pre-trained for 800 epochs by default. We further explore the number of pre-training epochs on Kinetics-400 and Something-Something V2. As the figure shows, longer pre-training brings consistent gains on both datasets.

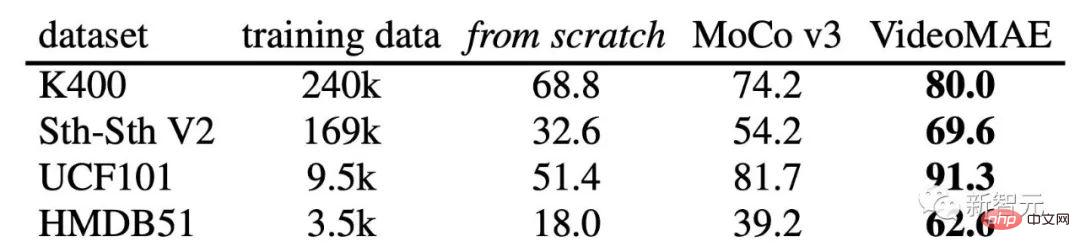

Performance comparison between VideoMAE and MoCo v3 on different downstream video action recognition datasets

Many previous works have studied video self-supervised pre-training extensively. However, they mainly use convolutional neural networks as the backbone, and few have studied training mechanisms for ViT. To verify the effectiveness of ViT-based VideoMAE, we compare it with two other ViT training methods:

(1) supervised training from scratch;
(2) self-supervised pre-training with a contrastive learning method (MoCo v3).

VideoMAE is significantly better than both. For example, on Kinetics-400, the largest dataset, VideoMAE is about 10% more accurate than training from scratch and about 6% more accurate than MoCo v3 pre-training. This shows that the masking-and-reconstruction paradigm provides an efficient pre-training mechanism for ViT.

Moreover, the gap between VideoMAE and the other two methods grows as the training set becomes smaller. Even on HMDB51, which contains only about 3,500 video clips, the VideoMAE-pre-trained model still achieves very satisfactory accuracy. This result shows that VideoMAE is a data-efficient learner, unlike contrastive learning, which needs a large amount of pre-training data. This data efficiency matters most where video data is limited.

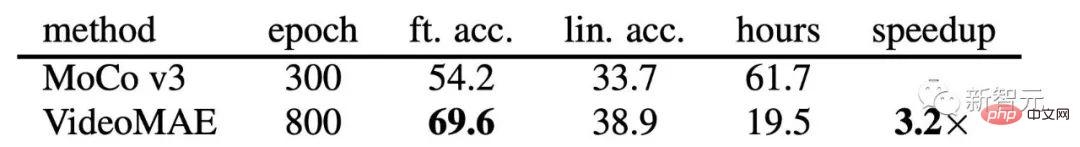

## Efficiency analysis of VideoMAE and MoCo v3 on the Something-Something V2 dataset

We also compared the computational efficiency of pre-training with VideoMAE and with MoCo v3. Masking-and-reconstruction is an extremely challenging proxy task, and the network sees only 10% of the input in each iteration (90% of tokens are masked). VideoMAE therefore needs more training epochs. However, the masked tokens never pass through the encoder, so each epoch is much cheaper. This greatly reduces the computation and time of pre-training: 800 epochs of VideoMAE pre-training take only 19.5 hours, while 300 epochs of MoCo v3 pre-training take 61.7 hours.
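
The speedup can be worked out directly from the two reported wall-clock figures. This takes them at face value and assumes they were measured on the same hardware, which this section does not state.

```python
videomae_hours, videomae_epochs = 19.5, 800   # reported VideoMAE pre-training
moco_hours, moco_epochs = 61.7, 300           # reported MoCo v3 pre-training

overall = moco_hours / videomae_hours
per_epoch = (moco_hours / moco_epochs) / (videomae_hours / videomae_epochs)
print(f"overall: {overall:.1f}x faster, per epoch: {per_epoch:.1f}x cheaper")
# prints "overall: 3.2x faster, per epoch: 8.4x cheaper"
```

VideoMAE runs more than 2.5 times as many epochs and still finishes about 3 times sooner, because each of its epochs costs roughly one eighth as much.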

Extremely high masking rate

The extremely high masking ratio is one of VideoMAE's core designs, and we explore it in depth on Kinetics-400 and Something-Something V2. As the figure shows, the network still performs very well on both datasets for downstream action recognition even at a very high masking ratio, up to 95%. This differs markedly from BERT in NLP (15%) and from MAE for images (75%). Video data contains temporal redundancy and temporal correlation, which let VideoMAE work with a much higher masking ratio than is used for images or natural language.

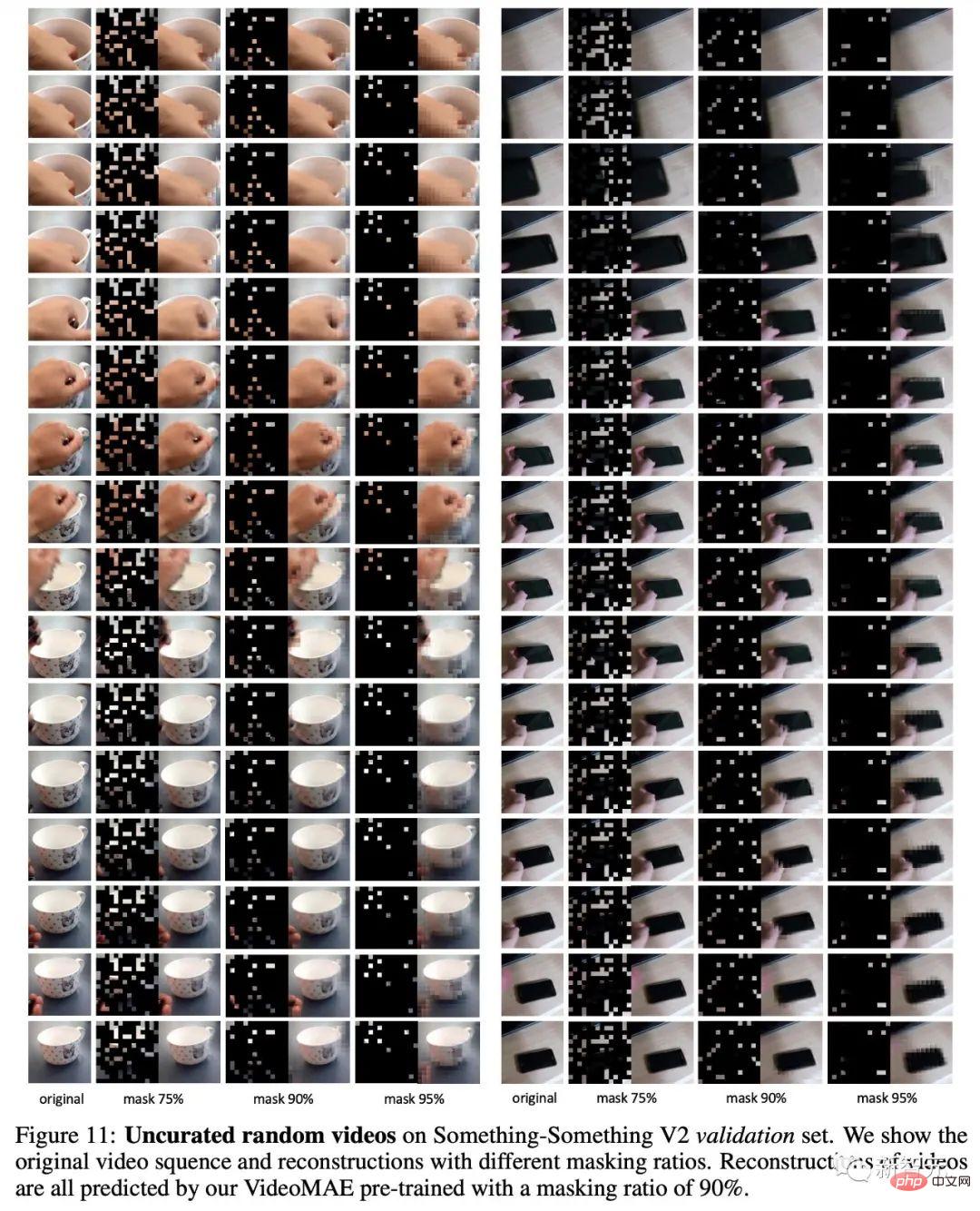

We also visualize reconstructions from the pre-trained VideoMAE. As the figure shows, VideoMAE produces satisfactory reconstructions even at extremely high masking ratios. This suggests that it learns to extract spatiotemporal features from video.

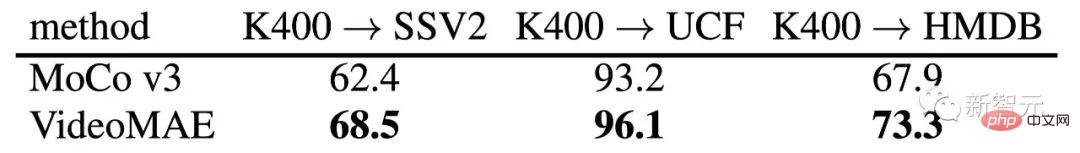

To further study the features learned by VideoMAE, this section evaluates the generalization and transfer ability of the pre-trained model. The table above shows how VideoMAE pre-trained on Kinetics-400 transfers to Something-Something V2, UCF101 and HMDB51. It also includes a model pre-trained with MoCo v3 for comparison.

The VideoMAE-pre-trained model transfers and generalizes better than the MoCo v3 one, indicating that VideoMAE learns more transferable representations. VideoMAE pre-trained on Kinetics-400 also outperforms VideoMAE pre-trained directly on UCF101 and HMDB51. On Something-Something V2, however, the Kinetics-400-pre-trained model transfers less well than VideoMAE pre-trained on Something-Something V2 itself.

To explore the reason for this inconsistency, we reduced the number of pre-training videos on Something-Something V2 in two settings:

(1) pre-training for the same number of epochs;
(2) pre-training for the same number of iterations.

The figure shows that when the number of pre-training samples is reduced, training for more iterations still improves performance. Even with only 42,000 pre-training videos, VideoMAE trained directly on Something-Something V2 beats the model pre-trained on 240,000 Kinetics-400 videos (68.7% vs. 68.5%).

This finding suggests that domain shift is another important factor in video self-supervised pre-training. When the pre-training and target datasets differ in domain, the quality of the pre-training data matters more than its quantity. The result also indirectly confirms that VideoMAE is a data-efficient learner for video self-supervised pre-training.

Something-Something V2 data set experimental results

Kinetics-400 data set experimental results

UCF101 and HMDB51 data set experimental results

Without any additional data, VideoMAE reaches 75.4% and 87.4% Top-1 accuracy on Something-Something V2 and Kinetics-400, respectively. The current state-of-the-art methods on Something-Something V2 rely heavily on models pre-trained on external datasets for initialization. VideoMAE, in contrast, outperforms the previous best method by about 5% without any external data. VideoMAE also performs very well on Kinetics-400.

Video data is limited on UCF101, which has fewer than 10,000 training videos, and on HMDB51, which has only about 3,500. On these small-scale datasets, VideoMAE still far surpasses the previous best methods without using any additional image or video data.

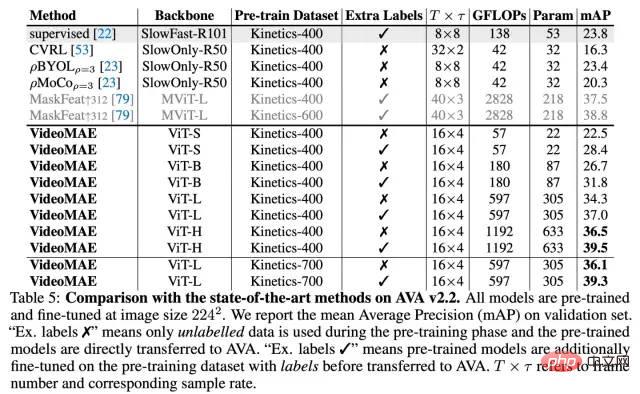

AVA v2.2 data set experimental results

Beyond conventional action classification, we evaluate VideoMAE's representations on video action detection, a more fine-grained understanding task, using the AVA v2.2 dataset. We first load the model pre-trained on Kinetics-400 and then fine-tune ViT with supervision. The table shows that the ViT pre-trained by VideoMAE achieves very good results on AVA v2.2.

If the self-supervised pre-trained ViT is additionally fine-tuned with supervision on Kinetics-400, it performs even better on action detection, improving by 3 to 6 mAP. This shows that supervised fine-tuning on the upstream dataset, before transferring to downstream tasks, can further improve a VideoMAE self-supervised pre-trained model.

We open sourced the VideoMAE model and code in April this year and received continued attention and recognition from the community.

According to the Papers with Code leaderboards, VideoMAE has held the top spot on Something-Something V2[1] and AVA 2.2[2] for half a year (from the end of March 2022 to the present). Among methods that use no external data, VideoMAE's results on Kinetics-400[3], UCF101[4] and HMDB51[5] are also the best so far.

https://huggingface.co/docs/transformers/main/en/model_doc/videomae

A few months ago, the VideoMAE model was added to Hugging Face's official Transformers repository. It was the first video understanding model included there! To some extent, this also reflects the community's recognition of our work. We hope our work provides a simple and efficient baseline for Transformer-based video pre-training and inspires future Transformer-based video understanding methods.

https://github.com/open-mmlab/mmaction2/tree/dev-1.x/configs/recognition/videomae

The video understanding codebase MMAction2 also supports inference with the VideoMAE model.

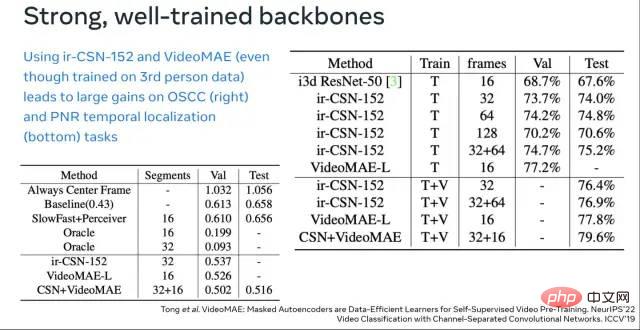

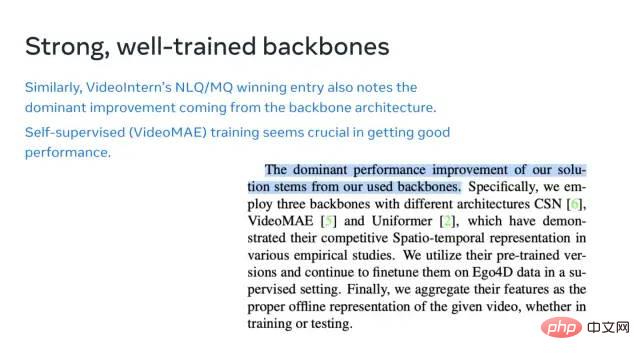

At the recently concluded 2nd International Ego4D Workshop at ECCV 2022, VideoMAE quickly became a powerful tool for challenge participants. Shanghai AI Laboratory won several sub-tracks of this Ego4D Challenge, with VideoMAE as an important backbone that provided strong video features for their solution. Notably, the first figure above shows VideoMAE (ViT-L) pre-trained only on Kinetics-400 outperforming ir-CSN-152 pre-trained on the IG-65M video dataset. IG-65M has roughly 300 times as many samples as Kinetics-400. This further confirms the strong representations learned by VideoMAE pre-training.

The main contributions of our work include the following three aspects:

• We are the first to propose VideoMAE, a ViT-based masking-and-reconstruction framework for video self-supervised pre-training. VideoMAE achieves excellent performance even when pre-trained on smaller video datasets. To address the "information leakage" caused by temporal redundancy and temporal correlation, we propose tube masking with an extremely high masking ratio. Experiments show that this design is key to VideoMAE's state-of-the-art results. In addition, VideoMAE's asymmetric encoder-decoder architecture greatly reduces the computation, and hence the time, of pre-training.

• VideoMAE extends experience from NLP and image understanding to video understanding in a natural but valuable way. It shows that a simple masking-and-reconstruction proxy task is a simple yet very effective solution for video self-supervised pre-training. ViT models pre-trained with VideoMAE significantly outperform models trained from scratch or pre-trained with contrastive learning on downstream video understanding tasks, such as action recognition and action detection.

• We made two interesting findings that previous work in NLP and image understanding may have overlooked. (1) VideoMAE is a data-efficient learner. Even on HMDB51, which has only about 3,500 videos, VideoMAE can complete self-supervised pre-training and achieve downstream classification results far beyond those of other methods. (2) For video self-supervised pre-training, when there is a clear domain gap between the pre-training and downstream datasets, the quality of the video data may matter more than its quantity.
