ChatGPT could not have become a model popular all over the world without the enormous computing power behind it.

According to published figures, ChatGPT's total compute consumption is approximately 3,640 PF-days (that is, at one quadrillion calculations per second, the computation would take 3,640 days).
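To make the PF-days unit more concrete, here is a small Python sketch that converts it into a total count of floating-point operations. It assumes the usual definition (1 PF-day = 10^15 operations per second sustained for one day, or 86,400 seconds). The 3,640 figure is the one quoted above; the function name is illustrative only.

```python
# One petaflop/s = 10**15 floating-point operations per second.
PETAFLOP_PER_SECOND = 10**15
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def pf_days_to_flops(pf_days: float) -> float:
    """Convert a compute budget in PF-days to total floating-point operations."""
    return float(pf_days) * PETAFLOP_PER_SECOND * SECONDS_PER_DAY


total = pf_days_to_flops(3640)
print(f"{total:.3e} FLOPs")  # prints "3.145e+23 FLOPs"
```

In other words, 3,640 PF-days corresponds to roughly 3.1 × 10^23 operations.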

So, how did the supercomputer that Microsoft built specifically for OpenAI come into being?

On Monday, Microsoft published two back-to-back posts on its official blog revealing the details of this enormously expensive supercomputer, along with a major Azure upgrade that adds thousands of Nvidia's latest and most powerful H100 GPUs and faster InfiniBand interconnect technology.

Building on this, Microsoft also officially announced its latest ND H100 v5 virtual machine, with the following specifications:

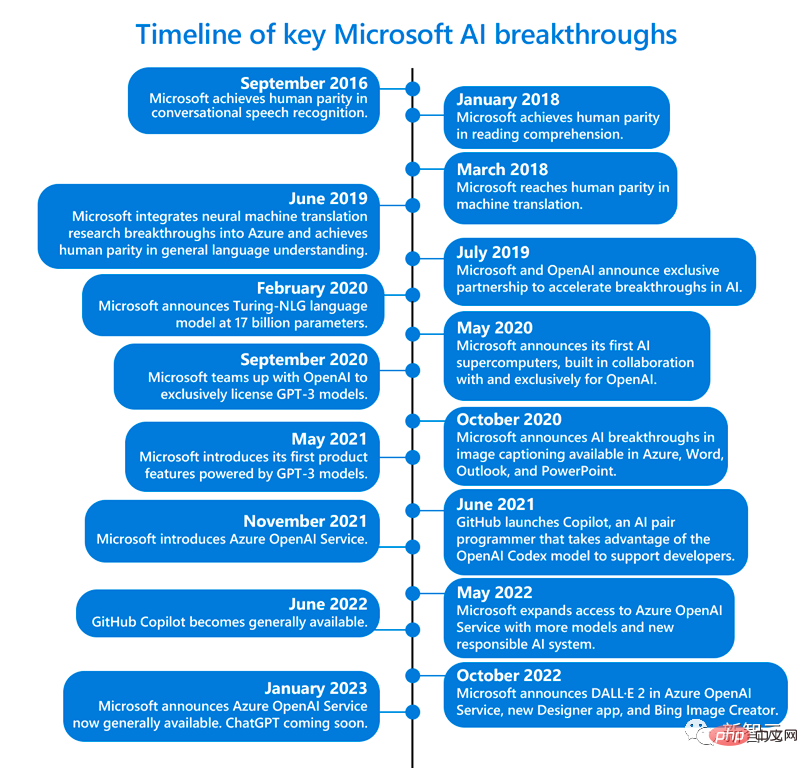

About five years ago, OpenAI brought Microsoft a bold idea: build an artificial intelligence system that could forever change the way humans and computers interact.

At the time, no one could have imagined that this would mean AI creating any picture a person describes in plain language, or people using chatbots to write poems, lyrics, papers, emails, menus...

To build this system, OpenAI needed an enormous amount of computing power, the kind that can truly support computation at ultra-large scale.

But the question was: could Microsoft deliver it?

After all, no hardware at the time could meet OpenAI's needs, and it was uncertain whether building such a huge supercomputer on the Azure cloud would simply crash the system.

So Microsoft set out on a difficult exploration.

Nidhi Chappell (left), product lead for Azure high-performance computing and artificial intelligence at Microsoft, and Phil Waymouth, senior director of strategic partnerships at Microsoft (right)

To build the supercomputer that supports the OpenAI project, Microsoft spent hundreds of millions of dollars linking tens of thousands of Nvidia A100 chips together on the Azure cloud computing platform and redesigning its server racks.

To tailor this supercomputing platform to OpenAI, Microsoft also worked closely with the company, staying on top of its most critical needs when training AI.

How much did such a big project cost? Scott Guthrie, Microsoft's executive vice president of cloud computing and artificial intelligence, would not disclose the exact amount, but said it was "probably more than" a few hundred million dollars.

OpenAI's problems

Phil Waymouth, Microsoft's executive in charge of strategic partnerships, pointed out that the scale of cloud computing infrastructure OpenAI needed to train its models was unprecedented in the industry.

The networked GPU clusters were growing exponentially, beyond anything anyone in the industry had ever attempted to build.

Microsoft committed to the partnership because it firmly believed that infrastructure at this unprecedented scale would change history, creating new AI and new programming platforms that give customers products and services that genuinely serve their interests.

In hindsight, those hundreds of millions of dollars were clearly not wasted: the bet paid off.

On this supercomputer, OpenAI has trained increasingly powerful models and unlocked remarkable capabilities in AI tools. The result was ChatGPT, which has all but set off a fourth industrial revolution.

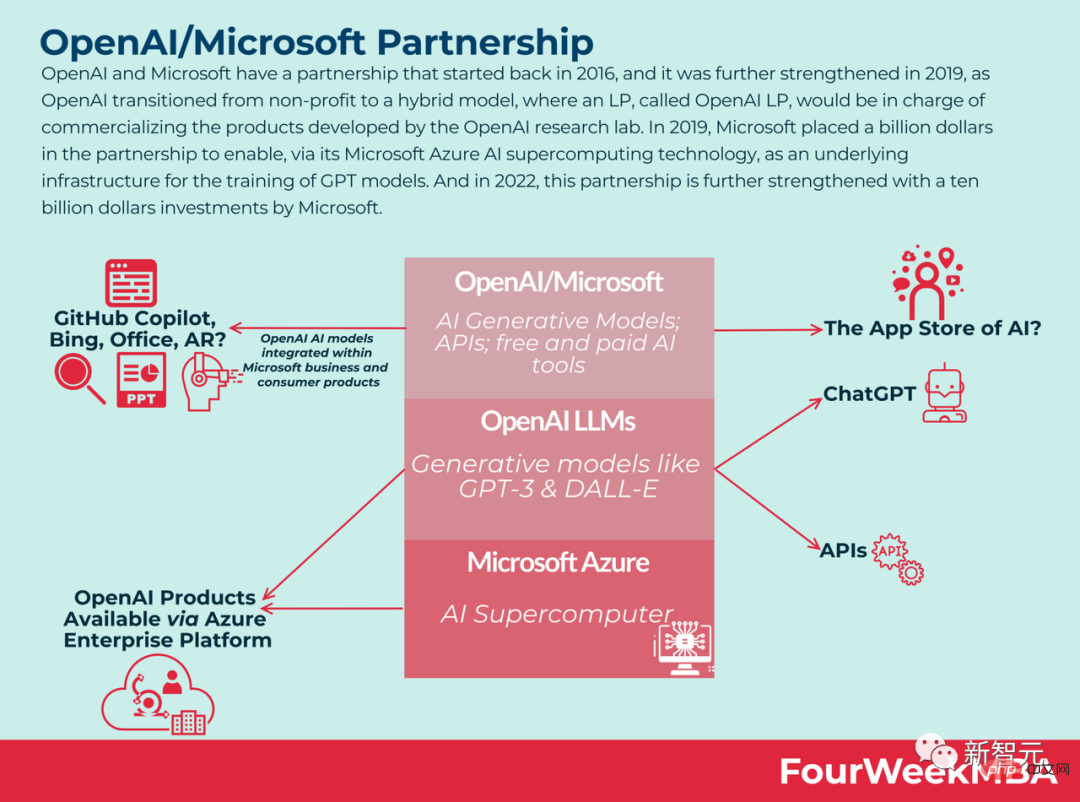

Pleased with the results, Microsoft poured another $10 billion into OpenAI in early January.

Microsoft's ambition to push the boundaries of AI supercomputing has paid off, and it reflects a broader shift from laboratory research to the industrialization of AI.

Currently, Microsoft’s office software empire has begun to take shape.

The ChatGPT-powered Bing can help us plan vacations; the chatbot in Viva Sales can help marketers draft emails; GitHub Copilot can help developers complete their code; and the Azure OpenAI Service gives us access to OpenAI's large language models together with Azure's enterprise-grade features.

In fact, last November Microsoft officially announced that it would team up with Nvidia to build "one of the most powerful AI supercomputers in the world" to handle the huge computing load required to train and scale AI.

This supercomputer is built on Microsoft's Azure cloud infrastructure and uses tens of thousands of Nvidia H100 and A100 Tensor Core GPUs, along with Nvidia's Quantum-2 InfiniBand networking platform.

Nvidia said in a statement that the supercomputer can be used to study and accelerate generative AI models such as DALL-E and Stable Diffusion.

As AI researchers began using more powerful GPUs for more complex AI workloads, they saw greater potential in AI models that understand nuance well enough to handle many different language tasks at once.

Simply put, the larger the model, the more data it has, and the longer it is trained, the better its accuracy.

But these larger models quickly hit the limits of existing computing resources. Microsoft understood what kind of supercomputer OpenAI needed and how large it had to be.
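Why bigger models run into compute limits so quickly can be illustrated with a widely used rule of thumb that does not come from Microsoft's posts: training a dense transformer costs roughly 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens. The sketch below assumes that approximation, and the model sizes in it are hypothetical values chosen only for illustration.

```python
SECONDS_PER_DAY = 86_400
PETAFLOP_PER_SECOND = 1e15


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute with the 6 * N * D rule of thumb (an assumption)."""
    return 6.0 * params * tokens


def to_pf_days(flops: float) -> float:
    """Express a FLOP count in PF-days (10**15 FLOP/s sustained for one day)."""
    return flops / (PETAFLOP_PER_SECOND * SECONDS_PER_DAY)


# Hypothetical configurations: each row doubles both model size and data.
for params, tokens in [(1e9, 2e10), (2e9, 4e10), (4e9, 8e10)]:
    budget = to_pf_days(training_flops(params, tokens))
    print(f"{params:.0e} params, {tokens:.0e} tokens -> {budget:.1f} PF-days")
```

Under this approximation, doubling both the model and its training data quadruples the compute, so each step up in scale multiplies the hardware and time required.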

Clearly, this is not simply a matter of buying a bunch of GPUs, wiring them together, and expecting them to work as one.

Nidhi Chappell, product lead for Azure high-performance computing and artificial intelligence at Microsoft, said: "We need to allow larger models to train for longer, which means not only do you need to have the largest infrastructure, you also have to make it run reliably over the long term."

Microsoft also had to make sure it could cool all those machines and chips, said Alistair Speirs, director of global infrastructure at Azure, for example by using outside air in cooler climates and high-tech evaporative coolers in hotter ones.

And because all the machines start up at the same time, Microsoft also had to think about where to place them relative to the power supply. It is the data center version of what can happen when you switch on the microwave, toaster, and vacuum cleaner in your kitchen all at once.

What is the key to achieving these breakthroughs?

The challenge is how to build, operate and maintain tens of thousands of co-located GPUs interconnected on a high-throughput, low-latency InfiniBand network.

This scale far exceeded anything GPU and networking vendors had tested. It was completely uncharted territory, and no one knew whether the hardware would break at this scale.

Nidhi Chappell, head of high-performance computing and artificial intelligence products at Microsoft Azure, explained that when training a large language model (LLM), the massive computation involved is typically partitioned across thousands of GPUs in a cluster.

In a phase called allreduce, the GPUs exchange information about the work they have done. The InfiniBand network has to speed up this exchange so that the GPUs can finish it before the next block of computation begins.
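Microsoft's posts do not say which allreduce algorithm its software stack uses. The Python sketch below simulates one well-known variant, ring allreduce, purely to illustrate what the GPUs exchange during this phase. Each "GPU" here is just a Python list, and the function name and the requirement that the buffer length divide evenly by the number of ranks are simplifying assumptions for the example.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate ring allreduce (sum) across len(buffers) ranks.

    Each inner list stands in for one GPU's buffer (for example, gradients).
    Returns new buffers in which every rank holds the element-wise sum.
    """
    if not buffers:
        return []
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all buffers must have the same length")
    if length % n != 0:
        raise ValueError("simplification: length must be divisible by rank count")
    chunk = length // n
    data = [list(b) for b in buffers]  # copy so the inputs stay untouched

    def span(c: int) -> range:
        return range(c * chunk, (c + 1) * chunk)

    # Phase 1, reduce-scatter: in step s, rank r sends chunk (r - s) % n to its
    # right neighbour, which adds it to its own copy. Payloads are captured
    # before any writes so all sends in a step happen "simultaneously".
    # After n - 1 steps, rank r holds the fully summed chunk (r + 1) % n.
    for step in range(n - 1):
        messages = [(r, (r - step) % n) for r in range(n)]
        payloads = [[data[r][i] for i in span(c)] for r, c in messages]
        for (r, c), payload in zip(messages, payloads):
            dst = (r + 1) % n
            for i, v in zip(span(c), payload):
                data[dst][i] += v

    # Phase 2, all-gather: in step s, rank r forwards chunk (r + 1 - s) % n,
    # and the receiver overwrites its stale copy with the finished values.
    for step in range(n - 1):
        messages = [(r, (r + 1 - step) % n) for r in range(n)]
        payloads = [[data[r][i] for i in span(c)] for r, c in messages]
        for (r, c), payload in zip(messages, payloads):
            dst = (r + 1) % n
            for i, v in zip(span(c), payload):
                data[dst][i] = v

    return data


gpus = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0], [100.0, 200.0, 300.0]]
print(ring_allreduce(gpus))  # every rank ends with [111.0, 222.0, 333.0]
```

In the ring variant, each rank sends and receives 2(n - 1) chunks of size L/n, so per-rank traffic stays close to 2L however many GPUs join the ring. What grows with cluster size is the number of sequential steps, which is why fast, low-latency links matter so much at this scale.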

Because these jobs span thousands of GPUs, Chappell said, achieving optimal performance requires not only reliable infrastructure but also a great many system-level optimizations, distilled from experience across many generations.

These system-level optimizations include software that makes effective use of the GPUs and networking equipment.

Over the past few years, Microsoft has developed this technology, expanding its ability to train models with tens of trillions of parameters while reducing both the cost of training and the resources and time needed to deliver these models in production.

Waymouth noted that Microsoft and its partners have also been steadily increasing the capacity of the GPU clusters, developing the InfiniBand network, and seeing how far they can push the data center infrastructure needed to keep the clusters running, including cooling systems, uninterruptible power supplies, and backup generators.

Eric Boyd, corporate vice president of Microsoft AI Platform, said that this kind of supercomputing power, optimized for large language model training and the next wave of AI innovation, is already directly available through Azure cloud services.

Microsoft has also gained a great deal of experience from its work with OpenAI, so when other partners want the same infrastructure, it can provide that too.

Microsoft's Azure data centers now cover more than 60 regions around the world.

New virtual machine: ND H100 v5

Microsoft has continued to build on this infrastructure.

Today, Microsoft officially announced new massively scalable virtual machines that integrate the latest NVIDIA H100 Tensor Core GPU and NVIDIA Quantum-2 InfiniBand network.

With these virtual machines, Microsoft can offer customers infrastructure that scales to match any AI task. According to Microsoft, Azure's new ND H100 v5 virtual machines give developers superior performance when harnessing thousands of GPUs.

