In recent years, reinforcement learning through self-play has achieved superhuman performance in games such as Go and chess. Moreover, an idealized version of self-play converges to a Nash equilibrium. The Nash equilibrium is one of the best-known concepts in game theory, proposed by John Nash, a pioneer of game theory and Nobel Prize winner. A related concept is the dominant strategy: a strategy that a player will choose regardless of what the other players choose. If every player's strategy is optimal given the fixed strategies of all other players, then that combination of strategies is defined as a Nash equilibrium.
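The definition can be made concrete with a small check. The sketch below is only an illustration: the payoff table is a hypothetical Prisoner's Dilemma, not something taken from the paper, and the function names are invented for this example. It enumerates the pure-strategy profiles of a two-player game and keeps those where neither player can gain by changing strategy alone.

```python
from itertools import product

# Hypothetical payoff table (a Prisoner's Dilemma), chosen for illustration.
# PAYOFFS[(a1, a2)] = (payoff to player 1, payoff to player 2)
ACTIONS = ["cooperate", "defect"]
PAYOFFS = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}


def is_nash_equilibrium(a1, a2):
    """Return True if neither player gains by deviating unilaterally."""
    u1, u2 = PAYOFFS[(a1, a2)]
    # Player 1 varies its action while player 2's action stays fixed.
    best_for_1 = all(PAYOFFS[(alt, a2)][0] <= u1 for alt in ACTIONS)
    # Player 2 varies its action while player 1's action stays fixed.
    best_for_2 = all(PAYOFFS[(a1, alt)][1] <= u2 for alt in ACTIONS)
    return best_for_1 and best_for_2


def pure_nash_equilibria():
    """List every pure-strategy profile that is a Nash equilibrium."""
    return [
        (a1, a2)
        for a1, a2 in product(ACTIONS, ACTIONS)
        if is_nash_equilibrium(a1, a2)
    ]


print(pure_nash_equilibria())  # [('defect', 'defect')]
```

In this toy game "defect" is also a dominant strategy for both players, since it is the better reply whatever the other player does.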

Previous research has shown that seemingly effective continuous-control policies trained through self-play can be exploited by adversarial strategies, suggesting that self-play may not be as powerful as previously thought. This raises a question: are adversarial strategies a way to beat self-play, or are self-play policies themselves simply not capable enough?

To answer this question, researchers from MIT, UC Berkeley, and other institutions chose a domain in which self-play excels: Go. Specifically, they attacked KataGo, the strongest publicly available Go AI system. Against a fixed network (a frozen KataGo), they trained an end-to-end adversarial strategy using only 0.3% of the compute used to train KataGo. This strategy achieved a 99% win rate against KataGo playing without search, a setting in which KataGo is comparable in strength to a top-100 European Go player. When KataGo used enough search to approach superhuman level, the attacker's win rate was still 50%. Crucially, the attacker (that is, the strategy learned in this study) does not win by learning a generally strong Go strategy.

A word about KataGo: as the paper notes, KataGo was the most powerful publicly available Go AI system at the time of writing. With search, KataGo is extremely strong, having defeated ELF OpenGo and Leela Zero, which are themselves superhuman. That the attacker in this study defeats KataGo is therefore a striking result.

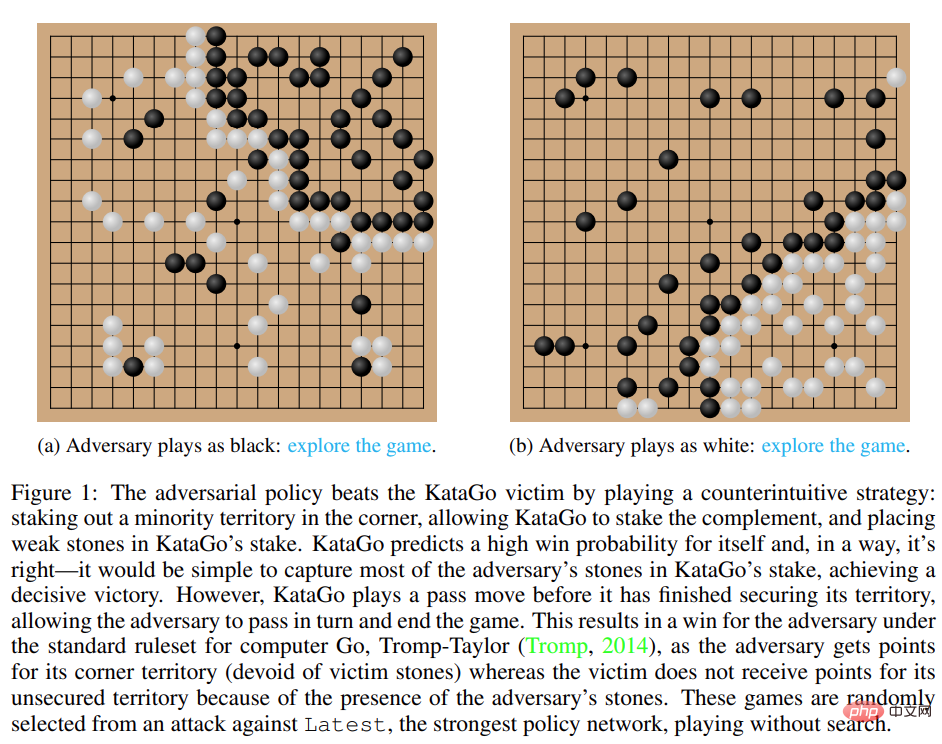

Figure 1: Adversarial strategy defeats KataGo victim.

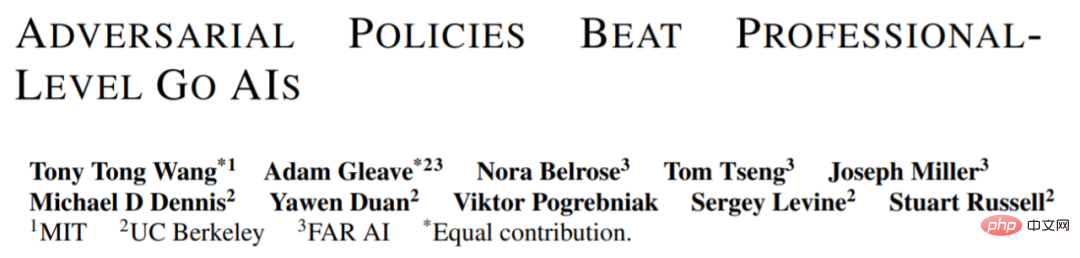

Interestingly, the adversarial strategy proposed in this study cannot defeat human players, and even amateur players can significantly outperform the proposed model.

Previous methods such as KataGo and AlphaZero train an agent through self-play, in which the agent's opponent is the agent itself. In this research, by contrast, games are played between an attacker (adversary) and a fixed victim agent, and the attacker is trained on these games. The goal is for the attacker to learn to exploit its interactions with the victim agent rather than merely imitate its opponent. This process is called "victim-play".

In conventional self-play, the agent models its opponent's moves by sampling from its own policy network. This approach works in self-play, where the opponent really is the agent itself. In victim-play, however, modeling the victim with the attacker's policy network is the wrong approach. To solve this problem, the study proposes two variants of adversarial MCTS (A-MCTS): A-MCTS-S and A-MCTS-R.
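The difference between the two ways of modeling the opponent can be sketched in a few lines. This is an illustrative toy, not the paper's A-MCTS implementation: the policies are hypothetical stand-ins that return fixed move probabilities, and it assumes the frozen victim's policy can be queried directly when the attacker simulates the victim's turn.

```python
import random


def sample_move(policy, state, rng):
    """Sample a move from a policy that maps a state to {move: probability}."""
    moves, probs = zip(*policy(state).items())
    return rng.choices(moves, weights=probs, k=1)[0]


def model_opponent_move(state, attacker_policy, victim_policy, mode, rng):
    """Choose the opponent's move inside the attacker's search simulation.

    mode="self-play":   the opponent is modeled with the attacker's own policy.
    mode="victim-play": the opponent is modeled with the frozen victim's policy.
    """
    if mode == "self-play":
        return sample_move(attacker_policy, state, rng)
    if mode == "victim-play":
        return sample_move(victim_policy, state, rng)
    raise ValueError(f"unknown mode: {mode}")


# Toy stand-ins for policy networks (hypothetical, not KataGo's API).
def attacker_policy(state):
    return {"A": 0.9, "B": 0.1}


def victim_policy(state):
    # Deterministic so the example is easy to check by hand.
    return {"pass": 1.0}


rng = random.Random(0)
print(model_opponent_move("s0", attacker_policy, victim_policy, "victim-play", rng))
```

In the self-play mode the simulated opponent would play "A" or "B", moves the real victim in this toy never makes, which is why a search built on that model would plan against the wrong opponent.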

During training, the attacker played games against frozen KataGo victims. Against a KataGo victim playing without search, the attacker achieves a win rate above 99%; in this setting KataGo is comparable to a top-100 European Go player. Furthermore, the trained attacker achieved a win rate of over 80% against a victim searching with 64 visits, a strength the researchers estimate is comparable to the best human Go players.

It is worth noting that these games show that the adversarial strategy does not win by playing Go well. Instead, it tricks KataGo into ending the game early in a position favorable to the attacker. In fact, while the attacker was able to exploit a policy comparable in strength to the best human Go players, it was easily defeated by human amateurs.

To test the attacker's ability against humans, the study had Tony Tong Wang, the first author of the paper, play against the attacker model. Wang had never learned Go before this research project, yet he beat the attacker model by a wide margin. This shows that although the adversarial strategy can defeat an AI model that itself can defeat top human players, it cannot defeat human players. This may indicate that some Go AI models have bugs.

Attacking the Victim Policy Network

First, the researchers evaluated their attack method against KataGo (Wu, 2019) and found that the A-MCTS-S algorithm achieved a win rate of more than 99% against Latest (KataGo's latest network) playing without search.

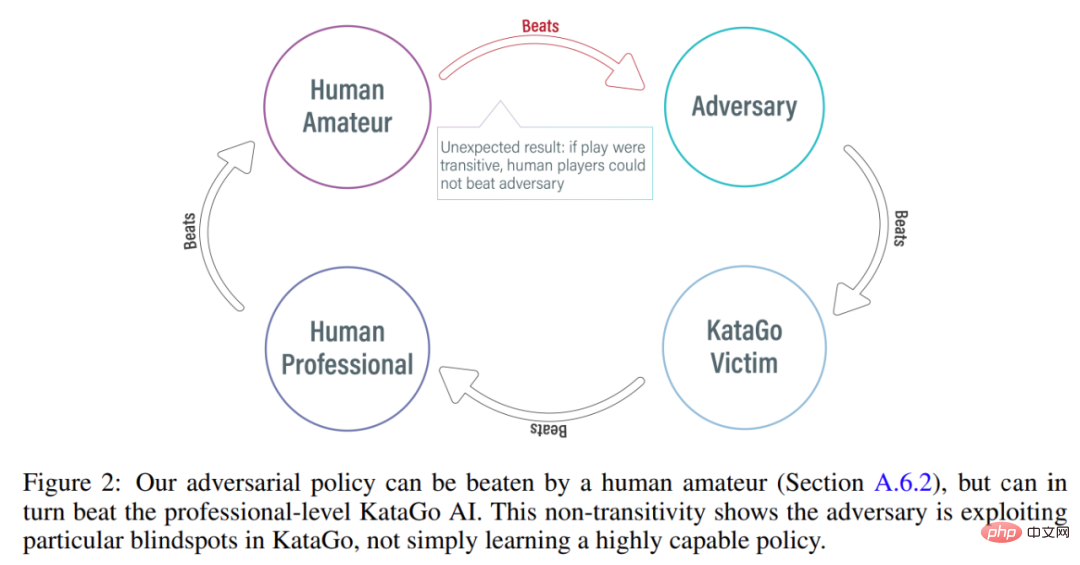

As shown in Figure 3, the researchers evaluated the adversarial strategy against the Initial and Latest policy networks. They found that for most of training the attacker achieved a high win rate (above 90%) against both victims. As training progresses, however, the attacker overfits to Latest, and its win rate against Initial drops to about 20%.

The researchers also evaluated the best adversarial strategy checkpoint against Latest, where it achieved a win rate of over 99%. Moreover, this win rate was reached after training the adversarial strategy for only 3.4 × 10^7 time steps, which is 0.3% of the time steps used to train the victim.
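As a quick sanity check on these figures, the two numbers quoted above imply a rough size for the victim's training budget. The calculation below is only back-of-the-envelope arithmetic on the rounded values in this article (the variable names are invented here), so the result is an order-of-magnitude estimate rather than a figure reported by the paper.

```python
# Values quoted in the article (both are rounded).
attacker_steps = 3.4e7          # attacker training time steps
fraction_of_victim = 0.003      # 0.3% of the victim's time steps

# Implied victim training budget: attacker_steps / fraction.
implied_victim_steps = attacker_steps / fraction_of_victim

print(f"{implied_victim_steps:.2e}")  # 1.13e+10
```

In other words, the victim was trained for on the order of 10^10 time steps, roughly 330 times more than the attacker.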

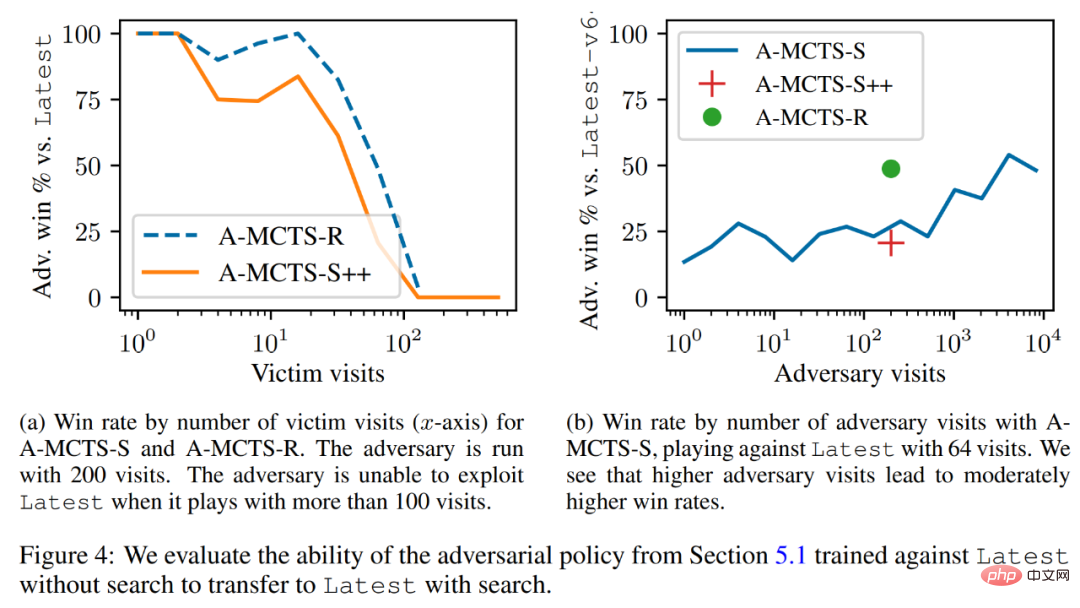

Transferring to Victims with Search

The researchers successfully transferred the adversarial strategy to the low-search regime, evaluating the strategy trained in the previous section against Latest with search enabled. As shown in Figure 4a below, they found that A-MCTS-S's win rate against the victim dropped to 80% when the victim searched with 32 visits. Note, however, that in this setting the victim is modeled as performing no search during both training and inference.

In addition, the researchers tested A-MCTS-R and found that it performed better, achieving a win rate of over 99% against Latest at 32 victim visits. At 128 victim visits, however, the win rate dropped below 10%.

In Figure 4b, the researchers show that when the attacker searches with 4096 visits, A-MCTS-S achieves a maximum win rate of 54% against Latest. This is very similar to the performance of A-MCTS-R at 200 visits, which achieved a 49% win rate.

Other Evaluations

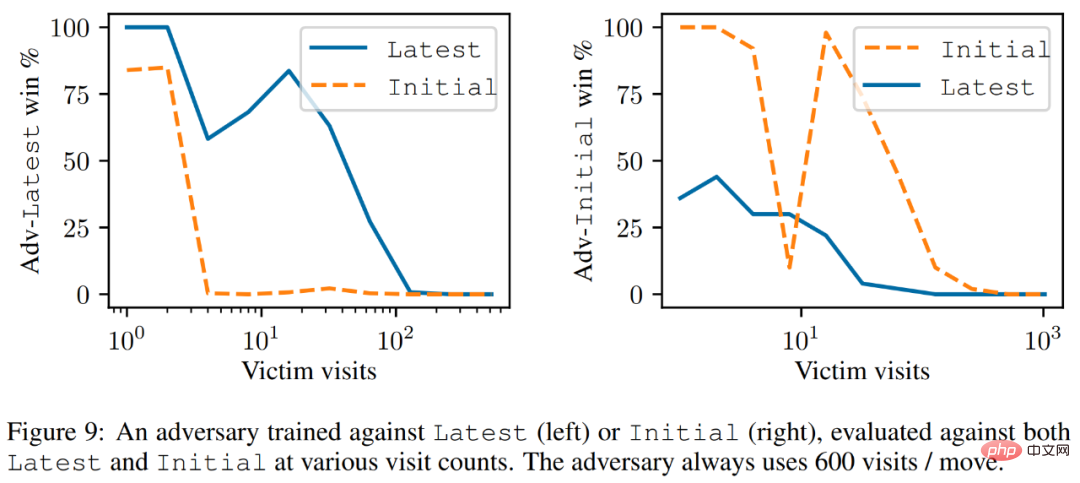

As shown in Figure 9 below, the researchers found that although Latest is the stronger agent, the attacker trained against Latest performs better against Latest than against Initial.

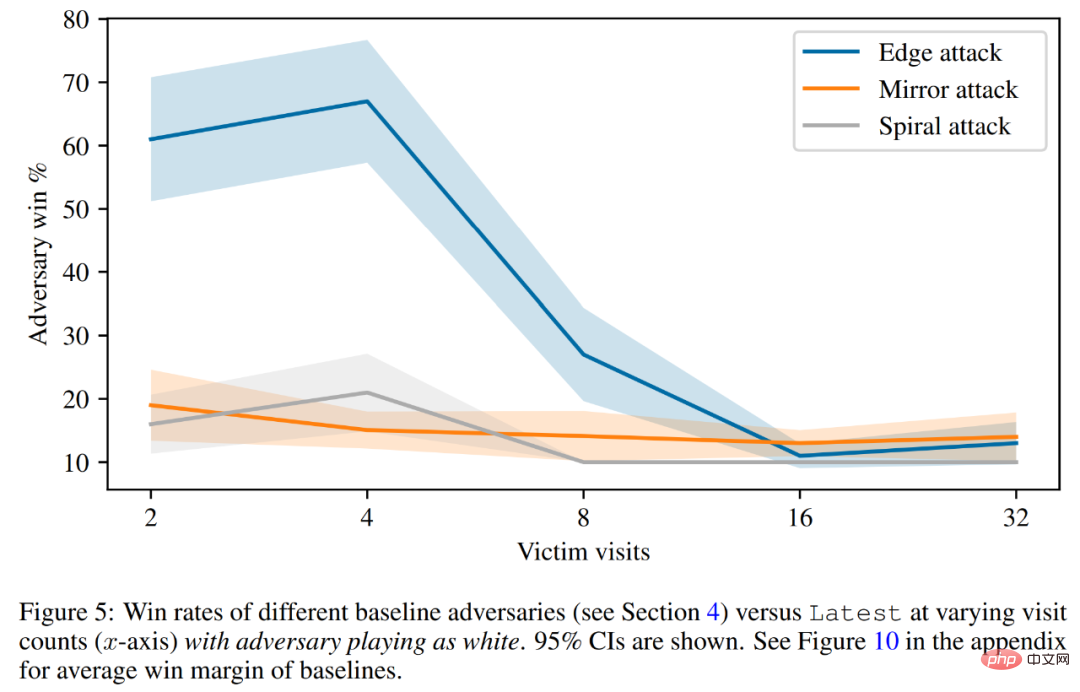

Finally, the researchers discussed how the attack works, including the victim's value predictions and an evaluation of a hard-coded defense. As shown in Figure 5 below, all baseline attacks perform significantly worse than the trained adversarial strategy.

Please refer to the original paper for more technical details.
