As one of the most prestigious AI conferences in the world, NeurIPS is a major event in the academic community every year. Its full name is Neural Information Processing Systems, and it is usually hosted by the NeurIPS Foundation in December.

Topics discussed at the conference include deep learning, computer vision, large-scale machine learning, learning theory, optimization, sparsity theory, and many other subfields.

This year's NeurIPS is the 36th edition and will run for two weeks, from November 28 to December 9.

The first week will be an in-person meeting at the Ernest N. Morial Convention Center in New Orleans, USA, and the second week will be an online meeting.

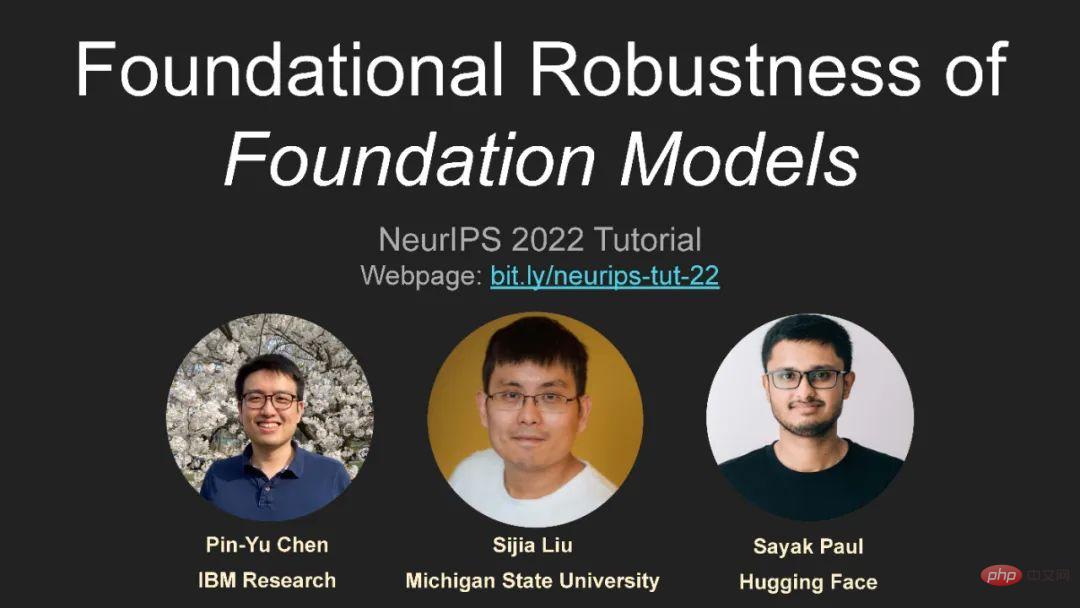

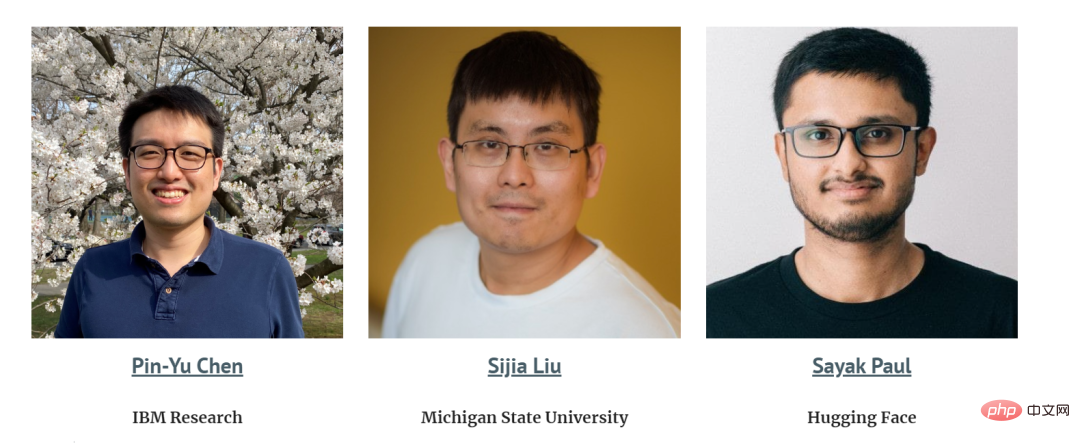

Researchers from IBM Research and other institutions present a tutorial on the robustness of large models, "Foundational Robustness of Foundation Models", which is well worth attention.

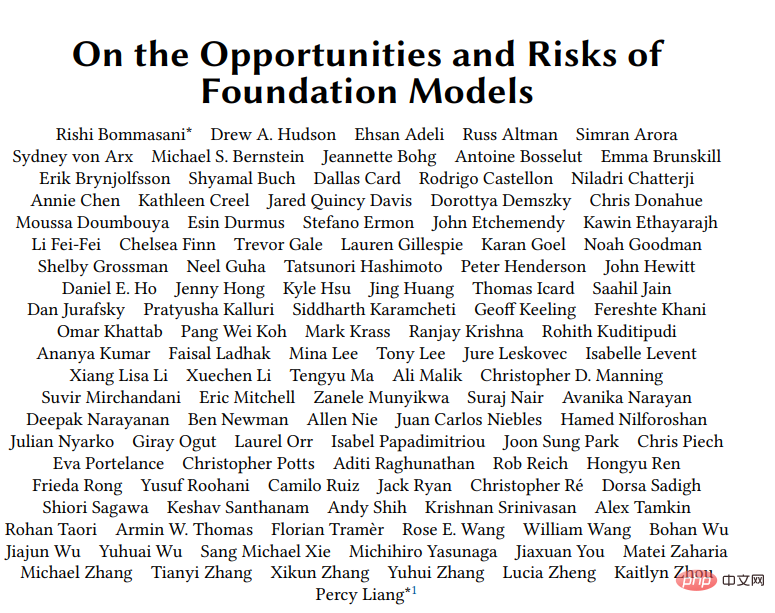

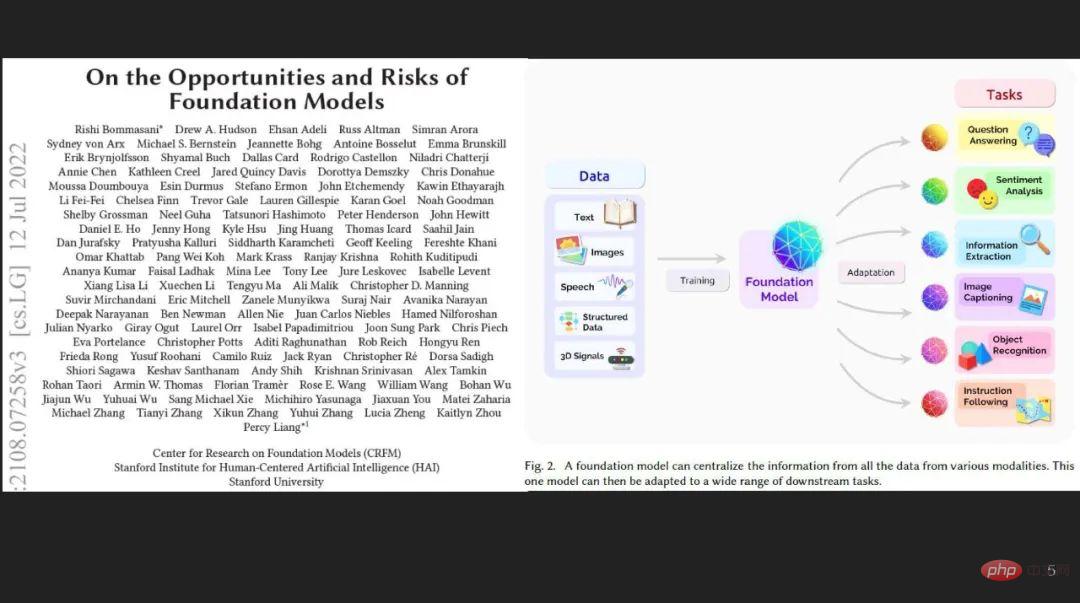

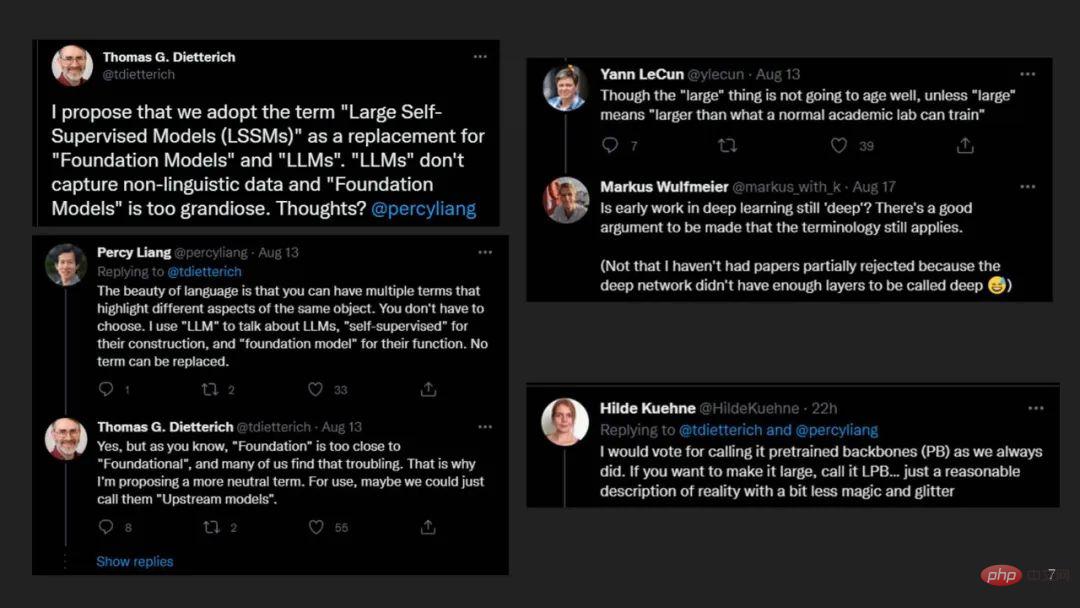

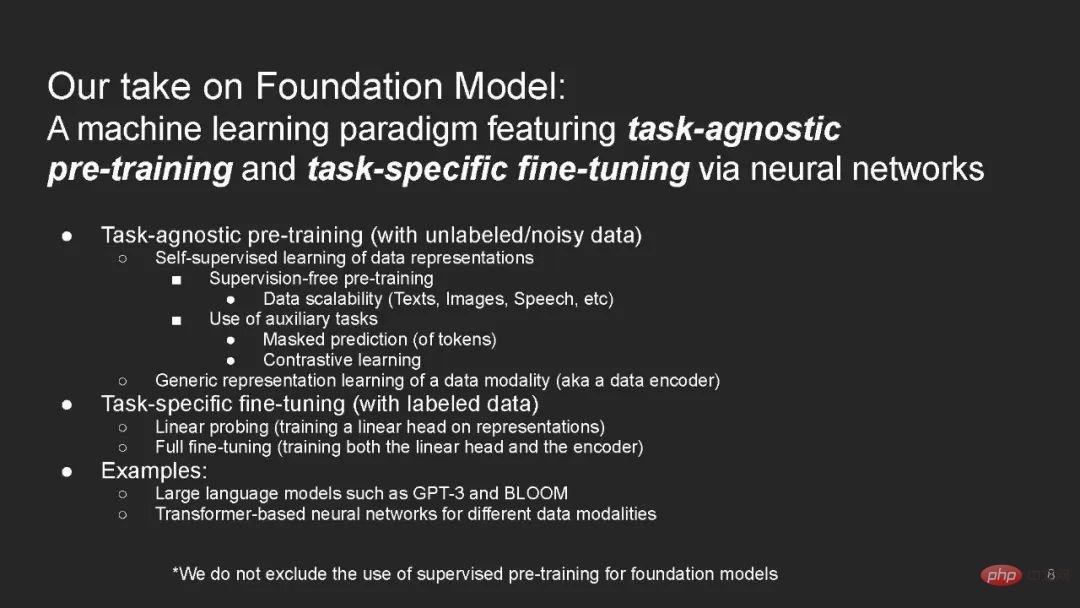

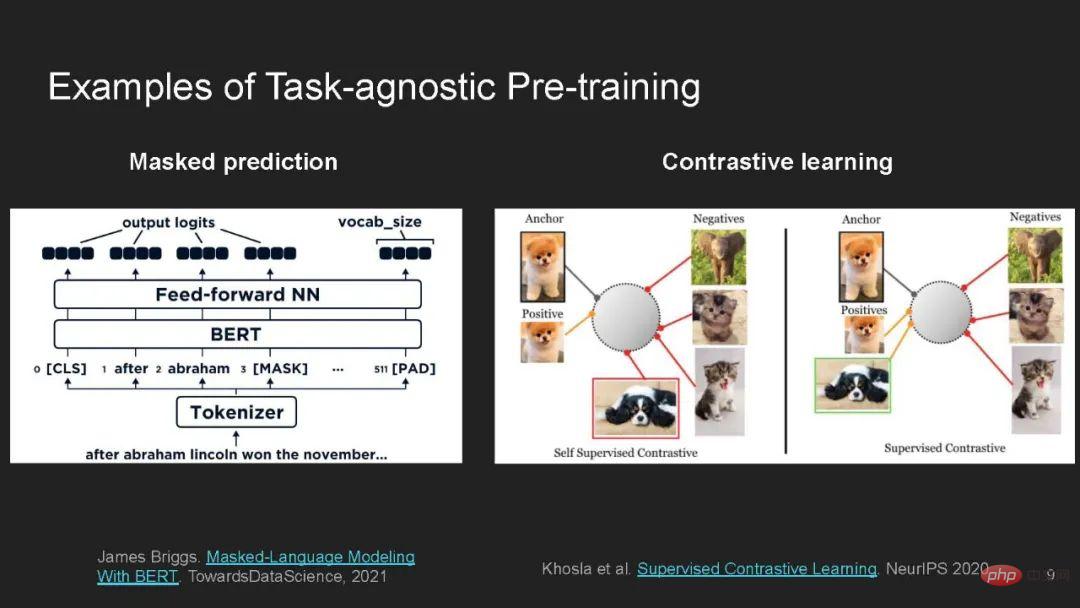

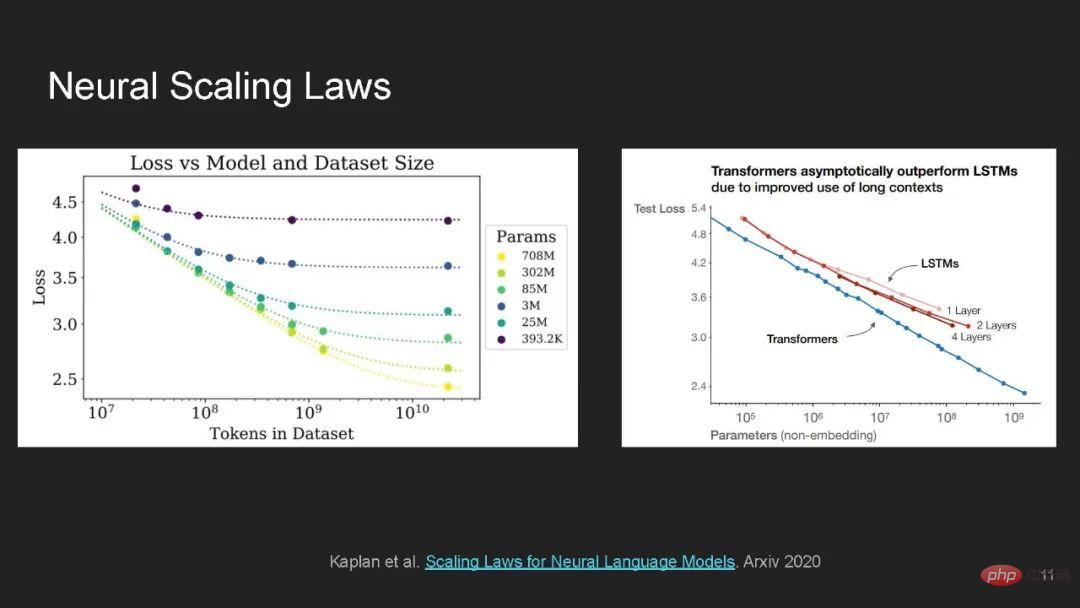

Foundation models use deep learning methods: they are pre-trained on large-scale unlabeled data and then fine-tuned with task-specific supervision. They have become a mainstream technique in machine learning.

Although foundation models hold a lot of promise for learning general representations and for few-shot/zero-shot generalization across domains and data modalities, the sheer volume of data and the complexity of the neural network architectures they use also pose unprecedented challenges and considerable risks in terms of robustness and privacy.

This tutorial aims to provide a Coursera-like online tutorial containing comprehensive lectures, a hands-on and interactive Jupyter/Colab live coding demo, and a panel discussion on different aspects of trustworthiness in foundation models.

https://sites.google.com/view/neurips2022-frfm-turotial

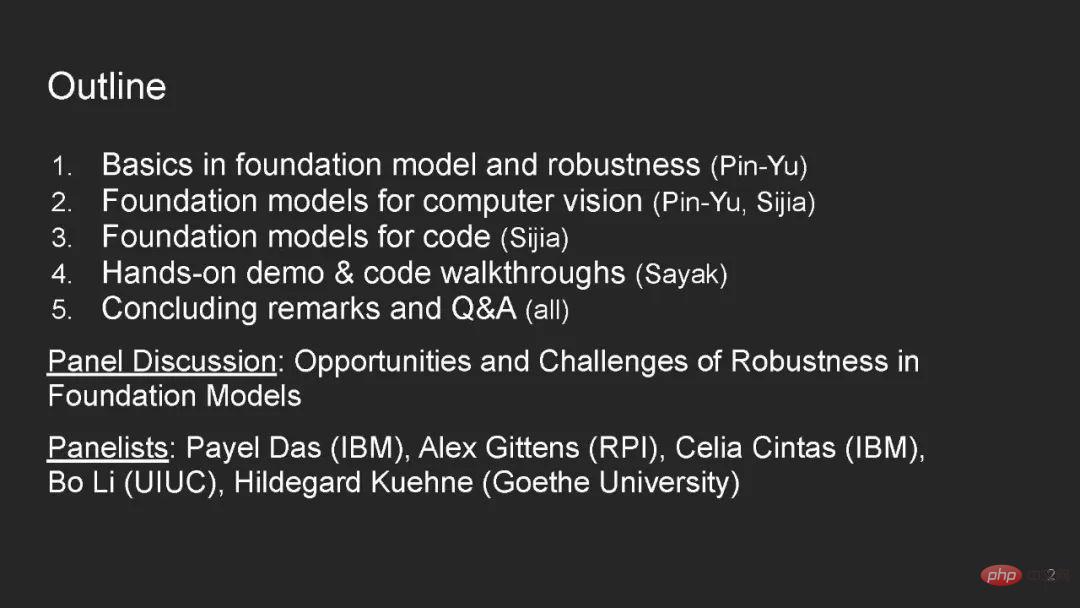


Real-world machine learning systems need to be robust to distribution shifts: they should work well on test distributions that differ from the training distribution.

High-stakes applications such as poverty mapping in under-resourced countries [Xie et al. 2016; Jean et al. 2016], self-driving cars [Yu et al. 2020a; Sun et al. 2020a], and medical diagnosis [AlBadawy et al. 2018; Dai and Gool 2018] require models to generalize well to environments not seen in the training data. For example, test samples may come from different countries, different driving conditions, or different hospitals.

Previous work has shown that these distribution shifts can cause large drops in performance even for current state-of-the-art models [Blitzer et al. 2006; Daumé III 2007; Sugiyama et al. 2007; Ganin and Lempitsky 2015; Peng et al. 2019; Kumar et al. 2020a; Arjovsky et al. 2019; Szegedy et al. 2014; Hendrycks and Dietterich 2019; Sagawa et al. 2020a; Recht et al. 2019; Abney 2007; Ruder and Plank 2018; Geirhos et al. 2018; Kumar et al. 2020b; Yu et al. 2020b; Geirhos et al. 2020; Xie et al. 2021a; Koh et al. 2021].

A foundation model is trained on a large and diverse unlabeled dataset sampled from a pre-training distribution, and can then be adapted to many downstream tasks.

For each downstream task, the foundation model is adapted on labeled in-distribution (ID) training data sampled from the task's training distribution, and then evaluated on an out-of-distribution (OOD) test distribution.

For example, a poverty map prediction model [Xie et al. 2016; Jean et al. 2016] can learn features that are useful for all countries from unlabeled satellite data around the world, then be fine-tuned on labeled examples from Nigeria, and finally be evaluated on Malawi, where labeled examples are lacking.
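The ID/OOD evaluation protocol described above can be illustrated with a toy regression. This is only a minimal sketch under stated assumptions, not the tutorial's code: it involves no foundation model, and the `sin` target, the input ranges, and the helper names `sample` and `mse` are all hypothetical choices made for illustration. A linear model is fit on inputs from one range (standing in for the labeled training region) and then scored both on held-out data from the same range (ID) and on data from a shifted range (OOD).

```python
import numpy as np

def sample(rng, low, high, n):
    """Draw n inputs uniformly from [low, high] with noisy labels y = sin(x) + noise."""
    x = rng.uniform(low, high, size=n)
    y = np.sin(x) + rng.normal(0.0, 0.05, size=n)
    return x, y

def mse(coeffs, x, y):
    """Mean squared error of the fitted polynomial on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

rng = np.random.default_rng(0)

# Assumed toy setup: inputs in [0, 1] are "in-distribution",
# inputs in [2, 3] are "out-of-distribution".
x_train, y_train = sample(rng, 0.0, 1.0, 500)  # labeled ID training data
x_id, y_id = sample(rng, 0.0, 1.0, 500)        # held-out ID test data
x_ood, y_ood = sample(rng, 2.0, 3.0, 500)      # OOD test data

# Fit a degree-1 polynomial (a line) on the ID training data only.
coeffs = np.polyfit(x_train, y_train, deg=1)

id_mse = mse(coeffs, x_id, y_id)
ood_mse = mse(coeffs, x_ood, y_ood)
print(f"ID test MSE:  {id_mse:.4f}")
print(f"OOD test MSE: {ood_mse:.4f}")
```

The line fits well where it saw data and extrapolates poorly outside it, so the OOD error is much larger than the ID error. Reporting both numbers side by side is the point of the protocol: ID accuracy alone would hide the gap.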

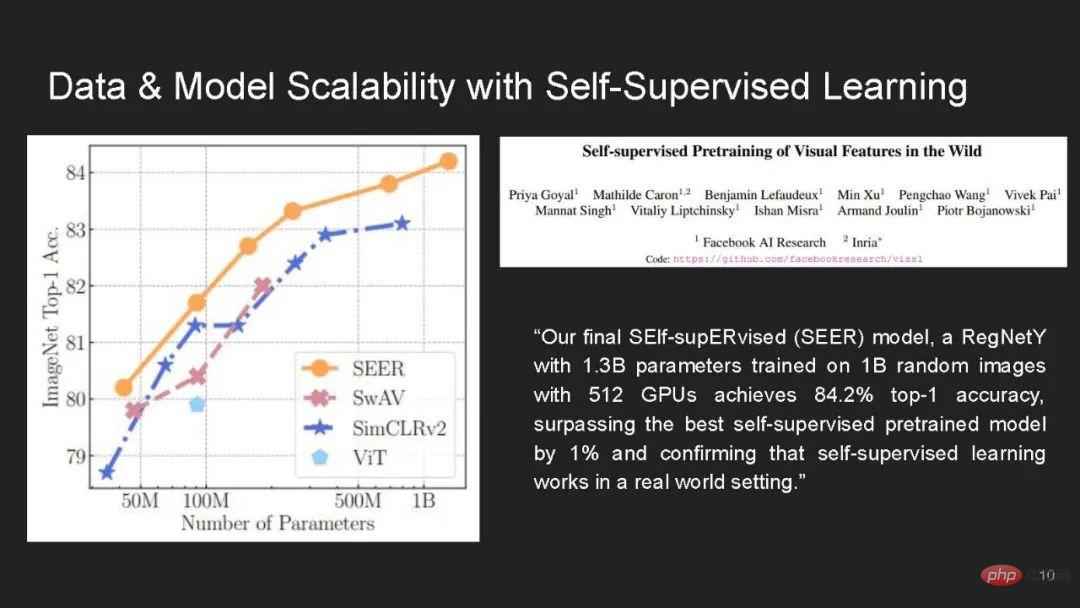

We argue that 1) foundation models are a particularly promising approach to robustness. Existing work shows that pre-training on unlabeled data is an effective and general way to improve accuracy on OOD test distributions, in contrast to many robustness interventions that are restricted to narrow types of distribution shift.

However, we also discuss 2) why foundation models may not always mitigate distribution shifts, such as shifts due to spurious correlations or changes over time.

Finally, 3) we outline several research directions for leveraging and improving the robustness of foundation models.

We note that one way foundation models improve downstream performance is by providing the adapted model with inductive biases (through model initialization) that are learned from diverse datasets extending beyond the downstream training data.

However, the same inductive biases can also encode harmful associations from the pre-training data and lead to representational and allocational harms in the presence of distribution shifts.
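To make the spurious-correlation failure mode concrete, here is a small synthetic sketch. Everything in it is an assumption made for illustration (the two-feature data generator `make_data`, the noise levels, and the least-squares classifier); it is not taken from the tutorial. During training a low-noise "spurious" feature agrees with the label, so the fitted model leans on it; at test time that correlation is reversed while the noisier "core" feature stays reliable.

```python
import numpy as np

def make_data(rng, n, spurious_sign):
    """Labels y in {-1, +1}; a noisy core feature and a low-noise spurious feature.

    spurious_sign = +1: spurious feature agrees with the label (training / ID).
    spurious_sign = -1: the correlation is reversed (OOD test).
    """
    y = rng.choice([-1.0, 1.0], size=n)
    core = y + rng.normal(0.0, 1.0, size=n)
    spurious = spurious_sign * y + rng.normal(0.0, 0.1, size=n)
    return np.column_stack([core, spurious]), y

def accuracy(w, X, y):
    """Fraction of examples where sign(X @ w) matches the label."""
    return float(np.mean(np.sign(X @ w) == y))

rng = np.random.default_rng(0)
X_train, y_train = make_data(rng, 2000, spurious_sign=+1)
X_id, y_id = make_data(rng, 2000, spurious_sign=+1)
X_ood, y_ood = make_data(rng, 2000, spurious_sign=-1)

# Least-squares linear classifier fit on the training data only.
w, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)

id_acc = accuracy(w, X_id, y_id)
ood_acc = accuracy(w, X_ood, y_ood)
print("weights (core, spurious):", w)
print(f"ID accuracy:  {id_acc:.3f}")
print(f"OOD accuracy: {ood_acc:.3f}")
```

Because the spurious feature is the cleaner predictor in training, the fitted weights put most of their mass on it, and accuracy collapses once the correlation flips. More training data from the same distribution would not fix this, which is one reason pre-training alone may not address this kind of shift.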

