The European Union's Artificial Intelligence Bill aims to create a common regulatory and legal framework for artificial intelligence, covering how it is developed, what companies may use it for, and the legal consequences of failing to comply. The bill could require companies to obtain approval in certain circumstances before adopting artificial intelligence, outlaw certain uses deemed too risky, and create a public list of other high-risk uses.

In an official report on the law, the European Commission said that, at a broad level, the law seeks to make the EU a paradigm for trustworthy artificial intelligence. This paradigm “requires that AI be legally, ethically and technologically strong, while respecting democratic values, human rights and the rule of law”. The law sets out four objectives:

1. Ensure that artificial intelligence systems deployed and used on the EU market are safe and respect existing law on fundamental rights and EU values;

2. Ensure legal certainty to promote investment and innovation in AI;

3. Strengthen governance and effective enforcement of existing law on fundamental rights and safety as it applies to AI systems;

4. Promote the development of a single market for lawful, safe and trustworthy artificial intelligence applications and prevent market fragmentation.

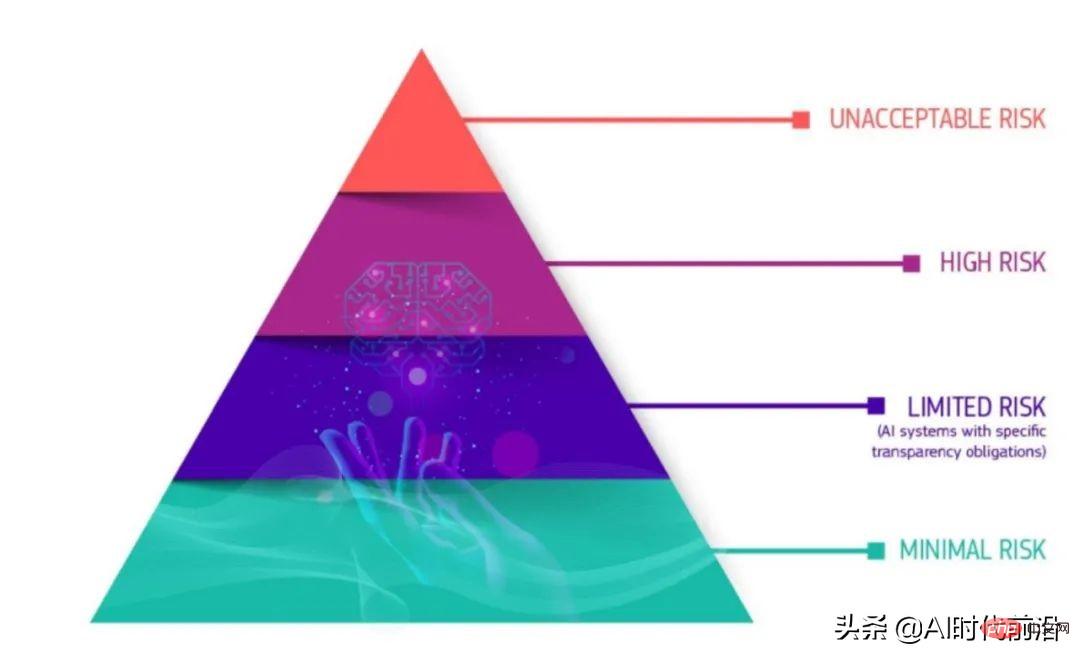

While many details of the new law are still up in the air, chief among them the definition of artificial intelligence, a core element appears to be its "product safety framework," which divides future AI products into four risk categories that apply across all industries.

According to a 2021 European Commission report on the new law compiled by Mauritz Kop of the Stanford-Vienna Transatlantic Technology Law Forum, the bottom of the “criticality pyramid” holds the least risky systems. AI applications in this category do not need to comply with the transparency requirements that riskier systems must meet. The "limited risk" category, which includes AI systems such as chatbots, carries some transparency requirements.

The European Union’s new AI bill divides AI programs into four categories, from bottom to top: low risk, limited risk, high risk and unacceptable risk.

The high-risk category carries strict transparency requirements and more stringent supervision. According to the report, this category covers AI applications in a specified list of areas.

The fourth category, at the top of the pyramid, is artificial intelligence systems with "unacceptable risk". These applications are effectively banned because the risk they pose is too great. Examples include systems that manipulate the behavior of people or of "specifically vulnerable groups," social scoring, and real-time remote biometric systems.
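The four tiers described above can be sketched as a small lookup, which may help teams that want to tag their own systems during an internal review. This is only an illustration under stated assumptions: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_TIERS` and `may_be_deployed` are hypothetical, the obligation strings paraphrase this article rather than the legal text, and only the examples named in the article are included.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    """The four tiers of the pyramid, ordered from bottom (1) to top (4)."""
    LOW = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Obligations paraphrased from the article, not from the legal text (assumption).
OBLIGATIONS = {
    RiskTier.LOW: "no transparency requirements",
    RiskTier.LIMITED: "some transparency requirements",
    RiskTier.HIGH: "strict transparency requirements and tighter supervision",
    RiskTier.UNACCEPTABLE: "prohibited",
}

# Only the examples the article names; any real mapping would follow the law itself.
EXAMPLE_TIERS = {
    "chatbot": RiskTier.LIMITED,
    "behavior manipulation": RiskTier.UNACCEPTABLE,
    "social scoring": RiskTier.UNACCEPTABLE,
    "real-time remote biometric system": RiskTier.UNACCEPTABLE,
}


def may_be_deployed(tier: RiskTier) -> bool:
    """Return False only for the top tier, which the article describes as banned."""
    return tier != RiskTier.UNACCEPTABLE
```

For instance, `OBLIGATIONS[EXAMPLE_TIERS["chatbot"]]` looks up the obligation for a chatbot, and `may_be_deployed(EXAMPLE_TIERS["social scoring"])` returns `False`. Using an ordered enum keeps the bottom-to-top ordering of the pyramid explicit, so tiers can be compared directly.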

High-risk categories are likely to be the focus of many companies’ efforts to ensure transparency and compliance. The EU’s Artificial Intelligence Bill will advise companies to take four steps before launching AI products or services that fall into high-risk categories.
