Can machines think? Can artificial intelligence be as smart as humans?

A new study suggests that artificial intelligence may be able to.

In a non-verbal Turing test, a research team led by Professor Agnieszka Wykowska of the Italian Institute of Technology found that human-like behavioral variability can blur the distinction between humans and machines; that is, it can help robots appear more human.

Specifically, their artificial intelligence program passed the nonverbal Turing test by simulating behavioral variability in human reaction times while playing games about matching shapes and colors with human teammates.

The related research paper is titled "Human-like behavioral variability blurs the distinction between a human and a machine in a nonverbal Turing test" and has been published in the scientific journal Science Robotics.

The research team stated that this work can provide guidance for future robot design, giving robots human-like behaviors that humans can perceive.

Commenting on this research, Linköping University cognitive systems professor Tom Ziemke and postdoctoral researcher Sam Thellman say that the results "blur the difference between humans and machines" and make a very valuable contribution to the scientific understanding of human social cognition, among other things.

However, they add, "human similarity is not necessarily the ideal goal for the development of artificial intelligence and robotics, and making artificial intelligence less like humans may be a smarter approach."

The key idea of the Turing Test is that complex questions about the possibility of machine thinking and intelligence can be verified by testing whether humans can tell whether they are interacting with another human or a machine.

Today, the Turing Test is used by scientists to evaluate what behavioral characteristics should be implemented in artificial agents to make it impossible for humans to distinguish computer programs from human behavior.

Herbert Simon, a pioneer of artificial intelligence, once said: "If the behavior displayed by the program is similar to that displayed by humans, then we call them intelligent." Similarly, Elaine Rich defined artificial intelligence as "the study of how to make computers do things that humans currently do better."

The non-verbal Turing test is one form of the Turing test. Passing it is not easy for an artificial intelligence, because machines are not as adept as humans at detecting and distinguishing the subtle behavioral characteristics of other people or objects.

So, can a humanoid robot pass the non-verbal Turing test and embody human characteristics in its physical behavior? In the non-verbal Turing test, the research team sought to see whether an AI could be programmed to vary its reaction times within a range similar to human behavioral variation, and thus be considered human.
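To make the idea of "varying reaction times within a human-like range" concrete, here is a minimal Python sketch. It is not the method used in the paper: the ex-Gaussian distribution (a normal plus an exponential component, often used to describe human reaction times) and every parameter value below are assumptions chosen purely for illustration.

```python
import random
import statistics


def sample_reaction_time(rng, mu=0.40, sigma=0.05, tau=0.10, floor=0.15):
    """Draw one reaction time (seconds) from an ex-Gaussian distribution.

    mu, sigma, tau and floor are hypothetical values, not taken from the study.
    """
    rt = rng.gauss(mu, sigma) + rng.expovariate(1.0 / tau)
    return max(rt, floor)  # never respond faster than a plausible minimum


def fixed_reaction_time(latency=0.50):
    """A controller without variability: always the same latency."""
    return latency


rng = random.Random(0)  # seeded so the sketch is reproducible
variable = [sample_reaction_time(rng) for _ in range(1000)]
fixed = [fixed_reaction_time() for _ in range(1000)]

print(f"variable: mean={statistics.mean(variable):.3f}s sd={statistics.stdev(variable):.3f}s")
print(f"fixed:    mean={statistics.mean(fixed):.3f}s sd={statistics.stdev(fixed):.3f}s")
```

Both controllers respond after roughly the same average delay, but only the first shows trial-to-trial spread, which is the kind of cue the study suggests observers may rely on.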

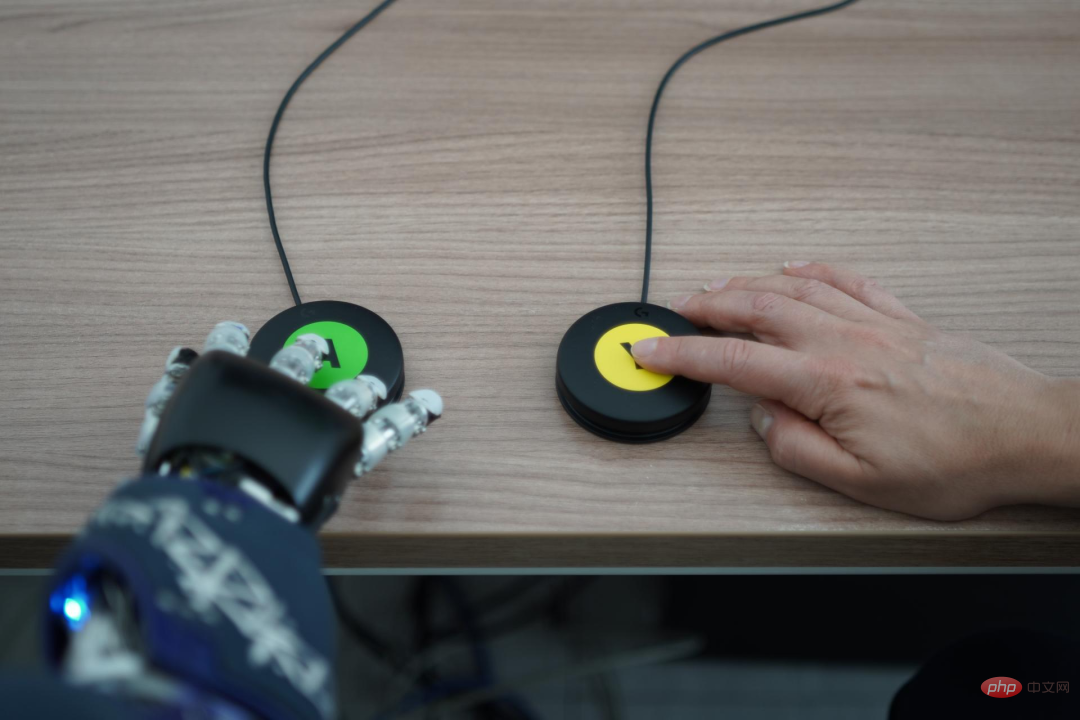

To do this, they placed a human and a robot in a room in front of a screen that displayed different colors and shapes.

Participants pressed a button when the shape or color changed, and the robot responded to this signal by clicking on the opposite color or shape displayed on the screen.

During testing, the robot was sometimes controlled remotely by a human and sometimes by an artificial intelligence trained to mimic behavioral variability.

Figure | Participants were asked to judge whether the robot's behavior was pre-programmed or controlled by a human. (Source: the paper)

The results showed that participants could easily tell when the robot was being operated by another person.

However, when the robot was operated by artificial intelligence, the probability of participants guessing incorrectly exceeded 50%.

Figure | Average accuracy in the Turing test. (Source: the paper)

This means that their artificial intelligence passed the non-verbal Turing test.
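The logic behind "passing" can be sketched in a few lines of Python: if participants' accuracy on AI-controlled trials is no better than guessing, they cannot tell the AI from a human. The judgments below are made up for illustration and are not the study's data; the function names are hypothetical as well.

```python
from math import comb


def accuracy(trials):
    """Fraction of trials where the guess matched the true controller."""
    correct = sum(1 for truth, guess in trials if truth == guess)
    return correct / len(trials)


def binomial_tail_at_least(k, n, p=0.5):
    """Probability of k or more successes in n trials with success chance p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Made-up judgments for illustration only: (true controller, participant's guess).
ai_trials = [("ai", "human")] * 11 + [("ai", "ai")] * 9

acc = accuracy(ai_trials)
n_correct = sum(1 for truth, guess in ai_trials if truth == guess)
p_value = binomial_tail_at_least(n_correct, len(ai_trials))

print(f"accuracy on AI-controlled trials: {acc:.2f}")
print(f"chance of at least {n_correct}/{len(ai_trials)} correct by pure guessing: {p_value:.3f}")
```

An accuracy at or below 0.5, together with a large tail probability, is what one would expect if the robot's controller were indistinguishable from a human in this toy setup.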

However, the researchers also suggest that human-like behavioral variability may be only a necessary, not a sufficient, condition for an embodied artificial intelligence to pass the non-verbal Turing test, since such variability can also manifest itself in human environments.

Artificial intelligence research has long targeted human similarity as a goal and metric, and Wykowska’s team’s research demonstrates that behavioral variability may be used to make robots more like humans.

Ziemke and Thellman believe that making artificial intelligence less like humans may be a wiser approach, and they elaborate on two cases: self-driving cars and chatbots.

For example, when you are about to step onto a crosswalk and see a car approaching, you may not be able to tell from a distance whether it is a self-driving car, so you can only judge by the car's behavior.

However, even if you see someone sitting behind the steering wheel, you cannot be sure whether this person is actively controlling the vehicle or just monitoring its driving.

"This has a very important impact on traffic safety. If a self-driving car cannot indicate to others whether it is in self-driving mode, it may lead to unsafe human-machine interactions."

Maybe some people would say that ideally, you don't need to know whether a car is self-driving, because in the long run, self-driving cars may be better at driving than humans. However, for now, people’s trust in self-driving cars is far from enough.

The chatbot is closer to the real-life scenario of Turing’s original test. Many companies use chatbots in their online customer service, where conversation topics and interaction options are relatively limited. In this context, chatbots are often more or less indistinguishable from humans.

So, the question is, should companies tell customers that their chatbots are not human? Disclosure often leads to negative reactions from consumers, such as a decrease in trust.

As the above cases illustrate, while human-like behavior may be an impressive achievement from an engineering perspective, the indistinguishability of humans and machines raises significant psychological, ethical and legal issues.

On the one hand, people interacting with these systems must know the nature of what they are interacting with to avoid deception. Taking chatbots as an example, California in the United States enacted a chatbot disclosure law in 2018, making disclosure a strict requirement.

On the other hand, there are cases that are less clear-cut than chatbots and human customer service agents. For example, interactions between self-driving cars and other road users do not have the same clear start and end points, are often not one-to-one, and are subject to real-time constraints.

So the question is when and how the identity and capabilities of self-driving cars should be communicated.

Furthermore, fully automated cars may still be decades away. Therefore, mixed traffic and varying degrees of partial automation are likely to be a reality for the foreseeable future.

There has been a lot of research on what external interfaces self-driving cars might need to communicate with people. However, little is known about the complexities that vulnerable road users such as children and people with disabilities are actually able and willing to cope with.

Thus, the general rule above that "persons interacting with such a system must be informed of the nature of the objects to be interacted with" may only be possible to follow in more clear-cut situations.

The same ambivalence is reflected in discussions within social robotics research: given humans' tendency to anthropomorphize robots and attribute human-like mental states to them, many researchers aim to make robots look and behave more human-like so that people can interact with them in a more or less human-like manner.

However, others argue that robots should be easily identifiable as machines to avoid excessive anthropomorphic attributions and unrealistic expectations.

"So it may be wiser to use these findings to make robots less human-like." In the early days of artificial intelligence, imitating humans may have been a common goal in the field, "but today, as artificial intelligence has become a part of people's daily lives, we need to at least think about the direction in which we should strive to achieve human-like artificial intelligence."
