John Ousterhout, a Stanford professor and inventor of the Tcl language, once wrote a book "The Philosophy of Software Design", which systematically discussed the general principles of software design. and methodology, the core point of the entire book is: the core of software design is to reduce complexity.

In fact, this point of view also applies to software design involving underlying hardware adaptation.

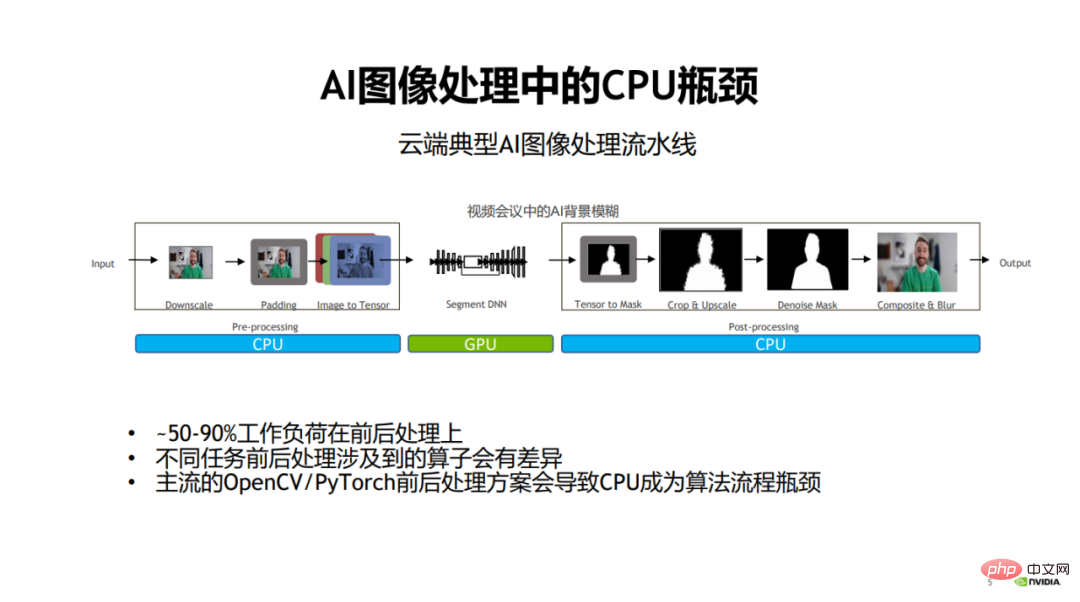

Take visual model development as an example. In the past visual model development process, people generally paid more attention to the optimization of the model itself to improve speed and effect. However, people pay little attention to the image pre-processing (pre-processing) and post-processing stages.

When model calculation, that is, the main stage of model training and inference, becomes more and more efficient, the pre- and post-processing stages of images increasingly become the performance bottleneck of image processing tasks.

Specifically, in the traditional image processing process, the pre- and post-processing parts are usually operated by the CPU. , this will result in 50% to more than 90% of the workload in the entire process being related to pre- and post-processing, and thus they will become the performance bottleneck of the entire algorithm process.

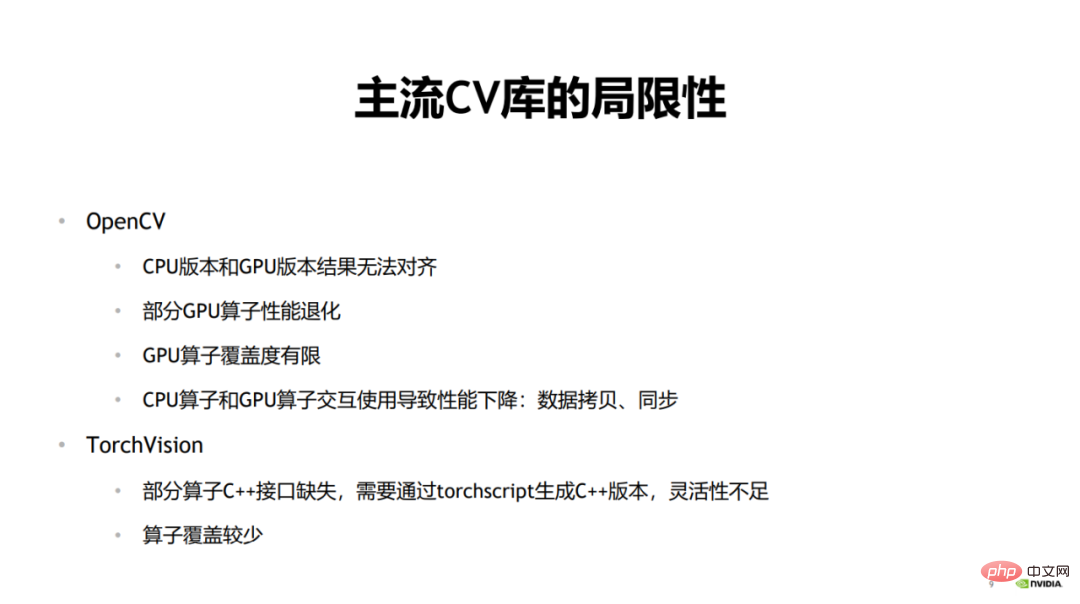

The above problems are currently on the market The main limitation of mainstream CV libraries in application scenarios is that inconsistency in dependence on underlying hardware leads to complexity and performance bottlenecks. As John Ousterhout summed up the causes of complexity: Complexity stems from dependencies.

TorchVision When doing model inference, some operators lack C interfaces, resulting in a lack of flexibility when calling. If you want to generate a C version, you must generate it via TorchScript. This will cause a lot of inconvenience in use, because inserting operators from other libraries in the middle of the process for interactive use will bring extra overhead and workload. Another disadvantage of TorchVision is that the operator coverage is not high.

The above are the limitations of the current mainstream CV libraries.

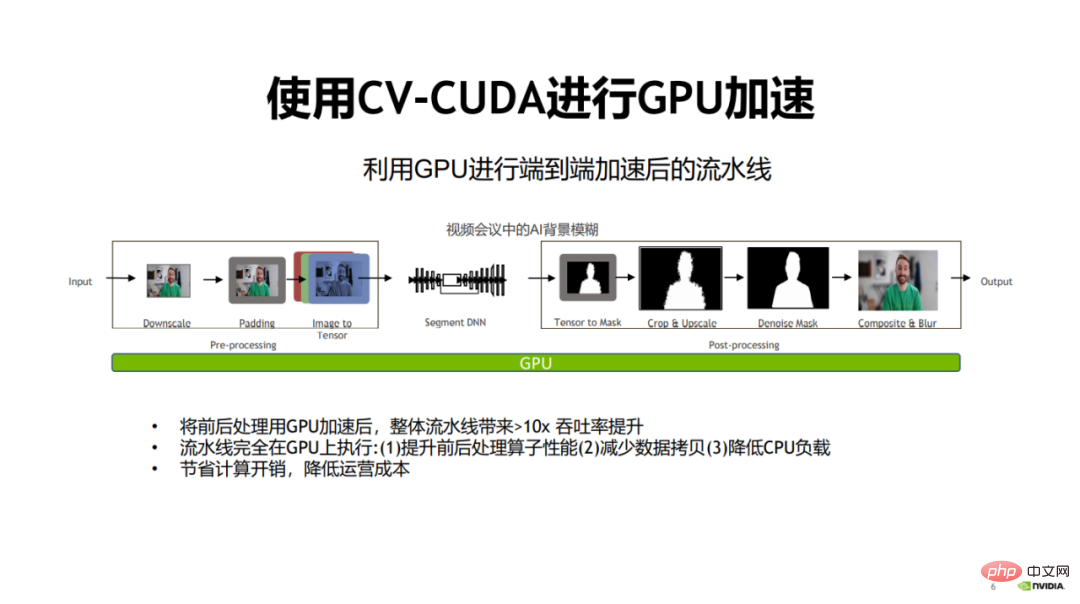

Since the performance bottleneck of pre- and post-processing mainly lies in the use of CPU calculations, and the model The technology of using GPU in the computing stage has become increasingly mature.

#So, a natural solution is to use GPU to accelerate pre- and post-processing, which will greatly improve the performance of the entire algorithm pipeline.

To this end, NVIDIA and ByteDance jointly open sourced the image preprocessing operator library CV-CUDA. CV-CUDA can run efficiently on GPU, and the operator speed can reach about a hundred times that of OpenCV.

On January 15, 2023, from 9:30 to 11:30, the "CV-CUDA First Open Class" hosted by NVIDIA invited 3 students from NVIDIA, ByteDance, and Sina Weibo Technical experts (Zhang Yi, Sheng Yiyao, Pang Feng) shared in-depth on related topics. This article summarizes the essence of the speeches of the three experts.

There are many benefits to using GPU instead of CPU. First of all, after the pre- and post-processing operators are migrated to the GPU, the computational efficiency of the operators can be improved.

Secondly, since all processes are performed on the GPU, data copying between the CPU and GPU can be reduced.

Finally, after migrating the CPU load to the GPU, the CPU load can be reduced and the CPU can be used to process other tasks that require complex logic.

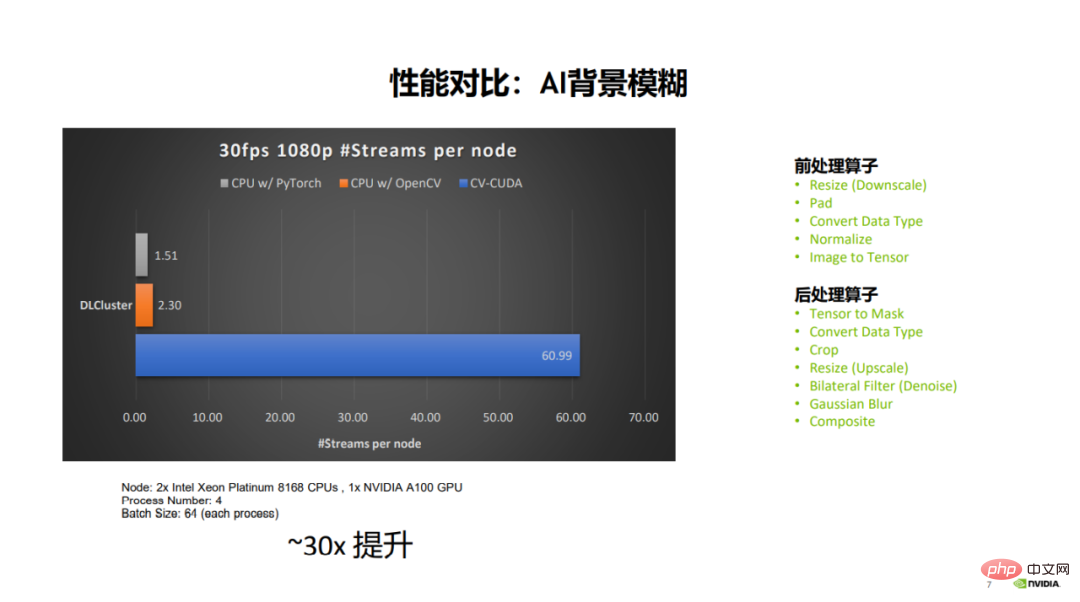

After migrating the entire process to the GPU, the entire pipeline can be improved by nearly 30 times, thus Save computing overhead and reduce operating costs.

It can be seen from the comparison of the data in the figure that under the same server and parameter configuration, OpenCV can open up to 2-3 30fps 1080p video streams. For parallel streams, PyTorch (CPU) can open up to 1.5 parallel streams, while CV-CUDA can open up to 60 parallel streams. It can be seen that the overall performance improvement is very large. The pre-processing operators involved include resize, padding, normalize, etc., and the post-processing operators include crop, resize, compose, etc.

Why GPU can adapt to the acceleration needs of pre- and post-processing ? Benefit from the asynchronous between model calculation and pre- and post-processing, and adapt to the parallel computing capabilities of GPU.

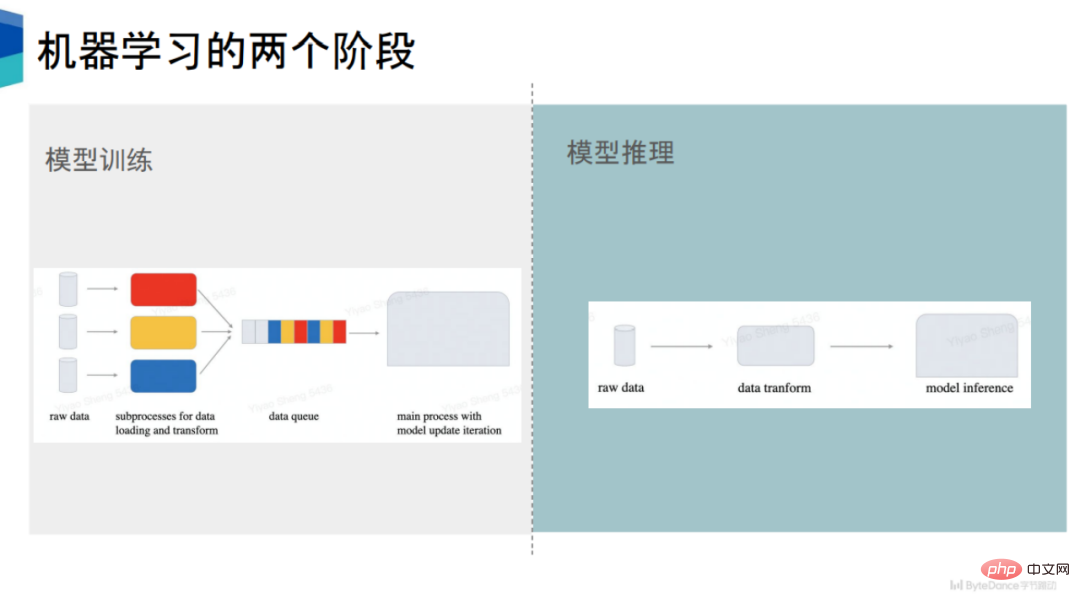

##We The preprocessing asynchronousization of model training and model inference is explained separately.

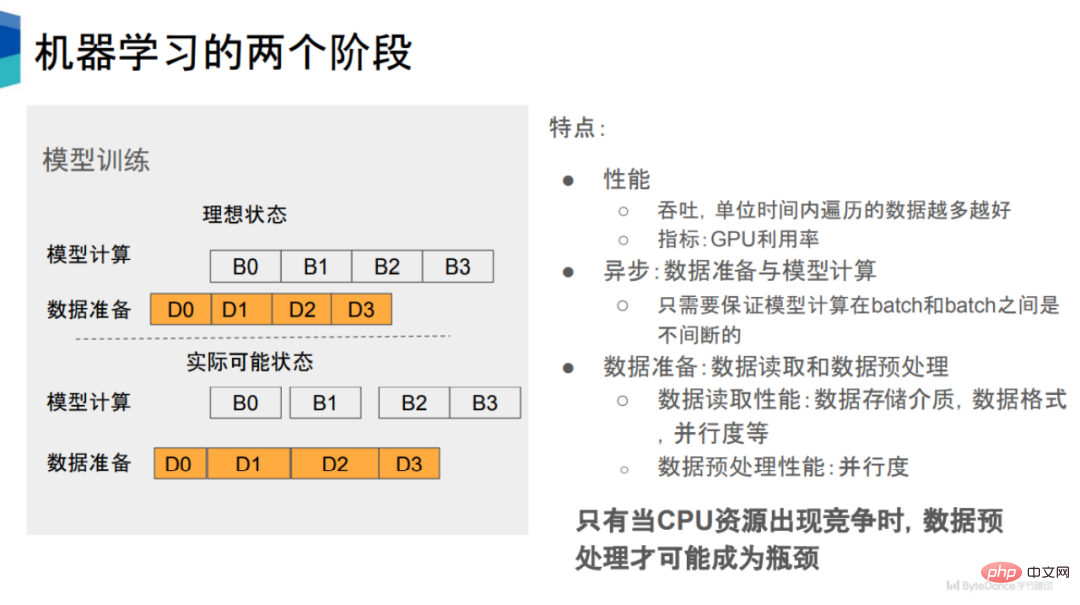

##Model training can be divided into two parts, the first is data preparation, and the second is model calculation. The current mainstream machine learning frameworks, such as PyTorch and TensorFlow, are asynchronous between data preparation and model calculation. Taking PyTorch as an example, it will start multiple sub-processes for data preparation. As shown in the figure, it contains two states, namely model calculation and data preparation. There is a time sequence relationship between the two. For example, when D0 is completed, You can proceed to B0, and so on. #From a performance perspective, we expect the speed of data preparation to keep up with the speed of model calculation. However, in actual situations, some data reading and data preprocessing processes take a long time, resulting in a certain window period before corresponding model calculations can be performed, resulting in a decrease in GPU utilization. Data preparation can be divided into data reading and data preprocessing. These two stages can be executed serially or in parallel, such as under the PyTorch framework. It is executed serially. There are many factors that affect the performance of data reading, such as data storage media, storage format, parallelism, number of execution processes, etc. In contrast, The factor that affects the performance of data preprocessing is relatively simple, which is the degree of parallelism. The higher the degree of parallelism, the better the performance of data preprocessing. In other words, making data preprocessing and model calculation asynchronous and increasing the parallelism of data preprocessing can improve the performance of data preprocessing. 2. Preprocessing asynchronousization of model inference

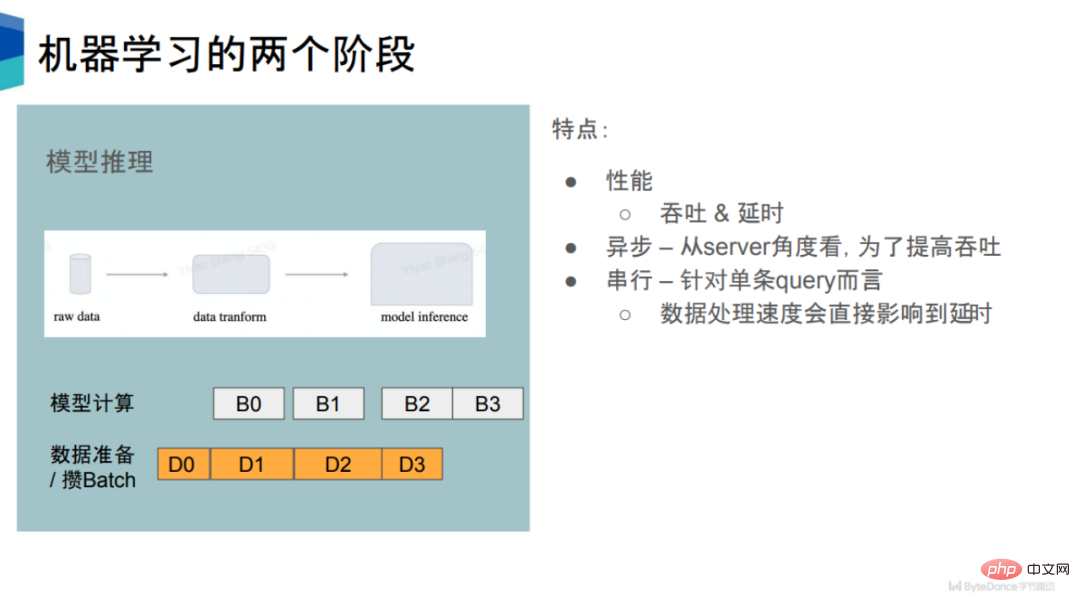

In In the model inference phase, its performance has two indicators, the first is throughput, and the second is delay. To a certain extent, these two indicators are mutually exclusive.

For a single query, after the server receives the data, it will preprocess the data and then perform model inference. So for a single query, it is a serial process to a certain extent.

But this is very inefficient and will waste a lot of computing resources. In order to improve throughput, many inference engines will use the same strategy as the training phase to asynchronously prepare data and model calculations. In the data preparation phase, a certain amount of queries will be accumulated and combined into a batch, and then subsequent calculations will be performed to improve the overall throughput.

In terms of throughput, model inference and model training are relatively similar. By moving the data preprocessing stage from the CPU to the GPU, throughput gains can be achieved.

At the same time, from the perspective of delay, for each query statement, if the time spent in the preprocessing process can be reduced, the delay for each query will be shortened accordingly.

Another feature of model reasoning is that the amount of model calculation is relatively small, because it only involves forward calculation and not backward calculation. This means that model inference requires higher data preprocessing.

Assuming there are enough CPU resources for calculation, theoretically preprocessing will not become Performance bottleneck. Because once you find that the performance cannot keep up, you only need to add processes to do preprocessing operations.

Therefore, data preprocessing may become a performance bottleneck only when there is competition for CPU resources.

In actual business, competition for CPU resources is very common, which will lead to reduced GPU utilization in subsequent training and inference stages, and thus reduced training speed.

As GPU computing power continues to increase, it is foreseeable that the speed requirements for the data preparation phase will become higher and higher.

For this reason, it is a natural choice to move the preprocessing part to the GPU to alleviate the problem of CPU resource competition and improve GPU utilization.

Overall, this design reduces the complexity of the system and directly adapts the main body of the model pipeline to the GPU, which can greatly help improve the utilization of both GPU and CPU. At the same time, it also avoids the problem of result alignment between different versions, reduces dependencies, and conforms to the software design principles proposed by John Ousterhout.

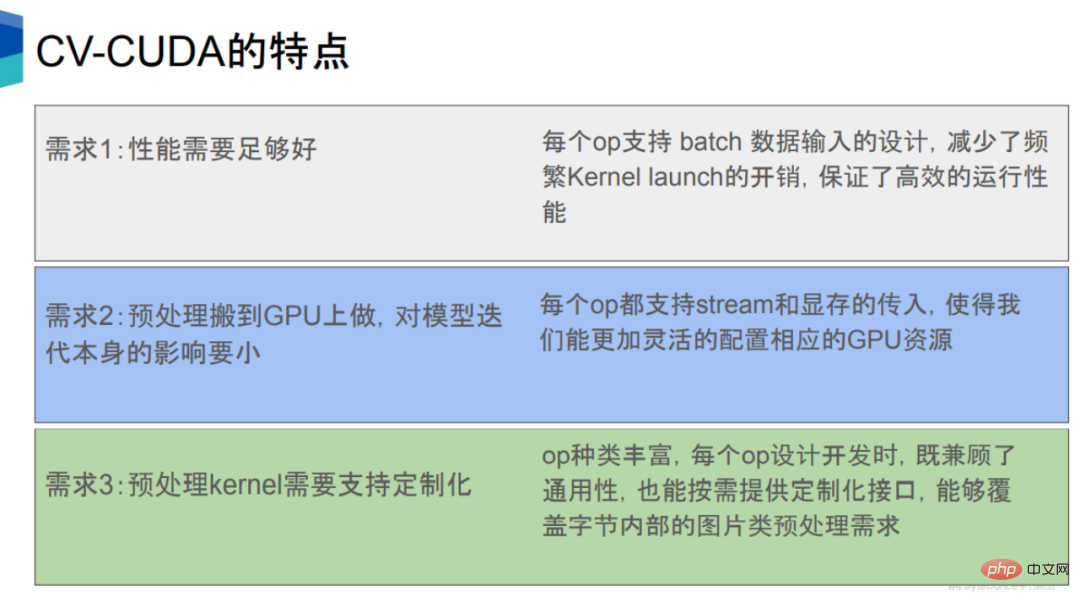

Moving the pre-processing and post-processing processes to the GPU needs to meet multiple requirements condition.

#The first is that its performance must be at least better than that of the CPU. This is mainly based on the high concurrent computing capabilities of GPUs.

#The second is to accelerate preprocessing and not have a negative impact on other processes such as model inference. For the second requirement, each operator of CV-CUDA has an interface between stream and CUDA memory, so that the GPU resources can be allocated more reasonably, so that when running these preprocessing operators on the GPU, it will not have too much impact. to the model calculation itself.

Thirdly, Internet companies have very diverse business needs, involving many types of models, and corresponding preprocessing logic. Therefore, preprocessing calculations Subclasses need to be developed to be customized, allowing greater flexibility to implement complex logic.

Generally speaking, CV-CUDA analyzes the model pipeline from the aspects of hardware, software, algorithm, language, etc. The pre- and post-processing stages are accelerated and the entire pipeline is unified.

In terms of hardware, CV-CUDA is based on the parallel computing capabilities of the GPU, which can greatly improve the speed and throughput of pre- and post-processing and reduce the number of models. Compute waiting time and improve GPU utilization.

CV-CUDA supports Batch and Variable Shape modes. Batch mode supports batch processing and can take full advantage of the parallel characteristics of the GPU. However, OpenCV can only call a single image regardless of whether it is the CPU or GPU version.

Variable Shape mode means that in a batch, the length and width of each picture can be different. The length and width of images on the Internet are generally inconsistent. The mainstream framework method is to resize the length and width to the same size respectively, then package the images of the same length and width into a batch, and then process the batch. CV-CUDA can directly put images of different lengths and widths into a batch for processing, which not only improves efficiency, but is also very convenient to use.

Another meaning of Variable Shape is that when processing images, you can specify certain parameters of each image, such as rotate. For a batch of images, you can specify the rotation angle of each image.

#In terms of software, CV-CUDA has developed a large number of software optimization methods for further optimization, including performance optimization. (such as memory access optimization) and resource utilization optimization (such as video memory pre-allocation), so that it can run efficiently in cloud training and inference scenarios.

#The first is the video memory pre-allocation setting. When OpenCV calls the GPU version, some operators will execute cudaMalloc internally, which will cause a significant increase in time consumption. In CV-CUDA, all video memory pre-allocation is performed during the initialization phase, while during the training and inference phases, no video memory allocation operations are performed, thereby improving efficiency.

#Secondly, all operators operate asynchronously. CV-CUDA integrates a large number of kernels, thereby reducing the number of kernels, thereby reducing kernel startup time and data copy and erasure, and improving overall operating efficiency.

Thirdly, CV-CUDA also optimizes memory access, such as merging memory access, vectorized reading and writing, etc., to improve bandwidth utilization and also Use shared memory to improve memory access, read and write efficiency.

Finally, CV-CUDA has also made a lot of optimizations in calculations, such as fast math, warp reduce/block reduce, etc.

In terms of algorithm, CV-CUDA’s operators are independently designed and customized to support Very complex logic implementation and easy to use and debug.

How to understand independent design? There are two forms of operator calling in the image processing library. One is the overall pipeline form, which can only obtain the results of the pipeline, such as DALI, and the other is the modular independent operator form, which can obtain each operator. Individual results, such as OpenCV. CV-CUDA uses the same calling form as OpenCV, which is more convenient to use and debug.

#In terms of language, CV-CUDA supports a rich API, which can seamlessly connect pre- and post-processing to training and inference scenarios. .

These APIs include commonly used C, C, Python interfaces, etc., which allows us to support both training and inference scenarios. It also supports PyTorch and TensorRT. Interface, in the future, CV-CUDA will also support interfaces such as Triton, TensorFlow, and JAX.

In the inference stage, you can directly use the Python or C interface for inference, as long as you ensure that the pre- and post-processing, model, and GPU are placed on the same stream during inference. Can.

By demonstrating the use of CV-CUDA in NVIDIA, NVIDIA, and From the application cases of Jiedong and Sina Weibo, we can realize how significant the performance improvement brought by CV-CUDA is.

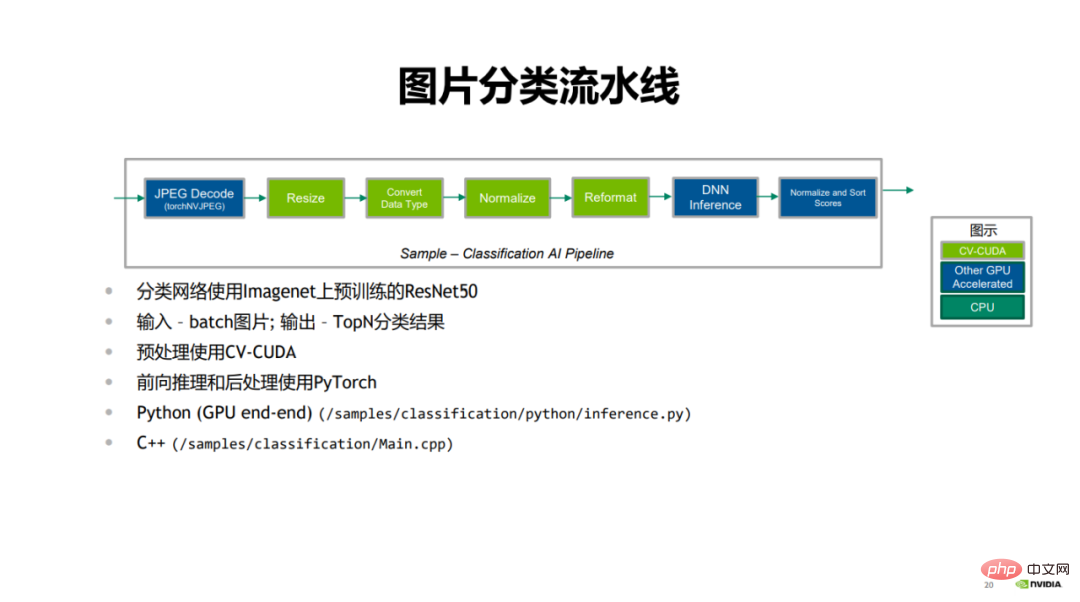

The first is the image classification case shown by NVIDIA.

In the image classification pipeline, the first is JPEG decode, which decodes the image; the green part is the pre-processing step, including resize, convert data type, normalize and reformat; the blue part is the forward reasoning process using PyTorch, and finally the classification results are scored and sorted.

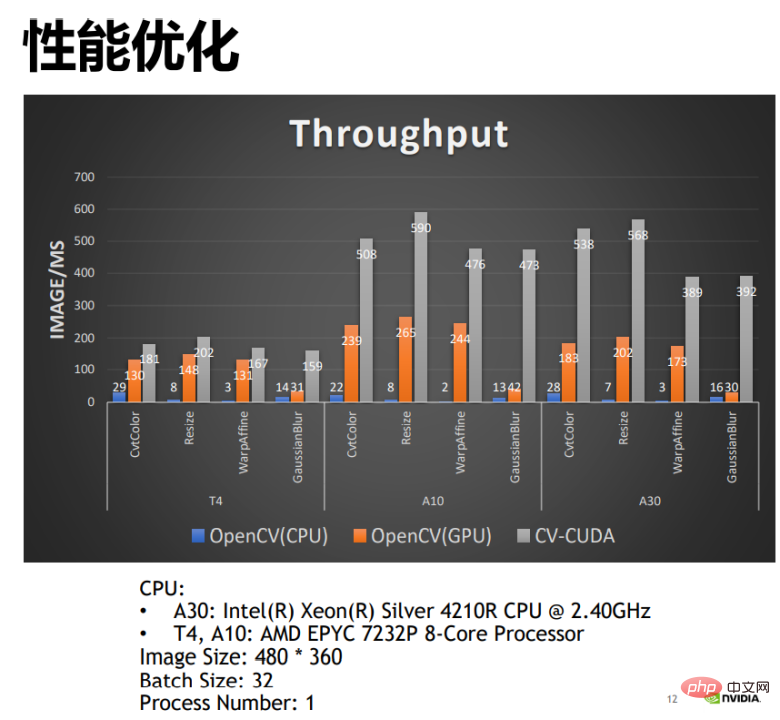

Comparing the performance of the CPU version and GPU version of CV-CUDA and OpenCV can be found , the GPU version of OpenCV can achieve greater performance improvement compared to the CPU version, and by applying CV-CUDA, the performance can be doubled. For example, the number of images processed by OpenCV's CPU operator per millisecond is 22, and the number of images processed by the GPU operator per millisecond is more than 200. CV-CUDA can process more than 500 images per millisecond, and its throughput is that of OpenCV's CPU. It is more than 20 times that of the GPU version and twice that of the GPU version. The performance improvement is obvious.

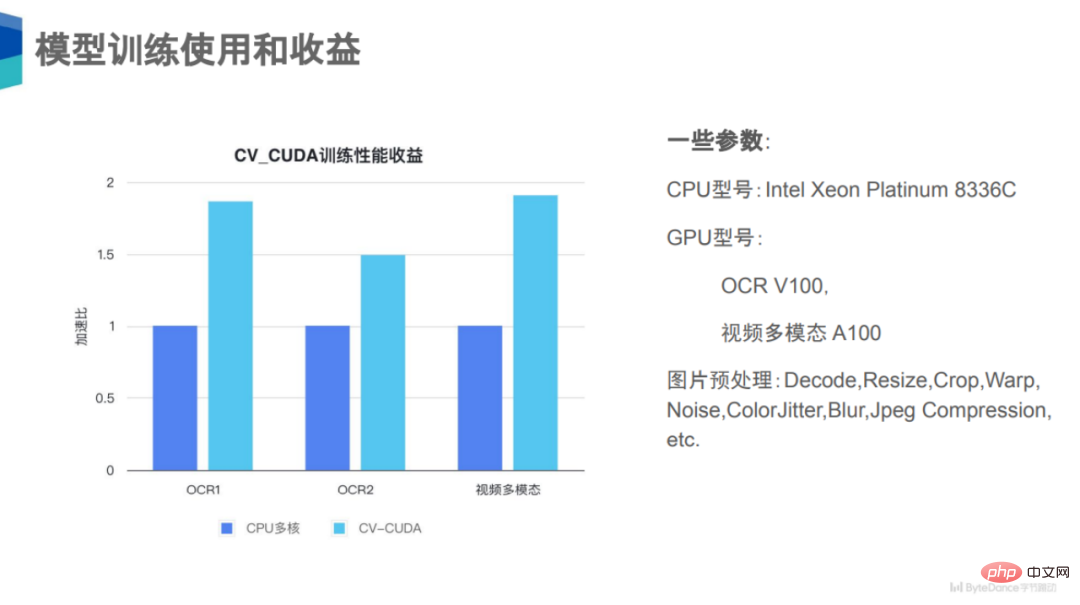

#The second is the three cases of OCR1, OCR2, and video multi-modality presented by ByteDance.

In model training, you can see that in OCR1, OCR2, video multi-modal On three tasks, performance gains of 50% to 100% were obtained after using CV-CUDA.

Why is there such a big performance gain? In fact, one thing these three tasks have in common is that their image preprocessing logic is very complex, such as decode, resize, crop, etc., and these are still large categories. In fact, there may be many smaller ones in each operator category. Class or subclass preprocessing. For these three tasks, there may be more than a dozen types of data enhancement on the preprocessing link, so the computational pressure on the CPU is very high. If this part of the calculation can be moved to the GPU, the CPU's Resource competition will be significantly reduced, and the overall throughput will be greatly improved.

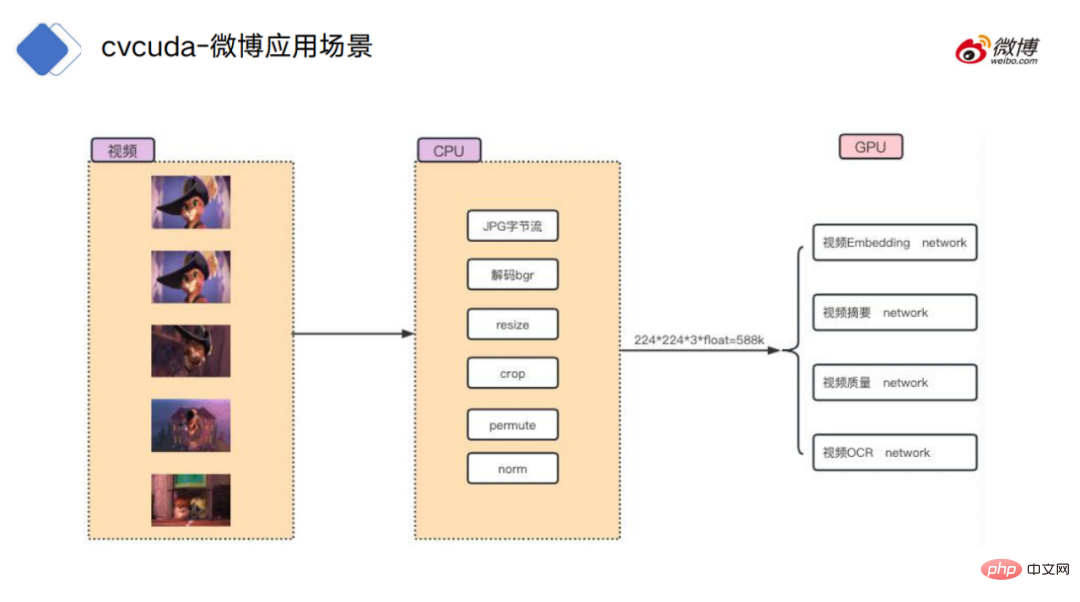

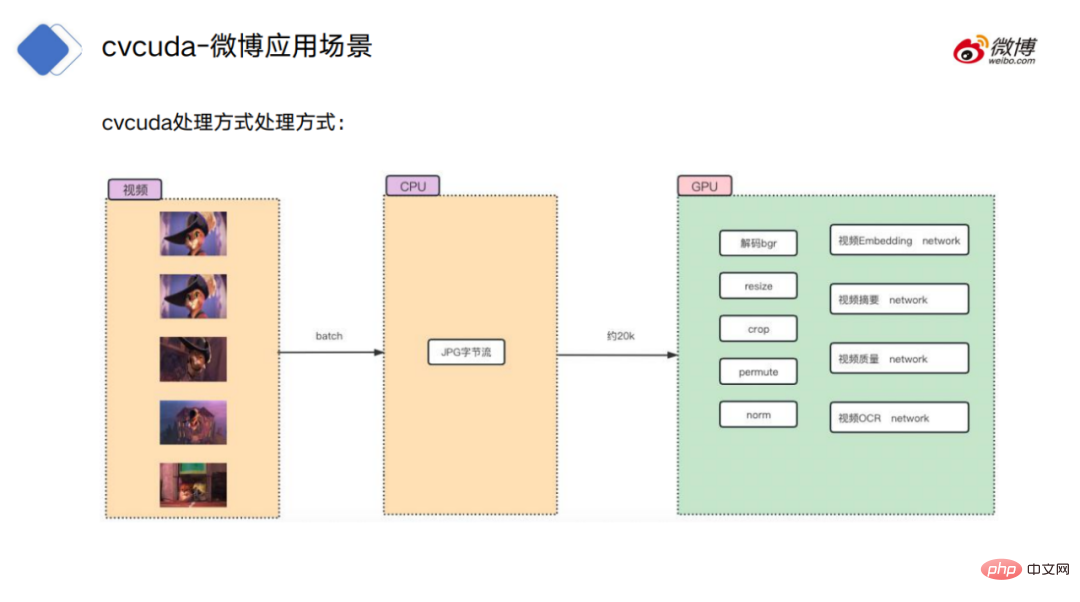

#Finally, there is a video processing case shown on Sina Weibo.

For the video processing process, the traditional approach is to first put the video frames in the CPU environment Decoding: decode the original byte stream into image data, then perform some regular operations, such as resize, crop, etc., and then upload the data to the GPU for specific model calculations.

The processing method of CV-CUDA is to decode the CPU and put it in the memory. The byte stream is uploaded to the GPU, and the preprocessing is also located on the GPU, so that it can be seamlessly connected with the model calculation without the need for copy operations between the video memory and the memory.

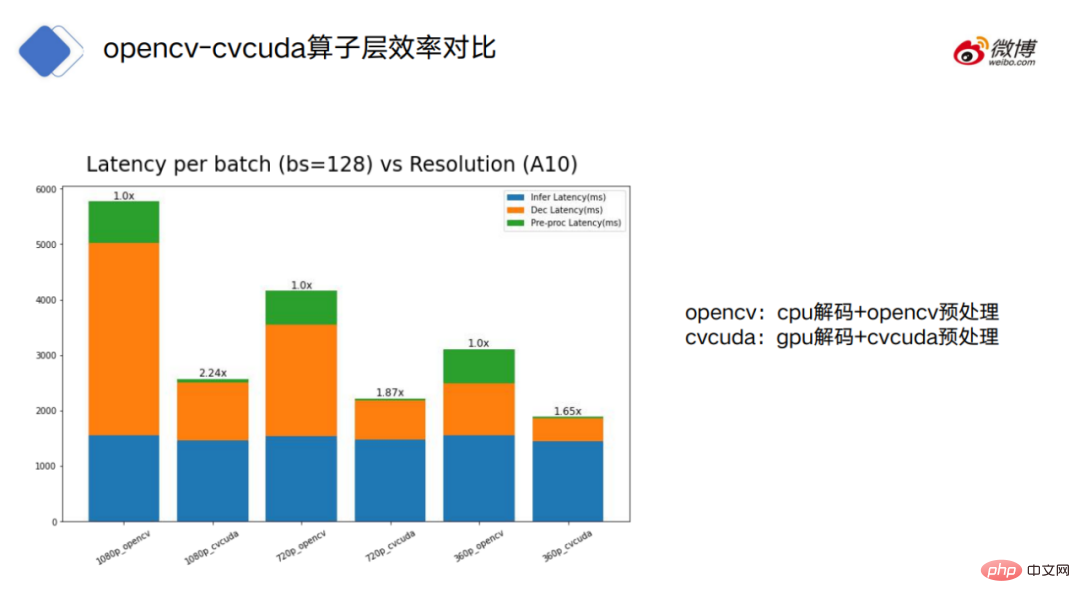

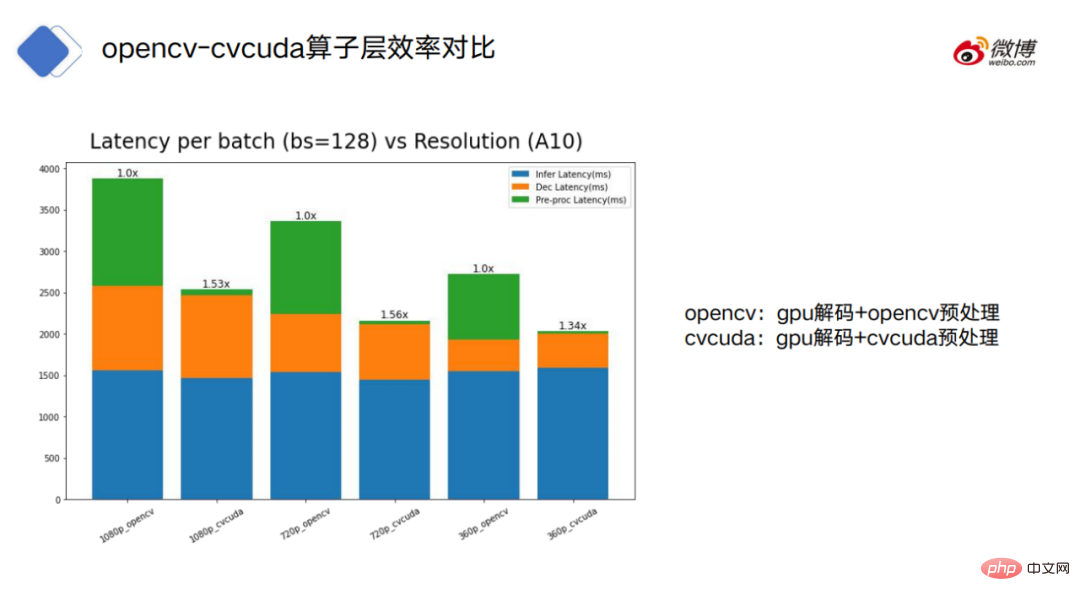

The figure shows the use of OpenCV (odd numbers) and CV-CUDA (even numbers) Respective processing time, blue refers to the consumption time of the model, orange refers to the consumption time of decoding, and green refers to the consumption time of preprocessing.

OpenCV can be divided into two modes: CPU decoding and GPU decoding. CV-CUDA only uses GPU decoding mode.

#It can be seen that for CPU-decoded OpenCV, OpenCV’s decoding and preprocessing are much more time-consuming than CV-CUDA.

Looking at the case of OpenCV using GPU decoding, we can see that OpenCV and CV- The time consuming of CUDA in the model and decoding parts is close, but there is still a big gap in preprocessing.

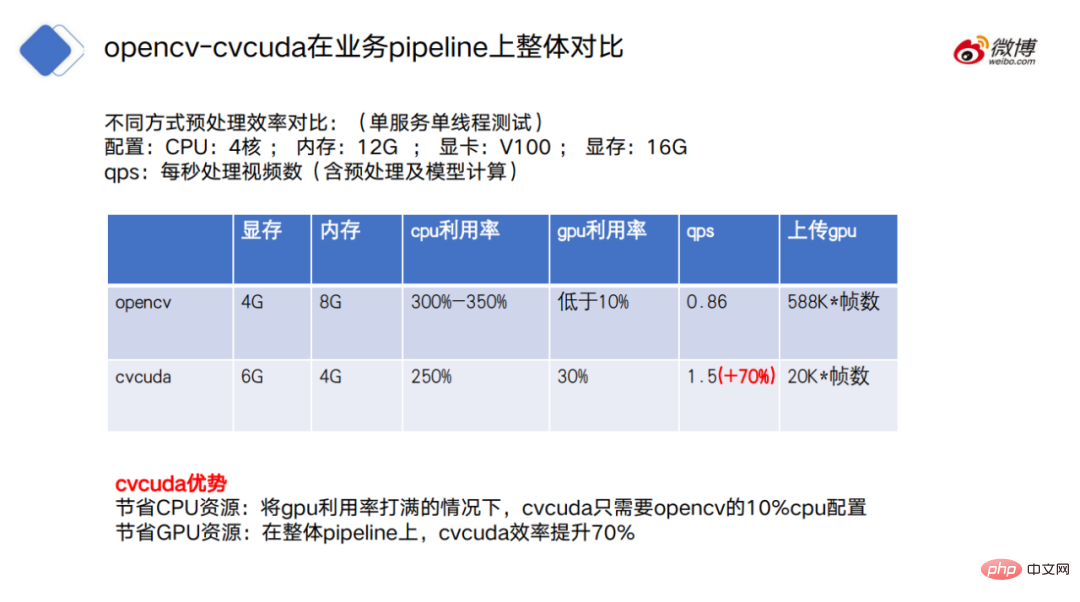

##In terms of overall pipeline comparison, CV-CUDA also has obvious advantages. On the one hand CV-CUDA saves more CPU resources, that is, when the GPU utilization is full, CV-CUDA only requires 10% of the CPU configuration of OpenCV; at the same time, CV-CUDA also saves more GPU resources. In the overall pipeline, CV- CUDA efficiency increased by 70%.

For example, the CPU can still decode and resize images, and then put them on the GPU for processing after resizing.

Why put decoding and resize on the CPU? First of all, for image decoding, the GPU’s hard decoding unit is actually limited. Secondly, for resize, usually resize will convert a larger picture into a smaller picture.

If the data is copied to the GPU before resize, it may occupy a lot of bandwidth for video memory data transfer.

Of course, how to allocate the workload between the CPU and GPU still needs to be judged based on the actual situation.

The most important principle is not to alternate calculations between CPU and GPU, because there is overhead in transmitting data across devices. If the alternation is too frequent, the benefits brought by the calculation itself may be flattened, resulting in a decrease in performance instead of an increase.

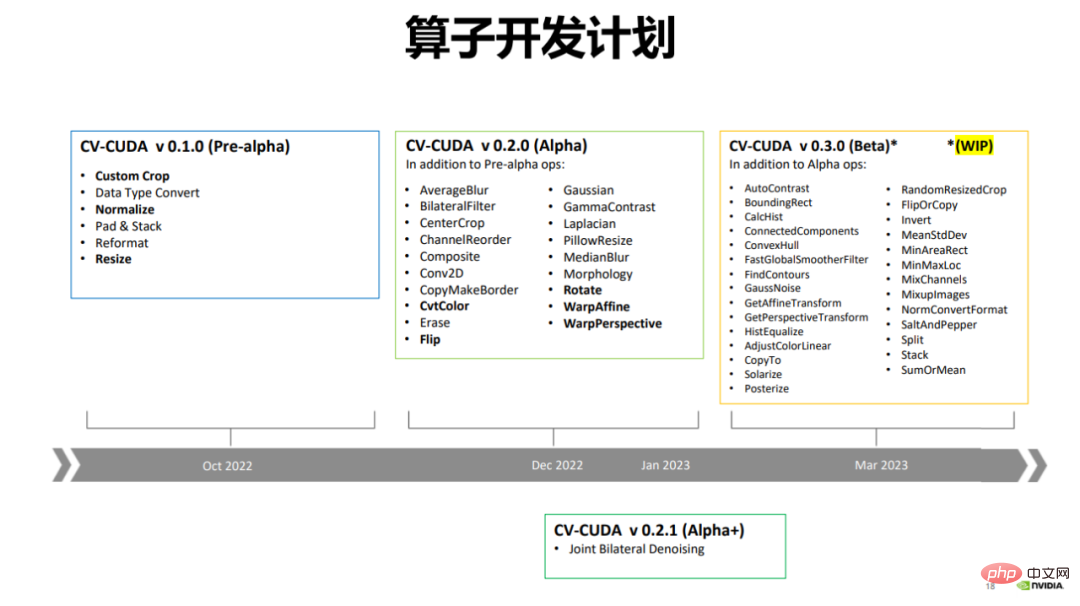

In December 2022, CV-CUDA released the alpha version, which contains more than 20 operators, such as commonly used Flip, Rotate, Perspective, Resize, etc. .

Currently OpenCV has more operators, with thousands of operators. CV-CUDA currently only accelerates the more commonly used operators, and will continue to do so in the future. Add new operators.

CV-CUDA will also release a beta version in March this year, which will add more than 20 operators to more than 50 operators. The beta version will include some very commonly used operators, such as ConvexHull, FindContours, etc.

Return Looking back at the design plan of CV-CUDA, we can find that there are not too complicated principles behind it, and it can even be said to be clear at a glance.

#From a complexity perspective, this can be said to be the advantage of CV-CUDA. "The Philosophy of Software Design" mentions a principle for judging software complexity - if a software system is difficult to understand and modify, it is very complex; if it is easy to understand and modify, it is very simple.

The effectiveness of CV-CUDA can be understood as the adaptability of the model calculation stage to the GPU, which drives the adaptability of the pre- and post-processing stages to the GPU. . And this trend has actually just begun.

The above is the detailed content of Throughput increased by 30 times: CV pipeline moves toward full-stack parallelization. For more information, please follow other related articles on the PHP Chinese website!