Referring video object segmentation (RVOS) is a newly emerging task. It aims to segment, from a video sequence, the object referred to by a natural-language expression. Compared with semi-supervised video object segmentation, RVOS relies only on abstract language descriptions instead of pixel-level reference masks. This makes it a more convenient option for human-computer interaction, and the task has therefore received widespread attention.

## Paper link: https://www.aaai.org/AAAI22Papers/AAAI-1100.LiD.pdf

The main purpose of this research is to address two major challenges faced by existing RVOS methods:

In response, this study proposes YOFO (You Only Infer Once), an end-to-end RVOS framework based on cross-modal meta-transfer. Its main contributions and innovations are:

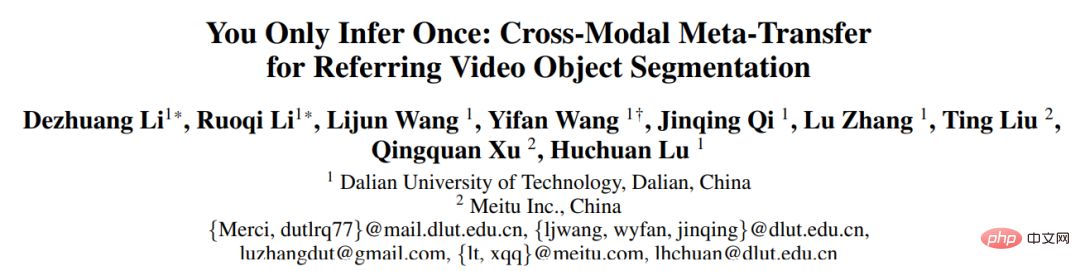

The main process of the YOFO framework is as follows. The input images and text first pass through an image encoder and a language encoder, respectively, to extract features, which are then fused in the multi-scale cross-modal feature mining module. The fused bimodal features are then simplified in the meta-transfer module, which contains a memory bank, to eliminate redundant information in the language features. At the same time, the module preserves temporal information to strengthen temporal correlation across frames. Finally, a decoder produces the segmentation results.

Figure 1: Main process of the YOFO framework.
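To make this data flow concrete, here is a minimal Python sketch, assuming each stage can be treated as a black-box function. All function names and the `memory_size` value are hypothetical, not taken from the paper. It shows the single-pass, frame-by-frame structure: each frame is encoded and fused with the language features, the meta-transfer step reads earlier bimodal features from a bounded memory bank, and a decoder produces the mask. Encoding the text only once per video is a choice of this sketch, since the expression does not change between frames.

```python
from collections import deque
from typing import Callable, Deque, List, Sequence

Feature = List[float]  # toy stand-in for a feature map


def run_single_pass(
    frames: Sequence[Feature],
    text: str,
    encode_image: Callable[[Feature], Feature],
    encode_text: Callable[[str], Feature],
    fuse: Callable[[Feature, Feature], Feature],
    meta_transfer: Callable[[Feature, List[Feature]], Feature],
    decode: Callable[[Feature], Feature],
    memory_size: int = 3,
) -> List[Feature]:
    """Hypothetical YOFO-style loop: every frame goes through the network once."""
    lang = encode_text(text)  # sketch choice: the expression is fixed, so encode it once
    memory: Deque[Feature] = deque(maxlen=memory_size)  # bounded bank of past bimodal features
    masks: List[Feature] = []
    for frame in frames:
        fused = fuse(encode_image(frame), lang)  # cross-modal fusion for this frame
        refined = meta_transfer(fused, list(memory))  # reads history from the memory bank
        memory.append(fused)  # store this frame's bimodal features for later frames
        masks.append(decode(refined))  # decoder turns features into a (toy) mask
    return masks
```

Because the memory bank is a `deque` with `maxlen`, the oldest frame is dropped automatically once the bank is full, so per-frame cost stays constant however long the video is.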

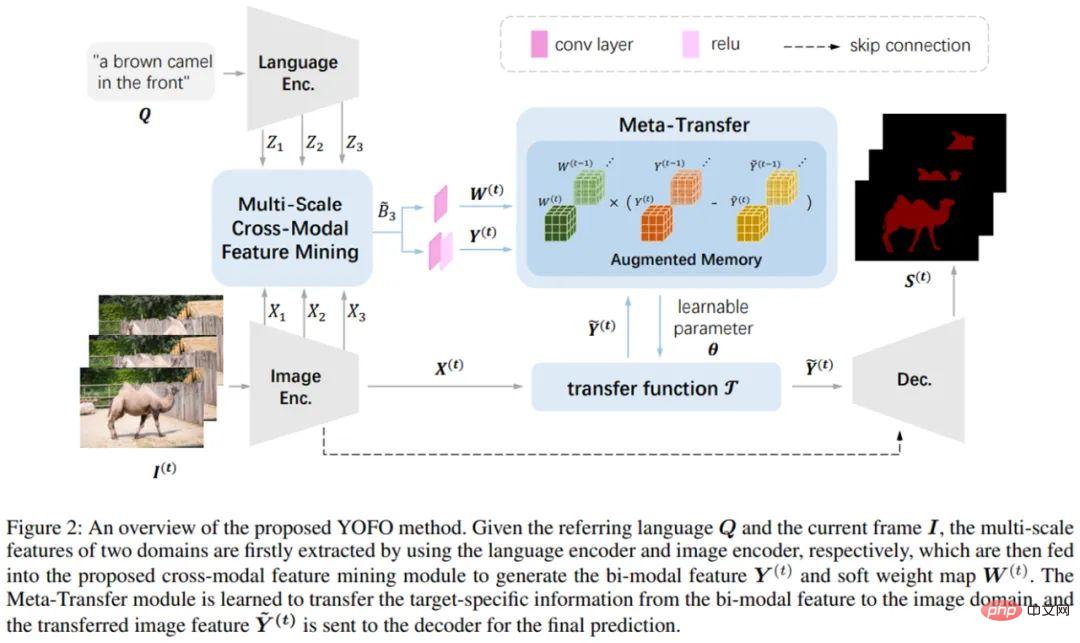

Multi-scale cross-modal feature mining module: By fusing the features of the two modalities at different scales, this module keeps the scale information conveyed by the image features consistent with that of the language features. More importantly, it ensures that the language information is not diluted or overwhelmed by the multi-scale image information during fusion.

Figure 2: Multi-scale cross-modal feature mining module.
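The idea of fusing language separately at each scale can be sketched as follows. This is a toy illustration under stated assumptions, not the paper's operator: each scale is a list of per-position feature vectors, the sentence vector is projected to each scale's channel width with a hypothetical per-scale matrix (`proj`), and fusion is plain element-wise gating. The point it shows is that the full language vector is applied at every scale, rather than being mixed once into an already aggregated multi-scale image feature.

```python
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def fuse_multiscale(
    image_feats: Dict[str, List[Vector]],
    lang: Vector,
    proj: Dict[str, Matrix],
) -> Dict[str, List[Vector]]:
    """Fuse the language vector into each scale separately (hypothetical gating fusion)."""
    fused: Dict[str, List[Vector]] = {}
    for scale, positions in image_feats.items():
        # Project the sentence feature to this scale's channel width.
        lang_s = matvec(proj[scale], lang)
        out = []
        for pos in positions:
            if len(pos) != len(lang_s):
                raise ValueError(f"channel mismatch at scale {scale}")
            # Element-wise gating: every position at this scale is modulated
            # by the same projected language vector.
            out.append([p * l for p, l in zip(pos, lang_s)])
        fused[scale] = out
    return fused
```

A real implementation would use learned projections and richer fusion (for example attention), but the per-scale structure is the same.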

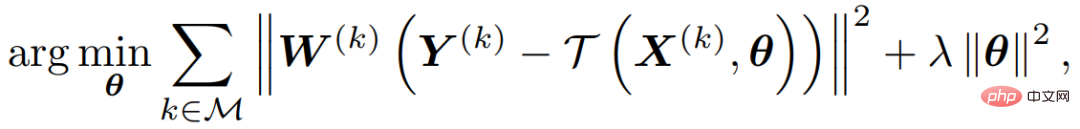

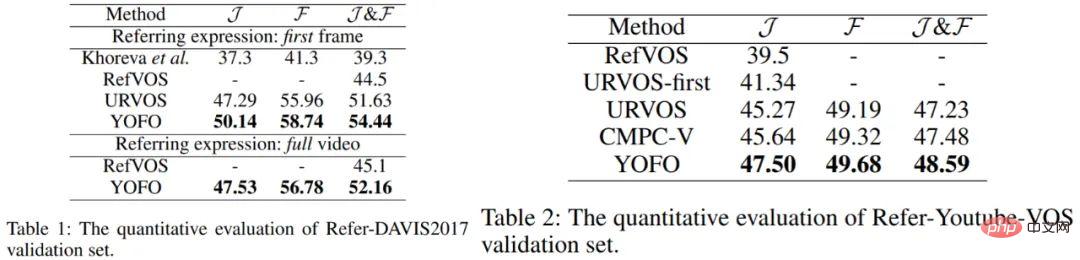

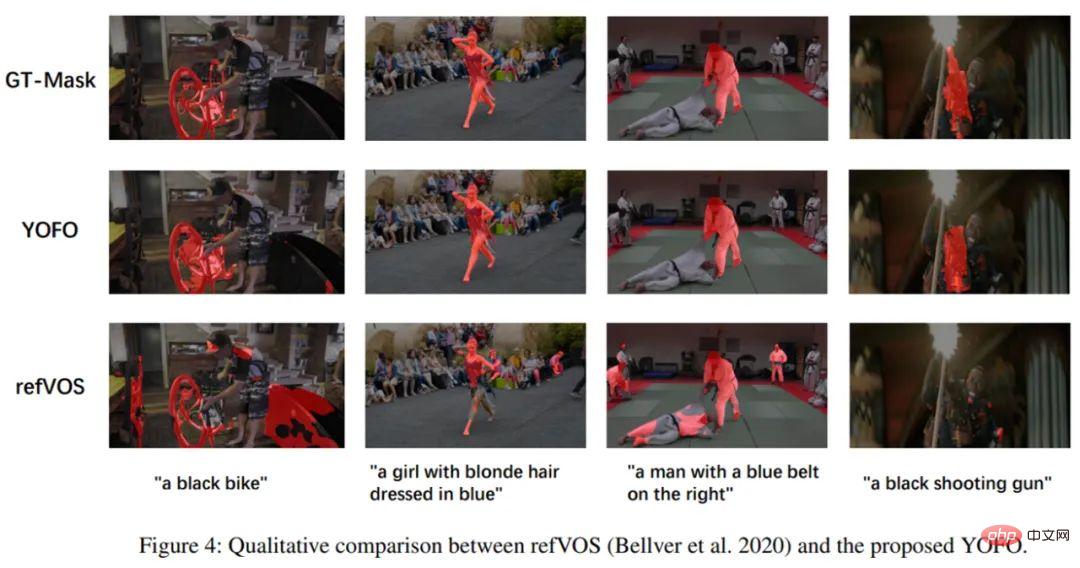

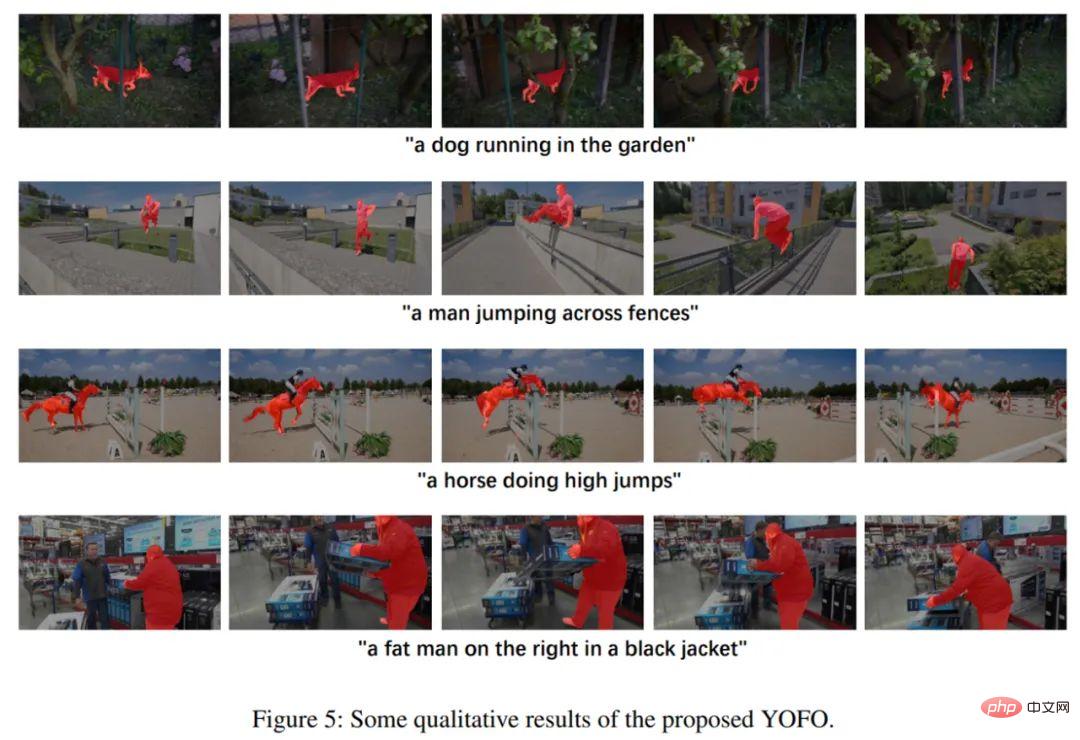

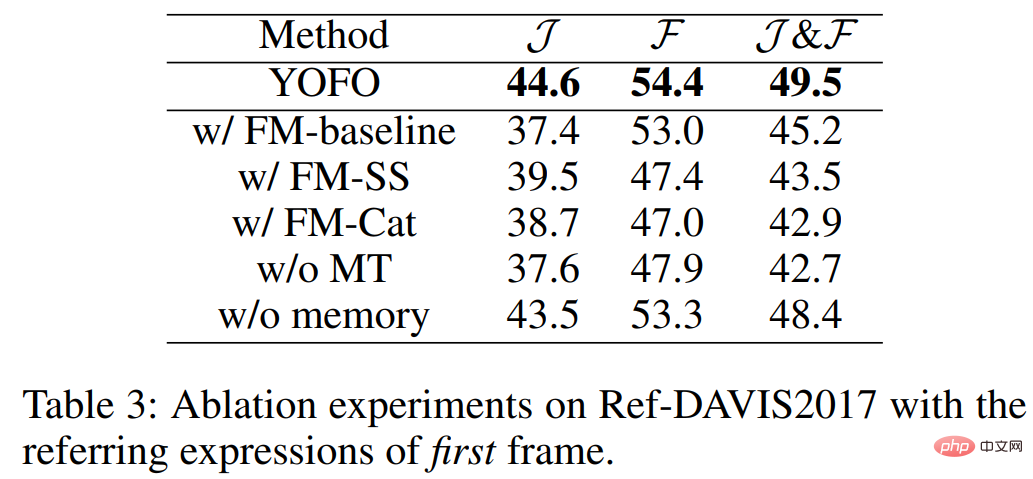

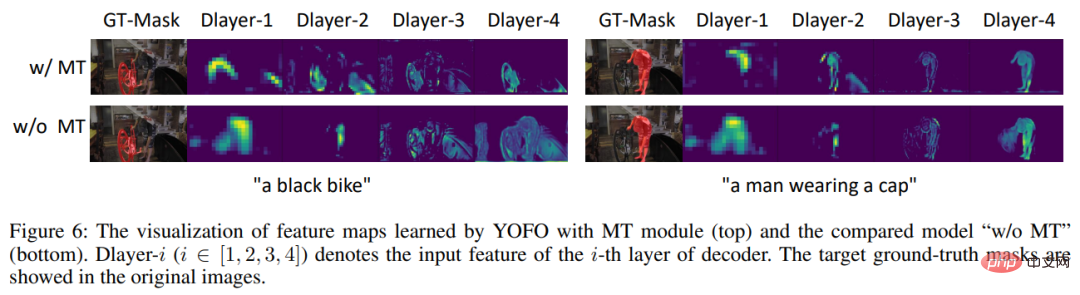

## Meta-transfer module

The meta-transfer module adopts a learning-to-learn strategy, and the process can be described by a mapping function. The transfer function is a convolution, and its convolution kernel parameters are what the module optimizes. The optimization process can be expressed as an objective function in which M denotes a memory bank that stores historical information, W denotes weights over spatial positions that let the module pay different amounts of attention to different positions in the feature, and Y denotes the bimodal features of each video frame stored in the memory bank. This optimization maximizes the ability of the meta-transfer function to reconstruct the bimodal features, and it also allows the entire framework to be trained end-to-end.

## Training and testing

Training uses the Lovász loss on two video datasets, Ref-DAVIS2017 and Ref-Youtube-VOS. The static dataset Ref-COCO is also used as auxiliary training data: its images are randomly affine-transformed to simulate video. The meta-transfer process is performed during both training and inference, and the entire network runs at 10 FPS on a GTX 1080 Ti.

The method achieves excellent results on the two mainstream RVOS datasets (Ref-DAVIS2017 and Ref-Youtube-VOS). The quantitative results and some visualizations are as follows:

Figure 3: Quantitative results on the two mainstream datasets.

Figure 4: Visualization on the VOS dataset.

Figure 5: Other visualization results of YOFO.

Figure 6: Effectiveness of the feature mining module (FM) and the meta-transfer module (MT).

Figure 7: Comparison of decoder output features before and after using the MT module.
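The meta-transfer objective described in the meta-transfer module (memory bank M, position weights W, stored bimodal features Y, and a convolutional transfer function) can be illustrated with a small sketch. The article does not give the formula in text, so this assumes a position-weighted least-squares reconstruction with an L2 penalty, $\min_\theta \sum_i W_i \lVert \theta * M_i - Y_i \rVert^2 + \lambda \lVert \theta \rVert^2$, where $\theta$ is the kernel of a 1x1 convolution (at each position it acts as a matrix). The 1x1 kernel size, the value of `lam`, and plain gradient descent as the inner solver are all assumptions of this sketch, not details confirmed by the paper.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def apply_kernel(theta: Matrix, x: Vector) -> Vector:
    """A 1x1 convolution at a single position is a matrix-vector product."""
    return [sum(t * xi for t, xi in zip(row, x)) for row in theta]


def weighted_loss(theta: Matrix, memory: List[Vector], targets: List[Vector],
                  weights: List[float], lam: float) -> float:
    """Assumed objective: sum_i W_i * ||theta * M_i - Y_i||^2 + lam * ||theta||^2."""
    loss = 0.0
    for m, y, w in zip(memory, targets, weights):
        residual = [p - t for p, t in zip(apply_kernel(theta, m), y)]
        loss += w * sum(r * r for r in residual)
    return loss + lam * sum(t * t for row in theta for t in row)


def fit_transfer_kernel(memory: List[Vector], targets: List[Vector], weights: List[float],
                        lam: float = 1e-3, lr: float = 0.05, steps: int = 500) -> Matrix:
    """Solve for the 1x1 kernel by gradient descent (hypothetical inner solver)."""
    c_out, c_in = len(targets[0]), len(memory[0])
    theta = [[0.0] * c_in for _ in range(c_out)]
    for _ in range(steps):
        # Gradient of the L2 penalty term.
        grad = [[2.0 * lam * theta[o][i] for i in range(c_in)] for o in range(c_out)]
        # Gradient of the weighted reconstruction term; W_i = 0 ignores position i.
        for m, y, w in zip(memory, targets, weights):
            pred = apply_kernel(theta, m)
            for o in range(c_out):
                err = pred[o] - y[o]
                for i in range(c_in):
                    grad[o][i] += 2.0 * w * err * m[i]
        theta = [[theta[o][i] - lr * grad[o][i] for i in range(c_in)] for o in range(c_out)]
    return theta
```

Because the solve is a differentiable function of M, W and Y, it can sit inside the network, which is consistent with the article's statement that the whole framework trains end-to-end; a zero weight in `weights` removes a position from the fit entirely.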
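The training setup names the Lovász loss without specifying a variant. A common choice for binary masks is the Lovász hinge of Berman, Rannen Triki and Blaschko (CVPR 2018), a convex surrogate for the Jaccard (IoU) loss. The sketch below implements that binary, per-image variant on flattened logits and labels; whether YOFO uses exactly this variant is an assumption.

```python
from typing import List, Sequence


def lovasz_grad(gt_sorted: Sequence[float]) -> List[float]:
    """Gradient of the Lovász extension of the Jaccard loss w.r.t. sorted errors."""
    gts = sum(gt_sorted)
    grads: List[float] = []
    cum_gt = cum_neg = prev = 0.0
    for g in gt_sorted:
        cum_gt += g
        cum_neg += 1.0 - g
        intersection = gts - cum_gt
        union = gts + cum_neg  # always >= 1, so no division by zero
        jaccard = 1.0 - intersection / union
        grads.append(jaccard - prev)  # discrete derivative along the sorted order
        prev = jaccard
    return grads


def lovasz_hinge(logits: Sequence[float], labels: Sequence[int]) -> float:
    """Binary Lovász hinge on flattened per-pixel logits and {0, 1} labels."""
    if len(logits) != len(labels):
        raise ValueError("logits and labels must have the same length")
    if not logits:
        return 0.0
    signs = [2.0 * l - 1.0 for l in labels]  # map {0, 1} labels to {-1, +1}
    errors = [1.0 - x * s for x, s in zip(logits, signs)]  # hinge errors
    order = sorted(range(len(errors)), key=lambda i: errors[i], reverse=True)
    grad = lovasz_grad([float(labels[i]) for i in order])
    # Dot product of the clipped, sorted errors with the Lovász gradient.
    return sum(max(errors[i], 0.0) * g for i, g in zip(order, grad))
```

Unlike per-pixel cross-entropy, this loss optimizes a surrogate of IoU directly, which matches the region-overlap metrics typically reported for RVOS.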
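Simulating video from a static Ref-COCO image with random affine transformations can be sketched as below. The article only says the transformations are random and affine; the parameter ranges, the nearest-neighbour resampling, and drawing each frame independently from the original image are assumptions of this sketch. The same parameters are applied to the image and its mask so the two stay aligned.

```python
import math
import random
from typing import List, Tuple

Grid = List[List[float]]


def affine_warp(img: Grid, angle_deg: float, scale: float, tx: float, ty: float,
                fill: float = 0.0) -> Grid:
    """Rotate/scale about the centre, then translate; nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: undo the translation, then the rotation and scale.
            dx, dy = x - cx - tx, y - cy - ty
            sx = (cos_a * dx + sin_a * dy) / scale + cx
            sy = (-sin_a * dx + cos_a * dy) / scale + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def simulate_clip(img: Grid, mask: Grid, num_frames: int, rng: random.Random,
                  max_angle: float = 10.0, scale_range: Tuple[float, float] = (0.9, 1.1),
                  max_shift: float = 2.0) -> List[Tuple[Grid, Grid]]:
    """Build a pseudo-video of (frame, mask) pairs from one annotated image."""
    clip = []
    for _ in range(num_frames):
        params = (rng.uniform(-max_angle, max_angle), rng.uniform(*scale_range),
                  rng.uniform(-max_shift, max_shift), rng.uniform(-max_shift, max_shift))
        # Identical parameters keep the mask aligned with its frame.
        clip.append((affine_warp(img, *params), affine_warp(mask, *params)))
    return clip
```

Nearest-neighbour sampling keeps a binary mask binary; a real pipeline would more likely use bilinear interpolation for the image and nearest-neighbour only for the mask.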

The above describes YOFO, the referring video object segmentation method from Meitu and Dalian University of Technology that is based on cross-modal meta-transfer and requires only a single stage.