Hello everyone, I am Teacher Fei. scikit-learn is a classic machine learning framework that has been under development for more than ten years, but its speed has long been criticized by users. Those familiar with scikit-learn will know that its built-in acceleration features, which rely on libraries such as joblib, have limited effect and cannot make full use of the available computing power.

The technique I want to introduce today can improve the computing efficiency of scikit-learn by dozens or even thousands of times without changing your original code. Let's go!

To get this speedup, we only need to install the sklearnex extension library, which greatly improves computing efficiency on devices with Intel processors.

To be cautious, we can run the experiment in a separate conda virtual environment. The full set of commands is as follows; we also install jupyterlab as the IDE:

conda create -n scikit-learn-intelex-demo python=3.8 -c https://mirrors.sjtug.sjtu.edu.cn/anaconda/pkgs/main -y

conda activate scikit-learn-intelex-demo

pip install scikit-learn scikit-learn-intelex jupyterlab -i https://pypi.douban.com/simple/
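Before moving on, it can help to confirm that the packages actually landed in the new environment. The snippet below is a minimal sketch that uses only the standard library's `importlib.metadata` (available from Python 3.8, the version installed above); `installed_versions` is a hypothetical helper name, not part of any of these packages.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    wanted = ["scikit-learn", "scikit-learn-intelex", "jupyterlab"]
    for name, version in installed_versions(wanted).items():
        print(f"{name}: {version or 'not installed'}")
```

Run it with the `scikit-learn-intelex-demo` environment activated; any package reported as not installed points to a failed `pip install` step.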

With the experimental environment ready, we write test code in JupyterLab to see the acceleration effect. Usage is very simple: we only need to run the following code before importing any scikit-learn modules:

from sklearnex import patch_sklearn, unpatch_sklearn

patch_sklearn()

Once acceleration mode has been turned on successfully, a confirmation message is printed.

After that, simply continue running your original scikit-learn code. I ran a brief test on the old Savior laptop that I normally use for writing and for developing open source projects.
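To confirm that the patch really took effect before running existing code, one rough check is to look at which package an estimator class comes from. The sketch below assumes that patched estimators are defined under the `sklearnex` or `daal4py` packages; that is an assumption about the extension's internals, not something stated in this article, and `is_accelerated` and `check_patch` are hypothetical helper names.

```python
def is_accelerated(estimator_cls):
    """Heuristic: True if the class is defined in an accelerator package.

    Assumption: patched estimators live under `sklearnex` or `daal4py`.
    """
    top_level_package = estimator_cls.__module__.split(".")[0]
    return top_level_package in {"sklearnex", "daal4py"}


def check_patch():
    """Patch scikit-learn, then report whether LinearRegression was swapped."""
    from sklearnex import patch_sklearn

    patch_sklearn()
    # Import only after patching so the accelerated class is picked up.
    from sklearn.linear_model import LinearRegression

    return is_accelerated(LinearRegression)
```

If `check_patch()` returns `False`, the most likely cause is that the scikit-learn module was imported before `patch_sklearn()` ran, so restarting the kernel and patching first is worth trying.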

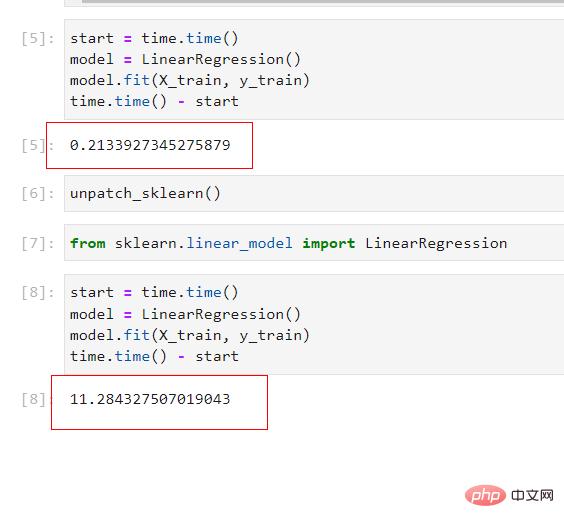

Taking linear regression as an example, on a sample data set with millions of samples and hundreds of features, fitting the model on the training set takes only 0.21 seconds with acceleration turned on. After forcibly turning acceleration off with unpatch_sklearn() (note that the scikit-learn modules need to be re-imported), the training time rises to 11.28 seconds, which means that sklearnex gave us a speedup of more than 50 times!
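The comparison described here can be reproduced along the following lines. This is a sketch, not the exact code behind the timings quoted above: the synthetic data, its default size (smaller than the article's data set so it runs quickly), and the helper names `make_data`, `time_fit`, and `compare` are all assumptions made for illustration.

```python
import time

import numpy as np


def make_data(n_samples=100_000, n_features=50, seed=0):
    """Build a synthetic linear regression problem with Gaussian noise."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n_samples, n_features))
    coef = rng.standard_normal(n_features)
    y = X @ coef + 0.1 * rng.standard_normal(n_samples)
    return X, y


def time_fit(make_model, X, y):
    """Return the wall-clock seconds taken by make_model().fit(X, y)."""
    start = time.perf_counter()
    make_model().fit(X, y)
    return time.perf_counter() - start


def compare(X, y):
    """Time LinearRegression with acceleration on, then with it off."""
    from sklearnex import patch_sklearn, unpatch_sklearn

    patch_sklearn()
    from sklearn.linear_model import LinearRegression  # import after patching
    accelerated = time_fit(LinearRegression, X, y)

    unpatch_sklearn()
    from sklearn.linear_model import LinearRegression  # re-import stock class
    stock = time_fit(LinearRegression, X, y)

    return accelerated, stock
```

Calling `compare(*make_data())` returns the two timings in seconds. The ratio depends heavily on the CPU and on the data size, so it will not necessarily match the figures above.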

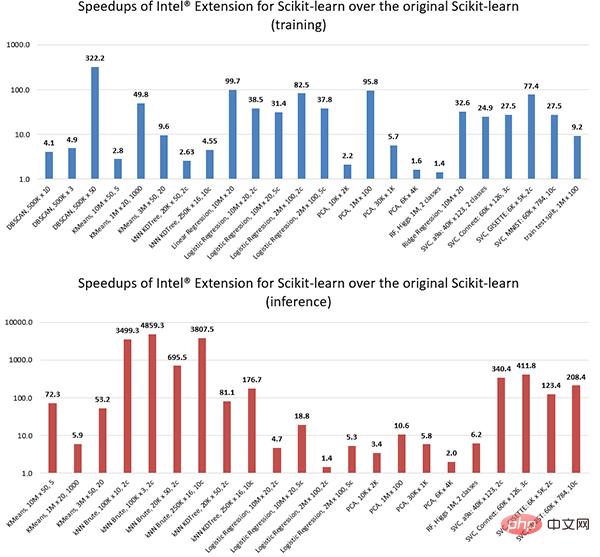

According to the official statement, the more powerful the CPU, the greater the performance improvement. In the official benchmarks of a series of algorithms on an Intel Xeon Platinum 8275CL processor, the gains apply not only to training speed but also to model inference and prediction speed, and in some scenarios the improvement even reaches thousands of times.

The official repository also provides some ipynb examples (https://github.com/intel/scikit-learn-intelex/tree/master/examples/notebooks) covering commonly used algorithms such as K-means, DBSCAN, random forest, logistic regression, and ridge regression. Interested readers can download and study them.
