This article introduces in detail the three most common hyperparameter optimization methods used to improve machine learning results: grid search, random search, and Bayesian optimization.

When trying to improve a machine learning model, the first solution that often comes to mind is to add more training data. Additional data usually helps (though not in every circumstance), but generating high-quality data can be very expensive. Hyperparameter optimization saves time and resources by using the existing data to get the best possible model performance.

As the name suggests, hyperparameter optimization is the process of finding the combination of hyperparameters that optimizes a machine learning model's objective function (i.e., maximizes the model's performance on the dataset under study). In other words, each model exposes multiple tuning “knobs” that we can adjust until we reach an optimal combination of hyperparameters. Examples of hyperparameters we can tune include the learning rate, the architecture of a neural network (e.g., the number of hidden layers), and regularization settings.
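To make the “objective function” concrete, here is a minimal sketch that treats the mean 5-fold cross-validated accuracy of a random forest on the Iris dataset (the same setup used in the implementations later in this article) as the quantity to maximize. The helper name `objective` and the two hyperparameter settings compared are illustrative assumptions, not part of the original article.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True)


def objective(params):
    """Hypothetical objective: mean 5-fold CV accuracy for one hyperparameter setting."""
    model = RandomForestClassifier(random_state=1234, **params)
    return cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()


# Turning the "knobs": evaluate two candidate settings of the same model
for params in ({"n_estimators": 10, "max_depth": 2},
               {"n_estimators": 100, "max_depth": 10}):
    print(params, round(objective(params), 3))
```

Every method in this article is, in the end, a strategy for deciding which `params` dictionaries to pass to a function like this one.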

I will provide a high-level comparison table at the beginning of the article for the reader’s reference, and then further explore, explain, and implement each item in the comparison table throughout the rest of the article.

Table 1: Comparison of hyperparameter optimization methods

1. Grid search algorithm

Grid search is perhaps the simplest and most intuitive hyperparameter optimization method: it performs an exhaustive search for the best combination of hyperparameters within a defined search space. The "search space" in this context is the full set of hyperparameters, together with the candidate values for each, considered during optimization. Let's look at an example to understand grid search better.

Suppose we have a machine learning model with only three hyperparameters, each of which can take one of the values listed below:

```
parameter_1 = [1, 2, 3]
parameter_2 = [a, b, c]
parameter_3 = [x, y, z]
```

We don’t know which combination of these hyperparameters will optimize the model's objective function (i.e., produce the best output for our machine learning model). In grid search, we simply try every combination, measure the model's performance for each one, and choose the combination that yields the best performance! In this example, parameter 1 can take 3 values (1, 2, or 3), parameter 2 can take 3 values (a, b, or c), and parameter 3 can take 3 values (x, y, or z), so there are 3 × 3 × 3 = 27 combinations in total. Grid search in this example therefore involves 27 rounds of evaluating the model's performance to find the best-performing combination.
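The exhaustive loop described above can be sketched with `itertools.product`. Two assumptions here: the placeholder values a, b, c and x, y, z are written as strings, and `toy_score` is a hypothetical stand-in for "train the model and measure its performance", chosen so the best combination is easy to verify by hand.

```python
from itertools import product

parameter_1 = [1, 2, 3]
parameter_2 = ["a", "b", "c"]
parameter_3 = ["x", "y", "z"]


def toy_score(p1, p2, p3):
    """Hypothetical performance measure; higher is better."""
    return p1 + "abc".index(p2) + "zyx".index(p3)


def grid_search(score_fn):
    # Evaluate every combination in the search space (3 * 3 * 3 = 27 rounds)
    results = {combo: score_fn(*combo)
               for combo in product(parameter_1, parameter_2, parameter_3)}
    best = max(results, key=results.get)
    return best, results[best], len(results)


best_combo, best_score, n_evaluated = grid_search(toy_score)
print(best_combo, best_score, n_evaluated)  # (3, 'c', 'x') 7 27
```

Because every combination is scored, the cost grows multiplicatively with each hyperparameter and each value added to the grid.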

As you can see, this method is very simple (similar to a trial and error task), but it also has some limitations. Let's summarize the advantages and disadvantages of this method.

Advantages:

To see grid search in action, we will tune a random forest classifier on the Iris dataset using the following hyperparameter search space:

```python
search_space = {'n_estimators': [10, 100, 500, 1000],
                'max_depth': [2, 10, 25, 50, 100],
                'min_samples_split': [2, 5, 10],
                'min_samples_leaf': [1, 5, 10]}
```

This search space contains 4 × 5 × 3 × 3 = 180 hyperparameter combinations in total. We will use grid search to find the combination that optimizes the objective function as follows:

```python
# Import libraries
import time

from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Load the Iris dataset
iris = load_iris()
X, y = iris.data, iris.target

# Define the hyperparameter search space (180 combinations)
search_space = {'n_estimators': [10, 100, 500, 1000],
                'max_depth': [2, 10, 25, 50, 100],
                'min_samples_split': [2, 5, 10],
                'min_samples_leaf': [1, 5, 10]}

# Define the random forest classifier
clf = RandomForestClassifier(random_state=1234)

# Create the optimizer: exhaustive search scored with 5-fold cross-validation
optimizer = GridSearchCV(clf, search_space, cv=5, scoring='accuracy')

# Store the start time to calculate the total running time
start_time = time.time()

# Fit the optimizer on the data
optimizer.fit(X, y)

# Store the end time to calculate the total running time
end_time = time.time()

# Print the best hyperparameter set and its corresponding score
print("selected hyperparameters:")
print(optimizer.best_params_)
print("")
print(f"best_score: {optimizer.best_score_}")
print(f"elapsed_time: {round(end_time - start_time, 1)}")
```

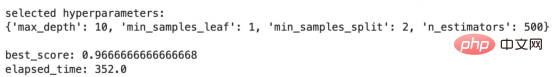

The output of the above code is as follows :

Here we can see the hyperparameter values selected by grid search. best_score is the evaluation result (the mean cross-validated accuracy) obtained with the selected set of hyperparameters, and elapsed_time is the time it took my local laptop to run this hyperparameter optimization strategy. Keep both values in mind as we move on to the next method, since we will compare against them. Now, let's get into the discussion of random search.

2. Random search algorithm

As the name suggests, random search is the process of randomly sampling hyperparameters from a defined search space. Unlike grid search, random search evaluates only a random subset of hyperparameter combinations, for a predefined number of iterations (chosen based on available resources such as time and budget, and on the goals of the project). It measures the machine learning model's performance for each sampled combination and then chooses the best-performing one.
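The sampling step can be sketched in a few lines of standard-library Python over the same 180-combination search space used in the grid search implementation. This is an illustration of the idea under assumed names (`sample_combinations`, seed 1234), not scikit-learn's code; scikit-learn draws its candidates internally via `ParameterSampler`, which also samples without replacement when every hyperparameter is given as a list.

```python
import random
from itertools import product

search_space = {"n_estimators": [10, 100, 500, 1000],
                "max_depth": [2, 10, 25, 50, 100],
                "min_samples_split": [2, 5, 10],
                "min_samples_leaf": [1, 5, 10]}


def sample_combinations(space, n_iter, seed=1234):
    """Draw n_iter distinct combinations uniformly at random from a discrete grid."""
    names = list(space)
    grid = list(product(*(space[name] for name in names)))  # all 180 combinations
    rng = random.Random(seed)
    picked = rng.sample(grid, k=min(n_iter, len(grid)))  # sampling without replacement
    return [dict(zip(names, combo)) for combo in picked]


candidates = sample_combinations(search_space, n_iter=50)
print(len(candidates), candidates[0])
```

Only these 50 candidates would then be evaluated, instead of all 180.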

Given this approach, random search is typically less expensive than an exhaustive grid search, but it still has its own advantages and disadvantages, as follows:

Advantages:

In the next method, we will address the "memoryless" shortcoming of grid and random search through Bayesian optimization. But before discussing that method, let's implement random search.

Using the following code snippet, we will implement random search hyperparameter optimization for the same problem described in the grid search implementation.

```python
# Import library
from sklearn.model_selection import RandomizedSearchCV

# Reuses time, clf, search_space, X and y from the grid search example above

# Create a RandomizedSearchCV object that samples 50 of the 180 combinations
optimizer = RandomizedSearchCV(clf, param_distributions=search_space,
                               n_iter=50, cv=5, scoring='accuracy',
                               random_state=1234)

# Store the start time to calculate the total running time
start_time = time.time()

# Fit the optimizer on the data
optimizer.fit(X, y)

# Store the end time to calculate the total running time
end_time = time.time()

# Print the optimal hyperparameter set and its corresponding score
print("selected hyperparameters:")
print(optimizer.best_params_)
print("")
print(f"best_score: {optimizer.best_score_}")
print(f"elapsed_time: {round(end_time - start_time, 1)}")
```

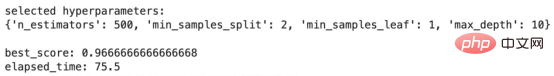

The output results of the above code are as follows:

Random search results

Compared with the grid search results, these results are very interesting. best_score remains the same, but elapsed_time drops from 352.0 seconds to 75.5 seconds! In other words, random search found a set of hyperparameters that performs as well as the grid search result in about 21% of the time grid search required (75.5 / 352.0 ≈ 0.21), a substantial gain in efficiency.

Next, let’s move on to Bayesian optimization, which learns from every attempt during the optimization process.

3. Bayesian optimization algorithm

Bayesian optimization is a hyperparameter optimization method that uses a probabilistic model to "learn" from previous attempts and direct the search toward the combination of hyperparameters in the search space that best optimizes the objective function of the machine learning model.
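The "learn from previous attempts" loop can be sketched as follows. This is a minimal illustration under assumed choices, not the internals of BayesSearchCV: it uses one common pairing of components, a Gaussian-process surrogate (scikit-learn's `GaussianProcessRegressor`) and the expected-improvement acquisition function, applied to a hypothetical one-dimensional `objective` whose maximum is at x = 2.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern


def objective(x):
    """Hypothetical objective to maximize (stands in for a validation score)."""
    return -(x - 2.0) ** 2 + 4.0


def expected_improvement(candidates, gp, best_y, xi=0.01):
    """Expected improvement over the best observation so far (maximization)."""
    mu, sigma = gp.predict(candidates, return_std=True)
    sigma = np.maximum(sigma, 1e-9)  # avoid division by zero at observed points
    improvement = mu - best_y - xi
    z = improvement / sigma
    return improvement * norm.cdf(z) + sigma * norm.pdf(z)


def bayesian_optimize(n_init=3, n_iter=10, seed=0):
    rng = np.random.default_rng(seed)
    candidates = np.linspace(0.0, 5.0, 501).reshape(-1, 1)  # discretized search space
    # Start with a few random evaluations to initialize the surrogate model
    X_obs = rng.uniform(0.0, 5.0, size=(n_init, 1))
    y_obs = objective(X_obs).ravel()
    gp = GaussianProcessRegressor(kernel=Matern(nu=2.5), alpha=1e-6,
                                  normalize_y=True, random_state=seed)
    for _ in range(n_iter):
        gp.fit(X_obs, y_obs)                      # update the probabilistic model
        ei = expected_improvement(candidates, gp, y_obs.max())
        x_next = candidates[[np.argmax(ei)]]      # most promising point, shape (1, 1)
        y_next = objective(x_next).ravel()        # evaluate the real objective there
        X_obs = np.vstack([X_obs, x_next])
        y_obs = np.concatenate([y_obs, y_next])
    best = np.argmax(y_obs)
    return X_obs[best, 0], y_obs[best]


best_x, best_y = bayesian_optimize()
print(f"best x: {best_x:.2f}, best objective: {best_y:.3f}")
```

Unlike the grid and random sketches, each new point here depends on every earlier evaluation, which is exactly the "memory" that grid and random search lack.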

The Bayesian optimization method can be divided into 4 steps, which I will describe below. I encourage you to read through these steps to better understand the process, but there is no prerequisite knowledge required to use this method.

If you are interested in learning more about Bayesian optimization, you can read the following post:

"Bayesian Optimization Algorithm in Machine Learning": https://medium.com/@fmnobar/conceptual-overview-of-bayesian-optimization-for-parameter-tuning-in-machine-learning-a3b1b4b9339f

Now that we understand how Bayesian optimization works, let’s look at its advantages and disadvantages.

Advantages:

3.1. Bayesian Optimization Algorithm Implementation

```python
# Import library (BayesSearchCV comes from the scikit-optimize package)
from skopt import BayesSearchCV

# Reuses time, RandomForestClassifier, search_space, X and y from the grid search example above

# Perform Bayesian optimization with only 10 iterations
optimizer = BayesSearchCV(estimator=RandomForestClassifier(),
                          search_spaces=search_space,
                          n_iter=10,
                          cv=5,
                          scoring='accuracy',
                          random_state=1234)

# Store the start time to calculate the total running time
start_time = time.time()

# Fit the optimizer on the data
optimizer.fit(X, y)

# Store the end time to calculate the total running time
end_time = time.time()

# Print the best hyperparameter set and its corresponding score
print("selected hyperparameters:")
print(optimizer.best_params_)
print("")
print(f"best_score: {optimizer.best_score_}")
print(f"elapsed_time: {round(end_time - start_time, 1)}")
```

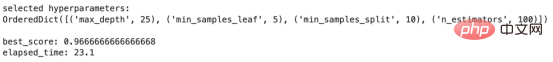

The output results of the above code are as follows:

Bayesian optimization results

Bayesian optimization results

Another set of interesting results! The best_score is consistent with the results we obtained with grid and random search, but the result took only 23.1 seconds compared to 75.5 seconds for random search and 352.0 seconds for grid search! In other words, using Bayesian optimization takes approximately 93% less time than grid search. This is a huge productivity boost that becomes even more meaningful in larger, more complex models and search spaces.

Note that Bayesian optimization used only 10 iterations to obtain these results (compared with 50 sampled combinations for random search and all 180 combinations for grid search), because, unlike random and grid search, it learns from previous iterations.
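A rough way to see where the time goes is to count model fits: each evaluated combination is trained and scored once per cross-validation fold (cv=5 in all three implementations). The sketch below uses only the iteration counts from the code above; it ignores the final refit of the best model, and since the elapsed times also depend on which n_estimators values each method happened to try, the fit count is only a proxy for running time.

```python
from math import prod

search_space = {"n_estimators": [10, 100, 500, 1000],
                "max_depth": [2, 10, 25, 50, 100],
                "min_samples_split": [2, 5, 10],
                "min_samples_leaf": [1, 5, 10]}
cv_folds = 5

# Number of hyperparameter combinations each method evaluates
grid_candidates = prod(len(values) for values in search_space.values())  # 4 * 5 * 3 * 3
iterations = {"grid search": grid_candidates,
              "random search": 50,
              "Bayesian optimization": 10}

# Each combination is fitted once per cross-validation fold
fits = {method: n * cv_folds for method, n in iterations.items()}
print(fits)  # {'grid search': 900, 'random search': 250, 'Bayesian optimization': 50}
```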

The table below compares the results of the three methods discussed so far. The “Methodology” column describes the hyperparameter optimization method used, followed by the hyperparameters selected by each method. “Best Score” is the score obtained with each method, and “Elapsed Time” is how long the optimization strategy took to run on my local laptop. The last column, “Gained Efficiency”, uses grid search as the baseline and calculates the efficiency each of the other two methods gains relative to it, based on elapsed time. For example, since random search takes 75.5 seconds and grid search takes 352.0 seconds, the efficiency of random search relative to the grid search baseline is 1 - 75.5/352.0 ≈ 78.5%.
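The “Gained Efficiency” calculation is simple enough to write down directly. This sketch applies the formula 1 - elapsed / baseline to the elapsed times reported above (measured on the author's laptop); the function name `gained_efficiency` is an illustrative choice.

```python
elapsed_seconds = {"grid search": 352.0,
                   "random search": 75.5,
                   "Bayesian optimization": 23.1}


def gained_efficiency(elapsed, baseline):
    """Fraction of the baseline's running time saved: 1 - elapsed / baseline."""
    return 1 - elapsed / baseline


baseline = elapsed_seconds["grid search"]
efficiency = {method: gained_efficiency(t, baseline)
              for method, t in elapsed_seconds.items()}
for method, value in efficiency.items():
    print(f"{method}: {value:.2%}")
```

Grid search scores 0% by construction, since it is the baseline.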

Table 2: Method performance comparison

Two main conclusions can be drawn from the comparison table above:

In this article, we discussed what hyperparameter optimization is and introduced the three most common methods used for it. We then explained each of these methods in detail, implemented them in a classification exercise, and compared their results. We found that methods that learn from previous attempts, such as Bayesian optimization, can significantly improve efficiency, which can be a determining factor for large, complex models such as deep neural networks.

About the translator: Zhu Xianzhong, 51CTO community editor, 51CTO expert blogger, lecturer, computer teacher at a university in Weifang, and a veteran of the freelance programming industry.

Original title: Hyperparameter Optimization — Intro and Implementation of Grid Search, Random Search and Bayesian Optimization, author: Farzad Mahmoodinobar
