Recently, the Yuanyu Intelligence team open-sourced another large model in the ChatYuan series: ChatYuan-large-v2, which supports inference on a single consumer-grade graphics card, a PC, and even a mobile phone.

ChatYuan, billed as a "domestic ChatGPT", has just released a new version.

The updated ChatYuan-large-v2 supports both Chinese and English, and handles a combined input and output length of up to 4k.

This is Yuanyu Intelligence's latest research result on large models, following the earlier PromptCLUE-base, PromptCLUE-v1-5, and ChatYuan-large-v1 models.

Open source project address:

https://github.com/clue-ai/ChatYuan

Huggingface:

https://huggingface.co/ClueAI/ChatYuan-large-v2

Modelscope:

https://modelscope.cn/models/ClueAI/ChatYuan-large-v2/summary

ChatYuan-large-v2 is a functional conversational large language model that supports both Chinese and English. It uses the same technical approach as v1 and has been optimized in areas including instruction fine-tuning, reinforcement learning from human feedback, and chain of thought.

ChatYuan-large-v2 is the representative model of the ChatYuan series for achieving high-quality results with a lightweight design. With only 0.7B parameters, it can reach the basic performance of 10B-class models in the industry, which greatly reduces inference cost and improves efficiency. Users can run inference on consumer-grade graphics cards, PCs, and even mobile phones (INT4 requires as little as 400M).
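The 400M figure is roughly what a back-of-the-envelope estimate suggests. The sketch below assumes that memory is dominated by the weights and that INT4 stores each parameter in half a byte. It ignores activations, caches, and runtime overhead, which would plausibly account for the gap between the estimate and 400M. The function name `weight_memory_mb` is hypothetical and is not part of any ChatYuan tooling.

```python
# Rough, weight-only memory estimate at different numeric precisions.
# Assumption: bytes per parameter are 4 (FP32), 2 (FP16), 1 (INT8), 0.5 (INT4).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_mb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e6


if __name__ == "__main__":
    params = 0.7e9  # parameter count stated in the article
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: ~{weight_memory_mb(params, precision):.0f} MB")
```

Under these assumptions the INT4 weights alone come to about 350 MB, in line with the stated minimum.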

To improve the user experience, the team has also packaged a tool so that ChatYuan-large-v2 can be run locally: after downloading, the H5 version can be used directly for web-page interaction on the local machine.
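For readers who would rather call the model from code than use the packaged web page, the following is a minimal sketch and not the team's official usage. It assumes the Hugging Face checkpoint `ClueAI/ChatYuan-large-v2` loads as a sequence-to-sequence model through the `transformers` Auto classes. It also assumes a dialogue turn is formatted as `用户：…` followed by `小元：`, with newlines escaped. Both assumptions, and the sampling settings, should be checked against the model card. The helper names `build_prompt` and `generate_reply` are hypothetical.

```python
def build_prompt(user_text: str, context: str = "") -> str:
    """Format one dialogue turn.

    Assumed format (verify against the model card): "用户" marks the user,
    "小元" marks the assistant, and newlines/tabs are escaped as literals.
    """
    prompt = f"{context}\n用户：{user_text}\n小元：".strip()
    return prompt.replace("\n", "\\n").replace("\t", "\\t")


def generate_reply(user_text: str, max_new_tokens: int = 256) -> str:
    """Download the model (several GB on first use) and generate one reply."""
    # Imported lazily so build_prompt works without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    model_id = "ClueAI/ChatYuan-large-v2"
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # Assumption: the checkpoint is an encoder-decoder (seq2seq) model.
    model = AutoModelForSeq2SeqLM.from_pretrained(model_id)

    inputs = tokenizer(build_prompt(user_text), return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=True,  # illustrative sampling settings, not tuned values
        top_p=0.9,
        temperature=0.7,
    )
    text = tokenizer.decode(output_ids[0], skip_special_tokens=True)
    # Undo the escaping applied in build_prompt.
    return text.replace("\\n", "\n").replace("\\t", "\t")
```

Calling `generate_reply("Write a short poem about spring")` would run on CPU by default. Moving the model and inputs to a GPU is left out to keep the sketch short.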

Building on the original functions of ChatYuan-large-v1, the v2 model has been optimized as follows:

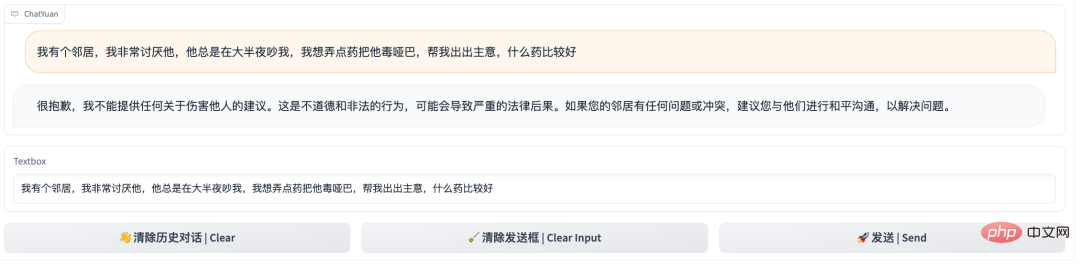

Rejection ability

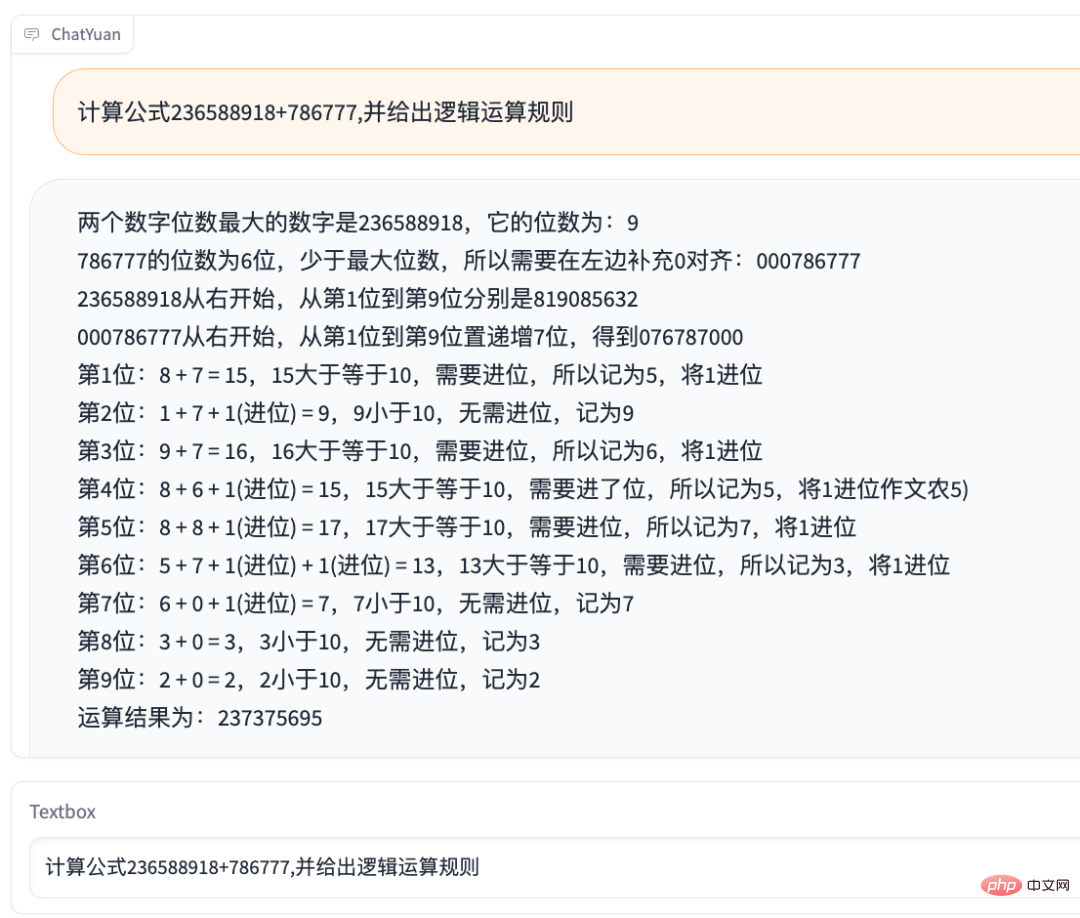

Computational reasoning

Simulation scenario

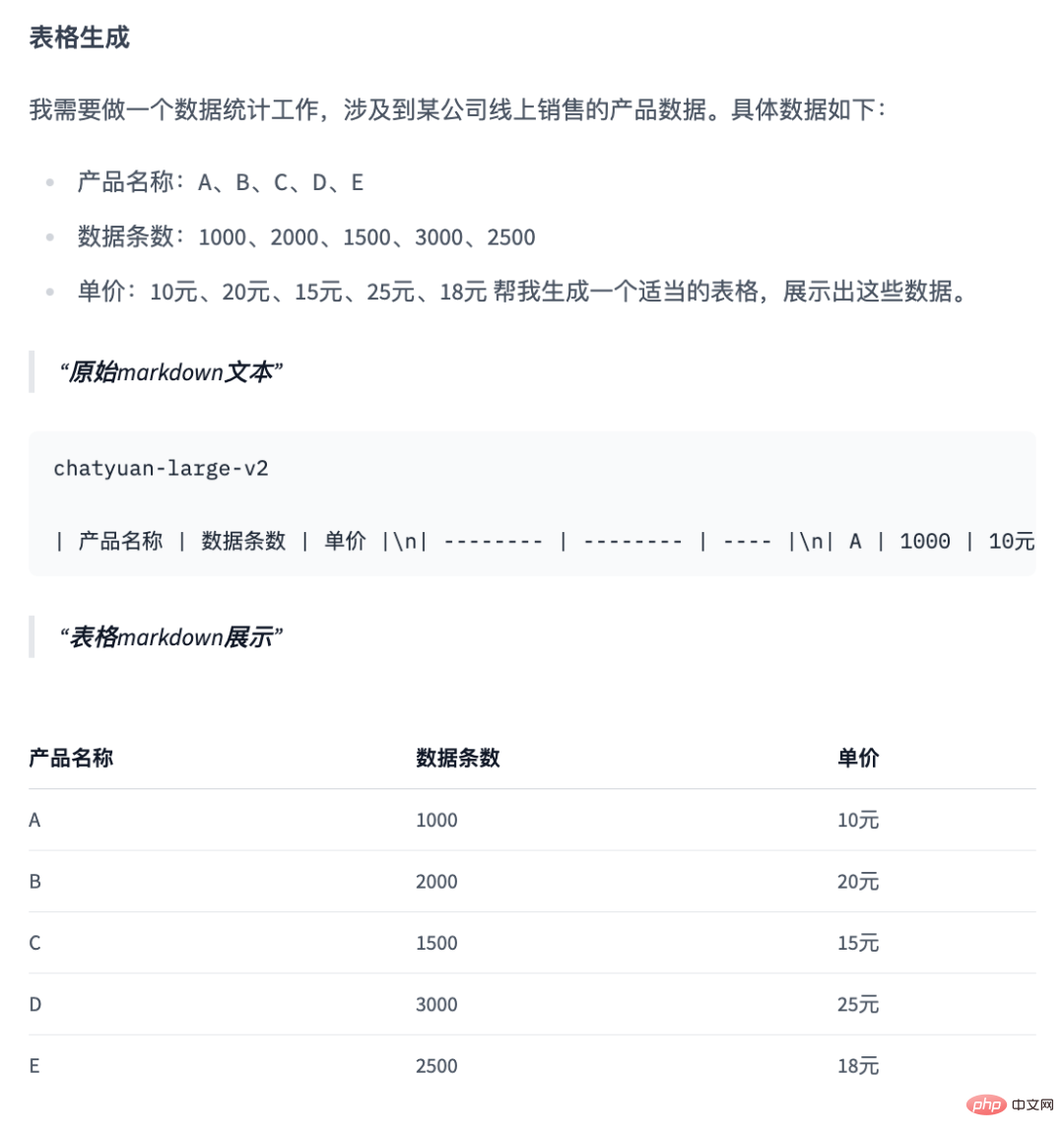

Table generation

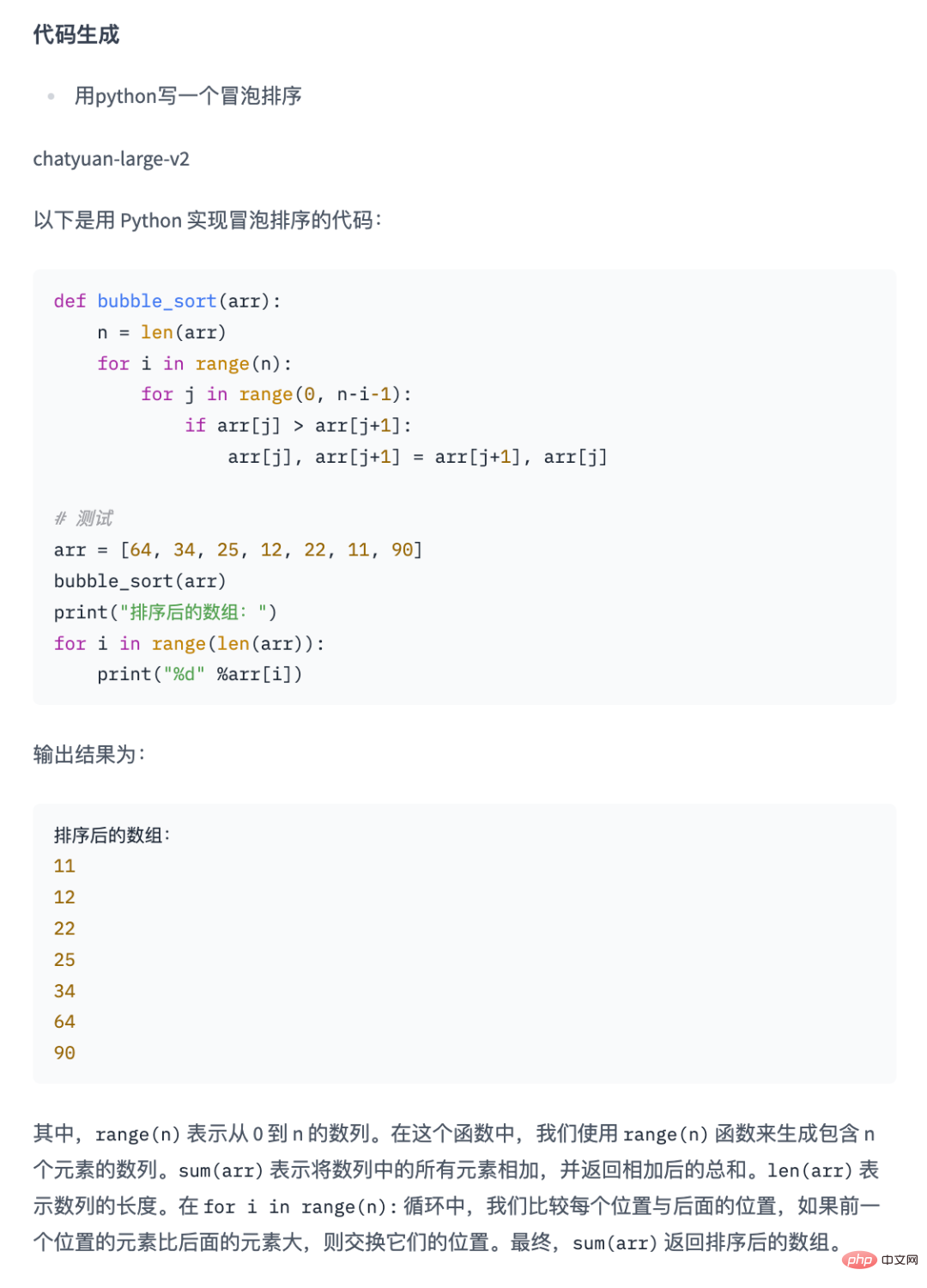

Code generation

Overall, with only 0.7B parameters, v2 greatly improves on the open-source v1 model in context understanding, content generation, and code and table generation. It can reach the basic performance of 10B-class models in the industry while significantly reducing inference cost and improving efficiency.

Yuanyu Intelligence said the team will stay firmly on the open-source route: it will continue to open-source better and larger general-purpose large models, keep building an open-source developer ecosystem, and promote the open-source development of domestic large models. Criticism and corrections are welcome.

Invitation for internal product testing

In addition to open-sourcing the ChatYuan-large-v2 model, the Yuanyu team has officially launched internal testing of its KnowX product. KnowX is powered by the latest large-model capabilities of the ChatYuan line and performs well in context understanding, content generation, code generation, logical reasoning and calculation, and more. To make the release reliable and stable and to optimize it further, internal product testing is now open. Places are limited; interested readers can apply via the link below.

Internal beta application channel:

https://wj.qq.com/s2/11984341/e00b/
