This article summarizes Python's support for multiple processes: what a process is, how to create one, how processes synchronize and exchange data, and how to use a process pool. I hope it will be helpful to everyone.


Program: a file such as xxx.py is a program, which is static.

Process: once a program is run, the running code together with the resources it uses is called a process. A process is the basic unit to which the operating system allocates resources. Multitasking can be achieved not only with threads but also with processes.

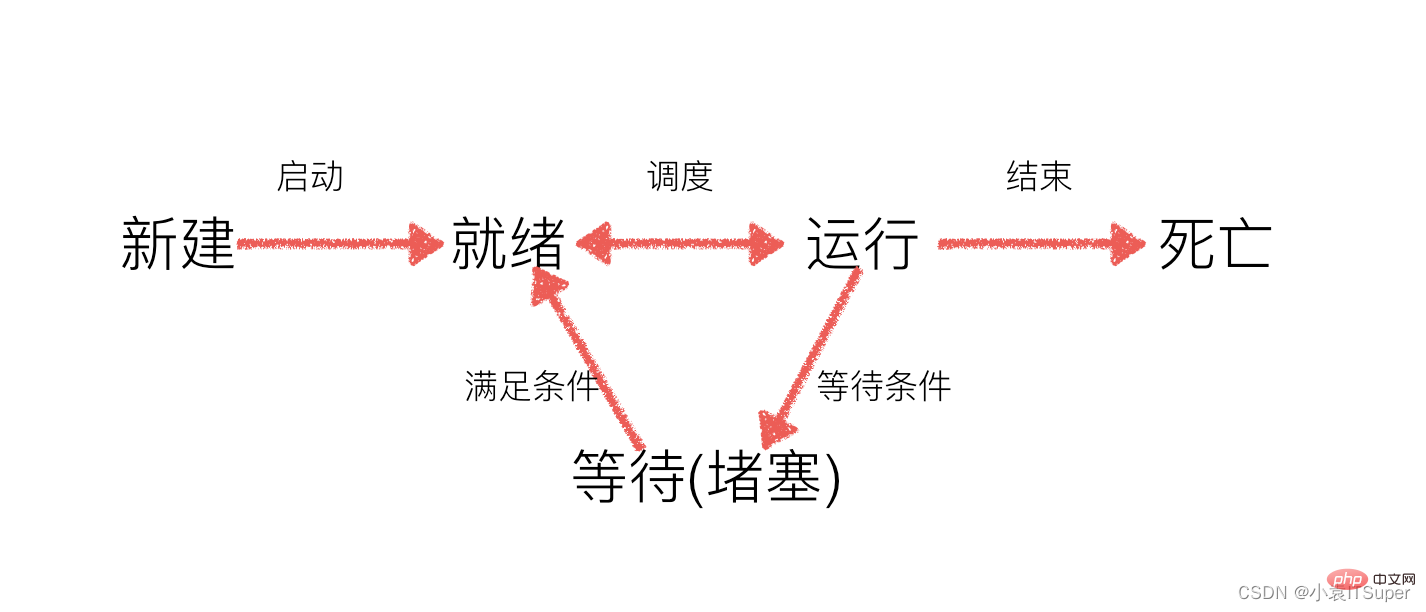

In practice, the number of tasks is often greater than the number of CPU cores, so at any moment some tasks are being executed while others wait for the CPU. This is why a process passes through different states.

The multiprocessing module creates a process by constructing a Process object and then calling its start() method. Process follows the same API as threading.Thread.

Syntax: multiprocessing.Process(group=None, target=None, name=None, args=(), kwargs={}, *, daemon=None)

Parameter description:

- group: specifies the process group; it is not used in most cases
- target: a function reference; the child process executes the code of this function
- name: a name for the process; setting it is optional
- args: the positional arguments passed to the function specified by target, given as a tuple
- kwargs: the named (keyword) arguments passed to the function specified by target

A multiprocessing.Process object has the following methods and attributes:

| Method / attribute | Description |
|---|---|
| run() | The method that carries out the process's work |
| start() | Start the child process instance (create the child process) |
| join([timeout]) | If the optional timeout is None (the default), block until the process whose join() method was called terminates; if timeout is a positive number, block for at most timeout seconds |
| name | The name of the process; the default is Process-N, where N is an integer counting up from 1 |
| pid | The PID (process ID) of the process |
| is_alive() | Whether the process is still alive |
| exitcode | The exit code of the child process |
| daemon | The daemon flag of the process, a Boolean value |
| authkey | The authentication key of the process |
| sentinel | A numeric handle of a system object that becomes ready when the process ends |
| terminate() | Terminate the child process immediately, whether or not its task has finished |
| kill() | Same as terminate(), but uses the SIGKILL signal on Unix |
| close() | Close the Process object and release all resources associated with it |
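As a rough illustration of the parameters and attributes listed above, the sketch below creates one child process and inspects it before and after it runs. The greet function and its arguments are hypothetical names made up for this example; only Process and its attributes come from the multiprocessing module.

```python
import multiprocessing
import os
import time


def greet(name, repeat=1, delay=0.1):
    """Hypothetical task: print a greeting `repeat` times."""
    for _ in range(repeat):
        print("hello %s from pid %d" % (name, os.getpid()))
        time.sleep(delay)


if __name__ == "__main__":
    p = multiprocessing.Process(
        target=greet,           # function run in the child process
        name="greeter",         # optional process name
        args=("world",),        # positional arguments, as a tuple
        kwargs={"repeat": 2},   # keyword arguments, as a dict
    )
    # Before start() there is no child yet: pid is None, is_alive() is False.
    print("before start:", p.name, p.pid, p.is_alive())

    p.start()
    print("after start:", p.pid, p.is_alive())

    p.join()  # block until the child terminates
    print("after join:", p.is_alive(), p.exitcode)
```

The `if __name__ == "__main__":` guard matters on platforms where child processes are spawned rather than forked, because the child imports the main module again.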

The main methods of multiprocessing.Queue:

| Method | Description |
|---|---|
| q = Queue() | Create a Queue object. If no maximum number of messages is given in the parentheses, or the number is negative, there is no upper limit on the number of messages the queue can hold (until memory runs out) |
| Queue.qsize() | Return the number of messages currently in the queue |
| Queue.empty() | Return True if the queue is empty, otherwise False |
| Queue.full() | Return True if the queue is full, otherwise False |
| Queue.get([block[, timeout]]) | Take one message from the queue and remove it from the queue. block defaults to True. 1. If block keeps its default value and no timeout (in seconds) is set, the call blocks while the queue is empty until a message can be read. If timeout is set, it waits at most timeout seconds and raises the queue.Empty exception if no message has been read by then. 2. If block is False and the queue is empty, queue.Empty is raised immediately |
| Queue.get_nowait() | Equivalent to Queue.get(False) |
| Queue.put(item[, block[, timeout]]) | Write the message item to the queue. block defaults to True. 1. If block keeps its default value and no timeout (in seconds) is set, the call blocks while the queue has no free space until space becomes available. If timeout is set, it waits at most timeout seconds and raises the queue.Full exception if there is still no space. 2. If block is False and the queue has no free space, queue.Full is raised immediately |
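A minimal sketch of how these Queue methods might be used between two processes. It assumes a None sentinel to mark the end of the data, which is a convention chosen for this example and not something the module requires; producer and drain are hypothetical helper names.

```python
import multiprocessing
import queue  # multiprocessing.Queue raises queue.Empty and queue.Full


def producer(q, items):
    """Put every item on the queue, then a None sentinel to signal the end."""
    for item in items:
        q.put(item)  # blocks while a bounded queue is full
    q.put(None)


def drain(q, timeout=5):
    """Read messages until the sentinel arrives or the queue stays empty."""
    received = []
    while True:
        try:
            item = q.get(timeout=timeout)
        except queue.Empty:
            break  # nothing arrived within `timeout` seconds
        if item is None:
            break
        received.append(item)
    return received


if __name__ == "__main__":
    q = multiprocessing.Queue(maxsize=3)  # at most 3 pending messages
    p = multiprocessing.Process(target=producer, args=(q, list("abc")))
    p.start()
    print(drain(q))  # read in the parent while the child writes
    p.join()         # join only after the queue has been drained
```

Draining the queue before calling join() avoids waiting on a child that is itself blocked in put().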

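The main methods of multiprocessing.Pool:

| Method | Description |
|---|---|
| close() | Close the Pool so that it accepts no new tasks |
| terminate() | Terminate immediately, whether or not the tasks have finished |
| join() | Block the main process until the child processes exit; must be called after close() or terminate() |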

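When initializing a Pool, you can specify a maximum number of processes, and the pool starts that many worker processes. When a new request is submitted to the Pool, an idle worker process executes it. If every process in the pool is busy, the request waits until one of them finishes its current task, and that same process is then reused for the new task. See the example below: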

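    # -*- coding:utf-8 -*-
    from multiprocessing import Pool
    import os, time, random


    def worker(msg):
        t_start = time.time()
        print("%s started, PID %d" % (msg, os.getpid()))
        # random.random() returns a random float in [0, 1)
        time.sleep(random.random() * 2)
        t_stop = time.time()
        print(msg, "finished, took %0.2f" % (t_stop - t_start))


    if __name__ == "__main__":  # needed where child processes are spawned, not forked
        po = Pool(3)  # create a process pool with at most 3 processes
        for i in range(0, 10):
            # Pool().apply_async(target to call, (tuple of arguments for the target,))
            # each task is run by whichever child process becomes idle
            po.apply_async(worker, (i,))

        print("----start----")
        po.close()  # close the pool; po accepts no new requests afterwards
        po.join()  # wait for all child processes in po to finish; must come after close()
        print("-----end-----")

Sample output:

    ----start----
    0 started, PID 21466
    1 started, PID 21468
    2 started, PID 21467
    0 finished, took 1.01
    3 started, PID 21466
    2 finished, took 1.24
    4 started, PID 21467
    3 finished, took 0.56
    5 started, PID 21466
    1 finished, took 1.68
    6 started, PID 21468
    4 finished, took 0.67
    7 started, PID 21467
    5 finished, took 0.83
    8 started, PID 21466
    6 finished, took 0.75
    9 started, PID 21468
    7 finished, took 1.03
    8 finished, took 1.05
    9 finished, took 1.69
    -----end-----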

If you create processes with a Pool, you need to use the Queue() from multiprocessing.Manager() rather than multiprocessing.Queue(). Otherwise you get the following error: RuntimeError: Queue objects should only be shared between processes through inheritance.
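One way to see where this error comes from, as a sketch: a Pool sends task arguments to its worker processes by pickling them, and a multiprocessing.Queue refuses to be pickled unless a child process is being started at that moment. The helper pickles_cleanly is a hypothetical name used only here.

```python
import multiprocessing
import pickle


def pickles_cleanly(obj):
    """Return True if obj can be pickled, False if pickling raises RuntimeError."""
    try:
        pickle.dumps(obj)
        return True
    except RuntimeError as exc:
        print("cannot pickle %s: %s" % (type(obj).__name__, exc))
        return False


if __name__ == "__main__":
    # A plain multiprocessing.Queue is meant to be inherited by a child
    # process at creation time, so pickling it as a task argument is rejected.
    print(pickles_cleanly(multiprocessing.Queue()))

    # A Manager queue is a proxy to a queue that lives in the manager's
    # server process; the proxy itself can be pickled and sent to workers.
    with multiprocessing.Manager() as manager:
        print(pickles_cleanly(manager.Queue()))
```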

The following example demonstrates how processes in a process pool communicate:

    # -*- coding:utf-8 -*-
    # import Manager instead of Queue
    from multiprocessing import Manager, Pool
    import os, time


    def reader(q):
        print("reader started (%s), parent process is (%s)" % (os.getpid(), os.getppid()))
        for i in range(q.qsize()):
            print("reader got message from Queue: %s" % q.get(True))


    def writer(q):
        print("writer started (%s), parent process is (%s)" % (os.getpid(), os.getppid()))
        for i in "itcast":
            q.put(i)


    if __name__ == "__main__":
        print("(%s) start" % os.getpid())
        q = Manager().Queue()  # use the Queue provided by Manager
        po = Pool()
        po.apply_async(writer, (q,))
        time.sleep(1)  # let the writer task fill the Queue before the reader task starts
        po.apply_async(reader, (q,))
        po.close()
        po.join()
        print("(%s) End" % os.getpid())

Sample output:

    (11095) start
    writer started (11097), parent process is (11095)
    reader started (11098), parent process is (11095)
    reader got message from Queue: i
    reader got message from Queue: t
    reader got message from Queue: c
    reader got message from Queue: a
    reader got message from Queue: s
    reader got message from Queue: t
    (11095) End
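The example above relies on time.sleep(1) and q.qsize() to make sure the data has arrived before the reader starts. A possible variation, sketched here under the assumption that None never occurs as real data, has the writer append a sentinel so the reader knows when to stop. SENTINEL and the text parameter are additions made for this sketch.

```python
from multiprocessing import Manager, Pool

SENTINEL = None  # assumed end-of-data marker; must never be a real message


def writer(q, text):
    """Put each character of text on the queue, followed by the sentinel."""
    for ch in text:
        q.put(ch)
    q.put(SENTINEL)


def reader(q):
    """Collect messages until the sentinel arrives and return them joined."""
    received = []
    while True:
        item = q.get()  # blocks until a message is available
        if item is SENTINEL:
            break
        received.append(item)
    return "".join(received)


if __name__ == "__main__":
    with Manager() as manager:
        q = manager.Queue()
        with Pool(2) as pool:
            w = pool.apply_async(writer, (q, "itcast"))
            r = pool.apply_async(reader, (q,))
            w.get()         # re-raises any exception from the writer task
            print(r.get())  # the reader's return value
```

Because the reader blocks in get() instead of counting messages up front, the two tasks can be submitted in either order.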

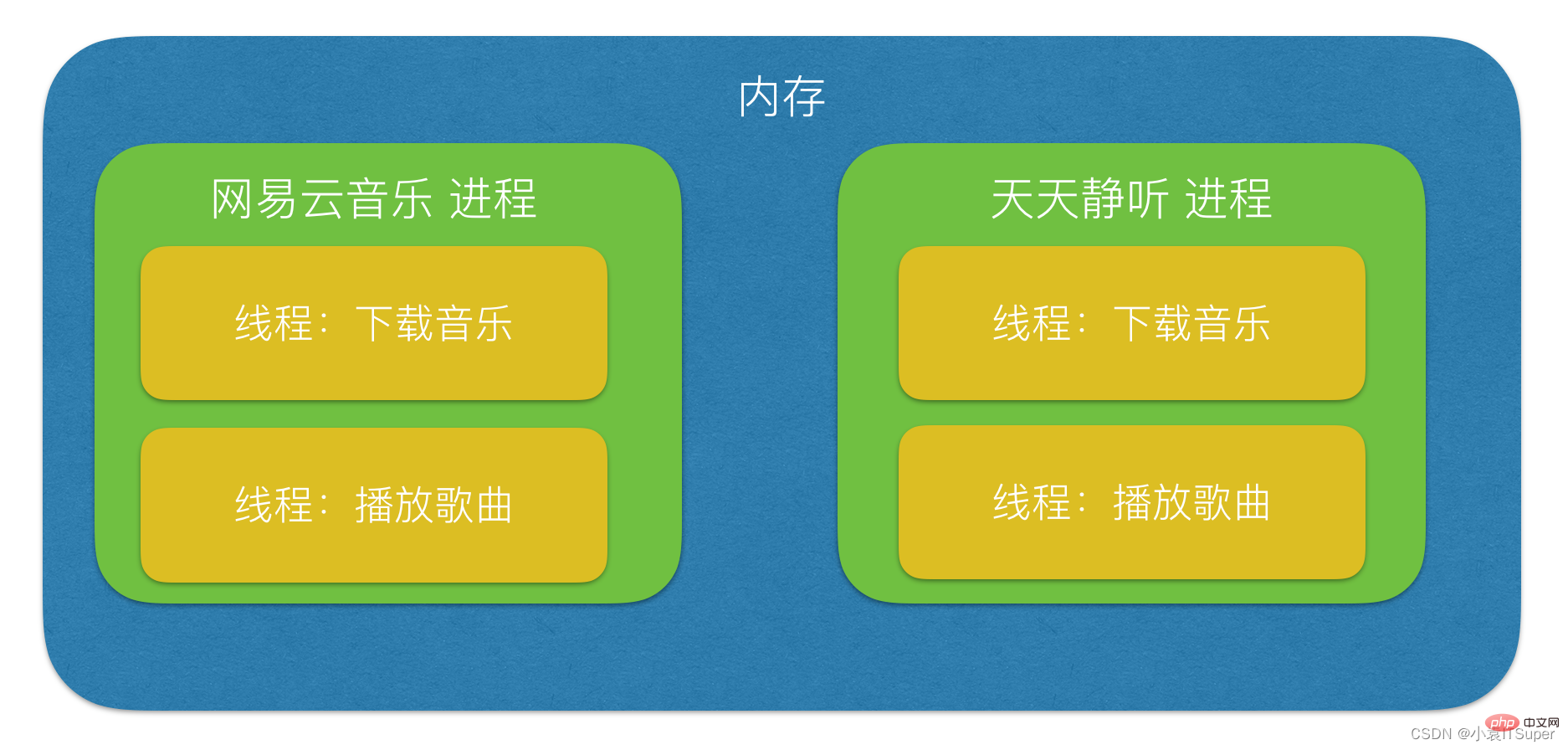

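Process: can accomplish multitasking, for example running several instances of QQ at the same time on one computer.

Thread: can accomplish multitasking, for example several chat windows inside one QQ instance.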

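Differences in definition

A process is an independent unit of resource allocation and scheduling in the system.

A thread is an entity within a process and the basic unit of CPU scheduling and dispatch. It is a unit smaller than a process that can run independently. A thread owns almost no system resources of its own, only the few that are indispensable while running (such as a program counter, a set of registers and a stack), but it shares all the resources owned by the process with the other threads of the same process.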
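The difference in resource sharing can be observed directly. In this sketch, which uses a hypothetical module-level list named shared, a thread appends to the parent's list, while a child process only changes its own copy.

```python
import multiprocessing
import threading

shared = []  # module-level state owned by the current process


def append_marker(label):
    shared.append(label)


def run_in_thread():
    """Run append_marker in a thread of the current process."""
    t = threading.Thread(target=append_marker, args=("thread",))
    t.start()
    t.join()


def run_in_process():
    """Run append_marker in a separate child process."""
    p = multiprocessing.Process(target=append_marker, args=("process",))
    p.start()
    p.join()


if __name__ == "__main__":
    run_in_thread()
    print("after thread: ", shared)   # the thread's append is visible here

    run_in_process()
    print("after process:", shared)   # the child's append is not visible here
```

This is why processes need an explicit channel such as a Queue to exchange data, whereas threads can read and write the same objects.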


The above is the detailed content of Summary of Python multi-process knowledge points. For more information, please follow other related articles on the PHP Chinese website!