This article will sort out and share some high-frequency Redis interview questions with you, and take you through the core knowledge points of Redis, involving data structure, memory model, IO model, persistent RDB, etc. I hope it will be helpful to you!

Many people only know that it is a K/V NoSQl in-memory database, single thread... This is because they do not fully understand Redis and cannot continue to ask more questions.

This question is a basic understanding. We can implement it from the underlying data structures of different data types in Redis, completely based on memory, IO multiplexing network model, thread model, progressive rehash...

We can first talk about how fast it is. According to official data, Redis's QPS can reach about 100,000 (requests per second). Those who are interested can refer to the official benchmark program "How fast is Redis?" 》, address: redis.io/topics/benc…

The horizontal axis is the number of connections, and the vertical axis is QPS.

This picture reflects an order of magnitude. Through quantification, it makes the interviewer feel that you have read the official documents and are very rigorous.

Redis is a memory-based database. Compared with disk databases, it completely beats the speed of disks.

Both read and write operations are completed in memory. Let's compare the differences between memory operations and disk operations respectively.

Disk call

Memory operation

Memory is directly controlled by the CPU, also It is the memory controller integrated inside the CPU, so the memory is directly connected to the CPU and enjoys the optimal bandwidth for communication with the CPU.

Finally, a picture is used to quantify the various delay times of the system (part of the data is quoted from Brendan Gregg)

When I was learning MySQL, I knew that the B Tree data structure was used in order to improve the retrieval speed, so the fast speed of Redis should also be related to the data structure.

Redis has a total of 5 data types, String, List, Hash, Set, SortedSet.

Different data types are supported by one or more data structures at the bottom, in order to pursue faster speed.

Brother Ma’s message: We can explain the advantages of the underlying data structure of each data type separately. Many people only know the data type, and telling the underlying data structure can make people’s eyes shine.

SDS in len save this The length of the string, O(1) time complexity to query the string length information.

Space pre-allocation: After SDS is modified, the program will not only allocate the necessary space required for SDS, but also allocate additional unused space.

Lazy space release: When shortening the SDS, the program will not reclaim the excess memory space, but use the free field to record the number of bytes and not release them later. If an append operation is required, the unused space in free is used directly, reducing memory allocation.

Compressed list is one of the underlying implementations of the three data types: List, hash, and sorted Set.

When a list has only a small amount of data, and each list item is either a small integer value or a relatively short string, then Redis will use a compressed list as the underlying implementation of the list key.

This way the memory is compact and saves memory.

Subsequent versions have transformed the list data structure, using quicklist instead of ziplist and linkedlist.

Quicklist is a mixture of ziplist and linkedlist. It divides linkedlist into segments. Each segment uses ziplist for compact storage, and multiple ziplists are connected in series using bidirectional pointers.

The sorting function of sorted set type is implemented through the "skip list" data structure.

The skip list (skiplist) is an ordered data structure that maintains multiple pointers to other nodes in each node to achieve the purpose of quickly accessing nodes.

The skip list adds multi-level indexes on the basis of the linked list, and realizes rapid positioning of data through several jumps in the index position, as shown in the following figure:

When a set only contains integer value elements, and the number of elements in this set is not large, Redis will use an integer set as the underlying implementation of the set key to save memory.

Code Brother’s message: We need to note that the single-thread of Redis refers to the network IO of Redis (network IO after version 6.x Using multi-threading) and key-value pair instruction reading and writing are executed by one thread. For Redis persistence, cluster data synchronization, asynchronous deletion, etc., they are all executed by other threads.

Don’t say that Redis has only one thread.

Single thread refers to the execution of Redis key-value pair read and write instructions in a single thread.

Let me talk about the official answer first, which makes people feel rigorous enough, instead of just reciting some blogs based on what others say.

Official answer: Because Redis is a memory-based operation, the CPU is not the bottleneck of Redis. The bottleneck of Redis is most likely to be the size of the machine memory or the network bandwidth. Since single-threading is easy to implement and the CPU will not become a bottleneck, it is logical to adopt a single-threaded solution. Original address: redis.io/topics/faq.

Why not use multi-threaded execution to fully utilize the CPU?Before running each task, the CPU needs to know where the task is loaded and started running. That is, the system needs help setting up the CPU registers and program counter beforehand, which is called the CPU context.

When switching contexts, we need to complete a series of work, which is a very resource-consuming operation.

Introducing multi-threaded development requires the use of synchronization primitives to protect concurrent reading and writing of shared resources, increasing code complexity and debugging difficulty.What are the benefits of single thread?

typedef struct redisObject{

//类型

unsigned type:4;

//编码

unsigned encoding:4;

//指向底层数据结构的指针

void *ptr;

//...

}robj;chained hashing: That is, the elements in the same bucket are saved using a linked list. However, when the linked list is too long, the search performance may deteriorate. Therefore, in order to pursue speed, Redis uses two global hash tables. Used for rehash operations to increase the number of existing hash buckets and reduce hash conflicts.

Start by default using "hash table 1" to save key-value pair data, and "hash table 2" has no allocated space at this time. When more and more data triggers the rehash operation, the following operations are performed:It is worth noting that the process of remapping the data from hash table 1 to hash table 2 is not a one-time process. This will cause Redis to block and be unable to provide services.

Instead, progressive rehash is used. Each time a client request is processed, it starts from the first index in "hash table 1" and rehash this position. All data is copied to "hash table 2", and the rehash is dispersed into multiple requests to avoid time-consuming blocking.

Redis’ data persistence uses the “RDB data snapshot” method to achieve rapid recovery from downtime. However, executing full data snapshots too frequently has two serious performance overheads:

So Redis also designed the AOF post-write log to record the instructions for modifying the memory.

Interviewer: What is RDB memory snapshot?

When Redis executes the "write" command, the memory data will continue to change. The so-called memory snapshot refers to the status data of the data in Redis memory at a certain moment.

It’s like time is frozen at a certain moment. When we take pictures, we can completely record the moment of a certain moment through photos.

Redis is similar to this, which is to capture the data at a certain moment in the form of a file and write it to the disk. This snapshot file is called RDB file. RDB is the abbreviation of Redis DataBase.

#When doing data recovery, directly read the RDB file into the memory to complete the recovery.

Interviewer: During the generation of RDB, can Redis handle write requests at the same time?

Yes, Redis uses the operating system's multi-process copy-on-write technology COW (Copy On Write) to achieve snapshot persistence and ensure data consistency.

Redis will call the glibc function during persistence fork to generate a child process. Snapshot persistence is completely handled by the child process, and the parent process continues to process client requests.

When the main thread executes the write command to modify the data, the data will be copied. bgsave The child process reads the copy data and writes it to the RDB file.

This not only ensures the integrity of the snapshot, but also allows the main thread to modify the data at the same time, avoiding the impact on normal business.

Interviewer: So what is AOF?

The AOF log records all the modified instruction sequences since the creation of the Redis instance. Then it can be restored by executing all instructions sequentially on an empty Redis instance, that is, "replaying". The state of the memory data structure of the current instance of Redis.

AOF configuration items provided by RedisappendfsyncThe writeback strategy directly determines the efficiency and security of the AOF persistence function.

aof_buf buffer will be written to the AOF file immediately after the write command is executed. There is no best-of-both-worlds strategy, we need to make a trade-off between performance and reliability.

Interviewer: Since RDB has two performance problems, why not use AOF.

AOF pre-write log records each "write" command operation. It will not cause performance loss like RDB full snapshot, but the execution speed is not as fast as RDB. At the same time, too large log files will also cause performance problems.

So, Redis has designed a killer "AOF rewriting mechanism". Redis provides the bgrewriteaof instruction to slim down the AOF log.

The principle is to open a sub-process to traverse the memory and convert it into a series of Redis operation instructions, which are serialized into a new AOF log file. After the serialization is completed, the incremental AOF log that occurred during the operation is appended to the new AOF log file. After the appending is completed, the old AOF log file is immediately replaced, and the slimming work is completed.

#Interviewer: How to achieve as little data loss as possible while taking into account performance?

When restarting Redis, we rarely use rdb to restore the memory state because a large amount of data will be lost. We usually use AOF log replay, but the performance of AOF log replay is much slower than RDB, so when the Redis instance is large, it takes a long time to start.

In order to solve this problem, Redis 4.0 brings a new persistence option-Hybrid persistence. Store the contents of the rdb file together with the incremental AOF log file. The AOF log here is no longer the full log, but the incremental AOF log that occurred during the period from the beginning of persistence to the end of persistence. Usually this part of the AOF log is very small.

SoWhen Redis restarts, you can load the rdb content first, and then replay the incremental AOF log, which can completely replace the previous AOF full file replay, and the restart efficiency is greatly improved.

Redis master-slave architecture data synchronizationRedis provides a master-slave mode, which copies a redundant copy of data to other Redis servers through master-slave replication.Interviewer: How to ensure data consistency between master and slave?In order to ensure the consistency of the replica data, the master-slave architecture adopts a read-write separation method.

Interviewer: Does master-slave replication have other functions?

Interviewer: How is master-slave replication implemented?Synchronization is divided into three situations:

Interviewer: How to achieve the first synchronization?

The first replication process of the master-slave database can be roughly divided into three phases: the connection establishment phase (ie, the preparation phase), the phase of synchronizing data from the master database to the slave database, and the phase of sending new data during synchronization. Write commands to the slave library stage;

command to generate an RDB file and sends the file to the slave library. At the same time, the main library opens up for each slave A replication buffer buffer records all write commands received since the RDB file was generated. Save the RDB from the library, clear the database, and then load the RDB data into memory.

Interviewer: What should I do if the network between the master and slave databases is disconnected? Do I need to copy the full volume again after disconnecting?Before Redis 2.8, if the master-slave library experienced a network interruption during command propagation, the slave library would perform a full copy with the master library again, which was very expensive. Starting from Redis 2.8, after the network is disconnected, the master-slave library will use incremental replication to continue synchronization. Incremental replication:

Used for replication after network interruption and other situations. Only the write commands executed by the master node during the interruption are sent to the slave node, which is more efficient than full replication.

The secret of disconnecting and reconnecting incremental replication is therepl_backlog_buffer buffer. No matter when, the master will record the write instruction operation in repl_backlog_buffer, because the memory Limited, repl_backlog_buffer is a fixed-length circular array, if the array content is full, it will overwrite the previous content from the beginning.

master_repl_offset to record the position offset written by itself, and slave uses slave_repl_offset to record the offset that has been read.

runID, slave_repl_offsetSend to master.

master_repl_offset and slave_repl_offset to the slave library.

The incremental copy execution process is as follows:

Interviewer: After completing full synchronization, how to synchronize data during normal operation?

When the master-slave library completes full replication, a network connection will be maintained between them. The master library will use this connection to synchronize subsequent command operations received successively to the slave library. This process Also known as command propagation based on long connections, the purpose of using long connections is to avoid the overhead caused by frequent connection establishment.

Interviewer: Sure, I know so much, do you know the Sentinel Cluster Principle?

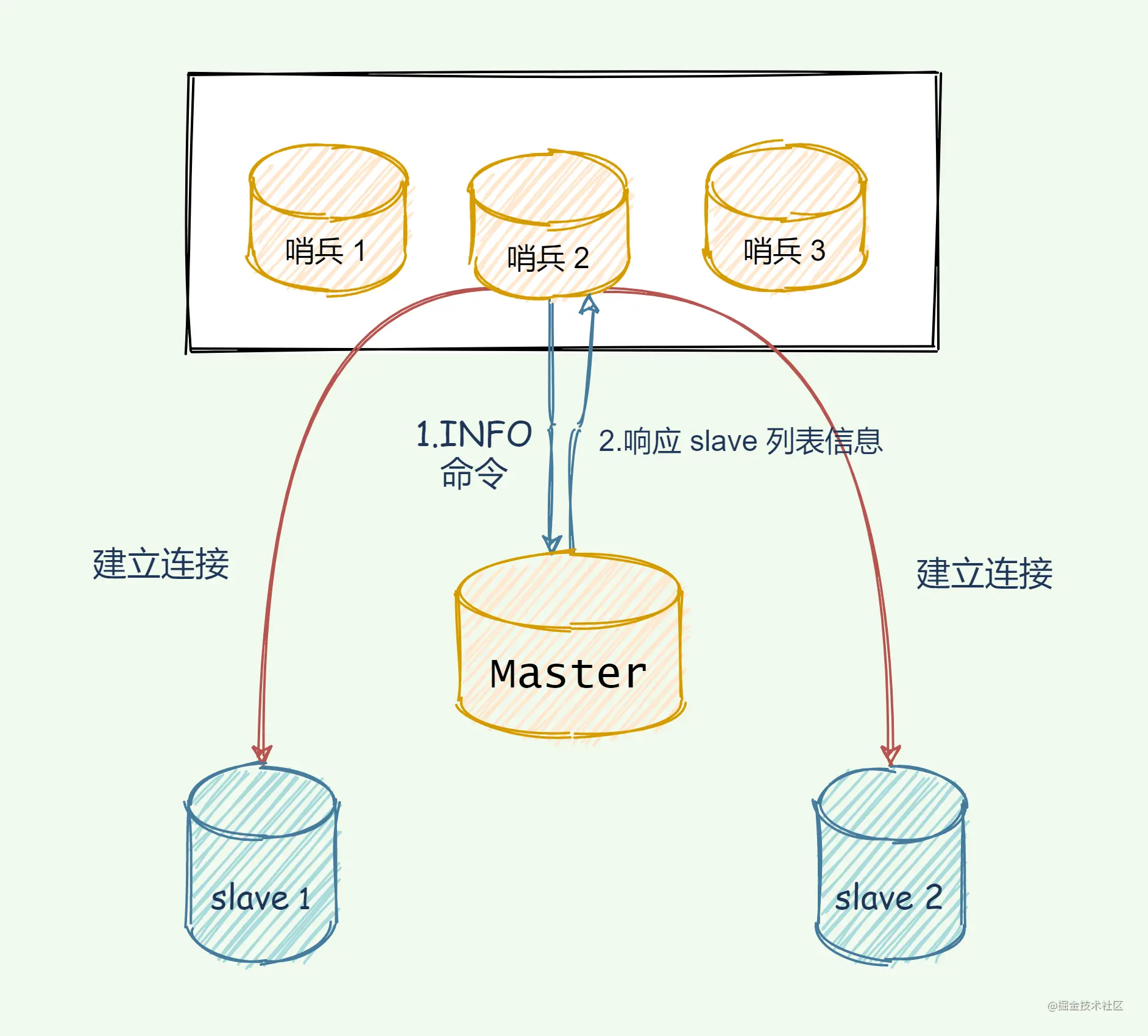

Sentinel is an operating mode of Redis. It focuses on monitoring the running status of Redis instances (master nodes, slave nodes), and can pass a series of actions when the master node fails. The mechanism realizes master selection and master-slave switching, realizes failover, and ensures the availability of the entire Redis system.

His architecture diagram is as follows:

#Redis Sentinel has the following capabilities:

Interviewer: How do the sentinels know each other?

The sentinel establishes communication with the master and uses the publish/subscribe mechanism provided by the master to publish its own information, such as height and weight, whether single, IP, port...

master has a## Dedicated channel of #__sentinel__:hello, used for publishing and subscribing messages between sentinels. This is like __sentinel__:hello WeChat group. Sentinels use the WeChat group established by master to publish their own messages, and at the same time pay attention to the messages released by other sentinels.

Interviewer: Although the sentinels have established a connection with each other, they still need to establish a connection with the slave. Otherwise, they cannot be monitored. How do you know the slave and monitor them?The key is to use the master to achieve it. The sentinel sends the

INFO command to the master. The master naturally knows all the slaves under his sect. So after the master receives the command, it tells the sentinel the slave list.

Interviewer: In addition to sentries, are there any other high-availability methods?There is a Cluster cluster to achieve high availability. The Redis cluster monitored by the Sentinel cluster has a master-slave architecture and cannot be easily expanded.

Using Redis Cluster cluster mainly solves various slow problems caused by large data storage, and also facilitates horizontal expansion.

When facing millions or tens of millions of users, horizontally scalable Redis slicing clusters will be a very good choice.

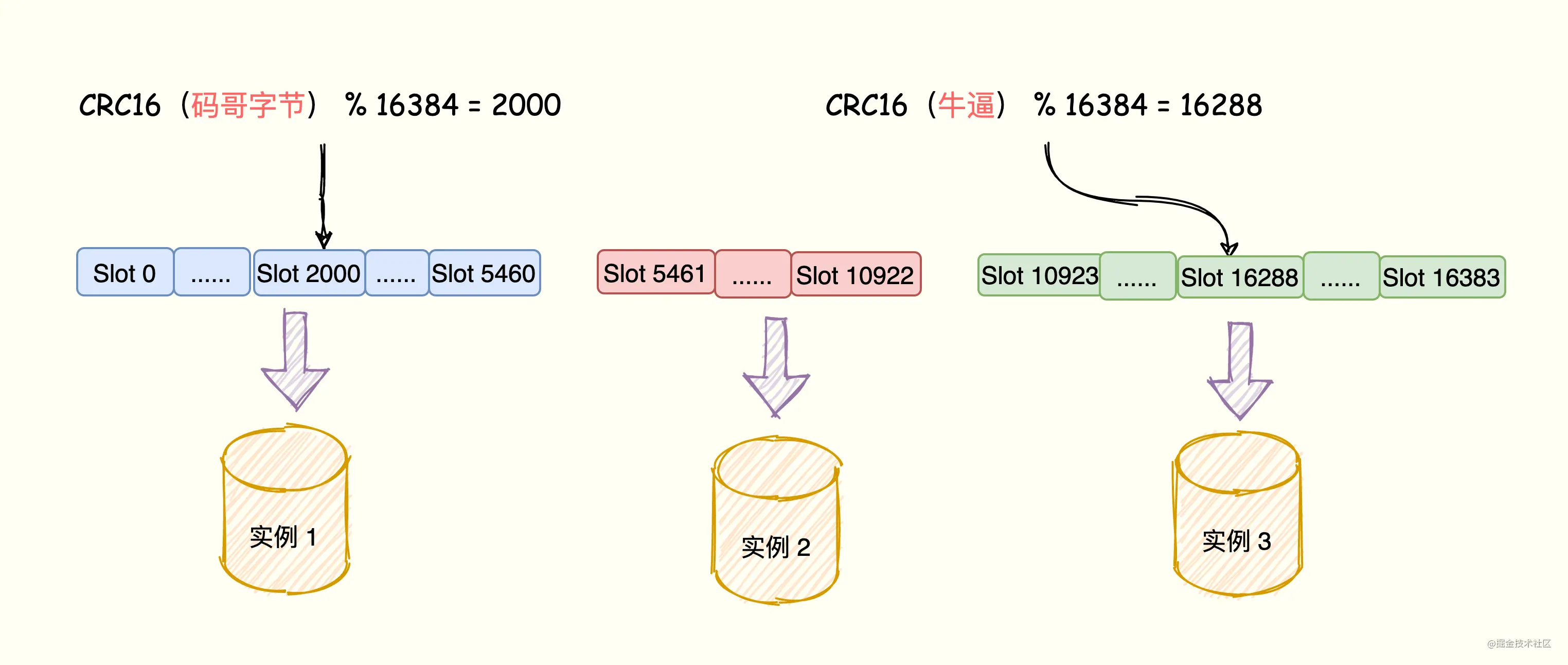

Interviewer: What is a Cluster?Redis cluster is a distributed database solution. The cluster manages data through sharding (a practice of "divide and conquer") and provides replication and failover functions. Divide the data into 16384 slots, and each node is responsible for a part of the slots. Slot information is stored in each node. It is decentralized. As shown in the figure, the cluster consists of three Redis nodes. Each node is responsible for a part of the data of the entire cluster. The amount of data that each node is responsible for may be different.

Gossip protocol, and finally each node saves Depends on the allocation of slots to other nodes.

Interviewer: How are hash slots mapped to Redis instances?

Interviewer: How about Cluster Implement failover?

Redis cluster nodes use the Gossip protocol to broadcast their own status and changes in their knowledge of the entire cluster. For example, if a node discovers that a certain node is lost (PFail), it will broadcast this information to the entire cluster, and other nodes can also receive this lost connection information.

If a node receives that the number of disconnections from a node (PFail Count) has reached the majority of the cluster, it can mark the node as determined to be offline (Fail) and then broadcast it to the entire cluster. Force other nodes to also accept the fact that the node has gone offline, and immediately perform a master-slave switch on the lost node.

Interviewer: How does the client determine which instance the accessed data is distributed on?

The Redis instance will send its hash slot information to other instances in the cluster through the Gossip protocol, realizing the diffusion of hash slot allocation information.

In this way, each instance in the cluster has mapping relationship information between all hash slots and instances.

When the client connects to any instance, the instance responds to the client with the mapping relationship between the hash slot and the instance, and the client caches the hash slot and instance mapping information locally.

When the client makes a request, the hash slot corresponding to the key will be calculated, and then the instance where the data is located will be located through the locally cached hash slot instance mapping information, and the request will be sent to the corresponding instance.

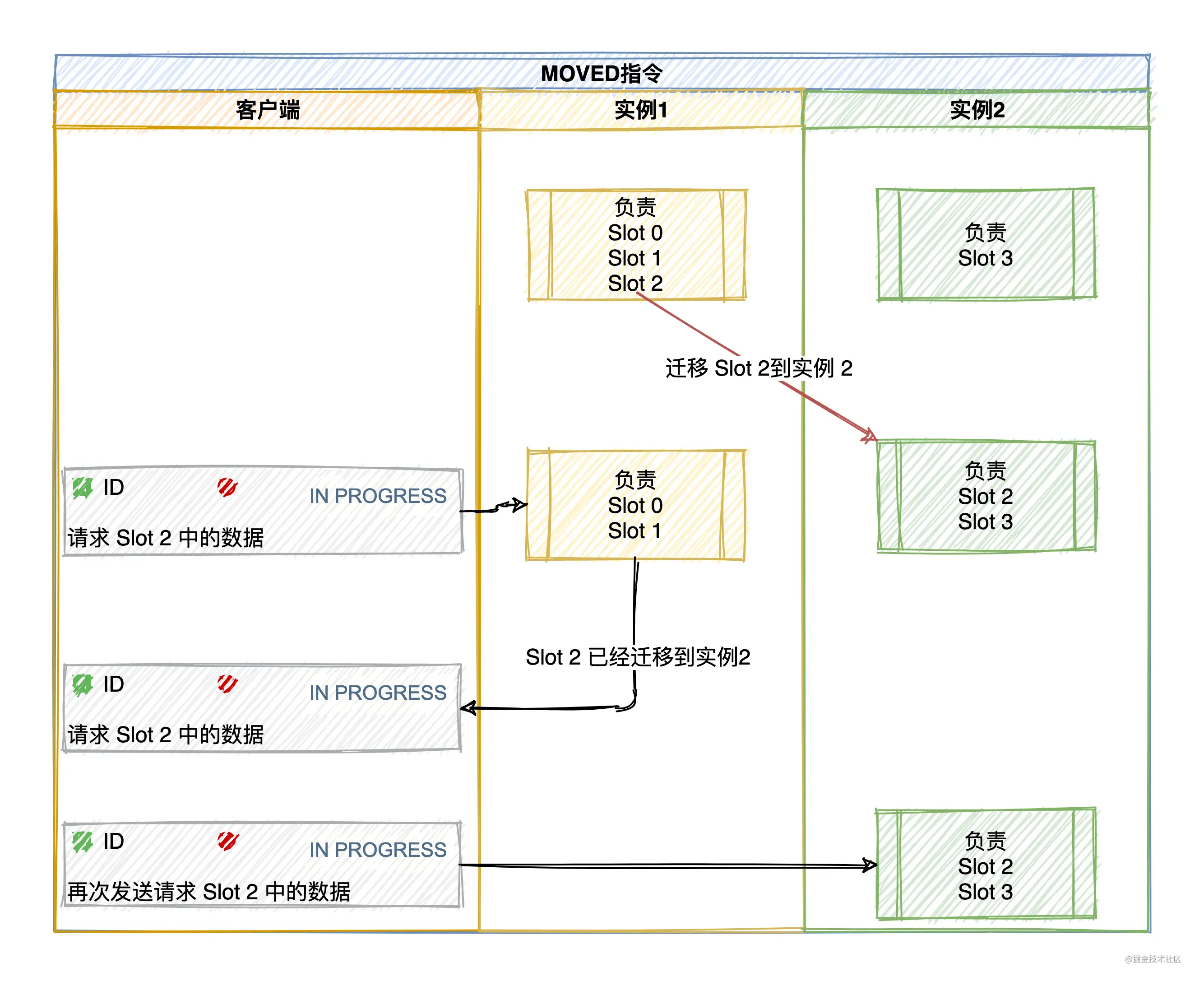

#Interviewer: What is the Redis redirection mechanism?

The mapping relationship between hash slots and instances has changed due to new instances or load balancing redistribution. The client sends the request to the instance, and this instance does not have corresponding data. , the Redis instance will tell the client to send the request to other instances.

Redis tells the client through MOVED errors and ASK errors.

MOVED Error (load balancing, data has been migrated to other instances): When the client sends a key-value pair operation request to an instance, When the slot in which the key is located is not owned by itself, the instance will return a MOVED error and redirect to the node that is responsible for the slot.

At the same time, the client will also update the local cache to correctly update the corresponding relationship between the slot and the Redis instance .

If there is a lot of data in a certain slot, part of it will be migrated to the new instance, and part of it will not be migrated.

If the requested key is found on the current node, execute the command directly, otherwise an ASK error response will be required.

When the slot migration is not completed, if the Slot where the key that needs to be accessed is being migrated from instance 1 to instance 2 (if the key is no longer in instance 1), instance 1 will return an ASK error message to the client: The hash slot where the key requested by the client is located is being migrated to instance 2. You first send an ASKING command to instance 2, and then send the operation command .

For example, the client requests to locate slot 16330 with key = "Official Account: Code Byte" on instance 172.17.18.1. If node 1 can find it, it will directly execute the command, otherwise it will respond with an ASK error message and Direct the client to the target node being migrated, 172.17.18.2.

Note: The ASK error command does not update the client cached hash slot allocation information.

This article mainly goes over the core content of Redis, involving data structure, memory model, IO model, persistent RDB and AOF, master-slave replication principle, sentinel principle, cluster principle.

Original address: https://juejin.cn/post/6976257378094481444

Author: Code Brother Byte

More programming related knowledge, Please visit: programming video! !

The above is the detailed content of Sharing of high-frequency Redis interview questions will help you master core knowledge points!. For more information, please follow other related articles on the PHP Chinese website!

Commonly used database software

Commonly used database software

What are the in-memory databases?

What are the in-memory databases?

Which one has faster reading speed, mongodb or redis?

Which one has faster reading speed, mongodb or redis?

How to use redis as a cache server

How to use redis as a cache server

How redis solves data consistency

How redis solves data consistency

How do mysql and redis ensure double-write consistency?

How do mysql and redis ensure double-write consistency?

What data does redis cache generally store?

What data does redis cache generally store?

What are the 8 data types of redis

What are the 8 data types of redis