What is a crawler?

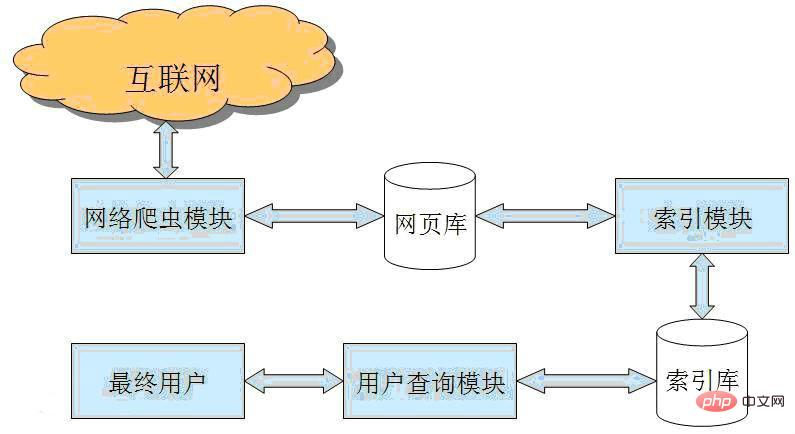

A web crawler is a program or script that automatically retrieves information from the World Wide Web according to a set of rules. Crawlers are widely used by Internet search engines and similar websites: they automatically collect the content of every page they can reach, so that these sites can obtain or refresh their copy of that content and keep it searchable. Functionally, a crawler is generally divided into three parts: data collection, data processing, and data storage.
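The three functional parts can be illustrated with a minimal Python sketch that uses only the standard library. The names `fetch`, `PageParser`, `store`, `process_page`, and the SQLite table `pages` are hypothetical choices for this example, not part of any particular crawler. The sketch skips robots.txt, retries, rate limiting, and non-UTF-8 encodings, so read it as an illustration of the collection/processing/storage split rather than a production crawler.

```python
import sqlite3
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin
from urllib.request import urlopen


def fetch(url: str) -> str:
    """Data collection: download one page (no retries, no robots.txt check)."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


class PageParser(HTMLParser):
    """Data processing: extract the <title> text and every <a href> link."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.links: list[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the URL of the current page.
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def store(db: sqlite3.Connection, url: str, title: str, links: list[str]) -> None:
    """Data storage: keep one row per page in a hypothetical `pages` table."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS pages (url TEXT PRIMARY KEY, title TEXT, n_links INTEGER)"
    )
    db.execute(
        "INSERT OR REPLACE INTO pages VALUES (?, ?, ?)", (url, title.strip(), len(links))
    )
    db.commit()


def process_page(
    url: str, db: sqlite3.Connection, fetch_fn: Callable[[str], str] = fetch
) -> list[str]:
    """Run collection -> processing -> storage for one URL; return the links found."""
    html = fetch_fn(url)                         # 1. data collection
    parser = PageParser(url)                     # 2. data processing
    parser.feed(html)
    parser.close()
    store(db, url, parser.title, parser.links)   # 3. data storage
    return parser.links
```

Passing a stub as `fetch_fn` lets you try the pipeline offline, for example `process_page("https://example.com/", sqlite3.connect(":memory:"), fetch_fn=lambda _url: html_text)`, where `html_text` is any HTML string you supply.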

A traditional crawler starts from the URLs of one or more seed pages and collects the URLs found on them. While crawling, it continually extracts new URLs from the current page and adds them to a queue, stopping once a stopping condition set by the system is met. A focused crawler has a more complex workflow: it uses a web page analysis algorithm to filter out links unrelated to its topic, keeping only useful links and adding them to the queue of URLs waiting to be crawled. It then selects the next URL to crawl from the queue according to a search strategy, and repeats this process until a stopping condition is reached. In addition, every page a crawler fetches is stored by the system and then analyzed, filtered, and indexed for later querying and retrieval; for a focused crawler, the results of this analysis may also provide feedback that guides subsequent crawling.
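The difference between the two workflows can be sketched in Python under simplifying assumptions: the hypothetical `get_links` callable stands in for fetching a page and extracting its URLs, `max_pages` plays the role of the stopping condition, and `relevance` is a placeholder for whatever web page analysis algorithm scores a URL against the topic. The traditional crawler uses a plain FIFO queue (breadth-first order); the focused crawler discards links scoring below `threshold` and uses a priority queue as its search strategy, so the most relevant pending URL is always crawled next.

```python
import heapq
from collections import deque
from typing import Callable, Iterable

# Hypothetical hook: given a URL, return the URLs found on that page.
GetLinks = Callable[[str], Iterable[str]]


def traditional_crawl(seeds: list[str], get_links: GetLinks, max_pages: int = 100) -> list[str]:
    """Breadth-first crawl: FIFO queue of URLs, stop after max_pages pages."""
    queue = deque(seeds)
    seen = set(seeds)
    crawled: list[str] = []
    while queue and len(crawled) < max_pages:   # stopping condition
        url = queue.popleft()
        crawled.append(url)                     # a real crawler would fetch and store the page here
        for link in get_links(url):             # extract new URLs from the current page
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled


def focused_crawl(
    seeds: list[str],
    get_links: GetLinks,
    relevance: Callable[[str], float],
    threshold: float = 0.5,
    max_pages: int = 100,
) -> list[str]:
    """Focused crawl: drop off-topic links, then always visit the most relevant URL next."""
    frontier = [(-relevance(u), u) for u in seeds]  # heapq is a min-heap, so negate scores
    heapq.heapify(frontier)
    seen = set(seeds)
    crawled: list[str] = []
    while frontier and len(crawled) < max_pages:
        _, url = heapq.heappop(frontier)
        crawled.append(url)
        for link in get_links(url):
            if link in seen:
                continue
            seen.add(link)
            score = relevance(link)
            if score >= threshold:                  # filter out links unrelated to the topic
                heapq.heappush(frontier, (-score, link))
    return crawled
```

Swapping the FIFO queue for a priority queue plus a relevance filter is the only structural change between the two functions. Best-first ordering is just one possible search strategy, and the feedback from page analysis described above is not modeled here.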

The above is the detailed content of "What is a crawler?". For more information, please follow other related articles on the PHP Chinese website!

How long does it take to learn Python crawlers?

Oct 25, 2023 09:44 AM

The time it takes to learn Python crawling varies from person to person, depending on factors such as learning ability, learning method, available time, and prior experience. Learning to write crawlers is not only about the technology itself; it also requires good information-gathering, problem-solving, and teamwork skills. With continued study and practice, you can gradually become a skilled Python crawler developer.

Practical crawler practice: using PHP to crawl stock information

Jun 13, 2023 05:32 PM

The stock market has always attracted great attention: the daily rises, falls, and other changes in stock prices directly affect investors' decisions. To follow the latest developments in the market, you need to obtain and analyze stock information promptly. The traditional approach is to open the major financial websites manually and check stock data one page at a time, which is cumbersome and inefficient. A crawler offers an efficient, automated alternative. Next, we demonstrate how to write a simple stock crawler in PHP to obtain stock data.

Crawler Tips: How to Handle Cookies in PHP

Jun 13, 2023 02:54 PM

Handling cookies is often an essential part of crawler development. Cookies, HTTP's state-management mechanism, are typically used to record a user's login information and behavior, which makes them key to how a crawler handles user authentication and stays logged in. Handling cookies in a PHP crawler requires some specific techniques and awareness of a few pitfalls. Below, we explain in detail how to handle cookies in PHP. 1. How to get cookies in PHP

Deep mining: using Go language to build efficient crawlers

Jan 30, 2024 09:17 AM

In-depth exploration: using Go for efficient crawler development. Introduction: With the rapid development of the Internet, information has become ever easier to obtain. As tools for automatically collecting website data, crawlers have attracted increasing attention. Among the many programming languages, Go has become many developers' preferred language for crawler development thanks to its high concurrency and strong performance. This article explores efficient crawler development in Go and provides concrete code examples. 1. Advantages of Go for crawler development: High concurrency: the Go language

Start your Java crawler journey: learn practical skills to quickly crawl web data

Jan 09, 2024 01:58 PM

Practical skills: quickly learn to crawl web page data with Java. Introduction: In today's information age, we deal with large amounts of web page data every day, and much of it may be exactly what we need. To obtain this data quickly, crawling has become a necessary skill. This article shares a quick way to learn how to crawl web page data with a Java crawler, with concrete code examples to help readers master this practical skill. 1. Preparation: Before writing a crawler, we need to prepare the following

Efficient Java crawler practice: sharing of web data crawling techniques

Jan 09, 2024 12:29 PM

Java crawler practice: how to crawl web page data efficiently. Introduction: With the rapid development of the Internet, a large amount of valuable data is stored in web pages. Obtaining it by hand means visiting each page and extracting the information one item at a time, which is tedious and time-consuming. To solve this problem, people have developed various crawler tools, and Java crawlers are among the most commonly used. This article shows readers how to write an efficient web crawler in Java and demonstrates it with concrete code examples. 1. Crawler basics

Efficiently crawl web page data: combined use of PHP and Selenium

Jun 15, 2023 08:36 PM

With the rapid development of Internet technology, web applications play an ever larger role in our daily work and life. In web application development, crawling web page data is an important task. Although many web scraping tools are available, they are not always efficient. To crawl web page data more efficiently, we can combine PHP with Selenium. First, we need to understand what PHP and Selenium are. PHP is a powerful

Analysis and solutions to common problems of PHP crawlers

Aug 06, 2023 12:57 PM

Analysis of common PHP crawler problems and their solutions. Introduction: With the rapid development of the Internet, acquiring network data has become important in many fields. PHP, a widely used scripting language, is well suited to data acquisition, and crawling is one of its commonly used techniques. However, developing and using PHP crawlers often raises problems. This article analyzes these problems, proposes solutions, and provides corresponding code examples. 1. Problem: the data on the target web page cannot be parsed correctly.