There was a need some time ago: transfer some data from the production environment to the test server for testing. Since I only need to import the data of a specific account, I thought about writing a script to assemble the data into SQL statements and export them into SQL files, then transfer them to the test server and import them into MySQL. The imagination is beautiful, but the process is painful. Let’s summarize the following points.

1The idea of the script is to query specific account data and assemble and splice it into sql text data. It should be noted here that the query value needs to be filtered, because some fields contain some illegal characters, such as: ' (single quotation mark), " (double quotation mark), ` (the second one from the upper left corner of the keyboard). These symbols are spliced together. When making a sql statement, the value will change because the other end of the closure cannot be found, causing problems in splicing the sql statement. Therefore, these characters need to be replaced. I use the str_replace() function to set these symbols to empty.

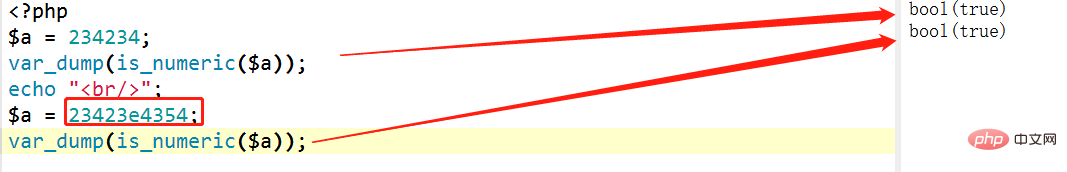

2, I use the is_numeri function to determine whether the value is a numeric type structure. If so, add "" (double quotes), but there is a problem with is_numeri. Some values have the letter e. , it also returns true,

This is actually a problem, because the value cannot be inserted into MySQL without adding "".

Later, I added the is_float() and is_string() functions at the same time to determine whether the value needs to be added in double quotes.

3. Since the amount of exported data is relatively large, I divided it into Four PHP processes are used to run, and one process runs 10,000 user data, which can save a lot of time. However, it should be noted that the stored SQL text also needs to be distinguished, because multiple processes writing a text file at the same time may Data loss occurred (I found out later and spent a lot of time to restore the data).

4, use the tar -xcvf command to compress and package the sql file, a 7G file. It can be compressed to more than 700 M, and the compression effect is still good.PHP Video Tutorial

1, due to the relatively large amount of data Large, I also divided several processes to run the data. I used the MySQL source command to import these large sql files

mysql>source D:/www/sql/data.sql;

The sql file contains the use database, so there is no use database here.

Use the source command to import multiple files. You can create a new sou.sql file, which stores the following commands.

For example:

source c:/1.sql; source c:/2.sql;

In this way, you can import multiple files in one source command. sql file.

There were also some problems during the period:

Occurrence:

## After checking, it’s because the amount of data in a single table is large. Generally speaking, mysql will restrict SQL with a large amount of data in a single table.

Solution:Change the max_allowed_packet packet size

Option 1, temporary modification: enter the command set global max_allowed_packet = size; (Note that the size here can only be filled in bytes. After restarting the mysql service, the configuration will become invalid! )

Option 2, modify the my.ini file and add max_allowed_packet=size in the [mysqld] section

2. Since the source command cannot record the location record in this way, error message, so I later switched to the linux shell method to importas follows: sql.sh

#!/bin/bash p="d:backup/sql.sql" //绝对路径 User='abc' Password='123' mysql -u $User -p$Password -e "source $f" 2>err1.txt;//输出错误到文件中,方便后面查询 echo 'OK!'

By importing in this way, you can find some error prompts to facilitate troubleshooting later.

mysql video tutorialThe above is the detailed content of Summary of PHP+MySQL implementing massive data import and export. For more information, please follow other related articles on the PHP Chinese website!