Detailed introduction of mysql function for geographical location geo processing (with code)

This article gives a detailed introduction to a MySQL function for geographic location (geo) processing, with code. It may be a useful reference for anyone facing the same problem.

More and more services are built on LBS (location-based services): nearby people, takeout delivery locations, nearby businesses, and so on. This article discusses solutions for the "what is closest to me" business scenario.

Currently known solutions are:

- MySQL custom function calculation

- MySQL geo index

- MongoDB geo index

- PostgreSQL PostGIS index

- Redis geo

- Elasticsearch

This article tests the performance of the MySQL custom function approach.

Preparation Work

Create data table

CREATE TABLE `driver` (
    `id` int(11) unsigned NOT NULL AUTO_INCREMENT,
    `lng` float DEFAULT NULL,
    `lat` float DEFAULT NULL,
    PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

Create test data

Understand basic geographical knowledge before creating data:

- The value range of global longitude and latitude is: Latitude -90~90, longitude -180~180

- The range of longitude and latitude in China is approximately: Latitude 3.86~53.55, longitude 73.66~135.05

- The latitude of the administrative center of Beijing is 39.92, and the longitude is 116.46

- The farther north, the greater the latitude value, and the farther east, the greater the longitude value

- Degrees and minutes conversion: to convert a value in degrees and minutes into decimal degrees, use the formula: degrees = degrees + minutes / 60

- Degrees, minutes and seconds conversion: to convert a value in degrees, minutes and seconds into decimal degrees, use the formula: degrees = degrees + minutes / 60 + seconds / 60 / 60
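The two conversion formulas in the list above can be sketched in Python as follows. The function name `dms_to_degrees` and the sample values are illustrative assumptions, not part of the original article; the sign handling for southern and western coordinates is also an addition.

```python
def dms_to_degrees(degrees, minutes=0.0, seconds=0.0):
    """Convert degrees/minutes/seconds to decimal degrees.

    A negative `degrees` value (south latitude or west longitude) keeps
    its sign; minutes and seconds are expected to be non-negative.
    """
    sign = -1.0 if degrees < 0 else 1.0
    return sign * (abs(degrees) + minutes / 60 + seconds / 60 / 60)


# 39 degrees 55 minutes 12 seconds is close to the Beijing latitude quoted above
print(round(dms_to_degrees(39, 55, 12), 5))
# Degrees and minutes only
print(round(dms_to_degrees(116, 27, 36), 5))
```

Leaving out `seconds` gives the degrees-and-minutes case, so one function covers both formulas.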

At equal latitude:

- Every 0.00001 degrees of longitude corresponds to a distance of about 1 meter (the exact value shrinks as latitude increases)

At equal longitude:

- Every 0.00001 degrees of latitude corresponds to a distance of about 1.1 meters
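As a rough check of these two rules of thumb, the sketch below estimates the ground distance covered by a step of 0.00001 degrees on a spherical earth with a radius of 6371000 meters. The function name `meters_per_step` is a hypothetical helper introduced here for illustration.

```python
import math

EARTH_RADIUS_M = 6371000  # spherical-earth assumption


def meters_per_step(lat_deg, step_deg=0.00001):
    """Return (north_south_m, east_west_m) covered by `step_deg` at latitude `lat_deg`."""
    # Length of one degree along a meridian on a sphere
    per_degree = 2 * math.pi * EARTH_RADIUS_M / 360
    north_south = per_degree * step_deg
    # Circles of latitude shrink by cos(latitude) towards the poles
    east_west = per_degree * math.cos(math.radians(lat_deg)) * step_deg
    return north_south, east_west


for lat in (3.86, 26.0, 39.92, 53.55):
    ns, ew = meters_per_step(lat)
    print('lat %6.2f: latitude step %.3f m, longitude step %.3f m' % (lat, ns, ew))
```

Under this assumption the latitude step stays near 1.1 meters everywhere, while the longitude step ranges from about 1.1 meters near the equator to noticeably less than 1 meter in northern China.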

mysql function calculation

DELIMITER //
CREATE DEFINER=`root`@`localhost` FUNCTION `getDistance`(
    `lng1` float(10,7),
    `lat1` float(10,7),
    `lng2` float(10,7),
    `lat2` float(10,7)
) RETURNS double
    DETERMINISTIC
    COMMENT 'Distance in meters between two coordinate points'
BEGIN
    DECLARE a double;
    DECLARE radius int;
    -- Assume the earth is a perfect sphere with a radius of 6371000 meters
    SET radius = 6371000;
    -- Haversine term: sin^2(dlat/2) + cos(lat1) * cos(lat2) * sin^2(dlng/2)
    SET a = POW(SIN(RADIANS(lat1 - lat2) / 2), 2)
          + COS(RADIANS(lat1)) * COS(RADIANS(lat2))
          * POW(SIN(RADIANS(lng1 - lng2) / 2), 2);
    RETURN 2 * ATAN2(SQRT(a), SQRT(1 - a)) * radius;
END//
DELIMITER ;

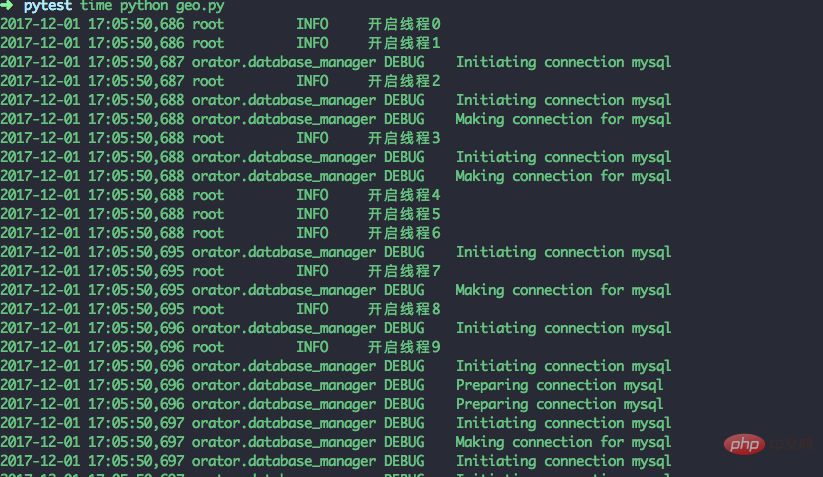
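To cross-check values returned by `getDistance`, the haversine formula can be written as a small Python reference. This is a sketch under the same spherical-earth assumption (radius 6371000 meters); the name `get_distance` and the clamping of the intermediate term are choices made here, not part of the original article.

```python
import math


def get_distance(lng1, lat1, lng2, lat2, radius=6371000):
    """Great-circle distance in meters between two (lng, lat) points given in degrees."""
    dlat = math.radians(lat1 - lat2)
    dlng = math.radians(lng1 - lng2)
    # Haversine term: sin^2(dlat/2) + cos(lat1) * cos(lat2) * sin^2(dlng/2)
    a = (math.sin(dlat / 2) ** 2
         + math.cos(math.radians(lat1)) * math.cos(math.radians(lat2))
         * math.sin(dlng / 2) ** 2)
    # Guard against rounding pushing `a` marginally above 1 for antipodal points
    a = min(1.0, a)
    return 2 * math.atan2(math.sqrt(a), math.sqrt(1 - a)) * radius


# One degree of latitude north of the Beijing coordinates quoted earlier
print(round(get_distance(116.46, 39.92, 116.46, 40.92)))
```

The parameter order (longitude first, then latitude) matches the SQL function, so the same arguments can be passed to both when comparing results.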

Python script to create the data

    # coding=utf-8
    from orator import DatabaseManager, Model
    import logging
    import random
    import threading

    """ Coordinate range of China: latitude 3.86~53.55, longitude 73.66~135.05. A difference of 0.00001 degrees is roughly 1 meter """

    # Create the logger object
    logger = logging.getLogger()
    handler = logging.StreamHandler()
    formatter = logging.Formatter(
        '%(asctime)s %(name)-12s %(levelname)-8s %(message)s')
    handler.setFormatter(formatter)
    logger.addHandler(handler)
    logger.setLevel(logging.DEBUG)

    # Connect to the database
    config = {
        'mysql': {
            'driver': 'mysql',
            'host': 'localhost',
            'database': 'dbtest',
            'user': 'root',
            'password': '',
            'prefix': ''
        }
    }
    db = DatabaseManager(config)
    Model.set_connection_resolver(db)


    class Driver(Model):
        __table__ = 'driver'
        __timestamps__ = False


    def ins_driver(thread_name, nums):
        # Insert `nums` drivers at random coordinates within China's range
        logger.info('Starting thread %s' % thread_name)
        for _ in range(nums):
            lng = '%.5f' % random.uniform(73.66, 135.05)
            lat = '%.5f' % random.uniform(3.86, 53.55)
            driver = Driver()
            driver.lng = lng
            driver.lat = lat
            driver.save()


    thread_nums = 10
    for i in range(thread_nums):
        # Each thread inserts 40,000 rows, 400,000 rows in total
        t = threading.Thread(target=ins_driver, args=(i, 40000))
        t.start()

The script above creates 10 threads, each of which inserts 40,000 rows. It took 150.18 s to run and inserted 400,000 rows in total.

Test

- Test environment

System: macOS

Memory: 16 GB

CPU: Intel Core i5

Hard disk: 500 GB solid state drive

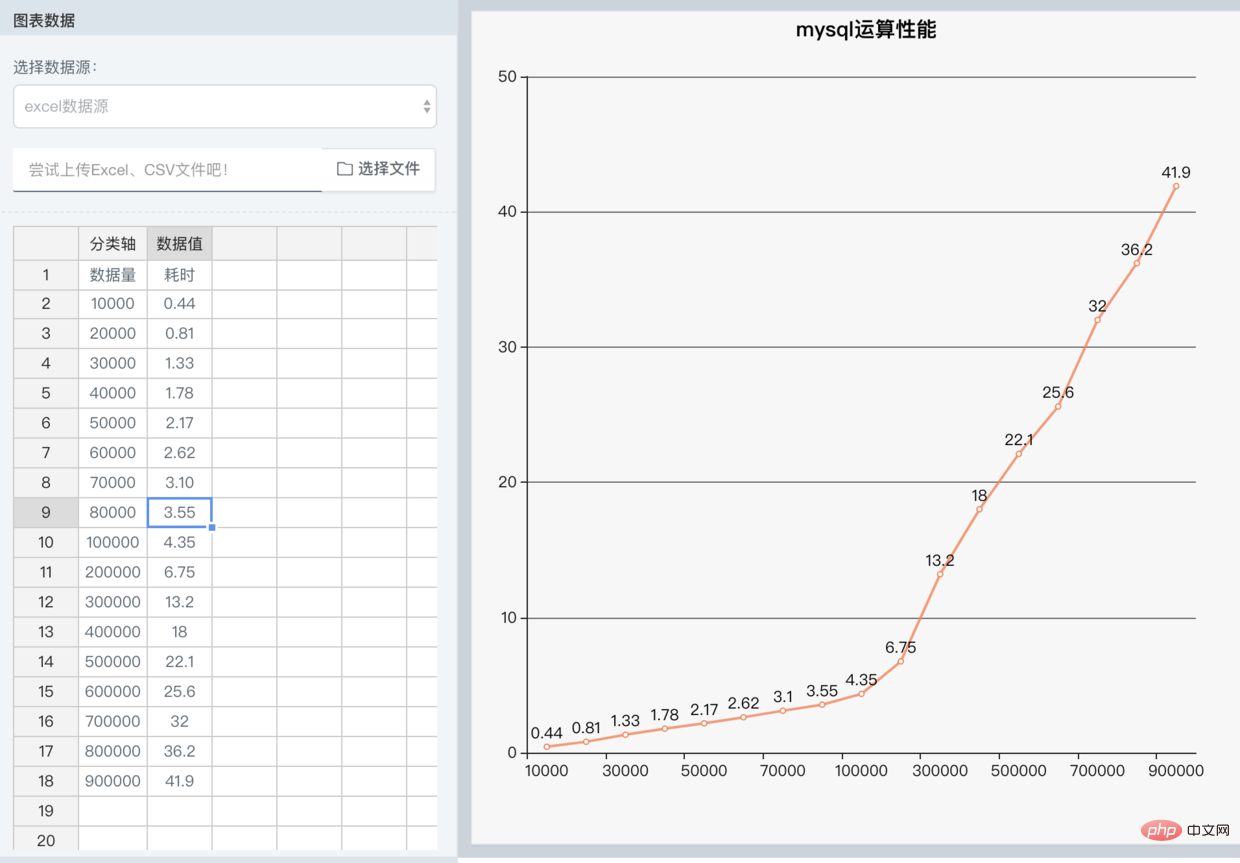

Test: find the 10 drivers closest to the coordinate point (134.38753, 18.56734)

SELECT *, `getDistance`(134.38753, 18.56734, `lng`, `lat`) AS dis FROM driver ORDER BY dis LIMIT 10;

- Time taken: 18.0 s

- EXPLAIN: full table scan
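The full table scan follows from the shape of the query: the distance has to be computed for every row before the rows can be ordered. The Python sketch below mirrors that logic in memory. The names `haversine_m` and `nearest_drivers` and the sample rows are hypothetical, used only for illustration.

```python
import heapq
import math


def haversine_m(lng1, lat1, lng2, lat2, radius=6371000):
    """Great-circle distance in meters on a spherical earth."""
    dlat = math.radians(lat1 - lat2)
    dlng = math.radians(lng1 - lng2)
    a = (math.sin(dlat / 2) ** 2
         + math.cos(math.radians(lat1)) * math.cos(math.radians(lat2))
         * math.sin(dlng / 2) ** 2)
    a = min(1.0, a)
    return 2 * math.atan2(math.sqrt(a), math.sqrt(1 - a)) * radius


def nearest_drivers(drivers, lng, lat, k=10):
    """Return the k nearest drivers as (distance_m, driver_id), nearest first.

    `drivers` is an iterable of (driver_id, lng, lat) rows. Every row is
    visited once, which is the in-memory analogue of a full table scan.
    """
    scored = ((haversine_m(lng, lat, d_lng, d_lat), d_id)
              for d_id, d_lng, d_lat in drivers)
    return heapq.nsmallest(k, scored)


# Made-up sample rows: (id, lng, lat)
sample = [(1, 134.0, 18.0), (2, 134.38753, 18.56734), (3, 100.0, 30.0)]
for dist, driver_id in nearest_drivers(sample, 134.38753, 18.56734, k=2):
    print(driver_id, round(dist))
```

The work grows linearly with the number of rows, which matches the linear increase in query time reported in the measurements.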

I tested with 10,000 to 100,000 rows at intervals of 10,000, and above 100,000 rows at intervals of 100,000, and observed how the results changed:

- With this approach, a query takes more than 1 second once the data volume reaches 30,000 rows

- Each additional 10,000 rows adds approximately 0.4 seconds to the query time
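The two observations can be combined into a rough linear estimate. The sketch below assumes the cost is strictly proportional to the row count (0.4 seconds per 10,000 rows, with no fixed overhead), which is a simplification rather than something the measurements establish; the function name is hypothetical.

```python
SECONDS_PER_10K_ROWS = 0.4  # figure reported in the measurements above


def estimated_query_seconds(rows):
    """Linear estimate of the query time for a table with `rows` rows."""
    return rows / 10000 * SECONDS_PER_10K_ROWS


for rows in (30000, 100000, 400000):
    print('%7d rows: about %.1f s' % (rows, estimated_query_seconds(rows)))
```

For 30,000 rows the estimate is just over 1 second, in line with the first observation. For the full 400,000 rows it is of the same order as the 18.0 s measured in the test.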

The above is the detailed content of Detailed introduction of mysql function for geographical location geo processing (with code). For more information, please follow other related articles on the PHP Chinese website!
