When we browse the Internet every day, we often come across good-looking pictures that we want to download and save, whether to use as desktop wallpapers or as design materials. This article introduces how to implement the simplest possible web crawler in Python. Friends who need it can use it as a reference. Let's take a look together.

Preface

A web crawler (also known as a web spider or web robot, and in the FOAF community more often called a web chaser) is a program or script that automatically fetches information from the World Wide Web according to certain rules. Recently I have become very interested in Python crawlers, and I would like to share my learning path here. Suggestions are welcome, so that we can learn from each other and improve together. Without further ado, let's look at the details:

1. Development tools

The tool I use is Sublime Text 3. It is small and lean (maybe men don't like the word "small"), which I find very appealing, and I recommend it to everyone. Of course, if your computer is powerful enough, PyCharm may suit you better.

To set up a Python development environment in Sublime Text 3, I recommend reading this article:

[sublime to build a python development environment]

As the name suggests, a crawler is like a bug crawling across the vast web of the Internet, and in this way we can collect what we want.

Since we want to crawl the Internet, we need to understand URLs. A URL is formally called a "Uniform Resource Locator" and is commonly known as a "link". Its structure consists mainly of three parts:

(1) Protocol: for example, the HTTP protocol we commonly see in URLs.

(2) Domain name or IP address: a domain name such as www.baidu.com, or an IP address, i.e. the IP that the domain name resolves to.

(3) Path: directory or file, etc.
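The three parts can be pulled apart with urlparse() from the standard urllib.parse module. A minimal sketch; the image path in the example URL is made up for illustration:

```python
from urllib.parse import urlparse

# An illustrative URL; the path /img/logo.png is hypothetical
parts = urlparse("http://www.baidu.com/img/logo.png")

print(parts.scheme)  # protocol -> http
print(parts.netloc)  # domain name (or IP address) -> www.baidu.com
print(parts.path)    # path -> /img/logo.png
```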

3. Developing the simplest crawler with urllib

(1) Introduction to urllib

| Module | Description |
|---|---|
| urllib.error | Exception classes raised by urllib.request. |
| urllib.parse | Parse URLs into or assemble them from components. |
| urllib.request | Extensible library for opening URLs. |
| urllib.response | Response classes used by urllib. |
| urllib.robotparser | Load a robots.txt file and answer questions about fetchability of other URLs. |
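As an illustration of the last module, urllib.robotparser can check whether a crawler may fetch a given URL. This sketch feeds hypothetical robots.txt rules to RobotFileParser.parse() directly, so no network access is needed; a real crawler would normally call set_url() and read() on the site's actual robots.txt instead:

```python
from urllib import robotparser

# Hypothetical robots.txt content, given as a list of lines
rules = [
    "User-agent: *",
    "Disallow: /private/",
]

rp = robotparser.RobotFileParser()
rp.parse(rules)

# can_fetch(user_agent, url) answers whether the rules allow the URL
print(rp.can_fetch("*", "http://www.example.com/index.html"))      # True
print(rp.can_fetch("*", "http://www.example.com/private/a.html"))  # False
```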

(2) Develop the simplest crawler

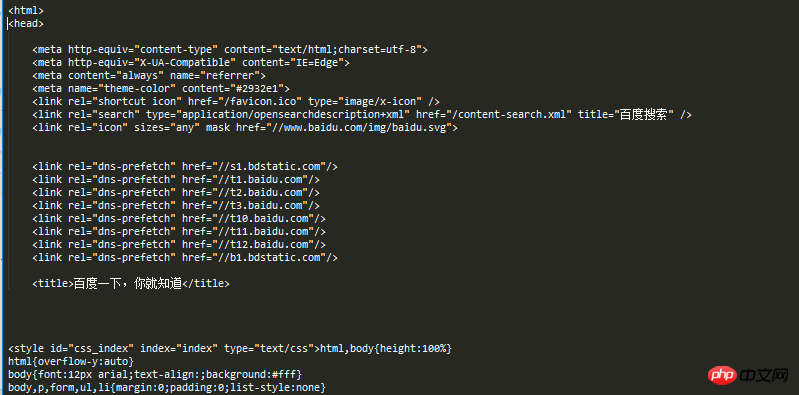

```python
from urllib import request

def visit_baidu():
    URL = "http://www.baidu.com"
    # open the URL; urlopen() returns a response object
    req = request.urlopen(URL)
    # read the response body (bytes)
    html = req.read()
    # decode the bytes into a str using UTF-8
    html = html.decode("utf-8")
    print(html)

if __name__ == '__main__':
    visit_baidu()
```
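The snippet above assumes the page is UTF-8 and sends urllib's default User-Agent. Below is a minimal sketch of a slightly more robust fetch, assuming the server may declare a charset in its Content-Type header. The helper name fetch_html, the User-Agent string and the 10-second timeout are hypothetical choices for illustration, not part of the original tutorial:

```python
from urllib import request

# Hypothetical User-Agent string; some sites refuse urllib's default one
DEFAULT_UA = "Mozilla/5.0 (compatible; simple-crawler-demo)"

def fetch_html(url, timeout=10, user_agent=DEFAULT_UA):
    """Download a page and decode it with the charset the server declares.

    Falls back to UTF-8 when the Content-Type header names no charset.
    """
    req = request.Request(url, headers={"User-Agent": user_agent})
    # the with-block closes the response once the body has been read
    with request.urlopen(req, timeout=timeout) as response:
        raw = response.read()  # bytes
        charset = response.headers.get_content_charset() or "utf-8"
    # errors="replace" keeps going if a few bytes do not match the charset
    return raw.decode(charset, errors="replace")
```

With network access, it can be called as print(fetch_html("http://www.baidu.com")[:200]). Reading the charset from the response headers avoids hardcoding UTF-8, although pages that declare their encoding only inside the HTML would still need extra handling.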

urlopen() also accepts a Request object instead of a plain URL string:

```python
from urllib import request

def visit_baidu():
    # create a Request object
    req = request.Request('http://www.baidu.com')
    # open the Request object
    response = request.urlopen(req)
    # read the response body and decode it to str
    html = response.read()
    html = html.decode('utf-8')
    print(html)

if __name__ == '__main__':
    visit_baidu()
```

(3) Error handling


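Requests made with urllib can fail with two exceptions from urllib.error: HTTPError, raised when the server answers with an error status code, and URLError, raised when the server cannot be reached at all (for example, when there is no network connection or the host does not exist). An HTTPError carries the status code in its code attribute:

```python
from urllib import request
from urllib import error

def Err():
    url = "https://segmentfault.com/zzz"
    req = request.Request(url)
    try:
        response = request.urlopen(req)
        html = response.read().decode("utf-8")
        print(html)
    except error.HTTPError as e:
        # the server responded, but with an error status code
        print(e.code)

if __name__ == '__main__':
    Err()
```

The printed error code is 404 (Not Found). You can search Baidu for details about this status code.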

For a URLError, the cause of the failure is available through its reason attribute.

The code for handling URLError is as follows:

```python
from urllib import request
from urllib import error

def Err():
    # a misspelled domain, intended to trigger a URLError
    url = "https://segmentf.com/"
    req = request.Request(url)
    try:
        response = request.urlopen(req)
        html = response.read().decode("utf-8")
        print(html)
    except error.URLError as e:
        # reason describes why the server could not be reached
        print(e.reason)

if __name__ == '__main__':
    Err()
```

The running result is as shown in the figure:

To handle errors well, it is best to catch both exceptions in your code; the more specific the handling, the clearer the output. Note that HTTPError is a subclass of URLError, so the except clause for HTTPError must come before the one for URLError. Otherwise the URLError clause catches everything, and a 404 is reported through its reason as "Not Found" instead of as the status code 404.

The code is as follows:

```python
from urllib import request
from urllib import error

# Method 1: URLError and HTTPError (HTTPError must be caught first)
def Err():
    url = "https://segmentfault.com/zzz"
    req = request.Request(url)
    try:
        response = request.urlopen(req)
        html = response.read().decode("utf-8")
        print(html)
    except error.HTTPError as e:
        # the server answered with an error status code, e.g. 404
        print(e.code)
    except error.URLError as e:
        # the server could not be reached at all
        print(e.reason)

if __name__ == '__main__':
    Err()
```

You can change the URL to see the output for different kinds of errors.
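Why the order of the except clauses matters can be checked without any network access by building the exceptions by hand. This is a sketch: the helper names describe and describe_wrong_order and the "simulated DNS failure" message are hypothetical, and the HTTPError is created through its documented HTTPError(url, code, msg, hdrs, fp) constructor instead of coming from a real server:

```python
from urllib import error

def describe(exc):
    """Raise exc and report which handler caught it (HTTPError listed first)."""
    try:
        raise exc
    except error.HTTPError as e:
        return f"HTTPError: status code {e.code}"
    except error.URLError as e:
        return f"URLError: {e.reason}"

def describe_wrong_order(exc):
    """Same handlers in the wrong order: the URLError clause also matches HTTPError."""
    try:
        raise exc
    except error.URLError as e:
        return f"URLError: {e.reason}"
    except error.HTTPError as e:  # never reached
        return f"HTTPError: status code {e.code}"

# Exceptions constructed by hand to simulate a 404 and an unreachable host
not_found = error.HTTPError("https://segmentfault.com/zzz", 404, "Not Found", None, None)
unreachable = error.URLError("simulated DNS failure")

print(issubclass(error.HTTPError, error.URLError))  # True
print(describe(not_found))              # HTTPError: status code 404
print(describe_wrong_order(not_found))  # URLError: Not Found
print(describe(unreachable))            # URLError: simulated DNS failure
```

With the wrong order, the 404 is still caught, but only its reason text survives, which is exactly the loss of detail described above.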

The above is the detailed content of The simplest web crawler tutorial in python. For more information, please follow other related articles on the PHP Chinese website!