The multiprocessing module is an excellent library for handling concurrency in Python. Its API mirrors the threading module, but it runs work in separate processes rather than threads.

I had used this library a little before but never studied it in depth. This time, with some free time on my hands, I dug into it to clear up my doubts.

Today we will look at the apply_async and map methods. It is often said that both of them hand functions over to the processes in a process pool. I want to verify that.

Here is how the official documentation describes the two:

apply_async(func[, args[, kwds[, callback[, error_callback]]]])

A variant of the apply() method which returns a result object.

If callback is specified then it should be a callable which accepts a single argument. When the result becomes ready callback is applied to it, that is unless the call failed, in which case the error_callback is applied instead.

If error_callback is specified then it should be a callable which accepts a single argument. If the target function fails, then the error_callback is called with the exception instance.

Callbacks should complete immediately since otherwise the thread which handles the results will get blocked.
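To make the callback behavior described above concrete, here is a minimal sketch. The names safe_divide and run_demo are hypothetical and exist only for this illustration; the sketch assumes the callbacks simply append to lists in the parent process.

```python
from multiprocessing import Pool


def safe_divide(a, b):
    """Runs in a worker process; raises ZeroDivisionError when b is 0."""
    return a / b


def run_demo():
    """Submit one call that succeeds and one that fails (hypothetical helper)."""
    results = []  # filled by callback, in the parent process
    errors = []   # filled by error_callback, in the parent process

    pool = Pool(2)
    # Successful call: callback receives the return value.
    pool.apply_async(safe_divide, (6, 3),
                     callback=results.append,
                     error_callback=errors.append)
    # Failing call: error_callback receives the exception instance.
    pool.apply_async(safe_divide, (1, 0),
                     callback=results.append,
                     error_callback=errors.append)
    pool.close()  # no more tasks will be submitted
    pool.join()   # wait until both tasks and their callbacks are done
    return results, errors


if __name__ == '__main__':
    results, errors = run_demo()
    print('results:', results)
    print('errors:', [type(e).__name__ for e in errors])
```

Both callbacks only append to a list, so they return at once and do not hold up the thread that handles results.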

map(func, iterable[, chunksize])

A parallel equivalent of the map() built-in function (it supports only one iterable argument though). It blocks until the result is ready.

This method chops the iterable into a number of chunks which it submits to the process pool as separate tasks. The (approximate) size of these chunks can be specified by setting chunksize to a positive integer.
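As a small illustration of the description above, the following sketch calls map with an explicit chunksize. The names square and run_map are hypothetical, and the pool size and chunk size are arbitrary values chosen for the example.

```python
from multiprocessing import Pool


def square(x):
    """Trivial task executed in a worker process."""
    return x * x


def run_map(n=10, workers=3, chunksize=4):
    """Square 0..n-1 in a pool; map blocks until every result is ready."""
    with Pool(workers) as pool:
        # The iterable is cut into chunks of roughly `chunksize` items,
        # and each chunk is submitted to the pool as one task.
        return pool.map(square, range(n), chunksize)


if __name__ == '__main__':
    print(run_map())
```

The results come back as a list in the same order as the input, regardless of which worker handled which chunk.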

A Pool provides a fixed number of worker processes for the caller to use, and these workers are started when the pool is created. When a new request is submitted and a worker is idle, that worker executes the request. If every worker is busy, the request waits in a queue until one of the workers finishes its current task and picks it up.
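One way to check how many processes actually serve the requests is to submit more tasks than the pool has workers and collect the process IDs. This is only a sketch: worker_pid and distinct_worker_pids are hypothetical names, and it assumes a pool created without maxtasksperchild, so the workers stay alive and are reused.

```python
import os
import time
from multiprocessing import Pool


def worker_pid(_):
    """Return the PID of the worker process that ran this task."""
    time.sleep(0.1)  # keep the worker busy briefly so tasks spread out
    return os.getpid()


def distinct_worker_pids(tasks=8, workers=2):
    """Run `tasks` tasks on `workers` workers and return the set of PIDs seen."""
    with Pool(workers) as pool:
        # chunksize=1 submits every item as its own task.
        return set(pool.map(worker_pid, range(tasks), chunksize=1))


if __name__ == '__main__':
    pids = distinct_worker_pids()
    print('8 tasks were served by %d process(es): %s' % (len(pids), sorted(pids)))
```

With 8 tasks and 2 workers, the set never holds more than 2 PIDs, which shows that waiting tasks are served by existing workers.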

Let’s take a look at the program:

from multiprocessing import Poolimport timeimport osdef func(msg):print('msg: %s %s' % (msg, os.getpid()))

time.sleep(3)print("end")if __name__ == '__main__':

pool = Pool(4)for i in range(4):

msg = 'hello %d' % (i)

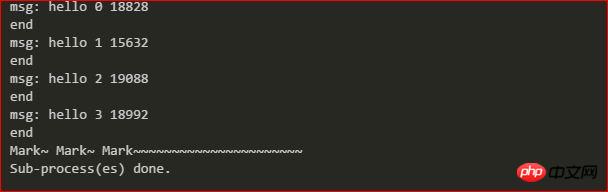

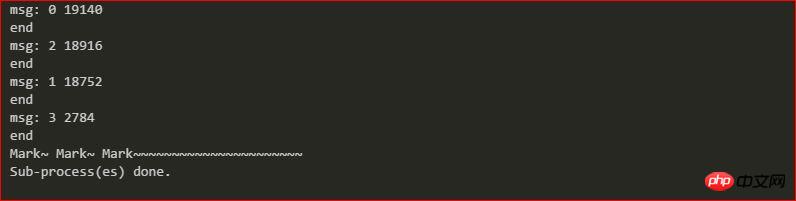

pool.apply_async(func, (msg, ))# pool.map(func, range(4))print("Mark~ Mark~ Mark~~~~~~~~~~~~~~~~~~~~~~")

pool.close()

pool.join() # 调用join之前,先调用close函数,否则会出错。执行完close后不会有新的进程加入到pool,join函数等待所有子进程结束print("Sub-process(es) done.")Run results:

from multiprocessing import Poolimport timeimport osdef func(msg):print('msg: %s %s' % (msg, os.getpid()))

time.sleep(3)print("end")if __name__ == '__main__':

pool = Pool(3)'''for i in range(4):

msg = 'hello %d' % (i)

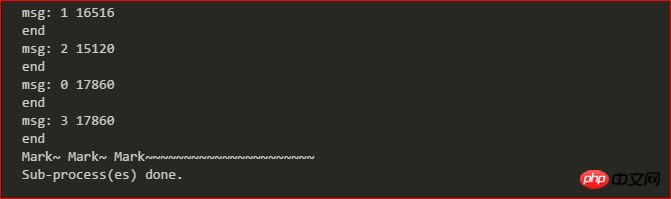

pool.apply_async(func, (msg, ))'''pool.map(func, range(4))print("Mark~ Mark~ Mark~~~~~~~~~~~~~~~~~~~~~~")

pool.close()

pool.join() # 调用join之前,先调用close函数,否则会出错。执行完close后不会有新的进程加入到pool,join函数等待所有子进程结束print("Sub-process(es) done.")
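The difference between the two programs comes down to blocking: apply_async returns as soon as the task is submitted, while map returns only when all tasks are done. The following sketch measures this. The names nap and submit_times are hypothetical, and the half-second sleep is an arbitrary value chosen to keep the demo short.

```python
import time
from multiprocessing import Pool


def nap(seconds):
    """Task that just sleeps, standing in for real work."""
    time.sleep(seconds)
    return seconds


def submit_times(seconds=0.5):
    """Return (time spent in apply_async, time spent in map) in seconds."""
    pool = Pool(2)

    start = time.perf_counter()
    handle = pool.apply_async(nap, (seconds,))
    async_elapsed = time.perf_counter() - start  # submission only, no waiting

    handle.wait()  # let the first task finish before timing map

    start = time.perf_counter()
    pool.map(nap, [seconds, seconds])            # blocks until both tasks end
    map_elapsed = time.perf_counter() - start

    pool.close()
    pool.join()
    return async_elapsed, map_elapsed


if __name__ == '__main__':
    async_elapsed, map_elapsed = submit_times()
    print('apply_async returned after %.4f s' % async_elapsed)
    print('map returned after %.4f s' % map_elapsed)
```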

Note that the second parameter of apply_async is the tuple of arguments for a single call. Each call to apply_async submits one task to the pool and returns immediately, because it is asynchronous, so to submit several tasks you need a for loop or a while loop. The second parameter of map, by contrast, is an iterable, so no loop is needed, and map blocks until every result is ready. That is why the "Mark~" line prints long before the tasks print "end" in the apply_async version, but only after all the tasks have finished in the map version. Remember: what both methods do is hand function calls to the processes in the pool in turn.
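The two calling styles can be put side by side. This is a minimal sketch rather than the programs above: greet, with_apply_async and with_map are hypothetical names, and the tasks return their message instead of printing it so that the results can be compared.

```python
from multiprocessing import Pool


def greet(i):
    """Build the message in a worker process and return it."""
    return 'hello %d' % i


def with_apply_async(n=4):
    """One apply_async call per task; results are fetched with get()."""
    pool = Pool(4)
    handles = [pool.apply_async(greet, (i,)) for i in range(n)]
    pool.close()
    pool.join()
    # Each handle is an AsyncResult; get() returns the task's return value.
    return [h.get() for h in handles]


def with_map(n=4):
    """A single map call over an iterable; no explicit loop is needed."""
    pool = Pool(4)
    results = pool.map(greet, range(n))
    pool.close()
    pool.join()
    return results


if __name__ == '__main__':
    print(with_apply_async())
    print(with_map())
```

Both functions return the same list, so the choice between them is mostly about whether the caller wants to keep working while the tasks run.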
