Encoding problems have always troubled developers, especially in Java: Java is a cross-platform language, so text constantly moves between platforms that use different encodings. This article covers the root causes of Java encoding problems; the differences between the encodings commonly met in Java; the scenarios in Java that require encoding; why Chinese text gets garbled; the places in Java Web development where encoding problems can occur; how to control the encoding of an HTTP request; and how to avoid Chinese encoding problems.

The basic unit for storing information in a computer is the byte, which is 8 bits, so a single byte can represent 256 different values, 0 ~ 255.

There are far more symbols to represent than that, so they cannot all fit in a single byte.

Computers therefore rely on a variety of encodings that translate between characters and bytes; common ones include ASCII, ISO-8859-1, GB2312, GBK, UTF-8, and UTF-16. Each one defines conversion rules, and by following them the computer can represent our characters correctly. These encodings are introduced below:

ASCII code

ASCII defines 128 characters in total, stored in the lower 7 bits of a byte. Codes 0 ~ 31 are control characters such as line feed and carriage return (127, delete, is also a control character), and 32 ~ 126 are printable characters that can be typed on a keyboard and displayed.
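To make the ASCII ranges concrete, here is a minimal Java sketch; the class AsciiRanges and its classify method are hypothetical names for illustration. It treats 127 (DEL) as a control character, which is how ASCII defines it.

```java
final class AsciiRanges {
    // Classifies a value using the ASCII ranges: 0-31 and 127 are control
    // characters, 32-126 are printable, anything else is outside ASCII.
    static String classify(int code) {
        if (code < 0 || code > 127) {
            return "not ASCII";   // ASCII only uses the lower 7 bits (0-127)
        }
        if (code <= 31 || code == 127) {
            return "control";     // e.g. 10 = line feed, 13 = carriage return, 127 = delete
        }
        return "printable";       // space, digits, letters, punctuation
    }

    public static void main(String[] args) {
        System.out.println(classify('\n'));   // control
        System.out.println(classify('A'));    // printable
        System.out.println(classify(0xE9));   // not ASCII
    }
}
```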

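ISO-8859-1

ISO-8859-1 is a single-byte encoding that extends ASCII to the full byte range 0x00 ~ 0xFF (256 characters), adding letters used in Western European languages. It cannot represent Chinese characters.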

GB2312

It is a double-byte encoding, and the total encoding range is A1 ~ F7, where A1 ~ A9 is the symbol area, containing 682 symbols, and B0 ~ F7 is the Chinese character area, containing 6763 Chinese characters.

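UTF-8

UTF-8 is a variable-length encoding, and the leading bits of each byte tell a decoder what role that byte plays:

If the highest bit (bit 8) of a byte is 0, the byte is an ASCII character on its own (00 ~ 7F).

If a byte starts with 11, it is the first byte of a multi-byte character, and the number of consecutive leading 1 bits gives the number of bytes in the character (for example, 110xxxxx starts a two-byte character).

If a byte starts with 10, it is not the first byte of a character; the decoder must look backward to find the first byte of the current character.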
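These byte rules are enough to find character boundaries in a UTF-8 byte array. A sketch under that assumption (Utf8ByteRoles and its methods are hypothetical helpers, and the code does not validate malformed input):

```java
import java.nio.charset.StandardCharsets;

final class Utf8ByteRoles {
    // Number of bytes in the character that starts with b,
    // or 0 when b is a continuation byte (10xxxxxx).
    static int sequenceLength(byte b) {
        int v = b & 0xFF;                      // read the byte as unsigned
        if ((v & 0x80) == 0) {
            return 1;                          // 0xxxxxxx: ASCII, one byte
        }
        if ((v & 0xC0) == 0x80) {
            return 0;                          // 10xxxxxx: not a first byte
        }
        int ones = 0;                          // 11xxxxxx: count the leading 1 bits
        for (int mask = 0x80; (v & mask) != 0; mask >>= 1) {
            ones++;                            // 110xxxxx -> 2, 1110xxxx -> 3, ...
        }
        return ones;
    }

    // Steps backward from index i to the first byte of the character containing it.
    static int startOfCharacter(byte[] bytes, int i) {
        while (i > 0 && sequenceLength(bytes[i]) == 0) {
            i--;
        }
        return i;
    }

    public static void main(String[] args) {
        byte[] utf8 = "a君".getBytes(StandardCharsets.UTF_8);   // 61 e5 90 9b
        for (byte b : utf8) {
            System.out.printf("%02x -> %d%n", b & 0xFF, sequenceLength(b));
        }
        System.out.println(startOfCharacter(utf8, 3));          // 1
    }
}
```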

2. Scenarios that require encoding in Java

As shown in the figure above, Reader is the parent class for reading characters in Java I/O, and InputStream is the parent class for reading bytes. InputStreamReader is the bridge from bytes to characters: during I/O it converts the bytes it reads into characters, delegating the actual byte-to-character decoding to StreamDecoder. The Charset that StreamDecoder decodes with is specified by the user. Note that if no Charset is specified, the default charset of the local environment is used; in a Chinese environment, for example, that is GBK. (Since Java 18 the default charset is UTF-8 on all platforms.)

For example, the following code writes Chinese text to a file and reads it back:

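```java
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;

public class StreamCharsetDemo {
    public static void main(String[] args) throws IOException {
        String file = "c:/stream.txt";
        String charset = "UTF-8";
        // Write: convert characters to a byte stream with the given charset
        FileOutputStream outputStream = new FileOutputStream(file);
        OutputStreamWriter writer = new OutputStreamWriter(outputStream, charset);
        try {
            writer.write("这是要保存的中文字符"); // "These are the Chinese characters to be saved"
        } finally {
            writer.close();
        }
        // Read: convert the bytes back to characters with the same charset
        FileInputStream inputStream = new FileInputStream(file);
        InputStreamReader reader = new InputStreamReader(inputStream, charset);
        StringBuffer buffer = new StringBuffer();
        char[] buf = new char[64];
        int count = 0;
        try {
            while ((count = reader.read(buf)) != -1) {
                buffer.append(buf, 0, count); // append the chars just read
            }
        } finally {
            reader.close();
        }
        System.out.println(buffer);
    }
}
```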

When an application performs I/O, there will generally be no garbled text as long as encoding and decoding use the same, explicitly specified Charset.

Encoding also happens in memory, whenever data is converted between characters and bytes:

1. The String class provides getBytes methods for converting a string to bytes, and constructors for converting bytes into a string.

```java
String s = "字符串";
byte[] b = s.getBytes("UTF-8");
String n = new String(b, "UTF-8");
```

2. Charset provides encode and decode, which correspond to the encoding from char[] to byte[] and the decoding from byte[] to char[] respectively.

```java
Charset charset = Charset.forName("UTF-8");
ByteBuffer byteBuffer = charset.encode(string);
CharBuffer charBuffer = charset.decode(byteBuffer);
```

...

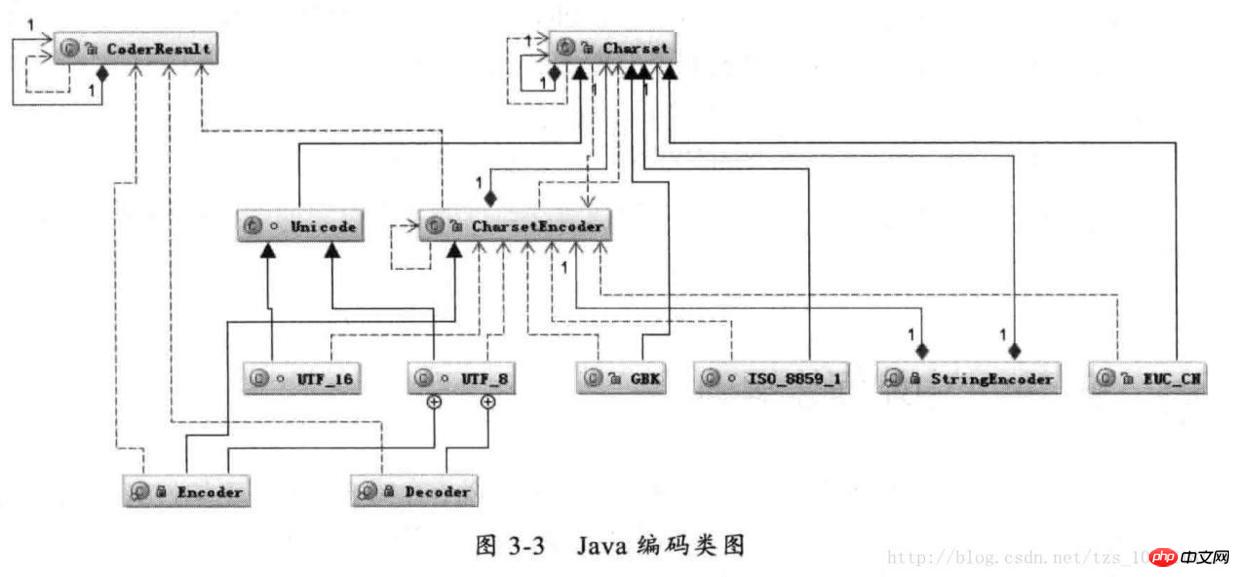

Java encoding class diagram

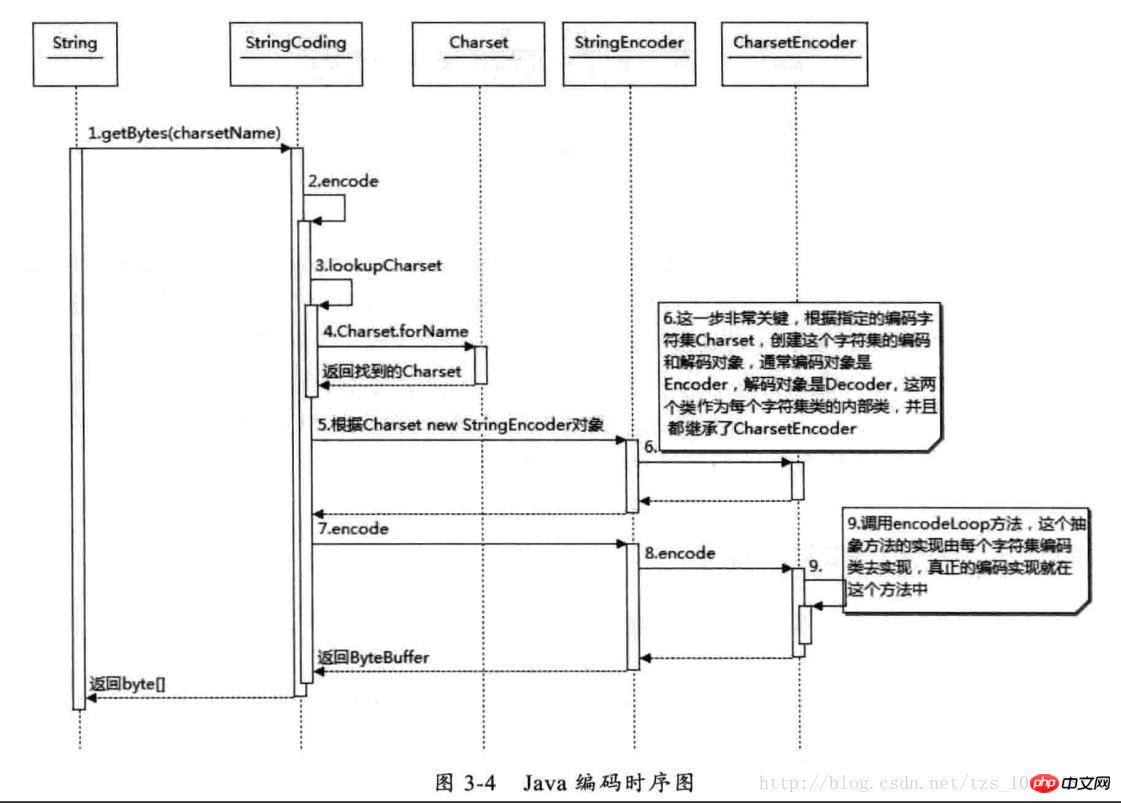

First, Charset.forName(charsetName) looks up the Charset for the given charsetName; a CharsetEncoder is then created from that Charset, and CharsetEncoder.encode encodes the string. Each encoding type has its own class, and the actual encoding is done in these classes. The following is the sequence diagram of the String.getBytes(charsetName) encoding process:

Java encoding sequence diagram

As the figure shows, the Charset is looked up by charsetName, and a CharsetEncoder is created from it. CharsetEncoder is the parent class of the encoders for all character sets; each charset defines in its own subclass how encoding is done. Once you have a CharsetEncoder, you call its encode method to perform the encoding. This is how String.getBytes encodes; other paths such as StreamEncoder work similarly.
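The lookup-then-encode steps described above can be reproduced with the public java.nio.charset API. This is a sketch, not the JDK's internal String.getBytes code path, and EncoderSteps is a hypothetical class name. One difference worth knowing: a freshly created CharsetEncoder reports unmappable characters by throwing, whereas String.getBytes silently substitutes a replacement byte.

```java
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.CharBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.Charset;
import java.nio.charset.CharsetEncoder;
import java.util.Arrays;

final class EncoderSteps {
    static byte[] encode(String text, String charsetName) {
        Charset charset = Charset.forName(charsetName);             // 1. look up the Charset by name
        CharsetEncoder encoder = charset.newEncoder();              // 2. charset-specific encoder
        try {
            ByteBuffer out = encoder.encode(CharBuffer.wrap(text)); // 3. chars -> bytes
            byte[] bytes = new byte[out.remaining()];
            out.get(bytes);
            return bytes;
        } catch (CharacterCodingException e) {
            // A newly created encoder reports unmappable characters instead of replacing them
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(encode("君山", "UTF-8")));
        // The concrete encoder class differs per charset (JDK-internal class names)
        System.out.println(Charset.forName("UTF-8").newEncoder().getClass().getName());
        System.out.println(Charset.forName("GBK").newEncoder().getClass().getName());
    }
}
```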

Chinese characters often turn into "?", which is most likely caused by wrongly using ISO-8859-1. Chinese characters lose information when encoded with ISO-8859-1, which is often called a "black hole" because it swallows any character it does not know. Since many basic Java frameworks and systems default to ISO-8859-1, garbled text appears easily. We analyze how the different forms of garbled text arise later.

Four of these encodings can handle Chinese characters: GB2312, GBK, UTF-16, and UTF-8. GB2312 and GBK follow similar encoding rules, but GBK covers a larger range and can represent all Chinese characters, so between the two, GBK should be chosen. UTF-16 and UTF-8 both encode Unicode, but with different rules. UTF-16 is relatively the most efficient: converting between characters and bytes is simpler and string operations are faster, so it suits data moving between local disk and memory, where characters and bytes must be converted quickly; Java's in-memory encoding, for example, is UTF-16. It is not well suited to network transmission, however, because network transmission can easily damage the byte stream, and a damaged UTF-16 byte stream is hard to recover. UTF-8 is better suited to network transmission: it stores ASCII characters in a single byte, and damage to one character does not affect the characters after it. Its encoding efficiency lies between GBK and UTF-16. UTF-8 therefore balances efficiency and robustness and is an ideal encoding for Chinese.
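The size trade-off between these encodings is easy to check. A minimal sketch (EncodedSizes is a hypothetical helper); it measures UTF-16 as UTF-16BE so that the byte-order mark Java's "UTF-16" charset prepends does not skew the count:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class EncodedSizes {
    // Bytes needed to store text in the given encoding.
    static int byteCount(String text, Charset charset) {
        return text.getBytes(charset).length;
    }

    public static void main(String[] args) {
        Charset gbk = Charset.forName("GBK");
        for (String s : new String[] {"淘宝", "ab"}) {
            System.out.printf("%s: GBK=%d UTF-16BE=%d UTF-8=%d%n", s,
                    byteCount(s, gbk),
                    byteCount(s, StandardCharsets.UTF_16BE),   // UTF-16 without a byte-order mark
                    byteCount(s, StandardCharsets.UTF_8));
        }
        // Java's "UTF-16" charset prepends a 2-byte byte-order mark when encoding
        System.out.println(byteCount("淘宝", StandardCharsets.UTF_16));
    }
}
```

For "淘宝" this gives 4 bytes in GBK, 4 in UTF-16BE and 6 in UTF-8; for "ab" it gives 2, 4 and 2. UTF-8 is compact for ASCII, while GBK is compact for Chinese.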

For Chinese text, encoding is involved wherever there is I/O. As mentioned earlier, I/O operations trigger encoding, and most garbled text caused by I/O comes from network I/O: almost every application now involves network operations, and data travels over the network as bytes, so all data must be serialized into bytes. In Java, objects to be serialized must implement the Serializable interface.

How should the actual size of a piece of text be measured? I once ran into this problem: I wanted to compress cookies to reduce the amount of data sent over the network. I tried several compression algorithms and found that the number of characters went down after compression, but the number of bytes did not. The so-called compression merely used an encoding to turn several single-byte characters into one multi-byte character, which reduces String.length() but not the final byte count. For example, the two characters "ab" might be turned into one unusual character by some encoding; the character count drops from two to one, but encoding that character in UTF-8 may take three or more bytes. Likewise, storing the integer 1234567 as characters takes 7 bytes in UTF-8 and 14 bytes in UTF-16, whereas storing it as an int takes only 4 bytes. So judging the size of a text by its number of characters is meaningless: the same characters stored in different encodings end up with different sizes, so you must look at the encoding used to turn the characters into bytes.
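The numbers in the cookie example can be reproduced with a short sketch; TextSize.sizes is a hypothetical helper, and UTF-16 is measured as UTF-16BE (no byte-order mark) to match the 14-byte figure:

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class TextSize {
    // {characters, UTF-8 bytes, UTF-16 bytes (big-endian, no byte-order mark)}
    static int[] sizes(String text) {
        return new int[] {
            text.length(),
            text.getBytes(StandardCharsets.UTF_8).length,
            text.getBytes(StandardCharsets.UTF_16BE).length
        };
    }

    public static void main(String[] args) {
        int[] digits = sizes("1234567");
        System.out.println(digits[0] + " chars, " + digits[1] + " UTF-8 bytes, "
                + digits[2] + " UTF-16 bytes");                          // 7 chars, 7 UTF-8 bytes, 14 UTF-16 bytes
        byte[] asInt = ByteBuffer.allocate(Integer.BYTES).putInt(1234567).array();
        System.out.println(asInt.length + " bytes as an int");           // 4 bytes as an int

        int[] one = sizes("淘");                                         // a single non-ASCII character
        System.out.println(one[0] + " char, " + one[1] + " UTF-8 bytes"); // 1 char, 3 UTF-8 bytes
    }
}
```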

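The Chinese characters we see all appear in the form of characters. In Java, for example, the two characters "淘宝" have the decimal values 28120 and 23453, or 6dd8 and 5b9d in hexadecimal; these two numbers uniquely represent the two characters. A Java char is 16 bits, i.e. two bytes, so the two Chinese characters stored as chars take up four bytes of memory.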
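A quick way to confirm these values is to print each char of the string as a number. A minimal sketch (CharValues is a hypothetical name); the 4-byte figure counts only the char data, not the String object's overhead:

```java
final class CharValues {
    // The numeric value of each char (a 16-bit UTF-16 code unit) in the string.
    static int[] values(String text) {
        int[] result = new int[text.length()];
        for (int i = 0; i < text.length(); i++) {
            result[i] = text.charAt(i);
        }
        return result;
    }

    public static void main(String[] args) {
        String text = "淘宝";
        for (int v : values(text)) {
            System.out.printf("%c: decimal %d, hex %x%n", v, v, v);
        }
        // Character data only: 2 chars x Character.BYTES (2) = 4 bytes
        System.out.println(text.length() * Character.BYTES);
    }
}
```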

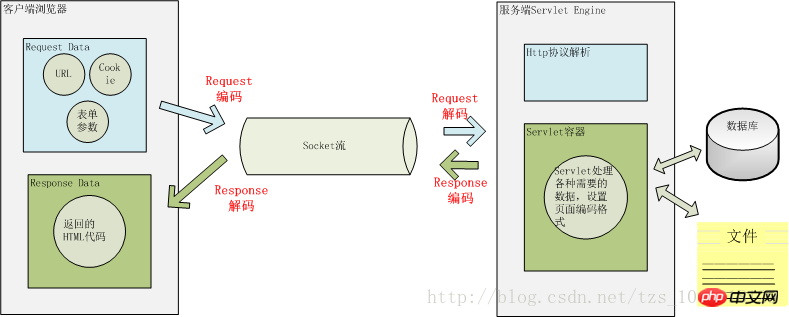

With these two questions cleared up, let's look at where encoding conversions can occur in Java Web.

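When a user sends an HTTP request from the browser, the URL, cookies, and parameters need to be encoded. After the server receives the HTTP request, it parses the HTTP protocol, and the URI, cookies, and POST form parameters must be decoded. The server may also read data from a database or from text files stored locally or elsewhere on the network, and all of this data can have encoding problems. After the servlet has processed all the request data, it encodes the result and sends it over a socket to the browser that made the request, which decodes it back into text. The process is shown in the figure below: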

Encoding in a single HTTP request

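The user submits a URL that may contain Chinese, so it must be encoded. How should this URL be encoded? According to what rules? And how is it decoded? Consider the URL in the figure below: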

Taking Tomcat as the servlet engine, the parts of the URL in the figure above correspond to the following configuration:

The Port is configured in Tomcat's

junshanExample /servlets/servlet/*

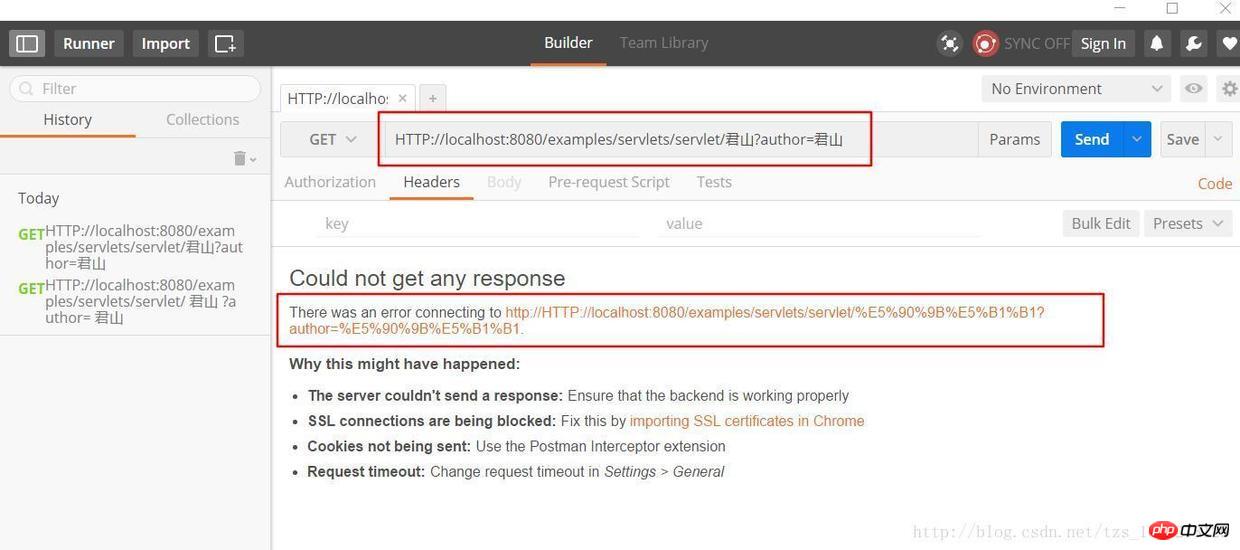

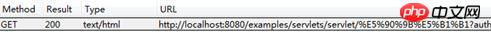

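In the figure above, both the PathInfo and the QueryString contain Chinese. When we type this URL directly into the browser, how do the browser and the server encode and parse it? To check how the browser encodes the URL, I used the 360 Speed Browser (360极速浏览器) with the Postman plugin to observe the actual content of the request URL. Here is the URL: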

HTTP://localhost:8080/examples/servlets/servlet/君山?author=君山

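The encoding of 君山 is e5 90 9b e5 b1 b1, which differs from the result in the book 深入分析 Java Web 技术内幕 (In-Depth Analysis of Java Web Technology Internals). This is because my browser and plugin differ from the original author's, and different browsers use different default encodings. The result in the book was: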

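君山 was encoded as e5 90 9b e5 b1 b1 and be fd c9 bd respectively. From the encodings described earlier, the PathInfo is UTF-8 encoded while the QueryString is GBK encoded. As for the "%": according to RFC 3986, the URL encoding specification, the browser encodes non-ASCII characters into bytes using some encoding, writes each byte as two hexadecimal digits, and prefixes each byte with "%", which gives the URL format shown in the figure above.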
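The percent-encoding rule described above can be sketched by hand: encode the text to bytes with some charset, keep the RFC 3986 unreserved ASCII characters, and write every other byte as "%" plus two hex digits. PercentEncoding is a hypothetical helper; java.net.URLEncoder is shown for comparison, but it implements HTML form encoding (for example, a space becomes "+"), so it is not an exact RFC 3986 encoder.

```java
import java.net.URLEncoder;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class PercentEncoding {
    // Encode the text with the given charset, keep unreserved ASCII as-is,
    // and write every other byte as %XX.
    static String percentEncode(String text, Charset charset) {
        StringBuilder sb = new StringBuilder();
        for (byte b : text.getBytes(charset)) {
            int v = b & 0xFF;
            if ((v >= 'a' && v <= 'z') || (v >= 'A' && v <= 'Z') || (v >= '0' && v <= '9')
                    || v == '-' || v == '.' || v == '_' || v == '~') {
                sb.append((char) v);                              // RFC 3986 unreserved characters
            } else {
                sb.append('%').append(String.format("%02X", v));  // e.g. 0xE5 -> %E5
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(percentEncode("君山", StandardCharsets.UTF_8));  // %E5%90%9B%E5%B1%B1
        System.out.println(percentEncode("君山", Charset.forName("GBK")));   // GBK bytes instead
        System.out.println(URLEncoder.encode("君山", StandardCharsets.UTF_8)); // same as the UTF-8 line here
    }
}
```

The same two characters produce different percent sequences depending on the charset, which is exactly why the server cannot decode them reliably without knowing which charset the browser chose.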

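These results show that the browser encodes PathInfo and QueryString differently, and different browsers may also encode PathInfo differently, which makes decoding on the server very difficult. Let's take Tomcat as an example and see how it decodes this URL.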

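The request URL is parsed in the parseRequestLine method of org.apache.coyote.http11.InternalInputBuffer, which stores the byte[] of the incoming URL in the corresponding attributes of org.apache.coyote.Request. At this point the URL is still in byte form; the conversion to chars happens in the convertURI method of org.apache.catalina.connector.CoyoteAdapter: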

```java
protected void convertURI(MessageBytes uri, Request request) throws Exception {
    ByteChunk bc = uri.getByteChunk();
    int length = bc.getLength();
    CharChunk cc = uri.getCharChunk();
    cc.allocate(length, -1);
    // Charset configured on the connector (URIEncoding); may be null
    String enc = connector.getURIEncoding();
    if (enc != null) {
        B2CConverter conv = request.getURIConverter();
        try {
            if (conv == null) {
                conv = new B2CConverter(enc);
                request.setURIConverter(conv);
            }
        } catch (IOException e) {...}
        if (conv != null) {
            try {
                // Decode the URI bytes with the configured charset
                conv.convert(bc, cc, cc.getBuffer().length - cc.getEnd());
                uri.setChars(cc.getBuffer(), cc.getStart(), cc.getLength());
                return;
            } catch (IOException e) {...}
        }
    }
    // Default encoding: fast conversion
    // (each byte is widened to a char, which is effectively ISO-8859-1)
    byte[] bbuf = bc.getBuffer();
    char[] cbuf = cc.getBuffer();
    int start = bc.getStart();
    for (int i = 0; i < length; i++) {
        cbuf[i] = (char) (bbuf[i + start] & 0xff);
    }
    uri.setChars(cbuf, 0, length);
}
```

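From the code above, the charset used to decode the URI part of the URL comes from the connector's URIEncoding setting (connector.getURIEncoding()); if it is not set, the fallback loop widens each byte directly to a char, which amounts to decoding with ISO-8859-1.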
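The fallback branch of convertURI, used when no URIEncoding is configured, widens each byte to a char. The sketch below (UriFallback is a hypothetical name) assumes the URI bytes have already been percent-decoded, and shows that this cast gives the same result as ISO-8859-1 decoding, which is why UTF-8 bytes for Chinese come out garbled:

```java
import java.nio.charset.StandardCharsets;

final class UriFallback {
    // Same idea as the fallback loop in convertURI: widen each byte to a char.
    static String castBytesToChars(byte[] bytes) {
        char[] chars = new char[bytes.length];
        for (int i = 0; i < bytes.length; i++) {
            chars[i] = (char) (bytes[i] & 0xFF);   // 0x00-0xFF maps to U+0000-U+00FF
        }
        return new String(chars);
    }

    public static void main(String[] args) {
        byte[] uri = "/servlet/君山".getBytes(StandardCharsets.UTF_8);
        String cast = castBytesToChars(uri);
        // The fallback is exactly ISO-8859-1 decoding...
        System.out.println(cast.equals(new String(uri, StandardCharsets.ISO_8859_1))); // true
        // ...so each Chinese character shows up as three Latin-1 characters
        System.out.println(cast);
    }
}
```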

How is the QueryString parsed? The QueryString of a GET request and the form parameters of a POST request are both stored as Parameters, and their values are retrieved with request.getParameter. They are decoded the first time request.getParameter is called, which invokes the parseParameters method of org.apache.catalina.connector.Request. This method decodes the parameters passed by both GET and POST, but the two may be decoded with different charsets; POST form decoding is covered later. Where is the charset for decoding the QueryString defined? The QueryString is sent to the server in the HTTP header, as part of the URL, so is it decoded with the same charset as the URI? Since browsers encode PathInfo and QueryString differently, as seen above, we can guess that the decoding charsets differ too, and indeed they do. The QueryString is decoded either with the charset defined in the Content-Type header or with the default ISO-8859-1. To use the charset defined in Content-Type, you must set the connector's useBodyEncodingForURI to true.
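Because the QueryString's charset is chosen by the server rather than carried in the URL itself, a parser has to be told which one to use. A minimal sketch using java.net.URLDecoder (QueryStringParser is hypothetical, not Tomcat's parseParameters, and it ignores edge cases such as repeated parameter names):

```java
import java.net.URLDecoder;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

final class QueryStringParser {
    // Splits name=value pairs and percent-decodes them with the given charset.
    static Map<String, String> parse(String query, Charset charset) {
        Map<String, String> params = new LinkedHashMap<>();
        for (String pair : query.split("&")) {
            if (pair.isEmpty()) {
                continue;
            }
            int eq = pair.indexOf('=');
            String name = eq < 0 ? pair : pair.substring(0, eq);
            String value = eq < 0 ? "" : pair.substring(eq + 1);
            params.put(URLDecoder.decode(name, charset), URLDecoder.decode(value, charset));
        }
        return params;
    }

    public static void main(String[] args) {
        String query = "author=%E5%90%9B%E5%B1%B1";   // 君山 percent-encoded as UTF-8
        System.out.println(parse(query, StandardCharsets.UTF_8).get("author"));      // 君山
        System.out.println(parse(query, StandardCharsets.ISO_8859_1).get("author")); // garbled
    }
}
```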

As the URL encoding and decoding process above shows, it is fairly complicated, and it is not fully under the application's control. We should therefore avoid non-ASCII characters in URLs as much as possible; otherwise we are likely to run into garbled text. Where they cannot be avoided, it is best to set both URIEncoding and useBodyEncodingForURI on the Connector.

When the client initiates an HTTP request, in addition to the URL above, it may also pass other parameters in the Header such as Cookie, redirectPath, etc. These user-set values may also have encoding problems. How does Tomcat decode them?

Header items are also decoded when request.getHeader is called. If the requested header item has not been decoded yet, the toString method of MessageBytes is called, and this method converts bytes to chars using ISO-8859-1 by default. We cannot configure any other decoding charset for headers, so non-ASCII characters placed in a header will always come out garbled.

The same goes for adding headers: do not put non-ASCII characters in a header. If you must, first encode them with org.apache.catalina.util.URLEncoder before adding them to the header, so no information is lost on the way from the browser to the server. When reading these items, simply decode them with the corresponding charset.
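The header advice above can be sketched with the JDK's java.net.URLEncoder and URLDecoder, used here in place of Tomcat's org.apache.catalina.util.URLEncoder; the UTF-8 charset and the HeaderValues helper are assumptions for illustration:

```java
import java.net.URLDecoder;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

final class HeaderValues {
    // Before setting a header: turn non-ASCII text into pure-ASCII %XX sequences.
    static String toHeaderValue(String text) {
        return URLEncoder.encode(text, StandardCharsets.UTF_8);
    }

    // After reading the header: decode with the same charset that was used to encode.
    static String fromHeaderValue(String headerValue) {
        return URLDecoder.decode(headerValue, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String header = toHeaderValue("君山");
        System.out.println(header);                  // %E5%90%9B%E5%B1%B1
        System.out.println(fromHeaderValue(header)); // 君山
    }
}
```

Because the encoded value is pure ASCII, it survives the server's ISO-8859-1 header decoding unchanged.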

As mentioned earlier, the parameters submitted by a POST form are decoded the first time request.getParameter is called. They are passed differently from the QueryString: they travel to the server in the HTTP body. When we click a form's submit button, the browser first encodes the form fields with the charset from the Content-Type and then submits them, and the server decodes them with the same Content-Type charset. Parameters submitted through POST forms therefore rarely cause problems, and this charset is under our control: it can be set with request.setCharacterEncoding(charset), which must be called before the parameters are first read.
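How a server might pick the decoding charset from Content-Type can be sketched as follows. ContentTypeCharset.charsetOf is a hypothetical helper, not Tomcat's implementation; it falls back to ISO-8859-1 when no charset parameter is present:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

final class ContentTypeCharset {
    // Extracts the charset parameter from a Content-Type header value,
    // falling back to ISO-8859-1 when none is given.
    static Charset charsetOf(String contentType) {
        if (contentType != null) {
            for (String part : contentType.split(";")) {
                String p = part.trim();
                if (p.toLowerCase(Locale.ROOT).startsWith("charset=")) {
                    String name = p.substring("charset=".length()).trim();
                    if (name.length() >= 2 && name.startsWith("\"") && name.endsWith("\"")) {
                        name = name.substring(1, name.length() - 1);   // charset="UTF-8"
                    }
                    return Charset.forName(name);
                }
            }
        }
        return StandardCharsets.ISO_8859_1;
    }

    public static void main(String[] args) {
        System.out.println(charsetOf("application/x-www-form-urlencoded; charset=UTF-8").name()); // UTF-8
        System.out.println(charsetOf("application/x-www-form-urlencoded").name());                // ISO-8859-1
    }
}
```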

Parameters of type multipart/form-data, i.e. file uploads, also use the charset defined in Content-Type. Note that the uploaded file itself is transferred to the server's local temporary directory as a byte stream, which involves no character encoding; encoding only happens when the file content is added to the parameters, and if the content cannot be encoded with this charset, the default ISO-8859-1 is used.

Once the requested resource has been obtained, its content is returned to the client browser through the Response. This requires encoding on the server and decoding in the browser. The charset for both can be set with response.setCharacterEncoding; it overrides the value of request.getCharacterEncoding and is sent to the client in the Content-Type header. When the browser receives the response stream, it decodes it with the charset in Content-Type. If the Content-Type of the returned HTTP header sets no charset, the browser decodes using the charset declared in the HTML page's meta tag; if that is not defined either, the browser falls back to its default encoding.

Besides URL and parameter encoding, many other places on the server can involve encoding, such as reading XML, Velocity templates, JSPs, or data from a database.

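An XML file can specify its encoding in its header, through the encoding attribute of the XML declaration, for example `<?xml version="1.0" encoding="UTF-8"?>`.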

Setting the encoding of Velocity templates:

services.VelocityService.input.encoding=UTF-8

Setting the encoding in a JSP:

<%@page contentType="text/html; charset=UTF-8"%>

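Databases are accessed through a client-side JDBC driver. When storing and retrieving data through JDBC, the encoding must match the database's internal encoding; it can be specified in the JDBC URL, for example for MySQL: url="jdbc:mysql://localhost:3306/DB?useUnicode=true&characterEncoding=GBK".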

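Next, let's look at how to deal with garbled text when we run into it. The only cause of garbled text is that the charsets used for encoding and decoding differ in a char-to-byte or byte-to-char conversion. Because a single operation often involves several rounds of encoding and decoding, it is hard to find which step went wrong when garbled text appears. Below we analyze several common symptoms.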

For example, the string "淘！我喜欢！" becomes "ÌÔ£¡ÎÒÏ²»¶£¡". The encoding process is shown in the figure below:

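The string was decoded with a different charset from the one used to encode it, so the Chinese characters turned into unreadable garbage, and each Chinese character became two garbled characters.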
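This first case can be reproduced in a few lines, assuming (as the garbled output suggests) that the text was encoded as GBK and decoded as ISO-8859-1; Mojibake.mismatch is a hypothetical helper:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class Mojibake {
    // Encode with one charset, decode with another: the classic mismatch.
    static String mismatch(String text, Charset encodeWith, Charset decodeWith) {
        return new String(text.getBytes(encodeWith), decodeWith);
    }

    public static void main(String[] args) {
        String garbled = mismatch("淘！我喜欢！", Charset.forName("GBK"), StandardCharsets.ISO_8859_1);
        System.out.println(garbled);           // each Chinese character becomes two Latin-1 characters
        System.out.println(garbled.length());  // 12
    }
}
```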

For example, the string "淘！我喜欢！" becomes "??????". The encoding process is shown in the figure below:

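After Chinese characters and Chinese punctuation are encoded with ISO-8859-1, which does not support Chinese, every character becomes "?". This is because ISO-8859-1 represents every character outside its code range as 3f; this is the so-called "black hole", where every character ISO-8859-1 does not recognize becomes "?".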
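The "black hole" is easy to observe: encoding Chinese text with ISO-8859-1 yields one 0x3F byte per character, while characters inside its range survive. A minimal sketch (BlackHole is a hypothetical name):

```java
import java.nio.charset.StandardCharsets;

final class BlackHole {
    // Encodes with ISO-8859-1; characters outside 0x00-0xFF are replaced by 0x3F ('?').
    static byte[] encodeLatin1(String text) {
        return text.getBytes(StandardCharsets.ISO_8859_1);
    }

    public static void main(String[] args) {
        byte[] bytes = encodeLatin1("淘！我喜欢！");
        for (byte b : bytes) {
            System.out.printf("%02x ", b);                                  // 3f 3f 3f 3f 3f 3f
        }
        System.out.println();
        System.out.println(new String(bytes, StandardCharsets.ISO_8859_1)); // ??????
    }
}
```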

For example, the string "淘！我喜欢！" becomes "????????????". The encoding process is shown in the figure below:

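This case is more complicated: the Chinese text goes through several rounds of encoding, and if any one encoding or decoding step is wrong, the Chinese characters can still end up as "?". When this happens, examine each intermediate encoding step carefully to find where the error occurs.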
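One possible chain that produces twelve question marks is sketched below. The steps are an assumption for illustration, not necessarily the chain in the figure: GBK encoding (two bytes per character), a wrong ISO-8859-1 decode (twelve Latin-1 characters), and then a hypothetical later stage that can only encode US-ASCII. ChainedCorruption is a hypothetical name.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class ChainedCorruption {
    static String chain(String text) {
        byte[] gbk = text.getBytes(Charset.forName("GBK"));            // step 1: correct GBK encoding
        String misread = new String(gbk, StandardCharsets.ISO_8859_1); // step 2: wrong decode -> Latin-1 chars
        byte[] ascii = misread.getBytes(StandardCharsets.US_ASCII);    // step 3: hypothetical ASCII-only stage
        return new String(ascii, StandardCharsets.US_ASCII);           // unmappable chars are now '?'
    }

    public static void main(String[] args) {
        System.out.println(chain("淘！我喜欢！"));   // ????????????
    }
}
```

Each step on its own looks harmless; only by checking the charset at every hop can the faulty one be found.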

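Another case arises when we get parameter values through request.getParameter. If we call it directly:

```java
String value = request.getParameter(name);
```

the value is garbled, but if we use the following instead:

```java
String value = new String(request.getParameter(name).getBytes("ISO-8859-1"), "GBK");
```

the value we get contains the correct Chinese characters. What causes this?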

See the figure below:

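Here is what happens. ISO-8859-1 covers the range 0000-00FF, which corresponds exactly to the range of a single byte. This property guarantees that encoding and decoding with ISO-8859-1 keep the byte values "unchanged". Although each Chinese character was wrongly "split" into two European characters during network transmission, the output side also uses ISO-8859-1, so the two halves of each split character are joined back together and form the correct Chinese character again. Although this finally yields the correct Chinese characters, this irregular way of getting parameter values is still not recommended, because it adds an extra round of encoding and decoding. The garbled text in this case occurs because the useBodyEncodingForURI option in Tomcat's configuration is not set to "true", so the first parse uses ISO-8859-1.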
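The repair trick works because ISO-8859-1 maps every byte value 0x00 to 0xFF to a char and back without loss. A sketch of the round trip, assuming the browser sent GBK bytes and the server decoded them as ISO-8859-1 (Latin1RoundTrip.repair is a hypothetical helper):

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class Latin1RoundTrip {
    // Undo a wrong ISO-8859-1 decode: recover the original bytes, then decode correctly.
    static String repair(String wronglyDecoded, Charset realCharset) {
        byte[] original = wronglyDecoded.getBytes(StandardCharsets.ISO_8859_1);
        return new String(original, realCharset);
    }

    public static void main(String[] args) {
        Charset gbk = Charset.forName("GBK");
        byte[] sent = "君山".getBytes(gbk);                            // browser sends GBK bytes
        String param = new String(sent, StandardCharsets.ISO_8859_1);   // server decodes as ISO-8859-1
        System.out.println(param);                                      // garbled
        System.out.println(repair(param, gbk));                         // 君山
    }
}
```

Configuring the server so that the first decode uses the right charset remains the better fix; this repair only reverses one specific mistake.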

This article first summarized the differences between several common encodings, then introduced the encodings that support Chinese and compared their usage scenarios. It then covered the places in Java that involve encoding and how Java supports encoding. Using network I/O as an example, it focused on where encoding occurs in an HTTP request and how Tomcat parses the HTTP protocol, and finally analyzed the causes of the garbled text we commonly run into.

To sum up, to solve Chinese encoding problems, first work out where character-to-byte encoding and byte-to-character decoding happen. The most common places are reading data from and storing data to disk, and transmitting data over the network. Then, for each of these places, find out how the framework or system handling the data controls encoding, set the encoding correctly, and avoid relying on the default encoding of the software or the operating system platform.
