Preface

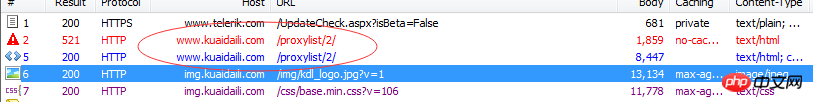

We maintain a proxy pool project on GitHub. Its proxies come from crawling websites that publish free proxies. One morning, a user told me that one of the proxy-scraping interfaces had stopped working and was returning status 521. I ran the code to help troubleshoot, and it was indeed the case.

Comparing packet captures in Fiddler, I could be fairly sure of the cause: the site uses JavaScript to generate an encrypted cookie, and a request that lacks this cookie gets 521.

Open Fiddler and load the target site (http://www.kuaidaili.com/proxylist/2/) in a browser. You will see that the browser requests the page twice: the first request returns 521, and the second returns the data normally. People who have never built a website or have little crawling experience may wonder why this happens, and why the browser gets the data while the code does not.

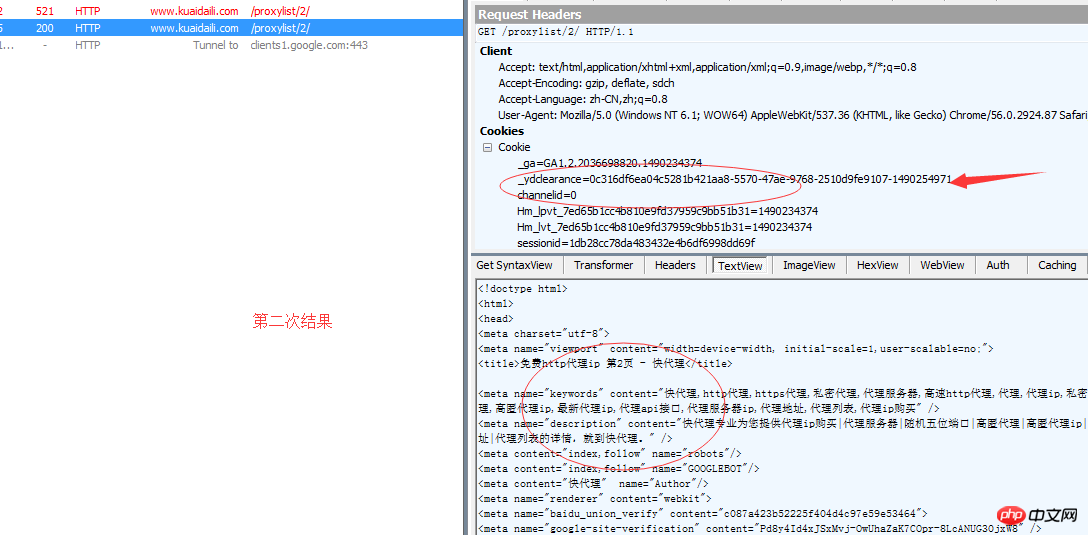
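Before digging into the two responses, it can help to make the crawler recognize this situation explicitly. The following is a minimal Python 3 sketch, assuming the challenge page is identified by status 521 plus an embedded script; the function name `looks_like_js_challenge` and the sample bodies are hypothetical and not part of the original project.

```python
CHALLENGE_STATUS = 521  # the status code reported in this article


def looks_like_js_challenge(status_code, body):
    """Heuristic: status 521 and a script in the body suggest a cookie challenge."""
    if status_code != CHALLENGE_STATUS:
        return False
    # A plain error page has no script; the challenge page does.
    return "<script" in body.lower()


# Hypothetical sample bodies, for illustration only.
challenge_body = "<html><body><script>function lq(VA) {}</script></body></html>"
normal_body = "<html><body><table>proxy list</table></body></html>"

print(looks_like_js_challenge(521, challenge_body))
print(looks_like_js_challenge(200, normal_body))
```

A check like this lets the crawler log "cookie challenge" rather than treating 521 as a generic server failure.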

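Comparing the two exchanges carefully reveals two things. First, the second request carries a cookie that the first one lacks:

_ydclearance=0c316df6ea04c5281b421aa8-5570-47ae-9768-2510d9fe9107-1490254971

Second, the body of the first (521) response is a piece of obfuscated JavaScript: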

    function lq(VA) {
        var qo, mo = "", no = "",
            oo = [0x8c, 0xcd, 0x4c, 0xf9, 0xd7, 0x4d, 0x25, 0xba, 0x3c, 0x16, 0x96, 0x44, 0x8d, 0x0b, 0x90, 0x1e, 0xa3, 0x39, 0xc9, 0x86, 0x23, 0x61, 0x2f, 0xc8, 0x30, 0xdd, 0x57, 0xec, 0x92, 0x84, 0xc4, 0x6a, 0xeb, 0x99, 0x37, 0xeb, 0x25, 0x0e, 0xbb, 0xb0, 0x95, 0x76, 0x45, 0xde, 0x80, 0x59, 0xf6, 0x9c, 0x58, 0x39, 0x12, 0xc7, 0x9c, 0x8d, 0x18, 0xe0, 0xc5, 0x77, 0x50, 0x39, 0x01, 0xed, 0x93, 0x39, 0x02, 0x7e, 0x72, 0x4f, 0x24, 0x01, 0xe9, 0x66, 0x75, 0x4e, 0x2b, 0xd8, 0x6e, 0xe2, 0xfa, 0xc7, 0xa4, 0x85, 0x4e, 0xc2, 0xa5, 0x96, 0x6b, 0x58, 0x39, 0xd2, 0x7f, 0x44, 0xe5, 0x7b, 0x48, 0x2d, 0xf6, 0xdf, 0xbc, 0x31, 0x1e, 0xf6, 0xbf, 0x84, 0x6d, 0x5e, 0x33, 0x0c, 0x97, 0x5c, 0x39, 0x26, 0xf2, 0x9b, 0x77, 0x0d, 0xd6, 0xc0, 0x46, 0x38, 0x5f, 0xf4, 0xe2, 0x9f, 0xf1, 0x7b, 0xe8, 0xbe, 0x37, 0xdf, 0xd0, 0xbd, 0xb9, 0x36, 0x2c, 0xd1, 0xc3, 0x40, 0xe7, 0xcc, 0xa9, 0x52, 0x3b, 0x20, 0x40, 0x09, 0xe1, 0xd2, 0xa3, 0x80, 0x25, 0x0a, 0xb2, 0xd8, 0xce, 0x21, 0x69, 0x3e, 0xe6, 0x80, 0xfd, 0x73, 0xab, 0x51, 0xde, 0x60, 0x15, 0x95, 0x07, 0x94, 0x6a, 0x18, 0x9d, 0x37, 0x31, 0xde, 0x64, 0xdd, 0x63, 0xe3, 0x57, 0x05, 0x82, 0xff, 0xcc, 0x75, 0x79, 0x63, 0x09, 0xe2, 0x6c, 0x21, 0x5c, 0xe0, 0x7d, 0x4a, 0xf2, 0xd8, 0x9c, 0x22, 0xa3, 0x3d, 0xba, 0xa0, 0xaf, 0x30, 0xc1, 0x47, 0xf4, 0xca, 0xee, 0x64, 0xf9, 0x7b, 0x55, 0xd5, 0xd2, 0x4c, 0xc9, 0x7f, 0x25, 0xfe, 0x48, 0xcd, 0x4b, 0xcc, 0x81, 0x1b, 0x05, 0x82, 0x38, 0x0e, 0x83, 0x19, 0xe3, 0x65, 0x3f, 0xbf, 0x16, 0x88, 0x93, 0xdd, 0x3b];
        qo = "qo=241; do{oo[qo]=(-oo[qo])&0xff; oo[qo]=(((oo[qo]>>3)|((oo[qo]<<5)&0xff))-70)&0xff;} while(--qo>=2);";
        eval(qo);
        qo = 240;
        do {
            oo[qo] = (oo[qo] - oo[qo - 1]) & 0xff;
        } while (--qo >= 3);
        qo = 1;
        for (; ;) {
            if (qo > 240) break;
            oo[qo] = ((((((oo[qo] + 2) & 0xff) + 76) & 0xff) << 1) & 0xff) | (((((oo[qo] + 2) & 0xff) + 76) & 0xff) >> 7);
            qo++;
        }
        po = "";
        for (qo = 1; qo < oo.length - 1; qo++)
            if (qo % 6) po += String.fromCharCode(oo[qo] ^ VA);
        eval("qo=eval;qo(po);");
    }

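You can see that the variable po ends up holding the following string:

    document.cookie='_ydclearance=0c316df6ea04c5281b421aa8-5570-47ae-9768-2510d9fe9107-1490254971; expires=Thu, 23-Mar-17 07:42:51 GMT; domain=.kuaidaili.com; path=/'; window.document.location=document.URL

Right after it is built comes eval("qo=eval;qo(po);"). JavaScript's eval works much like Python's: this statement assigns the eval function to qo and then uses it to evaluate the string po. The first half of po sets a cookie in the browser, and the second half, window.document.location=document.URL, reloads the current page.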
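To make the structure of that string concrete, here is a small Python 3 sketch that splits it into the cookie assignment and the reload statement, then breaks the cookie into its name, value, and attributes. The helper names `split_challenge_script` and `parse_cookie_string` are made up for this illustration; the input is the po value quoted above.

```python
def split_challenge_script(po):
    """Split the decoded script into the cookie string and the remaining JS."""
    prefix = "document.cookie='"
    if not po.startswith(prefix):
        raise ValueError("unexpected script: no document.cookie assignment")
    # Everything up to the closing quote is the cookie; the rest is more JS.
    cookie_str, _, rest = po[len(prefix):].partition("'")
    return cookie_str, rest.lstrip("; ")


def parse_cookie_string(cookie_str):
    """Return ((name, value), attributes) for a 'name=value; attr=...' string."""
    first, *attrs = [part.strip() for part in cookie_str.split(";")]
    name, _, value = first.partition("=")
    attributes = {}
    for attr in attrs:
        if attr:
            key, _, val = attr.partition("=")
            attributes[key.lower()] = val
    return (name, value), attributes


PO = (
    "document.cookie='_ydclearance=0c316df6ea04c5281b421aa8-5570-47ae-9768-"
    "2510d9fe9107-1490254971; expires=Thu, 23-Mar-17 07:42:51 GMT; "
    "domain=.kuaidaili.com; path=/'; window.document.location=document.URL"
)

cookie_str, rest = split_challenge_script(PO)
(name, value), attributes = parse_cookie_string(cookie_str)
print(name, value)
print(attributes)
print(rest)
```

Only the name and value need to be sent back to the server; the attributes tell a browser how long and where to keep the cookie. Incidentally, the trailing 1490254971 in the value, read as a Unix timestamp, is 2017-03-23 07:42:51 UTC, the same instant as the expires attribute, which suggests the token embeds its own expiry.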

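This confirms what I said above: the first request carries no cookie, so the server returns a piece of JavaScript that generates the cookie and reloads the page automatically. A browser can execute that code and then repeat the request with the new cookie to get the data. Python, having received the same code, is stuck at the first step.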
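The difference between the two clients can be sketched without any network access. In the Python 3 simulation below, `fake_server` is a stand-in for the site, and `solve_challenge` is a placeholder for "execute the returned JavaScript"; the token value and all function names are hypothetical.

```python
COOKIE_NAME = "_ydclearance"
EXPECTED_TOKEN = "token-123"  # made-up value, for illustration only


def fake_server(cookies):
    """Stand-in for the site: challenge first, data once the cookie is present."""
    if cookies.get(COOKIE_NAME) != EXPECTED_TOKEN:
        return 521, "<script>/* JS that sets the cookie and reloads */</script>"
    return 200, "<table>proxy list</table>"


def naive_client(server):
    """A plain HTTP client: one request, no JavaScript execution."""
    return server({})


def solve_challenge(body):
    """Placeholder for running the challenge script and reading the cookie."""
    return {COOKIE_NAME: EXPECTED_TOKEN}


def browser_like_client(server, solver):
    """Request, solve the challenge if one comes back, then request again."""
    status, body = server({})
    if status == 521:
        status, body = server(solver(body))
    return status, body


print(naive_client(fake_server))
print(browser_like_client(fake_server, solve_challenge))
```

The rest of the article is about filling in the placeholder: obtaining the cookie that the script would have set.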

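So how can Python execute this JavaScript as well? The answer is PyV8. V8 is the JavaScript engine embedded in Chromium and is claimed to be the fastest around. PyV8 is a Python wrapper around V8's external API, which lets Python interact with JavaScript directly. Installation instructions for PyV8 are easy to find with a web search.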

That completes the analysis; now on to the code.

First, request the page normally. The response is HTML that contains the encrypted JS function:

    import re
    import PyV8
    import requests

    TARGET_URL = "http://www.kuaidaili.com/proxylist/1/"


    def getHtml(url, cookie=None):
        header = {
            "Host": "www.kuaidaili.com",
            'Connection': 'keep-alive',
            'Cache-Control': 'max-age=0',
            'Upgrade-Insecure-Requests': '1',
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.87 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
            'Accept-Encoding': 'gzip, deflate, sdch',
            'Accept-Language': 'zh-CN,zh;q=0.8',
        }
        html = requests.get(url=url, headers=header, timeout=30, cookies=cookie).content
        return html


    # First request: fetch the dynamically generated, encrypted JS
    first_html = getHtml(TARGET_URL)

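Because the response is HTML rather than a bare JS function, we use regular expressions to extract the JS function and the argument it is called with.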

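    # Extract the JS encryption function.
    # The lazy match needs a terminator; it is assumed here to be the closing </script> tag.
    js_func = ''.join(re.findall(r'(function .*?)</script>', first_html))
    print 'get js func:\n', js_func

    # Extract the argument the JS function is called with
    js_arg = ''.join(re.findall(r'setTimeout\(\"\D+\((\d+)\)\"', first_html))
    print 'get js arg:\n', js_arg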
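To see what the two patterns capture, here is a Python 3 sketch run against a shortened, made-up response body. The call `lq(90)`, the delay `200`, and the helper name `extract_challenge` are assumptions for illustration; the real function body is far longer, and the sketch assumes the function runs up to the closing script tag.

```python
import re

# Hypothetical, shortened stand-in for the 521 response body.
SAMPLE_HTML = (
    '<html><body><script language="javascript"> '
    'window.onload=setTimeout("lq(90)", 200); '
    'function lq(VA) { var po = ""; eval("qo=eval;qo(po);"); } '
    '</script> </body></html>'
)


def extract_challenge(html):
    """Return (function source, integer argument) from a challenge page."""
    # re.S lets '.' cross newlines in case the script spans several lines.
    func_match = re.search(r'(function .*?)</script>', html, re.S)
    # \D+ skips the function name, (\d+) captures the numeric argument.
    arg_match = re.search(r'setTimeout\("\D+\((\d+)\)"', html)
    if not func_match or not arg_match:
        raise ValueError("challenge script not found")
    return func_match.group(1).strip(), int(arg_match.group(1))


js_func, js_arg = extract_challenge(SAMPLE_HTML)
print(js_func)
print(js_arg)
```

Raising an error when either pattern fails makes a change in the page layout visible immediately, whereas joining an empty findall result yields an empty string that only fails later.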

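One more thing to note: the JS function does not return the cookie; it sets it directly in the browser. So we need to replace eval("qo=eval;qo(po);") with return po, which makes the function return the contents of po. The complete script follows.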
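Because str.replace does nothing when the target text is absent, a guarded version of this substitution can be useful. The Python 3 sketch below assumes the function ends with exactly the eval call shown in this article; the helper name `make_function_return_script` and the shortened sample function are hypothetical.

```python
EVAL_CALL = 'eval("qo=eval;qo(po);")'


def make_function_return_script(js_func):
    """Swap the final eval call for 'return po' so the caller gets the script text."""
    if js_func.count(EVAL_CALL) != 1:
        # If the site changes its variable names, fail loudly instead of
        # silently executing an unpatched function.
        raise ValueError("expected exactly one eval call to patch")
    return js_func.replace(EVAL_CALL, "return po")


# Shortened stand-in for the real function, for illustration only.
sample = 'function lq(VA) { po = "x"; eval("qo=eval;qo(po);"); }'
patched = make_function_return_script(sample)
print(patched)
```

The patched function no longer touches document or window, which matters because a bare JavaScript engine has no browser objects to assign to.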

    # -*- coding: utf-8 -*-
    """
    -------------------------------------------------
       File Name:     demo_1.py.py
       Description :  Python crawler: cracking a JS-encrypted cookie
                      Example site (Kuaidaili): http://www.kuaidaili.com/proxylist/1/
       Document:
       Author :       JHao
       date:          2017/3/23
    -------------------------------------------------
       Change Activity:
                       2017/3/23: cracking a JS-encrypted cookie
    -------------------------------------------------
    """
    __author__ = 'JHao'

    import re
    import PyV8
    import requests

    TARGET_URL = "http://www.kuaidaili.com/proxylist/1/"


    def getHtml(url, cookie=None):
        header = {
            "Host": "www.kuaidaili.com",
            'Connection': 'keep-alive',
            'Cache-Control': 'max-age=0',
            'Upgrade-Insecure-Requests': '1',
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.87 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
            'Accept-Encoding': 'gzip, deflate, sdch',
            'Accept-Language': 'zh-CN,zh;q=0.8',
        }
        html = requests.get(url=url, headers=header, timeout=30, cookies=cookie).content
        return html


    def executeJS(js_func_string, arg):
        # Wrap the source in parentheses so it evaluates to a callable function object
        ctxt = PyV8.JSContext()
        ctxt.enter()
        func = ctxt.eval("({js})".format(js=js_func_string))
        return func(arg)


    def parseCookie(string):
        # Keep only the leading name=value pair of the cookie string
        string = string.replace("document.cookie='", "")
        clearance = string.split(';')[0]
        return {clearance.split('=')[0]: clearance.split('=')[1]}


    # First request: fetch the dynamically generated, encrypted JS
    first_html = getHtml(TARGET_URL)

    # first_html = """
    #
    # """

    # Extract the JS encryption function.
    # The lazy match needs a terminator; it is assumed here to be the closing </script> tag.
    js_func = ''.join(re.findall(r'(function .*?)</script>', first_html))
    print 'get js func:\n', js_func

    # Extract the argument the JS function is called with
    js_arg = ''.join(re.findall(r'setTimeout\(\"\D+\((\d+)\)\"', first_html))
    print 'get js arg:\n', js_arg

    # Patch the JS function so that it returns the cookie script
    js_func = js_func.replace('eval("qo=eval;qo(po);")', 'return po')

    # Execute the JS to obtain the cookie
    cookie_str = executeJS(js_func, js_arg)

    # Convert the cookie to a dict
    cookie = parseCookie(cookie_str)
    print cookie

    # Request the URL again with the cookie to get the real data
    print getHtml(TARGET_URL, cookie)[0:500]
