This article should have appeared two months ago, but I never had enough motivation to write it. Then I was browsing Zhihu recently and saw a similar question, and with a dull short holiday on my hands, I finally wrote it.

This article is divided into the following parts:

Basic knowledge: 3D world and quaternions

A Hello, World

Application Chapter - Advanced Example

Because the only headset I have played with is the company's Oculus DK2, this article is based on the DK2.

In fact, writing a VR program in JavaScript is quite simple:

Use Node.js to read sensor data on Oculus and provide a service using the WebSocket protocol.

Find a 3D engine such as Three.js to create a 3D world.

Read the value of the sensor and represent it in the 3D world.
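As a rough illustration of the data flowing between these three steps, the sketch below serializes one orientation sample on the Node.js side and parses it again on the browser side. The message shape (`type`, `quat`, `t`) and the function names are assumptions made for this example, not the format of any particular Oculus library. The actual transport would be whichever WebSocket server you pick.

```javascript
// Hypothetical wire format for one head-orientation sample.
// Nothing here comes from a real Oculus library; it only shows the idea.

// Server side (Node.js): turn a sensor sample into a WebSocket text frame.
function encodeOrientation(quat, timestampMs) {
  return JSON.stringify({
    type: 'orientation',
    quat: [quat.x, quat.y, quat.z, quat.w],
    t: timestampMs
  });
}

// Client side (browser): parse a frame, rejecting anything malformed.
function decodeOrientation(text) {
  let msg;
  try {
    msg = JSON.parse(text);
  } catch (err) {
    return null; // not JSON at all
  }
  const valid =
    msg !== null &&
    typeof msg === 'object' &&
    msg.type === 'orientation' &&
    Array.isArray(msg.quat) &&
    msg.quat.length === 4 &&
    msg.quat.every(Number.isFinite);
  if (!valid) return null;
  const [x, y, z, w] = msg.quat;
  return { x, y, z, w, t: msg.t };
}

// Round trip: what the server sends is what the client reads back.
const frame = encodeOrientation({ x: 0, y: 0.7071, z: 0, w: 0.7071 }, 1000);
console.log(decodeOrientation(frame));
```

Sending a quaternion rather than three angles keeps the client simple, since a 3D library can usually apply it to a camera directly.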

The same approach also works in hybrid applications; all you need is a Cardboard. Use Cordova to read the phone's sensor data, then use that data to update the state of the WebView. Apart from the phone getting seriously hot, there should be no other side effects.

In the 3D games we are familiar with, the position of a point is determined by three coordinates (x, y, z), as shown in the figure below:

These three coordinates only describe our position in this world; they cannot describe which way we are looking, such as up or down.

The Oculus DK2 uses an MPU (Motion Processing Unit) chip, the MPU6500, which is the second integrated 6-axis motion processing component (the first was the MPU6050). It can digitally output 6-axis or 9-axis fused motion data as a rotation matrix, a quaternion, or Euler angles.

This is where Euler angles and quaternions come in: we need them to represent the orientation of an object in the virtual world. (PS: forgive me for covering this only briefly.)

Euler angles are a set of angles used to describe the attitude of a rigid body. Euler showed that any orientation of a rigid body in three-dimensional Euclidean space can be reached by composing rotations about three axes. Normally, the three axes are orthogonal to each other.

The three corresponding angles are roll, pitch, and yaw.
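To make the link between the two representations concrete, here is a minimal sketch that converts yaw, pitch, and roll into a unit quaternion. It assumes the common aerospace convention (yaw about Z, then pitch about Y, then roll about X, angles in radians). A given sensor or 3D library may use a different axis order, so treat the convention and the function names as assumptions.

```javascript
// Convert yaw/pitch/roll (radians) to a unit quaternion.
// Assumed convention: yaw about Z, then pitch about Y, then roll about X.
function eulerToQuaternion(yaw, pitch, roll) {
  const cy = Math.cos(yaw / 2), sy = Math.sin(yaw / 2);
  const cp = Math.cos(pitch / 2), sp = Math.sin(pitch / 2);
  const cr = Math.cos(roll / 2), sr = Math.sin(roll / 2);
  return {
    w: cr * cp * cy + sr * sp * sy,
    x: sr * cp * cy - cr * sp * sy,
    y: cr * sp * cy + sr * cp * sy,
    z: cr * cp * sy - sr * sp * cy
  };
}

const degToRad = (deg) => (deg * Math.PI) / 180;

// Turning the head 90 degrees (pure yaw) gives a rotation about Z only.
const q = eulerToQuaternion(degToRad(90), 0, 0);
console.log(q);
```

For a pure yaw of 90 degrees, only `w` and `z` are non-zero, and both are close to 0.7071 (the cosine and sine of 45 degrees).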

The quaternion is:

Quaternions can be used to represent rotations in three-dimensional space. They are equivalent to the other two commonly used representations (three-dimensional orthogonal matrices and Euler angles). Using quaternions to represent rotations requires solving two problems: how to represent points in three-dimensional space with quaternions, and how to represent rotations in three-dimensional space with quaternions.

The MPU6050 I played with before looks roughly like this. If you have tinkered with quadcopters, you have probably used one too:
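Both problems have compact standard answers: a point v is treated as a quaternion with zero scalar part, and a rotation by a unit quaternion q maps it to q v q⁻¹. The sketch below implements that product in expanded form using two cross products. The helper names are made up for this example, and the axis passed to `quatFromAxisAngle` is assumed to be unit length.

```javascript
// Build a unit quaternion from a unit-length axis and an angle in radians.
function quatFromAxisAngle(axis, angle) {
  const s = Math.sin(angle / 2);
  return { x: axis.x * s, y: axis.y * s, z: axis.z * s, w: Math.cos(angle / 2) };
}

// Cross product of the x/y/z parts of two objects.
function cross(a, b) {
  return {
    x: a.y * b.z - a.z * b.y,
    y: a.z * b.x - a.x * b.z,
    z: a.x * b.y - a.y * b.x
  };
}

// Rotate vector v by unit quaternion q (equivalent to q * v * q^-1):
//   t  = 2 * (q.xyz x v)
//   v' = v + q.w * t + (q.xyz x t)
function rotateVector(q, v) {
  const c = cross(q, v);
  const t = { x: 2 * c.x, y: 2 * c.y, z: 2 * c.z };
  const u = cross(q, t);
  return {
    x: v.x + q.w * t.x + u.x,
    y: v.y + q.w * t.y + u.y,
    z: v.z + q.w * t.z + u.z
  };
}

// Rotating the X axis by 90 degrees about Z should land on the Y axis.
const quarterTurn = quatFromAxisAngle({ x: 0, y: 0, z: 1 }, Math.PI / 2);
console.log(rotateVector(quarterTurn, { x: 1, y: 0, z: 0 }));
```

The printed vector is (0, 1, 0) up to floating-point rounding.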

After all that copy-and-pasted material, you may still have no clear picture, so let's walk through a Hello, World example.

Going back to the three steps mentioned at the beginning, we need to do three things:

Find an Oculus extension for Node, although this job can now be left to WebVR.

Find a Web 3D library and its corresponding Oculus display plug-in.

Read sensor data and display it in the virtual world.

As shown in the figure below:

So I found a suitable Node library, Node-HMD, which can read the sensor data.

There is also Three.js with its Oculus Effect plug-in, which can render the following view:

In this way, our DK2 Control reads the sensor data, and you can play around in this virtual world.
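A minimal sketch of that last step, assuming the orientation arrives as a JSON message such as `{"quat": [x, y, z, w]}` (a made-up format, not something Node-HMD is known to emit). In Three.js, `camera.quaternion` is a `Quaternion` with a `set(x, y, z, w)` method; the stand-in object below mimics only that method so the snippet runs without the library or a headset.

```javascript
// Apply one orientation message to a camera-like object.
// The sample is normalized first so a slightly noisy reading is still a pure rotation.
function applyPose(camera, message) {
  const [x, y, z, w] = JSON.parse(message).quat;
  const len = Math.hypot(x, y, z, w);
  if (len === 0) return false; // ignore degenerate samples
  camera.quaternion.set(x / len, y / len, z / len, w / len);
  return true;
}

// Stand-in for a Three.js camera: only quaternion.set(x, y, z, w) is mimicked.
const fakeCamera = {
  quaternion: {
    x: 0, y: 0, z: 0, w: 1,
    set(x, y, z, w) {
      this.x = x; this.y = y; this.z = z; this.w = w;
      return this;
    }
  }
};

applyPose(fakeCamera, '{"quat":[0,2,0,0]}');
console.log(fakeCamera.quaternion.y); // 1
```

In a browser, the same function could be wired to a socket with something like `socket.onmessage = (e) => applyPose(camera, e.data)`, with the render loop then drawing the scene from the updated camera.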

A more detailed introduction can be found at: //m.sbmmt.com/

The example above is still too simple. Let's look at a more advanced application, another Hackday idea we built two months ago: a kind of "Mars Rover".

Imagine you want to see Mars but don't have the money to go. Instead, you could rent a robot like this and roam around Mars through it.

So first we need real-time video communication; here we use WebRTC:

With WebRTC we get real-time communication in the desktop browser, and with Three.js we can turn the video into an approximate 3D view. The video can be captured through the browser on a mobile phone, or by a corresponding web application written for the phone.

There is an online Demo here: //m.sbmmt.com/

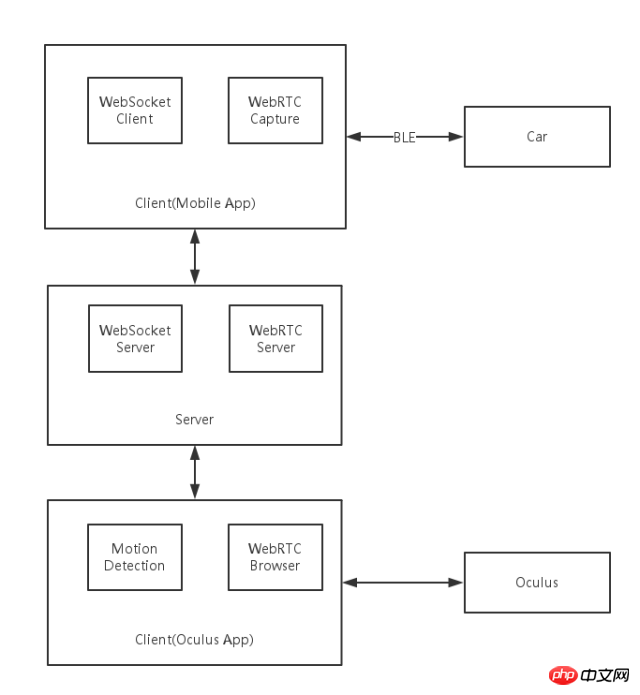

The architecture is roughly as shown below:

In this way we solve the problem of real-time video, and then we also need to control the hardware:

Use the WebSocket protocol to provide the Oculus's up, down, left, and right movement data.

Read the sensor data on the mobile phone and transmit the data to the car through BLE.

The car can make corresponding movements through instructions.
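The control chain above could be sketched as follows. Everything in it is hypothetical: the command names, the 15-degree dead zone, the sign conventions (positive yaw means looking left, positive pitch means looking up), and the one-byte encoding are assumptions for illustration, not the protocol our car actually used.

```javascript
// Hypothetical mapping from head orientation (degrees) to drive commands.
const DEAD_ZONE_DEG = 15; // assumed threshold so small head movements are ignored

// Assumed one-byte codes that could be written to a BLE characteristic.
const COMMAND_BYTES = { STOP: 0, FORWARD: 1, BACKWARD: 2, LEFT: 3, RIGHT: 4 };

function headToCommand(yawDeg, pitchDeg) {
  // The larger of the two movements decides which axis wins.
  if (Math.abs(yawDeg) >= Math.abs(pitchDeg)) {
    if (yawDeg > DEAD_ZONE_DEG) return 'LEFT';
    if (yawDeg < -DEAD_ZONE_DEG) return 'RIGHT';
  } else {
    if (pitchDeg > DEAD_ZONE_DEG) return 'FORWARD';
    if (pitchDeg < -DEAD_ZONE_DEG) return 'BACKWARD';
  }
  return 'STOP';
}

// Pack a command into the payload that would go out over BLE.
function commandToPayload(command) {
  return Uint8Array.of(COMMAND_BYTES[command]);
}

const command = headToCommand(30, 5);
console.log(command, commandToPayload(command));
```

A dead zone matters here because a head is never perfectly still; without one, the car would twitch on every small sensor change.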

For this part, see my previous article "How do I Hack a Robot?".

Compared with C++, JavaScript is better suited to building prototypes: fast, direct, and effective. After all, compiling C++ takes time. The runtime behavior was as expected: the computer's fan spun like crazy. I don't know whether that is specific to the Mac, but I think this performance problem has always been there.
