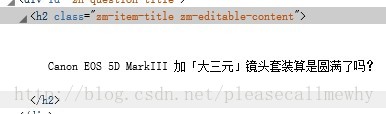

Earlier, we crawled the question titles from this page:

http://www.zhihu.com/explore/recommendations

But this page obviously does not contain the answers.

A complete question page looks like this:

http://www.zhihu.com/question/22355264

Looking at it closely, our wrapper class needs further work. At the very least, it needs a `questionDescription` field to store the question description:

```java
import java.util.ArrayList;

public class Zhihu {
    public String question;            // question title
    public String questionDescription; // question description
    public String zhihuUrl;            // link to the page
    public ArrayList<String> answers;  // list holding all answers

    // The constructor initializes the fields
    public Zhihu() {
        question = "";
        questionDescription = "";
        zhihuUrl = "";
        answers = new ArrayList<String>();
    }

    @Override
    public String toString() {
        return "Question: " + question + "\n" + "Description: " + questionDescription + "\n"
                + "Link: " + zhihuUrl + "\nAnswers: " + answers + "\n";
    }
}
```

Next, we add a parameter to the Zhihu constructor to set the url. Once the url is known, the question's description and answers can be fetched.
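The constructor that takes the url is not shown in the listing above. A minimal sketch of what it might look like follows. The class name `ZhihuWithUrl` is hypothetical and is used only so the example stands alone, and the constructor here merely stores the url; fetching and parsing are assumed to be added later.

```java
import java.util.ArrayList;

// Hypothetical stand-in for the Zhihu class, shown only to illustrate
// a constructor that receives the url as a parameter.
class ZhihuWithUrl {
    public String question;
    public String questionDescription;
    public String zhihuUrl;
    public ArrayList<String> answers;

    // Assumption: for now the constructor only records the url.
    public ZhihuWithUrl(String url) {
        question = "";
        questionDescription = "";
        zhihuUrl = url;
        answers = new ArrayList<String>();
    }

    public static void main(String[] args) {
        ZhihuWithUrl z = new ZhihuWithUrl("http://www.zhihu.com/question/22355264");
        System.out.println(z.zhihuUrl);
    }
}
```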

Let’s change the Spider method that builds Zhihu objects so that it only extracts the url:

```java
// Requires java.util.ArrayList, java.util.regex.Matcher and java.util.regex.Pattern
static ArrayList<Zhihu> GetZhihu(String content) {
    // Create an ArrayList to hold the results
    ArrayList<Zhihu> results = new ArrayList<Zhihu>();
    // Matches the url, i.e. the link to the question
    Pattern urlPattern = Pattern.compile("<h2>.+?question_link.+?href=\"(.+?)\".+?</h2>");
    Matcher urlMatcher = urlPattern.matcher(content);
    // Whether a match was found
    boolean isFind = urlMatcher.find();
    while (isFind) {
        // Create a Zhihu object to hold the scraped information
        Zhihu zhihuTemp = new Zhihu(urlMatcher.group(1));
        // Add the successfully matched result
        results.add(zhihuTemp);
        // Look for the next match
        isFind = urlMatcher.find();
    }
    return results;
}
```

Next, in the Zhihu constructor, we fetch all the detailed data through the url.

We need to process the url first, because some links point to an answer, with a url like:

http://www.zhihu.com/question/22355264/answer/21102139

Others point to the question itself, with a url like:

http://www.zhihu.com/question/22355264

We obviously need the second type, so we use a regular expression to trim the first type of link down to the second. Just write a method in Zhihu for this.

```java
// Process the url
boolean getRealUrl(String url) {
    // Turn http://www.zhihu.com/question/22355264/answer/21102139
    // into http://www.zhihu.com/question/22355264;
    // a link that is already in the second form stays unchanged.
    // Matching the numeric id (rather than "question/(.*?)/") also
    // accepts question links that have no trailing slash.
    Pattern pattern = Pattern.compile("question/(\\d+)");
    Matcher matcher = pattern.matcher(url);
    if (matcher.find()) {
        zhihuUrl = "http://www.zhihu.com/question/" + matcher.group(1);
    } else {
        return false;
    }
    return true;
}
```

The next step is to obtain each part.
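Before moving on, the url handling can be checked in isolation. The sketch below is a standalone variant: the class and method names are hypothetical, and it assumes that question ids consist only of digits, as in the two example links. It matches the numeric id directly, so both link forms yield the question url.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class QuestionUrlDemo {
    // Assumption: question ids are purely numeric, as in the example links.
    private static final Pattern QUESTION_ID = Pattern.compile("question/(\\d+)");

    // Returns the question url, or null when the link contains no question id.
    static String toQuestionUrl(String url) {
        Matcher matcher = QUESTION_ID.matcher(url);
        if (matcher.find()) {
            return "http://www.zhihu.com/question/" + matcher.group(1);
        }
        return null;
    }

    public static void main(String[] args) {
        // An answer link and a question link should give the same result.
        System.out.println(toQuestionUrl("http://www.zhihu.com/question/22355264/answer/21102139"));
        System.out.println(toQuestionUrl("http://www.zhihu.com/question/22355264"));
        // A link without a question id gives null.
        System.out.println(toQuestionUrl("http://www.zhihu.com/explore/recommendations"));
    }
}
```

Returning the url instead of a boolean is only a convenience for testing; inside Zhihu the same pattern can set `zhihuUrl` directly.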

Let’s look at the title first:

Just match on the class. The regular expression can be written as: `zm-editable-content">(.+?)<`

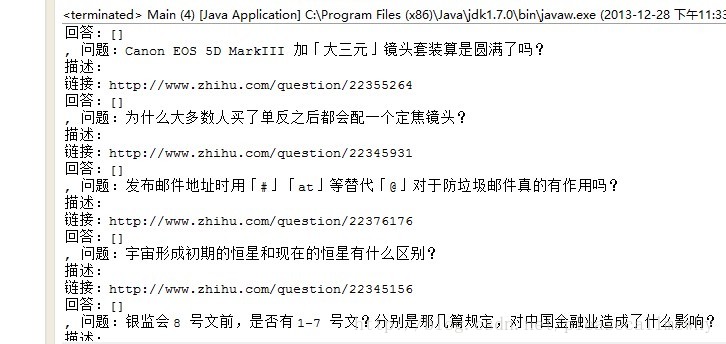

Run it and see the result:

Oh, that’s good.

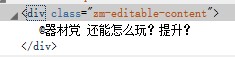

Next, grab the question description:

The same principle applies: match on the class, because it should uniquely identify this element.

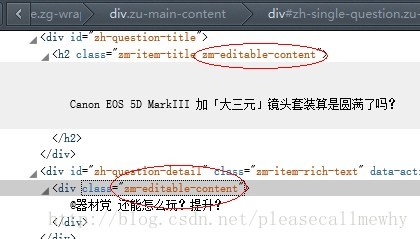

To verify this, right-click and view the page source, then press Ctrl+F to check whether the same string appears anywhere else in the page.

After checking, there really is a problem:

The class in front of the title content and the class in front of the description content are the same.
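The problem can be reproduced on a small piece of markup. The sketch below is only an illustration: the sample HTML is made up and heavily simplified, and the class name `SharedClassDemo` is hypothetical. It runs the class-based expression over the whole string and collects every match.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class SharedClassDemo {
    // Hypothetical, simplified markup; the real page is more complex.
    static final String SAMPLE_HTML =
            "<div id=\"zh-question-title\"><h2 class=\"zm-editable-content\">Sample title</h2></div>"
            + "<div id=\"zh-question-detail\"><div class=\"zm-editable-content\">Sample description</div></div>";

    // Collects everything the class-based expression matches.
    static List<String> matchByClass(String content) {
        List<String> found = new ArrayList<String>();
        Matcher matcher = Pattern.compile("zm-editable-content\">(.+?)<").matcher(content);
        while (matcher.find()) {
            found.add(matcher.group(1));
        }
        return found;
    }

    public static void main(String[] args) {
        System.out.println(matchByClass(SAMPLE_HTML));
    }
}
```

On this sample the expression matches both the title and the description, so the class alone cannot tell the two apart, and a single `find()` always returns the title.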

The only option is to modify the regular expressions and crawl again:

```java
// Match the title
pattern = Pattern.compile("zh-question-title.+?<h2.+?>(.+?)</h2>");
matcher = pattern.matcher(content);
if (matcher.find()) {
    question = matcher.group(1);
}

// Match the description
pattern = Pattern.compile("zh-question-detail.+?<div.+?>(.*?)</div>");
matcher = pattern.matcher(content);
if (matcher.find()) {
    questionDescription = matcher.group(1);
}
```

Finally, a loop captures the answers:

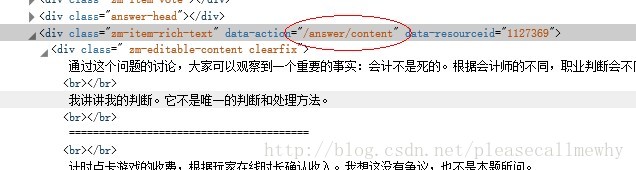

A first, tentative regular expression: `/answer/content.+?`
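The expression above is only the start of a pattern. As a sketch of how the loop might look, the example below completes it with a hypothetical tail modeled on the description pattern and runs it on made-up markup. Neither the tail `<div.+?>(.*?)</div>` nor the sample HTML comes from the original code, and the class name `AnswerLoopDemo` is an assumption as well.

```java
import java.util.ArrayList;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class AnswerLoopDemo {
    // Hypothetical, simplified markup standing in for a question page.
    static final String SAMPLE_HTML =
            "<div data-action=\"/answer/content\"><div class=\"zm-editable-content\">First answer</div></div>"
            + "<div data-action=\"/answer/content\"><div class=\"zm-editable-content\">Second answer</div></div>";

    // Assumption: the pattern tail is modeled on the description pattern.
    static ArrayList<String> extractAnswers(String content) {
        ArrayList<String> answers = new ArrayList<String>();
        Pattern pattern = Pattern.compile("/answer/content.+?<div.+?>(.*?)</div>");
        Matcher matcher = pattern.matcher(content);
        // Keep searching until no further answer is found.
        while (matcher.find()) {
            answers.add(matcher.group(1));
        }
        return answers;
    }

    public static void main(String[] args) {
        System.out.println(extractAnswers(SAMPLE_HTML));
    }
}
```

Unlike the title and description, which use a single `if (matcher.find())`, the answers need a `while` loop because one page can contain any number of them.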

After changing the code, we find that the program runs noticeably slower, because it now has to visit every question page and capture its content.

Take the editor recommendations as an example: if there are 20 questions, the program has to fetch 20 pages, so it slows down accordingly.

Try it out, it seems to work well:

OK, that’s it for now. Next time we will continue with some refinements, such as multi-threading and writing the results to local files with IO streams.
