Foreword: Random forest is a highly flexible machine learning method with applications ranging from marketing to medical insurance. It can be used to model customer acquisition and retention in marketing, or to predict a patient's disease risk and susceptibility.

Random forests can be used for classification and regression problems, can handle a large number of features, and can help estimate the importance of variables used in modeling data.

This article is about how to build a random forest model using Python.

1 What is Random Forest

Random forests can be used for almost any kind of prediction problem, including nonlinear ones. It is a relatively new machine learning strategy (born at Bell Labs in the 1990s) that can be applied in almost any field. It belongs to the ensemble learning family of machine learning methods.

1.1 Ensemble Learning

Ensemble learning combines multiple models to solve a single prediction problem. The idea is to train several classifiers, each of which learns and makes predictions independently. Those predictions are then combined into a single prediction that is typically as good as or better than that of any individual classifier.

Random forest is a branch of ensemble learning because it relies on combining many decision trees. For more on ensemble learning in Python, see the Scikit-Learn documentation.
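As a rough illustration of the idea, the sketch below trains three unrelated classifiers and combines their predictions by majority vote. The choice of models, the iris data set, and the split parameters are assumptions made for this example only; they are not part of the original article.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Three different models (an arbitrary choice for illustration).
models = [
    LogisticRegression(max_iter=1000),
    KNeighborsClassifier(n_neighbors=5),
    DecisionTreeClassifier(random_state=0),
]

# Each model learns and predicts independently: one row of predictions per model.
all_preds = np.array([m.fit(X_train, y_train).predict(X_test) for m in models])

# Combine by majority vote: for each test row, keep the most common label.
ensemble_pred = np.array(
    [np.bincount(column, minlength=3).argmax() for column in all_preds.T]
)

for model, preds in zip(models, all_preds):
    print(type(model).__name__, "accuracy:", (preds == y_test).mean())
print("majority vote accuracy:", (ensemble_pred == y_test).mean())
```

scikit-learn also ships a `VotingClassifier` that wraps this pattern; the manual version is used here only to make the combining step visible.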

1.2 Random Decision Tree

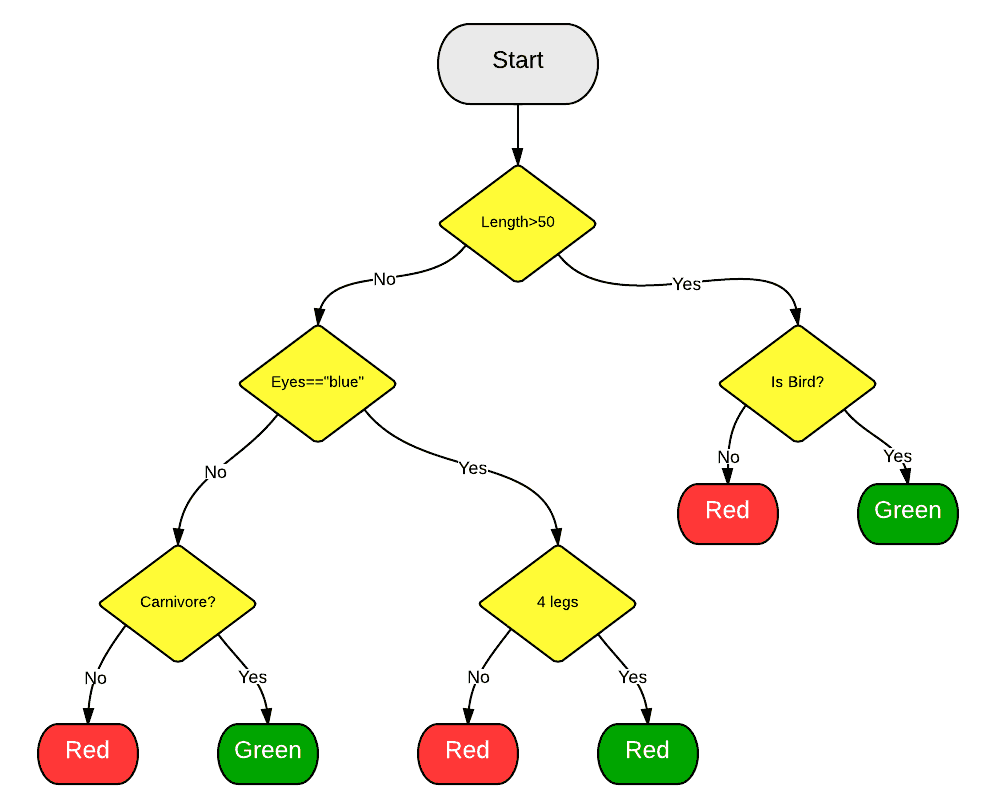

We know that a random forest aggregates other models, but which models exactly? As the name suggests, a random forest aggregates classification (or regression) trees. A decision tree consists of a series of decisions and can be used to classify the observations in a data set.
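To make "a series of decisions" concrete, here is a minimal sketch that fits one shallow tree with scikit-learn and prints its rules as text. The iris data set and `max_depth=2` are assumptions chosen to keep the printed tree small.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()

# A deliberately shallow tree so the printed rules stay readable.
tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(iris.data, iris.target)

# Each indented line is one decision; leaves end in "class: <label>".
rules = export_text(tree, feature_names=list(iris.feature_names))
print(rules)
```

An observation is classified by starting at the top rule and following the branch whose condition it satisfies until it reaches a leaf.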

1.3 Random Forest

The random forest algorithm automatically builds a collection of random decision trees. Because the trees are generated randomly, most of them (perhaps even 99.9%) are not meaningful for your classification or regression problem.

1.4 Vote

So what is the benefit of generating thousands, even tens of thousands, of bad models? Well, there really isn't one. What is useful is that a handful of very good decision trees are generated along with them.

When you make a prediction, a new observation travels down each decision tree from top to bottom and is assigned a predicted value or label. Once every tree in the forest has produced its prediction, the results are tallied: the forest returns the majority vote of the trees for classification, or the average of their predictions for regression.

Simply put, the 99.9% of trees that are irrelevant make predictions that are scattered all over the place and cancel each other out. The predictions of the few good trees rise above that noise and yield a good overall prediction.
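The voting step can be inspected directly in scikit-learn, where the fitted trees are exposed through the `estimators_` attribute. The sketch below is an illustration under assumed settings (iris data, 25 trees, one arbitrarily chosen row). Note that scikit-learn's `RandomForestClassifier` averages the per-tree class probabilities rather than counting hard votes, so both are shown.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
forest = RandomForestClassifier(n_estimators=25, random_state=0).fit(X, y)

sample = X[[75]]  # one observation, kept two-dimensional

# Every tree predicts on its own; the result is an index into forest.classes_.
tree_votes = np.array([int(t.predict(sample)[0]) for t in forest.estimators_])
vote_counts = np.bincount(tree_votes, minlength=len(forest.classes_))
print("votes per class:", vote_counts)
print("hard majority vote:", forest.classes_[vote_counts.argmax()])

# scikit-learn's forest averages the trees' class probabilities instead.
avg_proba = np.mean(
    [t.predict_proba(sample)[0] for t in forest.estimators_], axis=0
)
print("averaged probabilities:", avg_proba)
print("forest.predict:", forest.predict(sample)[0])
```

With fully grown trees the two schemes usually agree, because each tree's probabilities are close to 0 or 1.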

2 Why use it?

Random forest is the Leatherman (a multi-tool folding knife) of machine learning methods. You can throw almost anything at it. It does a particularly good job of estimating inferred transformations, so it does not need as much parameter tuning as an SVM does (which is great for anyone pressed for time).

2.1 An example transformation

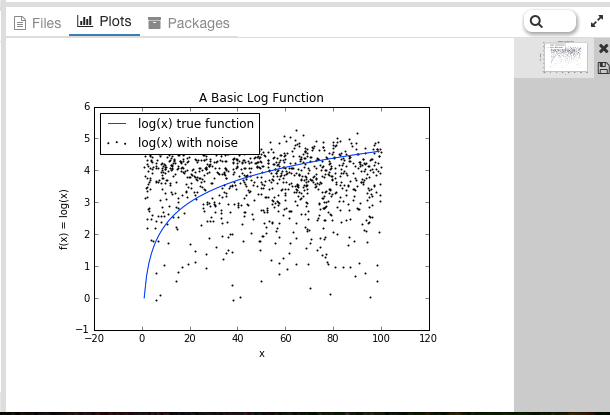

A random forest can learn without carefully hand-crafted data transformations. Take the function f(x) = log(x) as an example.

We will use Python to generate and analyze the data in Rodeo, Yhat's own interactive environment. Rodeo installers are available for Mac, Windows and Linux.

First, let’s generate the data and add noise.

    import numpy as np
    import pylab as pl

    # 1,000 points drawn uniformly from [1, 100], with Gaussian noise added to log(x)
    x = np.random.uniform(1, 100, 1000)
    y = np.log(x) + np.random.normal(0, .3, 1000)

    # Scatter the noisy samples and overlay the true function
    pl.scatter(x, y, s=1, label="log(x) with noise")
    pl.plot(np.arange(1, 100), np.log(np.arange(1, 100)), c="b", label="log(x) true function")
    pl.xlabel("x")
    pl.ylabel("f(x) = log(x)")
    pl.legend(loc="best")
    pl.title("A Basic Log Function")
    pl.show()

Get the following results:

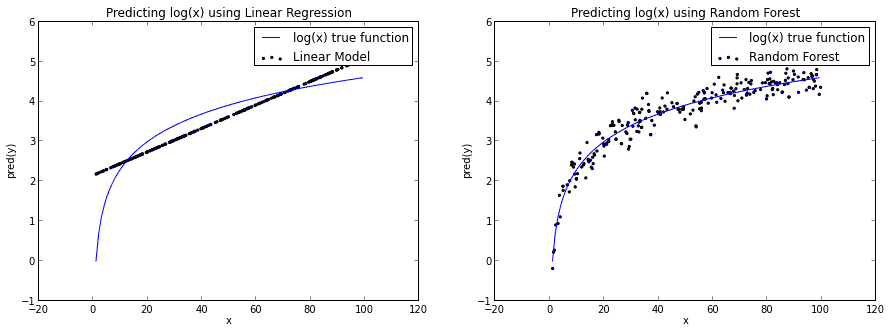

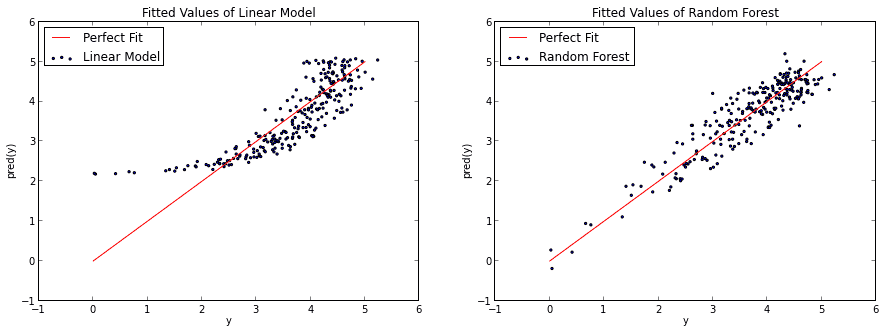

If we build a basic linear model that uses x to predict y, we get a straight line that roughly bisects the log(x) curve. A random forest, by contrast, approximates the log(x) curve much more closely and looks more like the actual function.

Of course, you could argue that the random forest overfits the log(x) function a little. Either way, this illustrates that random forests are not limited to linear problems.
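The comparison can be sketched with scikit-learn by fitting both models to the same noisy data and measuring how far each lands from the true log(x) curve. The seed, the number of trees, and `min_samples_leaf=20` (used here to smooth the forest's fit a little) are assumptions for this example, not settings taken from the article.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

rng = np.random.default_rng(0)
x = rng.uniform(1, 100, 1000)
y = np.log(x) + rng.normal(0, 0.3, 1000)
X = x.reshape(-1, 1)  # scikit-learn expects a 2-D feature matrix

linear = LinearRegression().fit(X, y)
forest = RandomForestRegressor(
    n_estimators=100, min_samples_leaf=20, random_state=0
).fit(X, y)

# Compare both fits against the noise-free function on a regular grid.
grid = np.arange(1, 100).reshape(-1, 1)
truth = np.log(grid.ravel())
mse_linear = mean_squared_error(truth, linear.predict(grid))
mse_forest = mean_squared_error(truth, forest.predict(grid))

print("linear model MSE vs. log(x):", mse_linear)
print("random forest MSE vs. log(x):", mse_forest)
```

Lowering `min_samples_leaf` lets the forest chase the noise more closely, which is the mild overfitting mentioned above.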

3 How to use

3.1 Feature selection

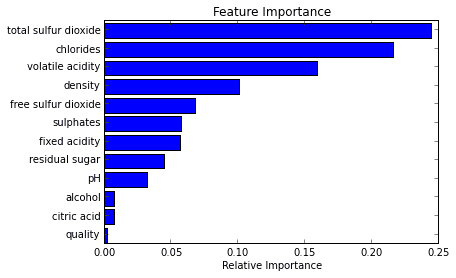

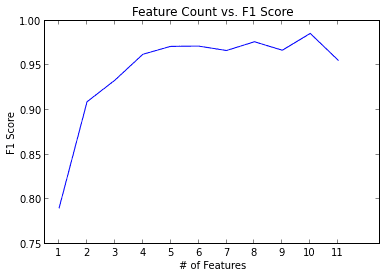

One of the best use cases for random forests is feature selection. A by-product of trying out many decision tree variations is that you can examine which variables work best or worst in each tree.

When some trees use a variable and others do not, you can compare the information lost or gained. Good random forest implementations do this for you, so all you need to do is look at the relevant method or parameter.

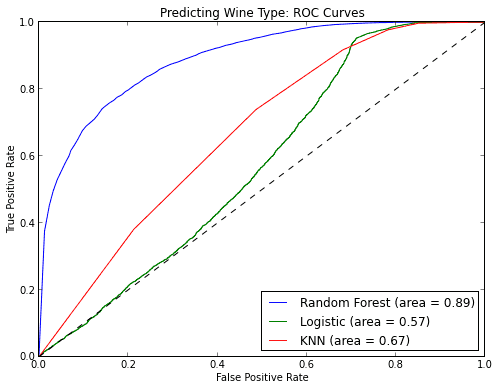

In the following example, we try to figure out which variables matter most for distinguishing red wine from white wine.
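The article's red/white wine data is not included here, so the sketch below uses scikit-learn's bundled `load_wine` data set as a stand-in. That is a different data set (three cultivars rather than red versus white), and the number of trees is an assumption. What carries over is the mechanism: after fitting, the `feature_importances_` attribute ranks the variables.

```python
import pandas as pd
from sklearn.datasets import load_wine
from sklearn.ensemble import RandomForestClassifier

# Stand-in data: NOT the red/white wine data used in the article.
wine = load_wine()
forest = RandomForestClassifier(n_estimators=200, random_state=0)
forest.fit(wine.data, wine.target)

# Impurity-based importances, one per feature, normalized to sum to 1.
importances = pd.Series(
    forest.feature_importances_, index=wine.feature_names
).sort_values(ascending=False)

print(importances.head(5))
```

Features near the bottom of this ranking are candidates for removal when you want a smaller model.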

3.2 Classification

Random forests are also very good at classification. They can predict among multiple possible target classes, and with calibration they can also output probabilities. One thing to watch out for is overfitting.

Random forests can overfit, especially on relatively small data sets. Be suspicious when your model makes predictions on the test set that look too good. One way to reduce overfitting is to use only relevant features in the model, for example by applying the feature selection described earlier.
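One hedged way to check for overfitting is to compare accuracy on the training rows with accuracy on rows the model has not seen. The sketch below uses a held-out test set plus scikit-learn's out-of-bag estimate (`oob_score=True`); the data set, split size, and seeds are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# oob_score=True scores each training row using only the trees
# whose bootstrap sample did not contain that row.
clf = RandomForestClassifier(n_estimators=200, oob_score=True, random_state=0)
clf.fit(X_train, y_train)

train_acc = clf.score(X_train, y_train)
test_acc = clf.score(X_test, y_test)
oob_acc = clf.oob_score_

print("training accuracy:   ", train_acc)
print("out-of-bag accuracy: ", oob_acc)
print("held-out accuracy:   ", test_acc)
```

A training accuracy far above the out-of-bag and held-out figures is a sign that the forest is memorizing the training data.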

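3.3 Regression

Random forests can also be used for regression problems.

I have found that, unlike other methods, random forests are very good at handling categorical variables, or a mix of categorical and continuous variables.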
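A minimal regression sketch with one categorical and one continuous feature follows. The data is synthetic and every column name (`region`, `size`, `price`) is hypothetical. scikit-learn's forests expect numeric input, so the categorical column is integer-encoded first with `OrdinalEncoder`, which is one reasonable choice rather than the only one.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import OrdinalEncoder

# Synthetic, hypothetical data: price depends on a category and a number.
rng = np.random.default_rng(0)
n = 500
df = pd.DataFrame({
    "region": rng.choice(["north", "south", "west"], size=n),  # categorical
    "size": rng.uniform(20, 200, size=n),                      # continuous
})
offset = df["region"].map({"north": 0.0, "south": 50.0, "west": 100.0})
df["price"] = offset + 2.0 * df["size"] + rng.normal(0, 5, size=n)

# Encode the categorical column as integers; pass the numeric one through.
encode = ColumnTransformer(
    [("cat", OrdinalEncoder(), ["region"])],
    remainder="passthrough",
)
model = make_pipeline(
    encode, RandomForestRegressor(n_estimators=100, random_state=0)
)

train, test = df.iloc[:400], df.iloc[400:]
model.fit(train[["region", "size"]], train["price"])
r2 = model.score(test[["region", "size"]], test["price"])
print("R^2 on held-out rows:", r2)
```

Because trees split on thresholds, the arbitrary integer order produced by the encoder matters much less here than it would for a linear model.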

4 A simple Python example

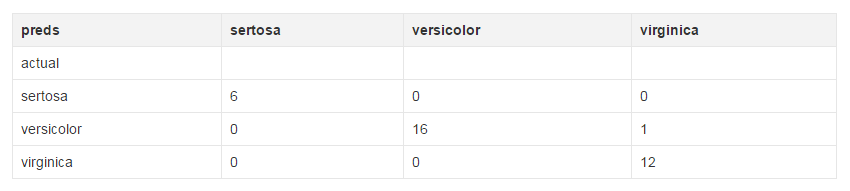

    from sklearn.datasets import load_iris
    from sklearn.ensemble import RandomForestClassifier
    import pandas as pd
    import numpy as np

    iris = load_iris()
    df = pd.DataFrame(iris.data, columns=iris.feature_names)
    # Randomly flag roughly 75% of the rows as training data
    df['is_train'] = np.random.uniform(0, 1, len(df)) <= .75
    df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
    df.head()

    train, test = df[df['is_train']], df[~df['is_train']]
    features = df.columns[:4]

    clf = RandomForestClassifier(n_jobs=2)
    # Encode the species names as integer codes for training
    y, _ = pd.factorize(train['species'])
    clf.fit(train[features], y)

    # Map predicted codes back to species names and compare with the actual labels
    preds = iris.target_names[clf.predict(test[features])]
    pd.crosstab(test['species'], preds, rownames=['actual'], colnames=['preds'])

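The output is a cross-tabulation of actual versus predicted species. Because the training rows are chosen at random, the exact counts will differ on every run.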
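If you want the same table on every run, one option is to fix the random seeds. The sketch below is a variation on the example, not the original code: it swaps the random `is_train` flag for scikit-learn's `train_test_split`, and the helper name `run` and the seed value are hypothetical.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def run(seed):
    """Train and evaluate once; the seed fixes both the split and the forest."""
    iris = load_iris()
    X_train, X_test, y_train, y_test = train_test_split(
        iris.data, iris.target, test_size=0.25, random_state=seed
    )
    clf = RandomForestClassifier(n_estimators=100, random_state=seed)
    clf.fit(X_train, y_train)
    preds = clf.predict(X_test)
    return pd.crosstab(
        iris.target_names[y_test],
        iris.target_names[preds],
        rownames=["actual"],
        colnames=["preds"],
    )


first = run(42)
second = run(42)
print(first)
print("identical across runs:", first.equals(second))
```

Changing the seed gives a different split and therefore, possibly, a slightly different table.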

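5 Conclusion

Random forests are quite easy to get started with. As with any other modeling method, though, watch out for overfitting. If you are interested in using random forests in R, take a look at the randomForest package.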