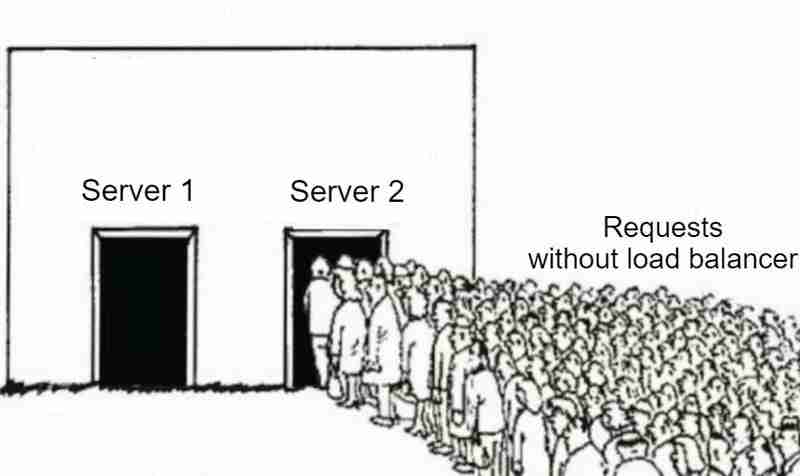

Load balancers are crucial in modern software development. If you've ever wondered how requests are distributed across multiple servers, or why certain websites feel faster even during heavy traffic, the answer often lies in efficient load balancing.

In this post, we'll build a simple application load balancer using the Round Robin algorithm in Go. The aim is to understand how a load balancer works under the hood, step by step.

A load balancer is a system that distributes incoming network traffic across multiple servers. It ensures that no single server bears too much load, preventing bottlenecks and improving the overall user experience. Load balancing also ensures that if one server fails, traffic can be automatically rerouted to another available server, reducing the impact of the failure and increasing availability.

There are different algorithms and strategies to distribute the traffic, such as round robin, weighted round robin, least connections, and IP hashing.

In this post, we'll focus on implementing a Round Robin load balancer.

A round robin algorithm sends each incoming request to the next available server in a circular manner. If server A handles the first request, server B will handle the second, and server C will handle the third. Once all servers have received a request, it starts again from server A.
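To make the circular order concrete, here is a minimal sketch of the idea on its own, before any networking is involved. The `nextIndex` function and the server names A, B, and C are assumptions made up for illustration; they are not part of the load balancer we build below.

```go
package main

import "fmt"

// nextIndex returns the position of the server that should handle request
// number n (counting from 0) when there are count servers.
// The modulo operator wraps the position back to 0 after the last server.
func nextIndex(n, count int) int {
	return n % count
}

func main() {
	servers := []string{"A", "B", "C"} // hypothetical server names

	for request := 0; request < 5; request++ {
		idx := nextIndex(request, len(servers))
		fmt.Printf("request %d -> server %s\n", request+1, servers[idx])
	}
}
```

With three servers, requests 1 to 3 go to A, B, and C, and request 4 wraps around to A again.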

Now, let's jump into the code and build our load balancer!

```go
type LoadBalancer struct {
	Current int
	Mutex   sync.Mutex
}
```

We first define a simple LoadBalancer struct with a Current field that keeps track of which server should handle the next request. The Mutex ensures that our code is safe to use concurrently.

Each server we load balance is defined by the Server struct:

```go
type Server struct {
	URL       *url.URL
	IsHealthy bool
	Mutex     sync.Mutex
}
```

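Here, each server has a URL and an IsHealthy flag, which indicates whether the server is available to handle requests. Each server also has its own Mutex, which guards the IsHealthy flag because it is read and written from different goroutines.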

The heart of our load balancer is the round robin algorithm. Here's how it works:

```go
func (lb *LoadBalancer) getNextServer(servers []*Server) *Server {
	lb.Mutex.Lock()
	defer lb.Mutex.Unlock()

	// Try each server at most once, starting from the current position.
	for i := 0; i < len(servers); i++ {
		idx := lb.Current % len(servers)
		nextServer := servers[idx]
		lb.Current++

		// Read the health flag under the server's own lock.
		nextServer.Mutex.Lock()
		isHealthy := nextServer.IsHealthy
		nextServer.Mutex.Unlock()

		if isHealthy {
			return nextServer
		}
	}

	// No healthy server was found.
	return nil
}
```
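To see how unhealthy servers get skipped, the function can be run against three servers where the middle one is marked as down. This is only a sketch: the `newServer` helper is a hypothetical convenience that is not part of the post's code, and the health flags are set by hand instead of by real health checks.

```go
package main

import (
	"fmt"
	"net/url"
	"sync"
)

// Server and LoadBalancer mirror the structs defined in the post.
type Server struct {
	URL       *url.URL
	IsHealthy bool
	Mutex     sync.Mutex
}

type LoadBalancer struct {
	Current int
	Mutex   sync.Mutex
}

// getNextServer is the round robin selection from the post.
func (lb *LoadBalancer) getNextServer(servers []*Server) *Server {
	lb.Mutex.Lock()
	defer lb.Mutex.Unlock()

	for i := 0; i < len(servers); i++ {
		idx := lb.Current % len(servers)
		nextServer := servers[idx]
		lb.Current++

		nextServer.Mutex.Lock()
		isHealthy := nextServer.IsHealthy
		nextServer.Mutex.Unlock()

		if isHealthy {
			return nextServer
		}
	}
	return nil
}

// newServer is a hypothetical helper that builds a Server with a fixed
// health flag, so the example does not need real backends.
func newServer(rawURL string, healthy bool) *Server {
	u, err := url.Parse(rawURL)
	if err != nil {
		panic(err)
	}
	return &Server{URL: u, IsHealthy: healthy}
}

func main() {
	servers := []*Server{
		newServer("http://localhost:5001", true),
		newServer("http://localhost:5002", false), // pretend this one is down
		newServer("http://localhost:5003", true),
	}

	lb := &LoadBalancer{}
	for i := 0; i < 4; i++ {
		fmt.Println(lb.getNextServer(servers).URL)
	}
}
```

The four calls alternate between the servers on ports 5001 and 5003, and the server on port 5002 is never selected. If every server were unhealthy, the loop would try each one once and return nil.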

Our configuration is stored in a config.json file, which contains the port, the server URLs, and the health check interval (more on health checks below).

```go
type Config struct {
	Port                string   `json:"port"`
	HealthCheckInterval string   `json:"healthCheckInterval"`
	Servers             []string `json:"servers"`
}
```

The configuration file might look like this:

```json
{
  "port": ":8080",
  "healthCheckInterval": "2s",
  "servers": [
    "http://localhost:5001",
    "http://localhost:5002",
    "http://localhost:5003",
    "http://localhost:5004",
    "http://localhost:5005"
  ]
}
```
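One way to turn this JSON into usable values is sketched below. The `parseConfig` function is a hypothetical helper, not something shown in the post; it decodes the JSON with `encoding/json` and converts the interval string with `time.ParseDuration`. The JSON is passed in as bytes to keep the example self-contained, but in a real program it would more likely come from something like `os.ReadFile("config.json")`.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// Config mirrors the struct from the post.
type Config struct {
	Port                string   `json:"port"`
	HealthCheckInterval string   `json:"healthCheckInterval"`
	Servers             []string `json:"servers"`
}

// parseConfig is a hypothetical helper: it decodes the JSON and converts
// the interval string (for example "2s") into a time.Duration.
func parseConfig(data []byte) (Config, time.Duration, error) {
	var cfg Config
	if err := json.Unmarshal(data, &cfg); err != nil {
		return Config{}, 0, fmt.Errorf("decoding config: %w", err)
	}

	interval, err := time.ParseDuration(cfg.HealthCheckInterval)
	if err != nil {
		return Config{}, 0, fmt.Errorf("parsing healthCheckInterval: %w", err)
	}
	return cfg, interval, nil
}

func main() {
	raw := []byte(`{
		"port": ":8080",
		"healthCheckInterval": "2s",
		"servers": ["http://localhost:5001", "http://localhost:5002"]
	}`)

	cfg, interval, err := parseConfig(raw)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(cfg.Port, interval, len(cfg.Servers))
}
```

Keeping the interval as a string in the JSON means it stays readable ("2s", "500ms"), at the cost of one extra parsing step at startup.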

We want to make sure that the servers are healthy before routing any incoming traffic to them. This is done by sending periodic health checks to each server:

```go
func healthCheck(s *Server, healthCheckInterval time.Duration) {
	for range time.Tick(healthCheckInterval) {
		res, err := http.Head(s.URL.String())
		healthy := err == nil && res.StatusCode == http.StatusOK
		if err == nil {
			// Close the body so the underlying connection can be reused.
			res.Body.Close()
		}

		s.Mutex.Lock()
		if !healthy {
			fmt.Printf("%s is down\n", s.URL)
		}
		s.IsHealthy = healthy
		s.Mutex.Unlock()
	}
}
```

Every few seconds (as specified in the config), the load balancer sends a HEAD request to each server to check if it is healthy. If a server is down, the IsHealthy flag is set to false, preventing future traffic from being routed to it.
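The probe itself can be tried in isolation. The sketch below pulls a single iteration of the loop into a hypothetical `checkOnce` helper and runs it against a throwaway backend created with `net/http/httptest`, first while the backend is running and then after it has been shut down. Both `checkOnce` and `probeUpThenDown` are names invented for this example.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/url"
	"sync"
)

// Server mirrors the struct from the post.
type Server struct {
	URL       *url.URL
	IsHealthy bool
	Mutex     sync.Mutex
}

// checkOnce is a hypothetical helper: one iteration of the health check
// loop. It sends a HEAD request and records the result on the server.
func checkOnce(s *Server) bool {
	res, err := http.Head(s.URL.String())
	healthy := err == nil && res.StatusCode == http.StatusOK
	if err == nil {
		res.Body.Close()
	}

	s.Mutex.Lock()
	s.IsHealthy = healthy
	s.Mutex.Unlock()
	return healthy
}

// probeUpThenDown probes a throwaway backend while it is running and
// again after it has been shut down.
func probeUpThenDown() (up, down bool) {
	backend := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
	}))

	u, err := url.Parse(backend.URL)
	if err != nil {
		backend.Close()
		return false, false
	}

	s := &Server{URL: u}
	up = checkOnce(s)
	backend.Close() // simulate the server going down
	down = checkOnce(s)
	return up, down
}

func main() {
	up, down := probeUpThenDown()
	fmt.Println("while running:", up)
	fmt.Println("after shutdown:", down)
}
```

Since `healthCheck` loops forever, it would typically run in its own goroutine for each server, for example `go healthCheck(server, interval)`. That wiring is an assumption here, as the post does not show it.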

When the load balancer receives a request, it forwards the request to the next available server using a reverse proxy. In Go, the net/http/httputil package provides a built-in reverse proxy, which we wrap in a ReverseProxy method on Server:

```go
func (s *Server) ReverseProxy() *httputil.ReverseProxy {
	return httputil.NewSingleHostReverseProxy(s.URL)
}
```

A reverse proxy is a server that sits between a client and one or more backend servers. It receives the client's request, forwards it to one of the backend servers, and then returns the server's response to the client. The client interacts with the proxy, unaware of which specific backend server is handling the request.

In our case, the load balancer acts as a reverse proxy, sitting in front of multiple servers and distributing incoming HTTP requests across them.
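Here is a small, self-contained sketch of that flow with a single backend. It uses `net/http/httptest` to start a throwaway backend and a throwaway proxy in front of it, then sends one request through the proxy. The `fetchViaProxy` function and the response text are assumptions made for the example.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
)

// fetchViaProxy starts a throwaway backend and a reverse proxy in front
// of it, sends one GET request to the proxy, and returns the response body.
func fetchViaProxy() (string, error) {
	backend := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "hello from backend")
	}))
	defer backend.Close()

	target, err := url.Parse(backend.URL)
	if err != nil {
		return "", err
	}

	// The client only ever talks to this proxy server.
	proxy := httptest.NewServer(httputil.NewSingleHostReverseProxy(target))
	defer proxy.Close()

	res, err := http.Get(proxy.URL)
	if err != nil {
		return "", err
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

func main() {
	body, err := fetchViaProxy()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(body)
}
```

The client sends its request to the proxy's address and still receives the backend's response, which is the behavior the load balancer relies on.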

When a client makes a request to the load balancer, it selects the next healthy server using the round robin implementation in the getNextServer function and proxies the request to that server. If no healthy server is available, it returns a 503 Service Unavailable error to the client.

```go
http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
	server := lb.getNextServer(servers)
	if server == nil {
		http.Error(w, "No healthy server available", http.StatusServiceUnavailable)
		return
	}
	w.Header().Add("X-Forwarded-Server", server.URL.String())
	server.ReverseProxy().ServeHTTP(w, r)
})
```

The ReverseProxy method proxies the request to the actual server, and we also add a custom header X-Forwarded-Server for debugging purposes (though in production, we should avoid exposing internal server details like this).
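The error path is easy to check without starting any real servers. In the sketch below, the handler body is wrapped in a hypothetical `newHandler` function so it can be called directly, and `httptest.NewRecorder` captures the response. The debugging header is left out to keep the example short, and `statusWhenAllDown` is another name invented for illustration.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
	"sync"
)

// Server and LoadBalancer mirror the structs from the post.
type Server struct {
	URL       *url.URL
	IsHealthy bool
	Mutex     sync.Mutex
}

func (s *Server) ReverseProxy() *httputil.ReverseProxy {
	return httputil.NewSingleHostReverseProxy(s.URL)
}

type LoadBalancer struct {
	Current int
	Mutex   sync.Mutex
}

func (lb *LoadBalancer) getNextServer(servers []*Server) *Server {
	lb.Mutex.Lock()
	defer lb.Mutex.Unlock()
	for i := 0; i < len(servers); i++ {
		nextServer := servers[lb.Current%len(servers)]
		lb.Current++
		nextServer.Mutex.Lock()
		isHealthy := nextServer.IsHealthy
		nextServer.Mutex.Unlock()
		if isHealthy {
			return nextServer
		}
	}
	return nil
}

// newHandler is a hypothetical wrapper around the handler body from the
// post, so that the handler can be exercised without a running listener.
func newHandler(lb *LoadBalancer, servers []*Server) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		server := lb.getNextServer(servers)
		if server == nil {
			http.Error(w, "No healthy server available", http.StatusServiceUnavailable)
			return
		}
		server.ReverseProxy().ServeHTTP(w, r)
	}
}

// statusWhenAllDown sends one request to a handler whose only server is
// marked unhealthy and returns the recorded status code.
func statusWhenAllDown() int {
	u, err := url.Parse("http://localhost:5001")
	if err != nil {
		panic(err)
	}
	servers := []*Server{{URL: u, IsHealthy: false}}

	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	newHandler(&LoadBalancer{}, servers).ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	fmt.Println(statusWhenAllDown())
}
```

Because the only server is marked unhealthy, the handler never reaches the proxy and the recorded status is 503.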

Finally, we start the load balancer on the specified port:

```go
log.Println("Starting load balancer on port", config.Port)
err = http.ListenAndServe(config.Port, nil)
if err != nil {
	log.Fatalf("Error starting load balancer: %s\n", err.Error())
}
```

In this post, we built a basic load balancer from scratch in Golang using a round robin algorithm. This is a simple yet effective way to distribute traffic across multiple servers and ensure that your system can handle higher loads efficiently.

There's a lot more to explore, such as adding sophisticated health checks, implementing different load balancing algorithms, or improving fault tolerance. But this basic example can be a solid foundation to build upon.

You can find the source code in this GitHub repo.
