Li Mu: One year of startup, three years in the world

A report to my friends on the progress, struggles, and reflections from my first year running an LLM startup.

In my fifth year at Amazon I started thinking about founding a company, but the pandemic got in the way. By seven and a half years in, the itch was too strong, so I resigned. Looking back, if there is something you know you must try in life, do it early. Once you actually start, there is so much new to learn that you keep wondering why you didn't start sooner.

Name: The origin of BosonAI

Before the company, I worked on a series of projects named Gluon. In quantum physics, the gluon is a boson that binds quarks together, which symbolized that the project began as a collaboration between Amazon and Microsoft. Back then the project manager came up with the name on the spot, but naming is genuinely hard for programmers: we agonize over file and variable names every day. So for the new company we simply went with Boson, hoping people would catch the reference to "bosons and fermions make up the world" and smile knowingly. What I didn't expect was how many people would read it as Boston. "I'm in Boston, want to meet up sometime?" "Huh? But I'm in the Bay Area."

Financing: The lead investor pulled out the day before signing

At the end of 2022, I had ideas for two productivity-tool projects built on large language models (LLMs). I happened to meet Zhang Yiming and asked for his advice. After we talked it through, he asked: why not build the LLM itself? My reflex was to back away. Our team at Amazon had been at this for years with tens of thousands of GPUs, and there were all sorts of difficulties, blah blah. Yiming chuckled and said: those are short-term difficulties, and you have to take the long view. My one strength is that I take advice, so I really did go and build an LLM.
I put together a founding team covering data, pre-training, post-training, and architecture, and went out to raise money. We were lucky and closed a seed round quickly. That wasn't enough to buy GPUs, though, so we needed a second round. Its lead was a very large institution, and the round took several months of paperwork and term negotiations. Then, the day before signing, the lead said it would not invest, which directly caused several other investors to withdraw. I'm very grateful to the investors who stayed and completed the round, which gave us our ticket into LLMs. Looking back today, the capital market was still enthusiastic at the time, and we could have kept raising. Maybe, like some friends, I would now be holding one billion in cash. Back then I worried that raising too much would make it hard to exit, or would put us on a pedestal with no way down. Now I see it differently: starting a company means defying the odds to change your fate, so who needs a way out?

Machines: Among the first early adopters

As soon as we had money, we went to buy GPUs. Every supplier I asked gave the same answer: H100s would be delivered in a year. On a whim I emailed Lao Huang (Jensen Huang) directly. He replied instantly, saying he would look into it, and Supermicro's CEO called an hour later. I paid a little extra, jumped the queue, and had the machines 20 days later. We had the honor of "eating the crab" early, as the Chinese saying for early adopters goes. Having eaten it, I started questioning my life choices, because we hit all kinds of weird bugs. Insufficient GPU power delivery caused instability, which Supermicro engineers eventually fixed by patching the BIOS code. Fiber-optic cables cut at the wrong angle made communication unstable. Nvidia's recommended network topology was not optimal, so we designed our own, which Nvidia later adopted as well. I still don't understand it.
We bought fewer than a thousand GPUs, which makes us a small buyer. Haven't the big buyers run into the same problems? Why did it fall to us to debug them? We also rented the same number of H100s, and those had all kinds of bugs too. GPUs failed every day, to the point that we wondered whether we were the only customer on that cloud. Later, the Llama 3 technical report said that after switching to H100s, their training runs were interrupted hundreds of times. I could feel the pain between the lines.

Comparing building our own cluster with renting, three years of rent costs about the same as building. The advantage of renting is peace of mind. Building has two advantages. First, if Nvidia is still far ahead in three years, it can keep prices up, so our GPUs may still hold their value. Second, self-built storage is cheap. Storage has to sit close to the GPUs, and both large clouds and small GPU clouds charge a lot for it. Yet a single training run can use several TB just for checkpoints, and training data starts at 10 PB. On AWS S3, 10 PB costs two million a year, and the same money spent on self-built storage gets you to 100 PB.

Business: Thanks to our customers, we broke even in the first year

We were very lucky: in our first year, income and expenses balanced. Our spending goes mainly to people and compute, and thanks to OpenAI's deep pockets and Nvidia's commanding lead, both are expensive. Our revenue comes from building custom models for large customers. Most companies that moved into LLMs this early did so because their CEOs were decisive. Undeterred by the high cost of compute and talent, they firmly pushed their internal teams to try the new technology alongside us.
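The storage figures in the Machines section amount to simple arithmetic: 10 PB on S3 for two million a year, versus 100 PB self-built for the same money. The sketch below makes that arithmetic explicit. It assumes a single flat object-storage price per GB-month and decimal units (1 PB = 10^6 GB). The rate `0.0167` is not a quoted AWS price. It is back-solved so that 10 PB comes to roughly two million per year, and the currency is assumed to be US dollars. The function names are illustrative only.

```python
"""Back-of-envelope storage cost check (illustrative assumptions only)."""

GB_PER_PB = 1_000_000  # decimal units: 1 PB = 10**6 GB
TB_PER_PB = 1_000      # decimal units: 1 PB = 10**3 TB


def annual_cloud_storage_cost(capacity_pb: float, price_per_gb_month: float) -> float:
    """Yearly bill for keeping `capacity_pb` petabytes at a flat per-GB-month rate."""
    return capacity_pb * GB_PER_PB * price_per_gb_month * 12


def implied_cost_per_tb(budget: float, capacity_pb: float) -> float:
    """Hardware cost per TB implied if a one-off `budget` buys `capacity_pb` petabytes."""
    return budget / (capacity_pb * TB_PER_PB)


if __name__ == "__main__":
    # Assumed flat USD rate per GB-month, back-solved from the article's figure;
    # real S3 pricing is tiered and varies by region and storage class.
    assumed_rate = 0.0167
    yearly = annual_cloud_storage_cost(10, assumed_rate)
    print(f"10 PB in cloud object storage: ${yearly:,.0f} per year")
    # The article says one year of that bill buys about 100 PB if self-built.
    print(f"Implied self-built cost: ${implied_cost_per_tb(yearly, 100):,.2f} per TB")
```

Under these assumptions, the 10x claim implies roughly $20 per TB of self-built capacity. Check that figure against real hardware quotes before relying on the ratio.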
I'm very grateful to our customers for giving us room to breathe. Otherwise I would have spent the past few months running from one investor to the next. Going forward, more companies should try LLMs, whether to upgrade their own products or to cut costs and improve efficiency. On one hand, the technology keeps getting cheaper. On the other, industry leaders (such as our customers) will release LLM-based products one after another, raising the competitive bar across their industries.

We are also watching how LLMs land in consumer (toC) products. The previous wave of leading players, such as c.ai and Perplexity, are still searching for a business model, but there are also a dozen or so small LLM-native apps making good money. We supplied a model to a role-play startup. They focus on deeply engaged users and already break even, which is also great. As model capabilities keep improving and more modalities (voice, music, images, video) are integrated, I expect more imaginative applications to appear.

Overall, both industry and capital remain impatient. This year, several companies that were just over a year old but had raised billions chose to exit. Going from technology to product is a long process that normally takes 2 to 3 years, and waiting for user needs to emerge can take even longer. We focus on the present, feel our way through the fog, and stay optimistic about the future.

Technology: Four stages of understanding LLMs

My understanding of LLMs has gone through four stages. The first stage ran from BERT to GPT-3. I saw it as a new architecture plus big data, something we could achieve.
At Amazon, we moved on it right away, running large-scale training and shipping it into products. The second stage came when GPT-4 was released, just as I was starting the company. I was deeply shocked, mainly because the technology was not made public. Rumor had it that training the model cost around 100 million, with data labeling costing tens of millions more. Many investors asked me how much it would cost to reproduce GPT-4, and I answered 300 to 400 million. One of them later invested hundreds of millions.

The third stage was the company's first six months. We couldn't build GPT-4, so we started with specific problems. I went looking for customers in gaming, education, sales, finance, and insurance, and trained models for their specific needs. At first there were no good open-source models, so we trained from scratch. Later many good ones came out, which lowered our costs. We then designed an evaluation method based on each business scenario, labeled data, looked for where the model fell short, and improved it accordingly. At the end of 2023, we were pleasantly surprised to find that our Photon series models (the photon is also a boson) outperformed GPT-4 in customer applications. The advantage of a custom model is that inference costs 1/10 as much as calling an API. APIs are much cheaper today, but our own technology has improved too, so it is still 1/10 of the cost. QPS, latency, and so on are also easier to control.
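The third-stage loop runs as follows: design a scenario-based evaluation, label data, find where the model falls short, and improve. Its key step is slicing results by business scenario so that weak spots stand out. Below is a minimal Python sketch of that slicing step. `EvalCase`, the scenario names, exact-match scoring, and the 0.8 threshold are all hypothetical choices for illustration, not BosonAI's actual evaluation method. Real evaluations of generative models usually need rubric-based or model-judged scoring rather than exact matches.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EvalCase:
    scenario: str   # business scenario the case belongs to (hypothetical labels)
    expected: str   # reference answer written by an annotator
    predicted: str  # model answer, assumed already normalized for exact matching


def accuracy_by_scenario(cases: list[EvalCase]) -> dict[str, float]:
    """Exact-match accuracy per scenario, so weak scenarios stand out."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for case in cases:
        totals[case.scenario] += 1
        hits[case.scenario] += int(case.predicted == case.expected)
    return {s: hits[s] / totals[s] for s in totals}


def weakest_scenarios(cases: list[EvalCase], threshold: float = 0.8) -> list[str]:
    """Scenarios scoring below `threshold`, worst first: candidates for more labeling."""
    scores = accuracy_by_scenario(cases)
    return sorted((s for s, acc in scores.items() if acc < threshold), key=scores.__getitem__)


if __name__ == "__main__":
    cases = [
        EvalCase("sales", "offer discount", "offer discount"),
        EvalCase("sales", "escalate", "offer discount"),
        EvalCase("tutoring", "hint", "hint"),
        EvalCase("tutoring", "explain", "explain"),
    ]
    print(accuracy_by_scenario(cases))
    print(weakest_scenarios(cases))
```

Keeping the labeled cases grouped by scenario lets each improvement round be re-scored against the same slices.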
My takeaway at this stage: for specific applications, we can beat the best models on the market.

The fourth stage was the second half of the year. Customers received the models specified in their contracts, but these were not what they really wanted, because matching GPT-4 was not enough. Early this year, we found that a model trained for a single application struggled to make another leap. In hindsight, AGI aims at the level of an ordinary person, whereas what customers want is professional level. Games need professional planners and actors, education needs gold-medal teachers, sales needs gold-medal salespeople, and finance and insurance need senior analysts. That takes AGI plus industry expertise. Although AGI felt daunting to us at the time, we concluded it could not be avoided.

So at the start of the year we designed the Higgs model series (the Higgs boson, the "God particle," is also a boson). Its general capabilities keep pace with the best models, while it stands out in one particular capability. The capability we chose was role-play: playing a virtual character, a teacher, a salesperson, a game analyst, and so on. By mid-2024 the series had reached its second generation. On Arena-Hard and AlpacaEval 2.0, which test general capabilities, V2 is comparable to the best models, and on MMLU-Pro, which tests knowledge, it is not far behind.

- We cannot label as much data as Meta, so V2 outperforming Llama 3 Instruct comes mainly from algorithmic innovation.

- We then built a role-play evaluation dataset containing roles and scenarios.

- To our surprise, our own model ranks first on our own leaderboard, even though its training was never exposed to the evaluation data.

- This evaluation dataset was originally designed for internal use, to reflect the model's true capabilities and avoid overfitting.

- Even so, the colleagues responsible for evaluation published a technical report. Notably, the role-play test samples came from c.ai, yet c.ai's model ranked at the bottom.

The fourth stage of understanding

A good vertical model must also have strong general capabilities, such as reasoning and instruction following, on top of its vertical capabilities. In the long run, general and vertical models are both heading toward AGI. A vertical model can be more specialized: outstanding in its specialty and acceptable in general capabilities, with lower R&D costs and different R&D methods.

The fifth stage of understanding

This stage is in progress, and we look forward to sharing it as soon as we can.

Vision: Human companions

We pursue the vision of "intelligent agents that accompany humans." Such agents would have high EQ and high IQ, equivalent to a professional team. For example, one could accompany you in games (planner + actor), sports (cheerleader + coach), and learning (advising and teaching). The model stays with you for the long term, understands you deeply, and genuinely has the user's interests at heart.

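Team: Challenging things depend on the team

Only after starting a company did I truly appreciate how much the team matters. Team members are like the screws that hold a whole car together. Together they respond flexibly to every situation and carry heavy loads. In the early days the team is small and every member is important. There is no redundancy, so one person falling short can affect the whole operation. I used to pick projects whose development I could lead myself, but that also meant the problems were not very challenging. For the startup I chose a very big problem, so I can only rely entirely on the team. Although this article says "I" a lot, all the work was done by the team.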

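Personal pursuit: Fame or fortune?

I make decisions by following my inner voice. Doing a PhD, making videos, and starting a company all came about this way. Starting a company takes strong motivation to push through the difficulties. My deepest motivation comes from a fear that life might have no meaning. I chose to keep striving, to improve my ability to create value. I chose to record videos and write textbooks, to create educational value. I chose to write up my work and my startup experience, to create value as a case study. And I chose to start a company, to bring people together and create even greater value.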

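Finally, a quick ad for our job openings

(Bay Area and Vancouver) https://jobs.lever.co/bosonai

If you are building apps for overseas markets, please also contact us at api@boson.ai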

The above is the detailed content of Li Mu: One year to start a business, three years to be alive. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

But maybe he can’t defeat the old man in the park? The Paris Olympic Games are in full swing, and table tennis has attracted much attention. At the same time, robots have also made new breakthroughs in playing table tennis. Just now, DeepMind proposed the first learning robot agent that can reach the level of human amateur players in competitive table tennis. Paper address: https://arxiv.org/pdf/2408.03906 How good is the DeepMind robot at playing table tennis? Probably on par with human amateur players: both forehand and backhand: the opponent uses a variety of playing styles, and the robot can also withstand: receiving serves with different spins: However, the intensity of the game does not seem to be as intense as the old man in the park. For robots, table tennis

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

On August 21, the 2024 World Robot Conference was grandly held in Beijing. SenseTime's home robot brand "Yuanluobot SenseRobot" has unveiled its entire family of products, and recently released the Yuanluobot AI chess-playing robot - Chess Professional Edition (hereinafter referred to as "Yuanluobot SenseRobot"), becoming the world's first A chess robot for the home. As the third chess-playing robot product of Yuanluobo, the new Guoxiang robot has undergone a large number of special technical upgrades and innovations in AI and engineering machinery. For the first time, it has realized the ability to pick up three-dimensional chess pieces through mechanical claws on a home robot, and perform human-machine Functions such as chess playing, everyone playing chess, notation review, etc.

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

The start of school is about to begin, and it’s not just the students who are about to start the new semester who should take care of themselves, but also the large AI models. Some time ago, Reddit was filled with netizens complaining that Claude was getting lazy. "Its level has dropped a lot, it often pauses, and even the output becomes very short. In the first week of release, it could translate a full 4-page document at once, but now it can't even output half a page!" https:// www.reddit.com/r/ClaudeAI/comments/1by8rw8/something_just_feels_wrong_with_claude_in_the/ in a post titled "Totally disappointed with Claude", full of

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference being held in Beijing, the display of humanoid robots has become the absolute focus of the scene. At the Stardust Intelligent booth, the AI robot assistant S1 performed three major performances of dulcimer, martial arts, and calligraphy in one exhibition area, capable of both literary and martial arts. , attracted a large number of professional audiences and media. The elegant playing on the elastic strings allows the S1 to demonstrate fine operation and absolute control with speed, strength and precision. CCTV News conducted a special report on the imitation learning and intelligent control behind "Calligraphy". Company founder Lai Jie explained that behind the silky movements, the hardware side pursues the best force control and the most human-like body indicators (speed, load) etc.), but on the AI side, the real movement data of people is collected, allowing the robot to become stronger when it encounters a strong situation and learn to evolve quickly. And agile

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

At this ACL conference, contributors have gained a lot. The six-day ACL2024 is being held in Bangkok, Thailand. ACL is the top international conference in the field of computational linguistics and natural language processing. It is organized by the International Association for Computational Linguistics and is held annually. ACL has always ranked first in academic influence in the field of NLP, and it is also a CCF-A recommended conference. This year's ACL conference is the 62nd and has received more than 400 cutting-edge works in the field of NLP. Yesterday afternoon, the conference announced the best paper and other awards. This time, there are 7 Best Paper Awards (two unpublished), 1 Best Theme Paper Award, and 35 Outstanding Paper Awards. The conference also awarded 3 Resource Paper Awards (ResourceAward) and Social Impact Award (

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

This afternoon, Hongmeng Zhixing officially welcomed new brands and new cars. On August 6, Huawei held the Hongmeng Smart Xingxing S9 and Huawei full-scenario new product launch conference, bringing the panoramic smart flagship sedan Xiangjie S9, the new M7Pro and Huawei novaFlip, MatePad Pro 12.2 inches, the new MatePad Air, Huawei Bisheng With many new all-scenario smart products including the laser printer X1 series, FreeBuds6i, WATCHFIT3 and smart screen S5Pro, from smart travel, smart office to smart wear, Huawei continues to build a full-scenario smart ecosystem to bring consumers a smart experience of the Internet of Everything. Hongmeng Zhixing: In-depth empowerment to promote the upgrading of the smart car industry Huawei joins hands with Chinese automotive industry partners to provide

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Deep integration of vision and robot learning. When two robot hands work together smoothly to fold clothes, pour tea, and pack shoes, coupled with the 1X humanoid robot NEO that has been making headlines recently, you may have a feeling: we seem to be entering the age of robots. In fact, these silky movements are the product of advanced robotic technology + exquisite frame design + multi-modal large models. We know that useful robots often require complex and exquisite interactions with the environment, and the environment can be represented as constraints in the spatial and temporal domains. For example, if you want a robot to pour tea, the robot first needs to grasp the handle of the teapot and keep it upright without spilling the tea, then move it smoothly until the mouth of the pot is aligned with the mouth of the cup, and then tilt the teapot at a certain angle. . this

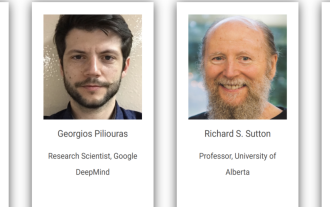

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Conference Introduction With the rapid development of science and technology, artificial intelligence has become an important force in promoting social progress. In this era, we are fortunate to witness and participate in the innovation and application of Distributed Artificial Intelligence (DAI). Distributed artificial intelligence is an important branch of the field of artificial intelligence, which has attracted more and more attention in recent years. Agents based on large language models (LLM) have suddenly emerged. By combining the powerful language understanding and generation capabilities of large models, they have shown great potential in natural language interaction, knowledge reasoning, task planning, etc. AIAgent is taking over the big language model and has become a hot topic in the current AI circle. Au