The AIxiv column is a column where this site publishes academic and technical content. In the past few years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com

The co-authors of this paper are Dr. Li Jing, Sun Zhijie and Dr. Lin Dachao. The main members are from GTS AI Computing Lab. The main research and implementation fields include LLM training and promotion. Acceleration, AI training assurance and graph computing.

MoE has shone in the field of large language models in the past two years due to its low cost and high efficiency in the training and promotion process. As the soul of MoE, there are endless related research and discussions on how experts can maximize their learning potential. Previously, the research team of Huawei GTS AI Computing Lab proposed LocMoE, including a novel routing network structure, local loss to assist in reducing communication overhead, etc., which attracted widespread attention. The above design of LocMoE effectively alleviates the bottlenecks of some MoE classic structures in training, such as: expert routing algorithms may not be able to effectively distinguish tokens, and communication synchronization efficiency is limited by the difference in transmission bandwidth within and between nodes, etc. . In addition, LocMoE proves and solves the lower limit of expert capacity that can successfully process discriminative tokens. This lower limit is derived based on the probability distribution of discriminative tokens existing in token batches in a scenario where tokens are passively distributed to experts. Then, if experts also have the ability to select optimal tokens, the probability of discriminative tokens being processed will be greatly increased, and the lower limit of expert capacity will be further compressed. Based on the above ideas, the team further proposed a MoE architecture based on low-overhead active routing, naming it LocMoE+. LocMoE+ inherits the advantages of LocMoE's high-discrimination experts and local communication, further transforms the routing strategy, defines the affinity index between tokens and experts, and starts with this index to complete token distribution more efficiently, thereby improving training efficiency.

- Paper link: https://arxiv.org/pdf/2406.00023

Introduction to the paperThe core idea of the paper is to combine traditional passive routing with experts Active routing improves the probability of processing discriminative tokens under a certain capacity, thereby reducing sample noise and improving training efficiency. This paper starts from the relationship between a token and its assigned experts, and quantifies and defines the affinity between experts and tokens in a low computational overhead scheme. Accordingly, this paper implements a global adaptive routing strategy and rearranges and selects tokens in the expert dimension based on affinity scores. At the same time, the lower limit of expert capacity is proven to gradually decrease as the token feature distribution stabilizes, and the training overhead can be reduced. This paper is the first to combine two routing mechanisms. Based on the discovery that tokens tend to be routed to experts with smaller angles in the learning routing strategy, it breaks the obstacle of excessive overhead of existing active routing solutions affecting training efficiency. And remain consistent with the nature of passive routing. It is worth mentioning that the author chose a completely different hardware environment (server model, NPU card model, cluster networking scheme), training framework and backbone model from LocMoE to prove the high efficiency of this series of work. Scalability and ease of portability. Adaptive bidirectional route dispatch mechanismTraditional MoE has two route dispatch mechanisms: (1) hard router, direct put the whole Token features are assigned; (2) soft router, which assigns a weighted combination of token features. This article continues to consider (1) because of its lower computational cost. For the hard router scenario, it can be divided into 1) Token Choice Router (TCR), which allows each token to select top-k experts; 2) Expert Choice Router (ECR), which allows each expert to select top -C appropriate token. Due to capacity limitations, the number of tokens received by each expert has an upper limit C, so in scenario 1), the tokens received by each expert will be truncated:

Previous work pointed out that MoE training is divided into two stages: Phase 1. Routing training ensures that routing can reasonably allocate tokens, that is, tokens in different fields or with large differences can be distinguished and assigned to different experts; Phase 2. Due to token routing The role of each expert is to receive tokens in the same field or with similar properties. Each expert can acquire knowledge in related fields and properties after undergoing certain training. In summary, the key to the "success" of each step of MoE training lies in the correctness and rationality of token distribution. Contributions of this article (1) Through softmax activation function deduction, the cosine similarity between experts and tokens can more accurately measure affinity:

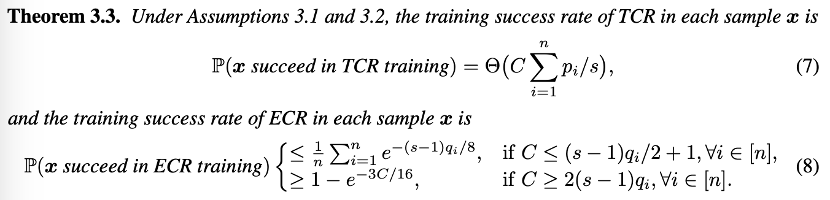

(2) From the perspective of theoretical modeling, the single training success rate of TCR and ECR is analyzed in two common scenarios: Based on theory, the author pointed out that

- In the early stage of model training, when the routing token capability is insufficient, Each time TCR is trained, it has a higher probability of successful training than ECR, and requires a larger expert capacity to ensure that the appropriate token is selected.

- In the later stage of model training, when the router has a certain ability to correctly allocate tokens, each time ECR is trained, it has a higher probability of successful training than TCR. At this time, only a smaller capacity is needed to select the appropriate token.

This theory is also very intuitive. When the router has no dispatch ability, it is better to let the token randomly select experts. When the router has certain dispatch ability, that is, when the expert can select the appropriate token, it is more appropriate to use ECR. . Therefore, the author recommends the transition from TCR to ECR, and proposes a global-level adaptive routing switching strategy. At the same time, based on the demand estimate of expert capacity, smaller expert capacity is used in the later stages of training. The experiments of this paper were conducted on the self-built cluster of Ascend 910B3 NPU, thanks to Huawei’s proprietary Cache Coherence Protocol High Performance Computing System (HCCS). High-performance inter-device data communication is achieved in multi-card scenarios, and the Huawei Collective Communication Library (HCCL) designed specifically for Ascend processors enables high-performance distributed training on high-speed links such as HCCS. The experiment uses the PyTorch for Ascend framework that is compatible with Ascend NPU and the acceleration library AscendSpeed and training framework ModelLink specially customized for Ascend devices, focusing on LLM parallel strategy and communication masking optimization. Experimental results show that without affecting the convergence or effectiveness of model training, the number of tokens each expert needs to process can be reduced by more than 60% compared to the baseline. Combined with communication optimization, training efficiency is improved by an average of 5.4% to 46.6% at cluster sizes of 32 cards, 64 cards, and 256 cards.

LocMoE+ also has a certain gain in video memory usage, especially in scenarios where the cluster size is small and the computing is intensive. Using the Ascend Insight tool to analyze memory monitoring samples, it can be seen that LocMoE+ memory usage dropped by 4.57% to 16.27% compared to the baseline, and dropped by 2.86% to 10.5% compared to LocMoE. As the cluster size increases, the gap in memory usage shrinks.

The open source evaluation sets C-Eval and TeleQnA, as well as the independently constructed ICT domain evaluation set GDAD, were used to evaluate LocMoE+’s capabilities in general knowledge and domain knowledge. Among them, GDAD covers a total of 47 sub-items, including 18,060 samples, to examine the performance of the model in the three major evaluation systems of domain tasks, domain competency certification examinations and general capabilities. After sufficient SFT, LocMoE+ improved on average by about 20.1% compared to the baseline in 16 sub-abilities of domain task capabilities, and improved by about 3.5% compared to LocMoE. Domain competency certification exams increased by 16% and 4.8% respectively. Among the 18 sub-capabilities of general capabilities, LocMoE+ improved by approximately 13.9% and 4.8% respectively. Overall, LocMoE+ shows performance improvements of 9.7% to 14.1% on GDAD, C-Eval, and TeleQnA respectively.

The above is the detailed content of Huawei GTS LocMoE+: High scalability and affinity MoE architecture, low overhead to achieve active routing. For more information, please follow other related articles on the PHP Chinese website!