World models provide a way to train reinforcement learning agents in a safe and sample-efficient manner. Recently, world models have mainly operated on discrete latent variable sequences to simulate environmental dynamics.

However, this method of compressing into compact discrete representations may ignore visual details that are important for reinforcement learning. On the other hand, diffusion models have become the dominant method for image generation, posing challenges to discrete latent models.

To promote this paradigm shift, researchers from the University of Geneva, the University of Edinburgh, and Microsoft Research jointly proposed DIAMOND (DIffusion As a Model Of eNvironment Dreams), a reinforcement learning agent trained in a diffusion world model.

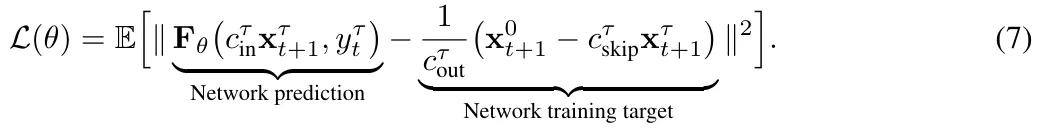

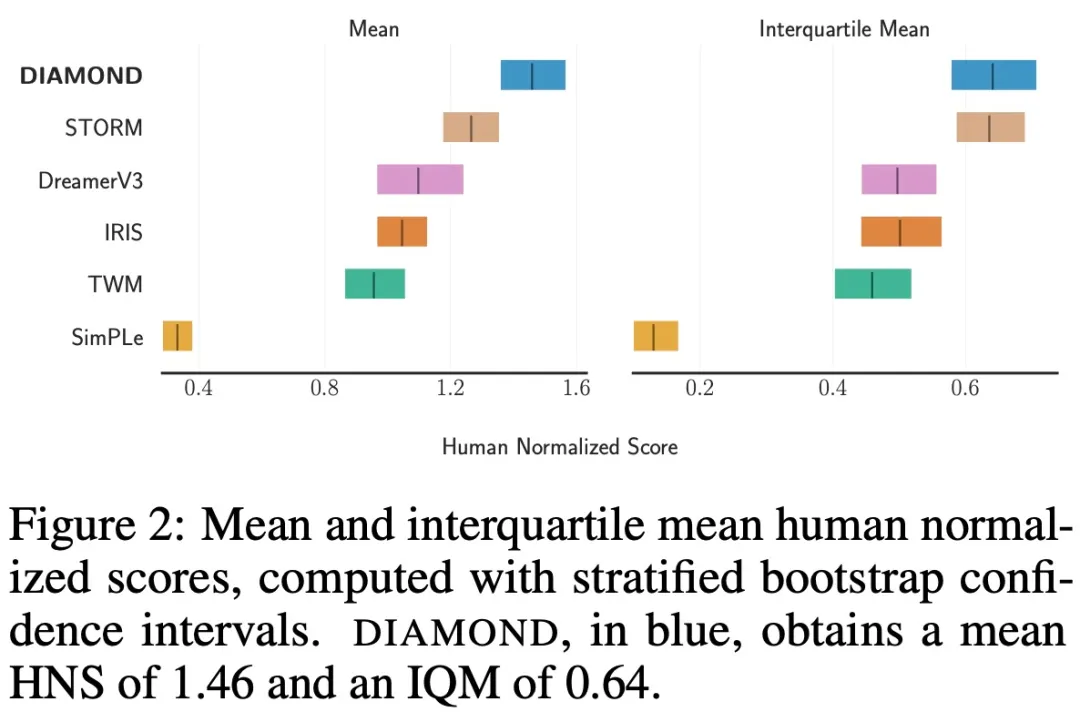

On the Atari 100k benchmark, DIAMOND achieved a mean human normalized score (HNS) of 1.46, a new state of the art for agents trained entirely within a world model. The study also provides a stability analysis showing that DIAMOND's design choices are necessary for the diffusion world model to remain stable and efficient over long horizons.
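For readers unfamiliar with the metric, the sketch below shows how a human normalized score is conventionally computed: 0 corresponds to a random policy and 1 to the human reference score. The game names and raw scores are hypothetical placeholders chosen for illustration, not results from the paper.

```python
def human_normalized_score(agent, random_policy, human):
    """Return 0.0 for random-level play and 1.0 for human-level play."""
    return (agent - random_policy) / (human - random_policy)


# Hypothetical raw scores per game: (agent, random policy, human).
example_scores = {
    "game_a": (30.0, 10.0, 20.0),
    "game_b": (5.0, 0.0, 10.0),
    "game_c": (150.0, 50.0, 100.0),
}

per_game = {
    name: human_normalized_score(*scores)
    for name, scores in example_scores.items()
}
# The benchmark-level figure is the mean over games.
mean_hns = sum(per_game.values()) / len(per_game)
print(per_game, mean_hns)
```

A mean above 1 therefore means the agent beats the human reference on average, even if it falls short on individual games.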

Operating in image space has a further benefit: the diffusion world model can serve as a direct stand-in for the environment, which gives deeper insight into the behavior of both the world model and the agent. In particular, the study found that the performance gains in certain games come from better modeling of key visual details.

Next, this article introduces DIAMOND, a reinforcement learning agent trained in a diffusion world model. Specifically, the design builds on the drift and diffusion coefficients f and g introduced in Section 2.2 of the paper, which correspond to a specific choice of diffusion paradigm. The study adopts the EDM formulation of Karras et al. (2022).

First, define a perturbation kernel $p^{0 \to \tau}(x^{\tau}_{t+1} \mid x^{0}_{t+1}) = \mathcal{N}(x^{\tau}_{t+1};\, x^{0}_{t+1},\, \sigma^2(\tau) I)$, where $\sigma(\tau)$ is a real-valued function of the diffusion time $\tau$, called the noise schedule. This corresponds to setting the drift and diffusion coefficients to $f(\tau) = 0$ and $g(\tau) = \sqrt{2\,\dot{\sigma}(\tau)\,\sigma(\tau)}$.

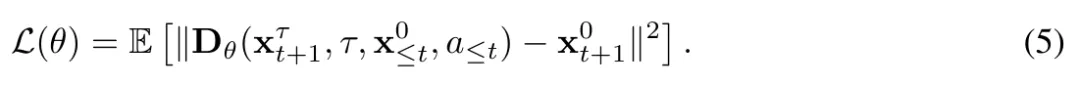

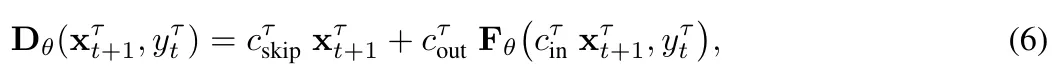

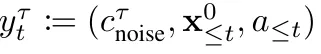
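A Gaussian perturbation kernel of this kind, with mean equal to the clean observation and standard deviation given by the noise schedule, amounts to adding scaled Gaussian noise to the clean next observation. The following is a minimal sketch in plain Python; the flat list standing in for an image and the value `sigma=2.0` are illustrative assumptions, not settings from the paper.

```python
import random


def perturb(clean_obs, sigma, rng):
    """Sample a noisy observation from N(clean_obs, sigma^2 I).

    `clean_obs` is a flat list of pixel values (a stand-in for an image).
    """
    return [value + sigma * rng.gauss(0.0, 1.0) for value in clean_obs]


rng = random.Random(0)
clean = [0.0] * 10_000          # hypothetical all-zero "frame"
noisy = perturb(clean, sigma=2.0, rng=rng)

# With a zero-valued clean frame, the spread of the noisy frame
# should be close to the chosen noise level.
empirical_std = (sum(v * v for v in noisy) / len(noisy)) ** 0.5
print(empirical_std)
```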

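Then apply the network preconditioning introduced by Karras et al. (2022), and parameterize $D_\theta$ in formula (5) as a weighted sum of the noisy observation and the prediction of a neural network $F_\theta$:

$$D_\theta(x^{\tau}_{t+1}, y^{\tau}_t) = c^{\tau}_{\text{skip}}\, x^{\tau}_{t+1} + c^{\tau}_{\text{out}}\, F_\theta\!\left(c^{\tau}_{\text{in}}\, x^{\tau}_{t+1},\, y^{\tau}_t\right) \qquad (6)$$

where $y^{\tau}_t := (c^{\tau}_{\text{noise}},\, x^{0}_{\le t},\, a_{\le t})$ includes all conditioning variables.

Choice of preconditioners. The preconditioners $c^{\tau}_{\text{in}}$ and $c^{\tau}_{\text{out}}$ are chosen to keep the network's inputs and outputs at unit variance for any noise level $\sigma(\tau)$. $c^{\tau}_{\text{noise}}$ is an empirical transformation of the noise level, and $c^{\tau}_{\text{skip}}$ is given in terms of $\sigma(\tau)$ and the standard deviation of the data distribution $\sigma_{\text{data}}$ by the formula

$$c^{\tau}_{\text{skip}} = \frac{\sigma_{\text{data}}^2}{\sigma_{\text{data}}^2 + \sigma^2(\tau)}$$

Combining formulas (5) and (6) gives the training objective:

$$\mathcal{L}(\theta) = \mathbb{E}\left[\left\| F_\theta\!\left(c^{\tau}_{\text{in}}\, x^{\tau}_{t+1},\, y^{\tau}_t\right) - \frac{1}{c^{\tau}_{\text{out}}}\left(x^{0}_{t+1} - c^{\tau}_{\text{skip}}\, x^{\tau}_{t+1}\right) \right\|^2\right]$$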
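To make the preconditioning concrete, the sketch below computes the four EDM coefficients as defined by Karras et al. (2022) and the regression target the network is trained on. It then checks that plugging a perfect network output back into the weighted sum recovers the clean observation. The value `SIGMA_DATA = 0.5` is the default from Karras et al. and is an assumption here, as are the toy observation values; this is an illustration in plain Python, not the released implementation.

```python
import math

SIGMA_DATA = 0.5  # assumption: default data std from Karras et al. (2022)


def edm_coefficients(sigma, sigma_data=SIGMA_DATA):
    """Return (c_in, c_out, c_skip, c_noise) for noise level `sigma` > 0."""
    total_var = sigma ** 2 + sigma_data ** 2
    c_in = 1.0 / math.sqrt(total_var)               # unit-variance inputs
    c_out = sigma * sigma_data / math.sqrt(total_var)  # unit-variance targets
    c_skip = sigma_data ** 2 / total_var
    c_noise = 0.25 * math.log(sigma)                # empirical transformation
    return c_in, c_out, c_skip, c_noise


def network_target(clean, noisy, sigma):
    """Target for F_theta: (x0 - c_skip * x_tau) / c_out, elementwise."""
    _, c_out, c_skip, _ = edm_coefficients(sigma)
    return [(x0 - c_skip * xt) / c_out for x0, xt in zip(clean, noisy)]


def denoise(network_output, noisy, sigma):
    """Weighted sum D = c_skip * x_tau + c_out * F, elementwise."""
    _, c_out, c_skip, _ = edm_coefficients(sigma)
    return [c_skip * xt + c_out * f for f, xt in zip(network_output, noisy)]


# Toy values: a perfect network output reproduces the clean observation.
clean = [0.2, -0.4, 0.1]
noisy = [1.0, 0.3, -0.7]
sigma = 1.5
recovered = denoise(network_target(clean, noisy, sigma), noisy, sigma)
print(recovered)
```

Note how `c_skip` approaches 1 at low noise levels, so the network only has to predict a small correction, and approaches 0 at high noise levels, where the network predicts the clean observation almost directly.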

The study uses a standard 2D U-Net for the vector field and keeps a buffer of the past L observations and actions to condition the model. The past observations are concatenated channel-wise with the next noisy observation, and the actions are fed into the U-Net's residual blocks through adaptive group normalization layers.

As discussed in Section 2.3 and Appendix A of the paper, there are many possible sampling methods for generating the next observation from a trained diffusion model. Although the released code base supports several sampling schemes, the study found that Euler's method is effective without incurring the additional NFE (number of function evaluations) required by higher-order samplers or the unnecessary complexity of stochastic sampling.
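The following is a minimal sketch of deterministic Euler sampling. It assumes the parameterization of Karras et al. (2022) in which the noise level itself serves as the time variable, so the sampler integrates dx/dσ = (x − D(x, σ)) / σ. The `toy_denoiser`, the `TARGET_FRAME` values, and the noise schedule are hypothetical stand-ins for the trained, conditioned U-Net and its settings. The point is only to show that each Euler step costs one function evaluation.

```python
import random


def euler_sample(denoiser, x_init, sigmas):
    """Integrate dx/dsigma = (x - D(x, sigma)) / sigma with Euler steps.

    `sigmas` is a decreasing list of noise levels ending at 0.0.
    Each step calls the denoiser once, so NFE == len(sigmas) - 1.
    """
    x = list(x_init)
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = denoiser(x, sigma)
        slope = [(xi - di) / sigma for xi, di in zip(x, denoised)]
        x = [xi + (sigma_next - sigma) * si for xi, si in zip(x, slope)]
    return x


# Hypothetical stand-in for the trained network: it always predicts the
# same clean frame, which is the ideal denoiser for a one-point dataset.
TARGET_FRAME = [0.25, -0.5, 0.75]
calls = {"nfe": 0}


def toy_denoiser(x, sigma):
    calls["nfe"] += 1
    return list(TARGET_FRAME)


rng = random.Random(0)
sigmas = [5.0, 1.0, 0.2, 0.0]  # hypothetical schedule with three steps
x_start = [sigmas[0] * rng.gauss(0.0, 1.0) for _ in TARGET_FRAME]
next_frame = euler_sample(toy_denoiser, x_start, sigmas)
print(next_frame, calls["nfe"])
```

A second-order sampler such as Heun's method would call the denoiser twice on most steps, which is the extra cost the study avoids.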

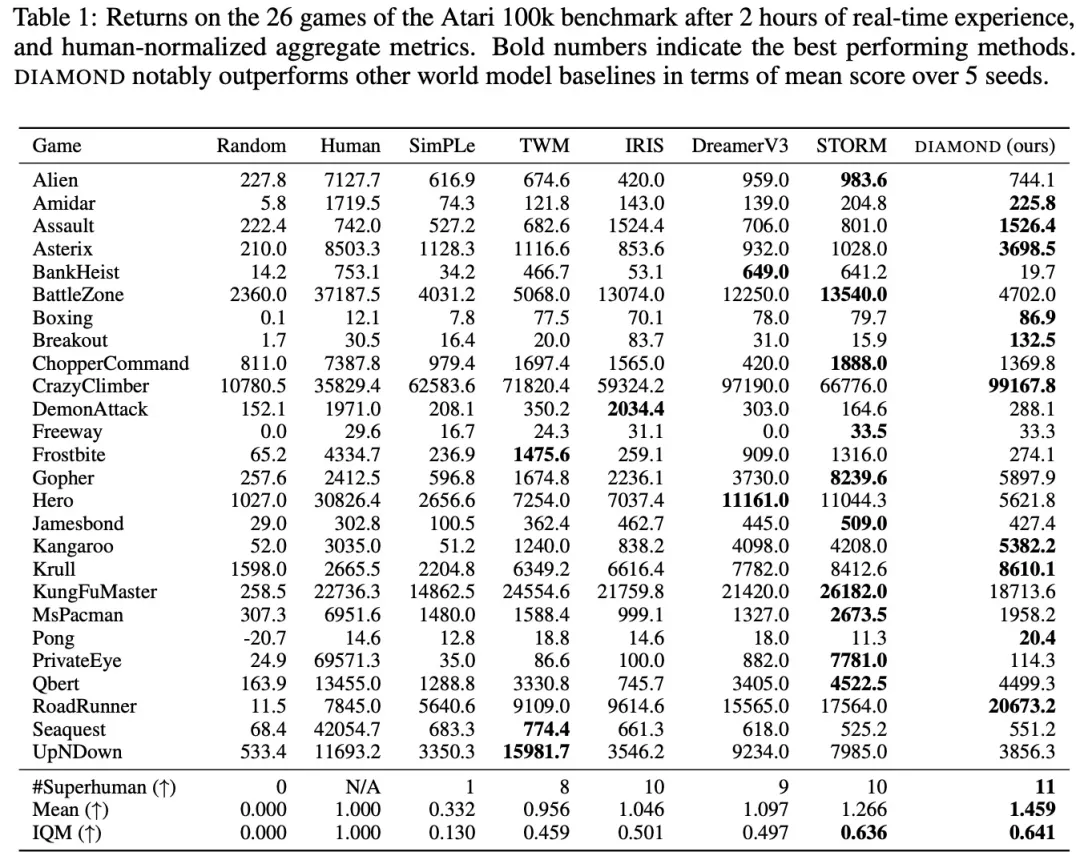

To evaluate DIAMOND thoroughly, the study used the well-established Atari 100k benchmark, which comprises 26 games for testing a broad range of agent capabilities. For each game, the agent is allowed only 100k actions in the environment, roughly equivalent to 2 hours of human gameplay, to learn the game before being evaluated. For reference, an unconstrained Atari agent is typically trained for 50 million steps, a 500-fold increase in experience. The researchers trained DIAMOND from scratch on each game with 5 random seeds. Each run used approximately 12 GB of VRAM and took approximately 2.9 days on a single Nvidia RTX 4090 (1.03 GPU years in total).
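The figures in this paragraph can be checked with a few lines of arithmetic. The frame skip of 4 and the 60 frames per second used for the gameplay estimate are common Atari conventions assumed here; they are not stated in the text.

```python
GAMES = 26
SEEDS_PER_GAME = 5
DAYS_PER_RUN = 2.9

total_runs = GAMES * SEEDS_PER_GAME
gpu_years = total_runs * DAYS_PER_RUN / 365

ACTIONS_100K = 100_000
UNCONSTRAINED_STEPS = 50_000_000
experience_ratio = UNCONSTRAINED_STEPS / ACTIONS_100K

# Assumptions (not from the text): each action is repeated for 4 frames,
# and the console renders 60 frames per second.
FRAME_SKIP = 4
FRAMES_PER_SECOND = 60
human_hours = ACTIONS_100K * FRAME_SKIP / FRAMES_PER_SECOND / 3600

print(total_runs, round(gpu_years, 2), experience_ratio, round(human_hours, 2))
```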

Table 1 compares the scores of agents trained in world models:

To study the stability of the diffusion variants, the study analyzed imagined trajectories generated autoregressively, as shown in Figure 3 below:

The study found that some situations require an iterative solver to drive the sampling process toward a specific mode, as in the game Boxing shown in Figure 4:

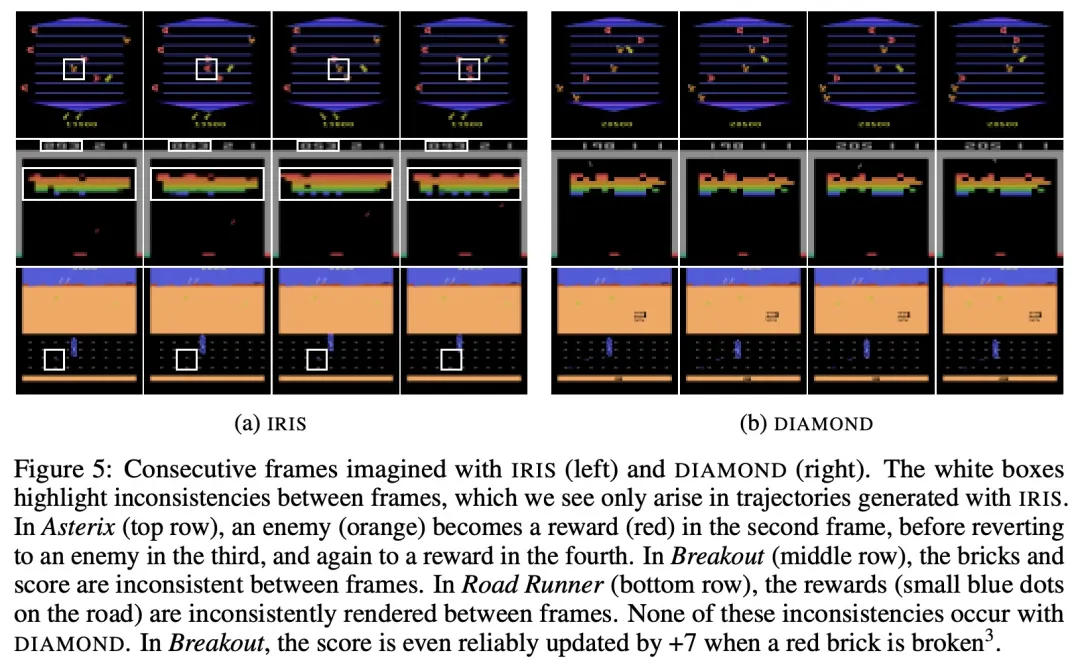

As shown in Figure 5, compared with the trajectories imagined by IRIS, the trajectories imagined by DIAMOND generally have higher visual quality and are more consistent with the real environment.

Interested readers can read the original text of the paper to learn more about the research content.
