Editor | Ziluo

Interactions between proteins, drugs and other biomolecules play a vital role in many biological processes. Understanding these interactions is critical both for deciphering the underlying molecular mechanisms and for developing new therapeutic strategies. Proteins are among the most important molecules in cells, where they perform a wide range of functions. Drugs often regulate physiological processes by interacting with specific proteins, promoting or inhibiting particular molecular signaling pathways. However, current multi-scale computational methods often rely too heavily on a single scale and underfit the others. This may stem from the imbalanced nature of multi-scale learning and its inherent greediness.

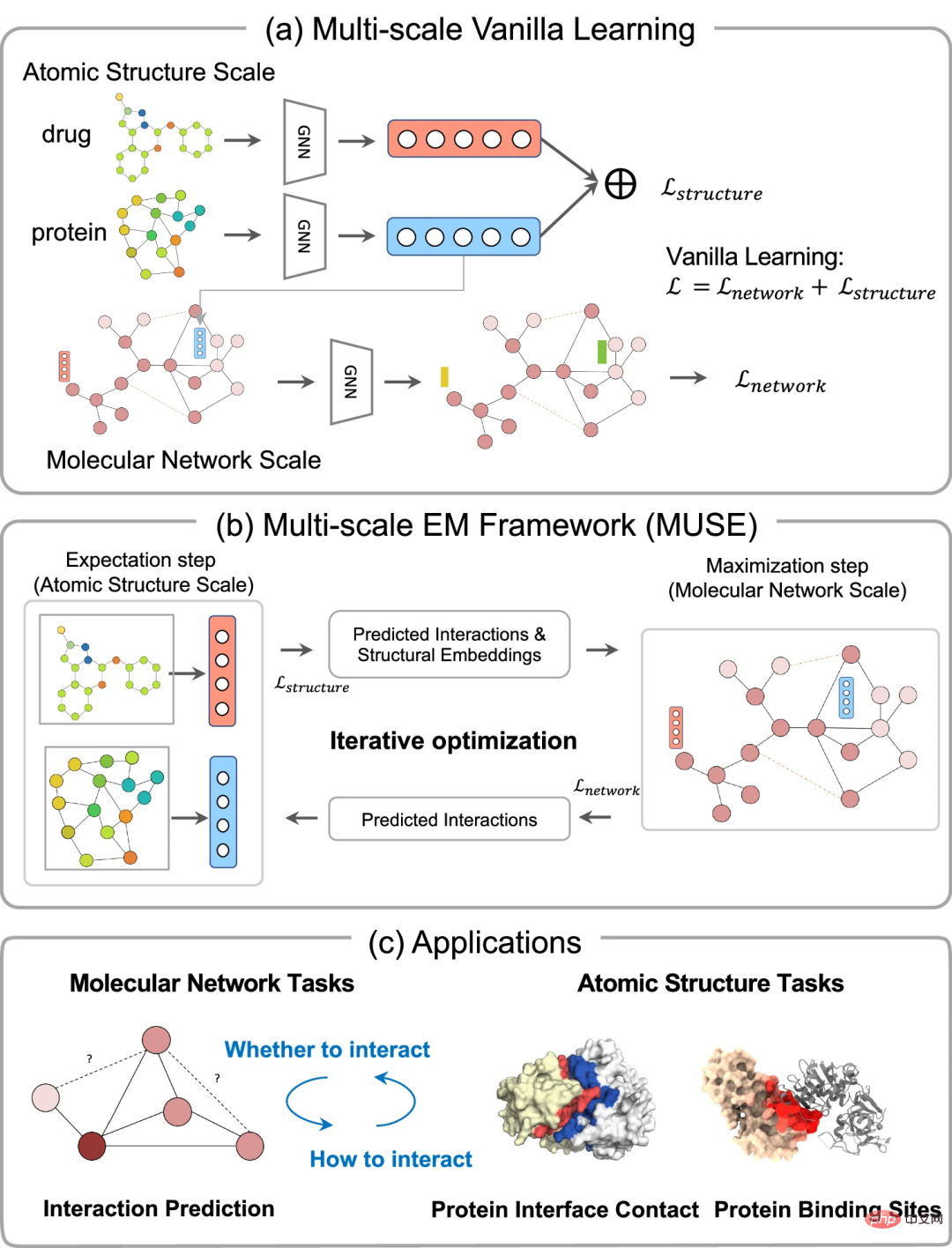

To alleviate this optimization imbalance, researchers from Sun Yat-sen University and Shanghai Jiao Tong University proposed MUSE, a multi-scale representation learning framework based on variational expectation maximization that can effectively integrate multi-scale information for learning. Through mutual supervision and iterative optimization, the strategy fuses information between the atomic structure scale and the molecular network scale, allowing more information to be transferred and learned across scales.

MUSE not only outperforms current state-of-the-art models on molecular interaction tasks (protein-protein, drug-protein, and drug-drug), but also outperforms state-of-the-art models on protein interface prediction at the atomic structure scale. More importantly, the multi-scale learning framework can be extended to computational drug discovery at other scales.

The study, titled "A variational expectation-maximization framework for balanced multi-scale learning of protein and drug interactions", was published in "Nature Communications" on May 25.

Paper link:

Predicting these interactions purely from structure is one of the most important challenges in structural biology. Current computational methods mostly predict interactions based on molecular networks or structural information and do not integrate them into a unified multiscale framework.

Some multi-view learning methods attempt to fuse multi-scale information, and an intuitive way to learn multi-scale representations is to combine molecular graphs with interaction networks and optimize them jointly. However, because multi-scale learning is imbalanced and inherently greedy, such models often rely heavily on a single scale. As a result, they fail to make effective use of the information available at all scales, and they generalize poorly.

In addition, an effective multi-scale framework must not only capture the rich information within each scale but also preserve the latent relationships between scales.

Learning multi-scale information of proteins and drugs with MUSE

MUSE is a multi-scale learning method that combines molecular structure modeling with protein-drug interaction network learning through a variational expectation-maximization (EM) framework. The framework alternately optimizes two modules, the expectation step (E-step) and the maximization step (M-step), over multiple iterations.
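
As background, the generic variational EM setup that frameworks of this kind build on can be written as follows. This is the textbook form in our own notation, not necessarily MUSE's exact objective: treat the known interactions $y_L$ as observed, the unknown ones $y_U$ as latent, and $x$ as the inputs (structures and network), with a model $p_\theta$ and a variational distribution $q_\phi$. Jensen's inequality then gives the evidence lower bound

$$
\log p_\theta(y_L \mid x) \;\ge\; \mathbb{E}_{q_\phi(y_U \mid x)}\big[\log p_\theta(y_L, y_U \mid x) - \log q_\phi(y_U \mid x)\big]
$$

The E-step updates $q_\phi$ with $\theta$ fixed, which tightens the bound by pulling $q_\phi(y_U \mid x)$ toward the posterior $p_\theta(y_U \mid y_L, x)$; the M-step updates $\theta$ to maximize $\mathbb{E}_{q_\phi}[\log p_\theta(y_L, y_U \mid x)]$ with $q_\phi$ fixed.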

In the E-step, MUSE uses the structural information of each biomolecule to learn effective structural representations, which are then used for training in the M-step. The E-step takes as input protein and drug pairs together with their atomic-level structural information, and is trained on known interactions augmented with interactions predicted by the M-step. The M-step takes as input the molecular-level interaction network, the structural embeddings, and the interactions predicted in the E-step, and outputs predicted interactions. Iterative optimization between the E-step and the M-step lets the model capture molecular structure and network information interactively, while allowing the two scales to learn at different rates.
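
To make the E-step/M-step alternation described above concrete, here is a deliberately tiny sketch of a mutual-supervision loop. It is not the MUSE implementation: the neural encoders are replaced by pure-Python logistic regressions, the "structural embedding" passed to the M-step is reduced to the E-step's predicted probability, pseudo-labels are hard 0/1 thresholds, and every name (`train_logreg`, `mutual_supervision_em`, the feature dictionaries) is hypothetical.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(xs, ys, epochs=200, lr=0.1):
    """Fit a logistic regression by per-sample gradient descent and return a predictor."""
    w = [0.0] * len(xs[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            g = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return lambda x: sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def mutual_supervision_em(struct_feats, net_feats, labels, n_iters=3, threshold=0.5):
    """Toy alternation between a structure-scale model (E-step) and a network-scale model (M-step).

    struct_feats / net_feats: hypothetical dicts mapping each candidate pair to a feature list.
    labels: known interactions (pair -> 0 or 1); all other pairs are treated as unlabeled.
    """
    pairs = sorted(struct_feats)
    m_pseudo = {}  # hard pseudo-labels produced by the M-step for unlabeled pairs
    for _ in range(n_iters):
        # E-step: train the structure model on known labels plus M-step pseudo-labels.
        targets = {p: labels.get(p, m_pseudo.get(p)) for p in pairs}
        train = [p for p in pairs if targets[p] is not None]
        e_model = train_logreg([struct_feats[p] for p in train], [targets[p] for p in train])
        e_probs = {p: e_model(struct_feats[p]) for p in pairs}

        # M-step: the network model sees network features plus the E-step output,
        # and is supervised by known labels plus E-step pseudo-labels.
        m_inputs = {p: net_feats[p] + [e_probs[p]] for p in pairs}
        targets = {p: labels[p] if p in labels else int(e_probs[p] >= threshold) for p in pairs}
        m_model = train_logreg([m_inputs[p] for p in pairs], [targets[p] for p in pairs])
        m_probs = {p: m_model(m_inputs[p]) for p in pairs}
        m_pseudo = {p: int(m_probs[p] >= threshold) for p in pairs if p not in labels}
    return e_probs, m_probs


if __name__ == "__main__":
    # Eight hypothetical candidate pairs; 0, 1, 4 and 5 truly interact.
    positives = {0, 1, 4, 5}
    struct = {p: [1.0] if p in positives else [-1.0] for p in range(8)}
    net = {p: [1.0] if p in positives else [-1.0] for p in range(8)}
    known = {0: 1, 1: 1, 2: 0, 3: 0}  # pairs 4-7 are unlabeled
    _, m_probs = mutual_supervision_em(struct, net, known)
    print({p: int(m_probs[p] >= 0.5) for p in range(4, 8)})  # {4: 1, 5: 1, 6: 0, 7: 0}
```

Hard pseudo-labels keep the sketch short; a closer analogue of variational EM would sample pseudo-labels from, or weight them by, the other model's predicted probabilities.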

Mutual supervision ensures that the model at each scale learns appropriately, so that it can exploit the useful information available at every scale. The researchers demonstrated the framework on multiple multi-scale interaction tasks involving proteins and drugs, and their analysis shows that MUSE alleviates the imbalance in multi-scale learning and effectively integrates hierarchical and complementary information from different scales.

Using atomic structure information to improve predictions at the molecular network scale

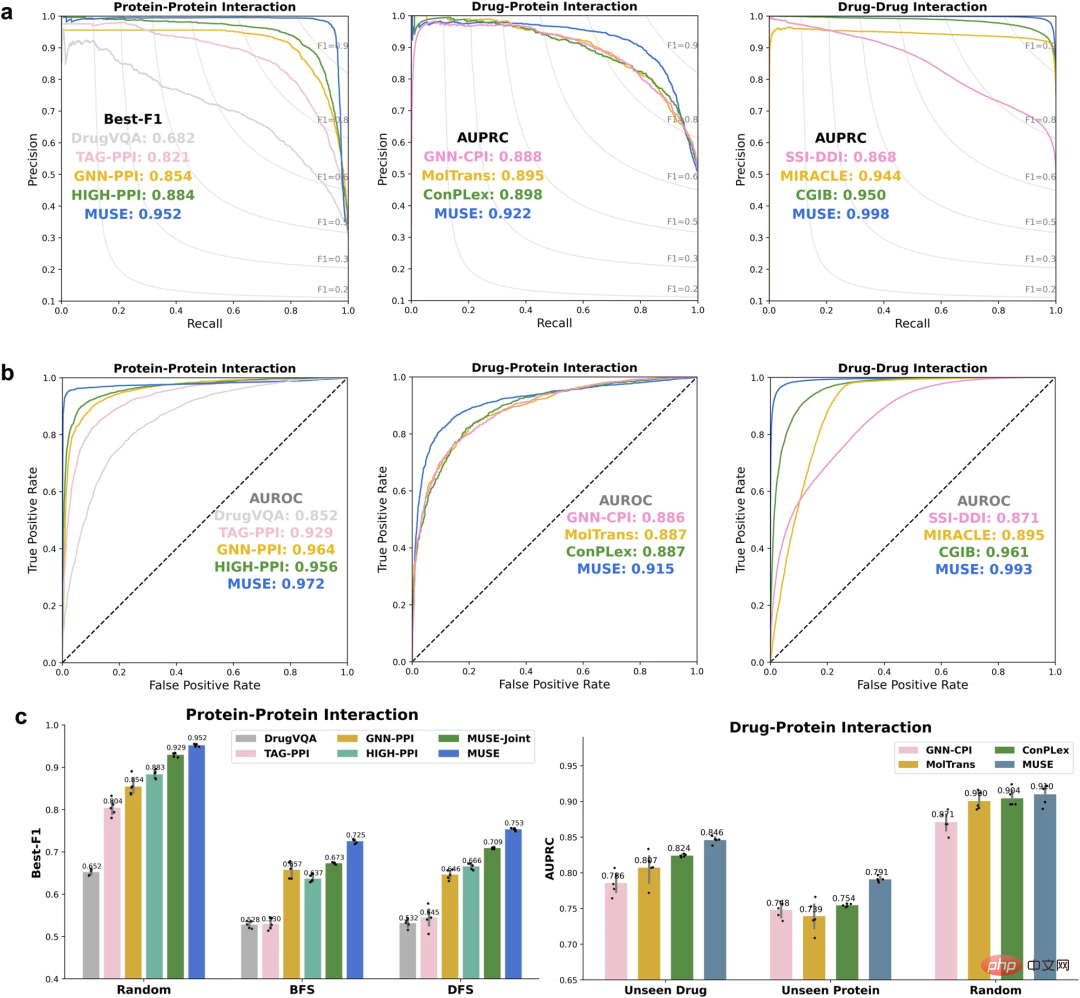

To evaluate their approach, the researchers first used MUSE to integrate atomic structure information to improve molecular network scale predictions. MUSE achieves state-of-the-art performance on three multi-scale interaction prediction tasks: protein-protein interaction (PPI), drug-protein interaction (DPI), and drug-drug interaction (DDI).

Improving atomic structure-scale predictions from the molecular network scale

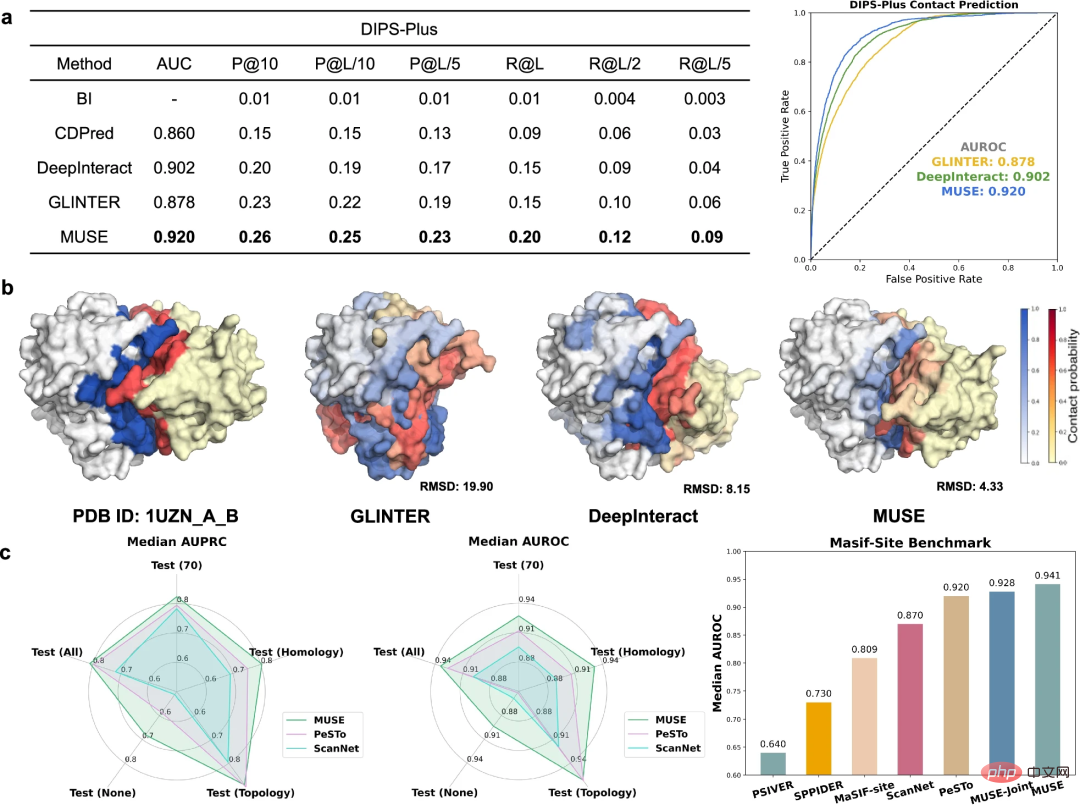

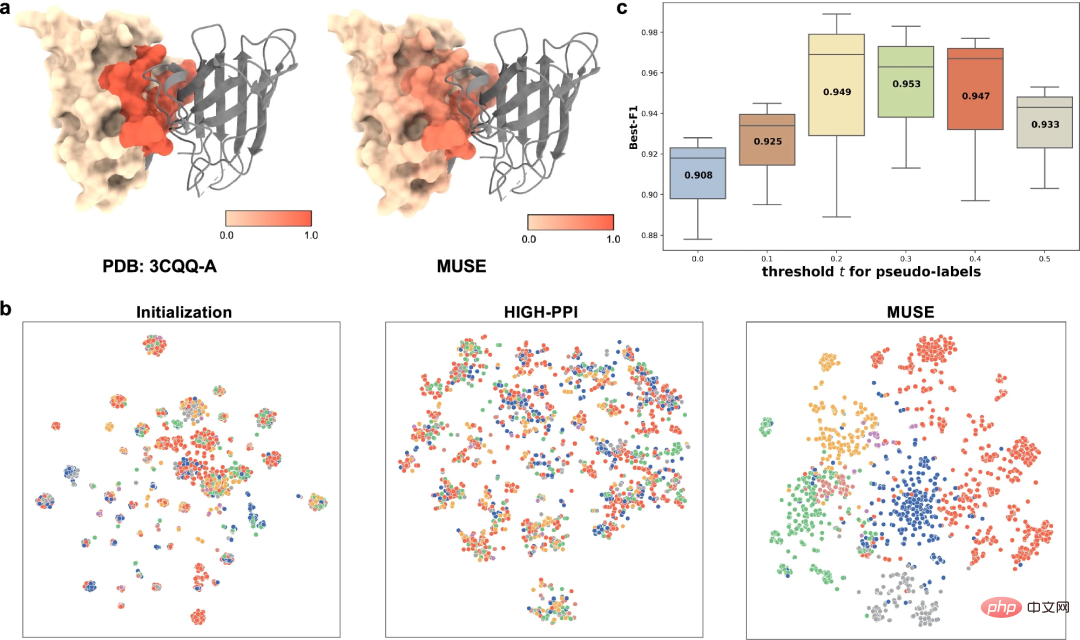

Beyond using atomic structure information to improve molecular network-scale predictions, the researchers went a step further and investigated MUSE's ability to learn and predict structural properties at the atomic structure scale, including interface contacts and binding sites relevant to PPIs.

To evaluate predictions of protein interchain contacts, MUSE was compared to state-of-the-art methods on the DIPS-Plus benchmark. MUSE consistently outperforms all other methods, validating its effectiveness and adaptability in atomic structure prediction.
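
The article does not state which metric was used on DIPS-Plus. A common convention for interchain contact prediction, assumed here rather than taken from the paper, is top-k precision: rank every residue pair across the two chains by predicted contact probability and report the fraction of the k highest-ranked pairs that are true contacts, with k often set to a fixed number or to a fraction of chain length L (for example L/10). The function names and input format below are hypothetical.

```python
def top_k_precision(contact_probs, true_contacts, k):
    """Fraction of the k highest-scoring residue pairs that are true interchain contacts.

    contact_probs: dict mapping (i, j) -> predicted contact probability, where i indexes
                   residues of chain A and j residues of chain B (hypothetical format).
    true_contacts: set of (i, j) pairs observed to be in contact.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    ranked = sorted(contact_probs, key=contact_probs.get, reverse=True)[:k]
    return sum(pair in true_contacts for pair in ranked) / k


def chain_contact_probs(prob_matrix):
    """Flatten an L_A x L_B probability matrix (list of rows) into a pair -> probability dict."""
    return {(i, j): p for i, row in enumerate(prob_matrix) for j, p in enumerate(row)}


if __name__ == "__main__":
    probs = chain_contact_probs([[0.9, 0.2], [0.8, 0.7]])
    truth = {(0, 0), (1, 1)}
    print(top_k_precision(probs, truth, 2))  # 0.5
```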

The researchers further evaluated MUSE on predicting whether individual residues are directly involved in protein-protein interactions. The results demonstrate that molecular network-scale learning in MUSE can provide valuable insights for atomic structure-scale predictions.

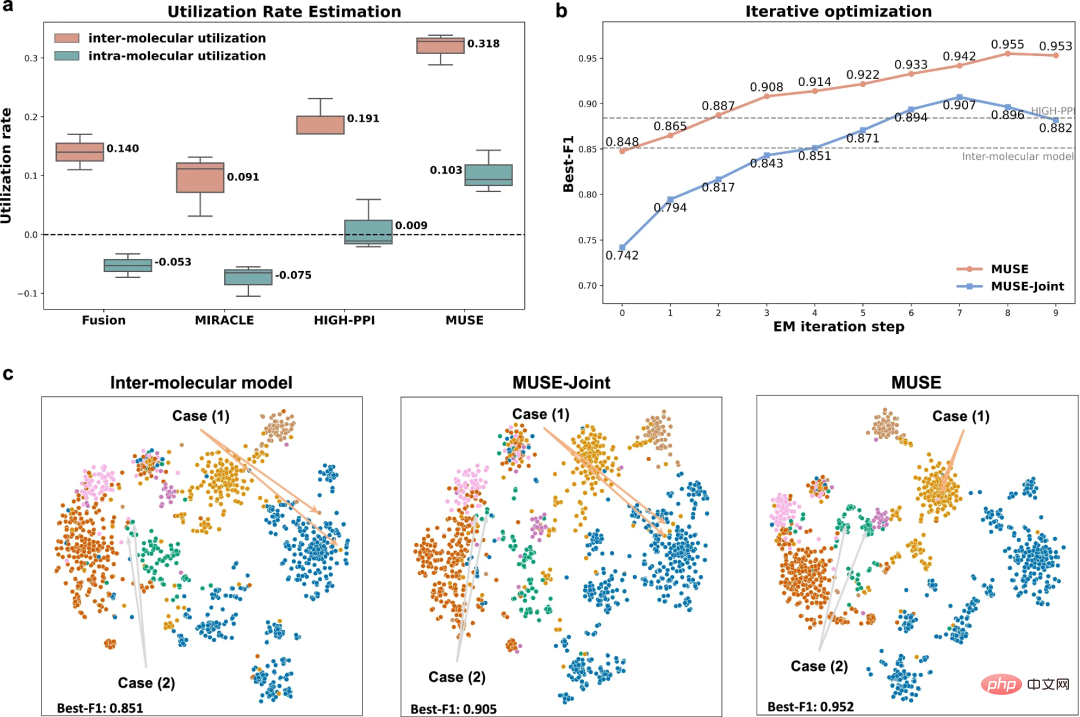

Alleviating the imbalance characteristics of multi-scale learning through iterative optimization

To explore why MUSE achieves superior performance in multi-scale representation learning, the researchers focused on the imbalance of multi-scale learning and analyzed MUSE's learning behavior in terms of how balanced it is across scales.

The results show that MUSE effectively alleviates imbalance and greedy learning in multi-scale learning, ensuring that information at different scales is fully used during training. In addition, a utilization-rate analysis allowed the researchers to pin down what the model actually learned, and showed that using MUSE to balance learning across scales can improve generalization.
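
The article does not define the utilization rate. One definition from the multi-modal learning literature on greedy learning, adapted here to scales purely as an assumption, is the conditional utilization rate: the relative accuracy gained by adding one scale on top of the other, for example u(net | struct) = (A_both - A_struct_only) / A_both. The difference between the two directions then gives a signed imbalance score. The function names and all accuracies below are hypothetical, for illustration only.

```python
def conditional_utilization(acc_both, acc_without_scale):
    """Relative accuracy gained by adding a scale on top of the remaining one (assumed metric)."""
    if acc_both <= 0:
        raise ValueError("acc_both must be positive")
    return (acc_both - acc_without_scale) / acc_both


def utilization_imbalance(acc_both, acc_struct_only, acc_net_only):
    """Positive values mean the model leans on the network scale; negative, on the structure scale."""
    u_net_given_struct = conditional_utilization(acc_both, acc_struct_only)
    u_struct_given_net = conditional_utilization(acc_both, acc_net_only)
    return u_net_given_struct - u_struct_given_net


if __name__ == "__main__":
    # Made-up accuracies: a "greedy" model that barely uses the structure scale...
    print(round(utilization_imbalance(0.90, 0.60, 0.88), 3))  # 0.311
    # ...versus a model that draws on both scales more evenly.
    print(round(utilization_imbalance(0.90, 0.75, 0.76), 3))  # 0.011
```

A score near zero indicates that neither scale dominates, which is the behavior a balancing method aims for.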

Visualization and interpretation of learned multi-scale representations

To better understand the learned multi-scale representations, the researchers examined them from two perspectives: (1) MUSE's ability to capture the atomic structure information (i.e., structural motifs and embeddings) involved in PPIs, and (2) the mutual supervision between the learned atomic structure and molecular network representations.

In a binding-site prediction example (PDB ID: 3CQQ-A), MUSE identified the residues belonging to the binding site with 97.7% accuracy. This suggests that mutual supervision in MUSE helps the atomic structure-scale model learn key substructures relevant to interactions.
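
Residue-level accuracy of the kind quoted here presumably means the fraction of residues whose binding/non-binding label is predicted correctly. The sketch below computes it assuming per-residue probabilities and a 0.5 decision threshold; both are assumptions, since the article does not give the evaluation protocol, and `binding_site_metrics` is a hypothetical helper. Because binding residues are usually a minority of a chain, it also reports precision and recall on the binding class, which accuracy alone can hide.

```python
def binding_site_metrics(probs, labels, threshold=0.5):
    """Per-residue metrics for binding-site prediction.

    probs: predicted probability that each residue belongs to the binding site (hypothetical input).
    labels: 1 for binding-site residues, 0 otherwise, in the same order.
    """
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and the same length")
    preds = [int(p >= threshold) for p in probs]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    correct = sum(1 for p, y in zip(preds, labels) if p == y)
    return {
        "accuracy": correct / len(labels),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


if __name__ == "__main__":
    # Ten hypothetical residues, three of which form the binding site.
    probs = [0.9, 0.8, 0.3, 0.1, 0.2, 0.05, 0.6, 0.1, 0.0, 0.15]
    labels = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
    print(binding_site_metrics(probs, labels))
```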

Finally, the researchers conducted ablation studies to examine how pseudo-labels predicted at the atomic structure scale affect the molecular network scale.

Although MUSE demonstrates state-of-the-art performance on benchmarks, its ability to handle noisy and incomplete multi-scale data in downstream tasks can still be improved, for example by incorporating prior knowledge through knowledge graphs and explainable AI techniques. In addition, this conceptual multi-scale framework can be extended to computational drug discovery at other scales.
