How to solve a Redis cache avalanche

Cause of a cache avalanche:

Put simply, a cache avalanche happens when the existing cache entries have expired (or the data was never loaded into the cache) and the new entries have not been written yet. During this window, every request that would normally be served from the cache (normally read from Redis, as shown below) goes to the database instead. This puts heavy pressure on the database's CPU and memory, and in severe cases it can take the database down and bring the whole system with it.
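To make the mechanism concrete, here is a minimal Python sketch of a plain cache-aside read path. It assumes an in-process `TTLCache` class standing in for Redis and a hypothetical `query_db` function standing in for the database; neither comes from the original article. Because every key is written with the same TTL, all of them expire at the same moment, and the next round of reads all reaches the database.

```python
import time

class TTLCache:
    """In-process stand-in for Redis: maps key -> (value, expires_at)."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._clock = clock

    def get(self, key):
        entry = self._data.get(key)
        if entry is None or entry[1] <= self._clock():
            return None  # missing or expired
        return entry[0]

    def set(self, key, value, ttl):
        self._data[key] = (value, self._clock() + ttl)

db_calls = 0

def query_db(key):
    """Hypothetical database query; counts how often the database is hit."""
    global db_calls
    db_calls += 1
    return f"row-{key}"

def get_cache_aside(cache, key, ttl=60):
    value = cache.get(key)
    if value is None:              # miss: fall through to the database
        value = query_db(key)
        cache.set(key, value, ttl)
    return value

# Fake clock so the example is deterministic.
now = [0.0]
cache = TTLCache(clock=lambda: now[0])
for k in range(1000):
    get_cache_aside(cache, k)      # warm-up: every key gets the same 60 s TTL
db_calls = 0
now[0] = 61.0                      # all 1000 entries expire at the same moment
for k in range(1000):
    get_cache_aside(cache, k)
print(db_calls)  # 1000: every request went to the database
```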

The basic solutions are as follows:

First, most system designers consider using locks or queues so that a large number of threads cannot read from and write to the database at the same time. This keeps the database from being overloaded when the cache fails, but while it relieves the pressure on the database to some extent, it also reduces the system's throughput.

Second, analyze user behavior and try to spread cache expiration times evenly.

Third, in case a cache server goes down, consider a primary/backup setup, such as Redis primary and backup. However, double caching involves update transactions, and an update may read dirty data, which needs to be handled.

Solutions to the Redis avalanche effect:

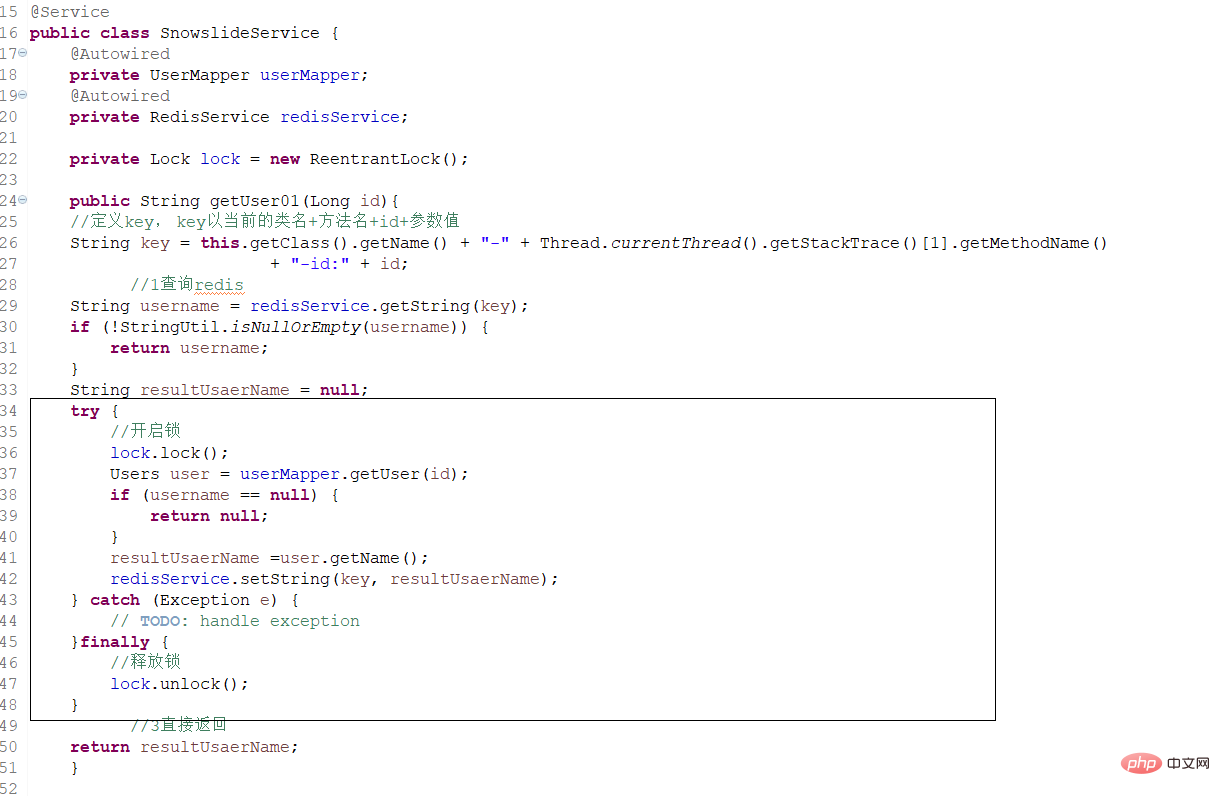

1. Distributed locks (or local locks for a stand-alone deployment)

2. Message middleware method

3. First-level and second-level cache (Redis + Ehcache)

4. Evenly distributed Redis key expiration times

Explanation:

1. When a large number of requests suddenly hit the database server, restrict them. With the mechanism above, only one thread (request) performs the operation at a time, and the others queue and wait (a distributed lock in a cluster, a local lock on a stand-alone server). The cost is reduced throughput and lower efficiency.

Add a lock!

This ensures that only one thread can enter, so in practice only one request performs the query.
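A minimal Python sketch of the lock approach, assuming a single process: a `threading.Lock` plays the role of the stand-alone local lock, a dict stands in for Redis, and `query_db` is a hypothetical database call. The second cache check inside the lock (double-checked locking) is what lets only the first waiting thread query the database. In a cluster, the local lock would have to be replaced with a distributed lock, for example one built on the Redis `SET` command with the `NX` and `PX` options.

```python
import threading
import time

cache = {}                        # stand-in for Redis
rebuild_lock = threading.Lock()   # local lock (stand-alone deployment)
db_calls = 0

def query_db(key):
    """Hypothetical slow database query."""
    global db_calls
    db_calls += 1
    time.sleep(0.01)
    return f"row-{key}"

def get_with_lock(key):
    value = cache.get(key)
    if value is not None:
        return value                  # fast path: cache hit, no locking
    with rebuild_lock:                # only one thread at a time may rebuild
        value = cache.get(key)        # re-check: a previous holder may have filled it
        if value is None:
            value = query_db(key)
            cache[key] = value
    return value

# 50 concurrent requests for the same missing key.
threads = [threading.Thread(target=get_with_lock, args=("user:1",)) for _ in range(50)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(db_calls)  # 1
```

The other threads block on the lock instead of querying the database, which is the throughput cost described above. A single global lock also serializes rebuilds of unrelated keys; a per-key lock would reduce that contention.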

You can also use a rate-limiting strategy here.
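The article does not name a rate-limiting algorithm; one common choice is a token bucket. The Python sketch below is an assumption, not the author's method: `TokenBucket` and `query_with_limit` are hypothetical names, and requests that are refused get a fallback value (for example a default or degraded response) instead of reaching the database.

```python
import time

class TokenBucket:
    """Admits about `rate` requests per second, with bursts of up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

def query_with_limit(bucket, key, query_db, fallback=None):
    """Only admitted requests reach the database; the rest get a fallback value."""
    if bucket.allow():
        return query_db(key)
    return fallback

now = [0.0]                                # fake clock for a deterministic demo
bucket = TokenBucket(rate=10, capacity=10, clock=lambda: now[0])
burst = [bucket.allow() for _ in range(100)]
print(sum(burst))                          # 10: only the burst capacity is admitted
now[0] = 0.5                               # half a second later
later = [bucket.allow() for _ in range(100)]
print(sum(later))                          # 5: refilled at 10 tokens per second
```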

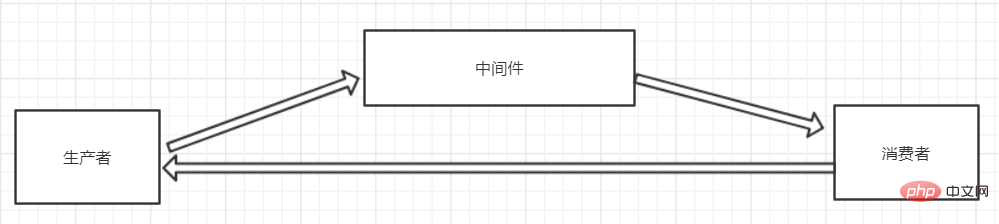

2. Use message middleware

This is the most reliable solution, because message middleware is designed to handle high concurrency.

If a large number of requests arrive while Redis has no value, the queries are routed through the message middleware, which uses MQ's asynchronous delivery to process them and synchronize the query results back.
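The article does not spell out the message flow, so the following Python sketch is one possible reading, not the author's design: cache misses are published to a queue instead of querying the database directly, and a single consumer drains the queue, queries the database, and writes the result back to the cache. `queue.Queue` stands in for the message middleware (a real deployment would use an actual message broker), a dict stands in for Redis, and `query_db` and `get_via_queue` are hypothetical.

```python
import queue
import threading

cache = {}                      # stand-in for Redis
rebuild_queue = queue.Queue()   # stand-in for the message middleware
db_calls = 0

def query_db(key):
    """Hypothetical database query."""
    global db_calls
    db_calls += 1
    return f"row-{key}"

def consumer():
    """Single consumer: processes rebuild messages one at a time."""
    while True:
        key = rebuild_queue.get()
        try:
            if key is None:         # sentinel: stop the worker
                return
            if key not in cache:    # duplicate messages for a rebuilt key are skipped
                cache[key] = query_db(key)
        finally:
            rebuild_queue.task_done()

def get_via_queue(key):
    value = cache.get(key)
    if value is None:
        rebuild_queue.put(key)      # publish the miss instead of querying the database
    return value                    # None: not cached yet; caller retries or degrades

worker = threading.Thread(target=consumer)
worker.start()
for _ in range(100):
    get_via_queue("product:42")     # a burst of misses becomes queued messages
rebuild_queue.join()                # wait until every message has been processed
rebuild_queue.put(None)
worker.join()
print(db_calls, get_via_queue("product:42"))  # 1 row-product:42
```

Because the consumer skips keys that are already cached, a burst of misses for the same key costs one database query, and the database load is set by how fast the consumer runs. The trade-off is that callers see `None` until the consumer catches up, so they need to retry or return a degraded response.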

3. Use a second-level cache. A1 is the original cache and A2 is a copy of it. When A1 fails, requests can read A2. Set a short expiration time for A1 and a long one for A2 (this point is supplementary).
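A minimal Python sketch of the A1/A2 idea, assuming in-process dicts for both levels, TTLs of 60 s and 3600 s, and a fake clock for testing; `TwoLevelCache` and `load_from_db` are hypothetical names. When A1 has expired but A2 has not, the copy is served without touching the database. A fuller version would also refresh A1 in the background at that point; that step is left out here.

```python
import time

class TwoLevelCache:
    """A1: primary cache with a short TTL. A2: copy of the same data with a long TTL."""

    def __init__(self, load, a1_ttl=60, a2_ttl=3600, clock=time.monotonic):
        self.load = load                  # called only when both levels miss
        self.a1, self.a2 = {}, {}         # key -> (value, expires_at)
        self.a1_ttl, self.a2_ttl = a1_ttl, a2_ttl
        self.clock = clock

    def _fresh(self, store, key):
        entry = store.get(key)
        if entry is not None and entry[1] > self.clock():
            return entry[0]
        return None

    def get(self, key):
        value = self._fresh(self.a1, key)
        if value is None:
            value = self._fresh(self.a2, key)   # A1 expired: try the long-lived copy
        if value is None:                       # both expired: go to the database
            value = self.load(key)
            now = self.clock()
            self.a1[key] = (value, now + self.a1_ttl)
            self.a2[key] = (value, now + self.a2_ttl)
        return value

loads = []

def load_from_db(key):
    """Hypothetical database query that records each call."""
    loads.append(key)
    return f"row-{key}"

now = [0.0]
cache = TwoLevelCache(load_from_db, clock=lambda: now[0])
cache.get("k")          # cold start: one database load fills A1 and A2
now[0] = 120.0          # A1 (60 s) has expired, A2 (3600 s) has not
cache.get("k")          # served from A2, no database load
print(len(loads))       # 1
```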

4. Set different expiration times for different keys so that cache expirations are spread out as evenly as possible.
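One simple way to spread expirations is to add a random offset to a base TTL. The Python sketch below assumes a base of one hour and up to ten minutes of jitter; both numbers are illustrative, and `ttl_with_jitter` is a hypothetical helper. With the redis-py client, the returned value would be passed as the `ex` argument of `set`, e.g. `r.set(key, value, ex=ttl)`.

```python
import random

def ttl_with_jitter(base_seconds, max_jitter_seconds, rng=random):
    """Base TTL plus a random offset in [0, max_jitter_seconds], so keys written
    together do not all expire at the same instant."""
    return base_seconds + rng.randint(0, max_jitter_seconds)

rng = random.Random(42)   # seeded only to make the demo repeatable
ttls = [ttl_with_jitter(3600, 600, rng) for _ in range(1000)]
print(min(ttls) >= 3600, max(ttls) <= 4200)  # True True
print(len(set(ttls)) > 100)                  # True: expirations are spread out
```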

The above is the detailed content of How to solve the avalanche caused by redis. For more information, please follow other related articles on the PHP Chinese website!